Best Way to Learn Data Engineering in 2025

Last updated: Mar 03, 2025

Have you ever wondered what it takes to become a data engineer, or how to build the skills behind data-driven decision-making?

The world of data engineering is vast and captivating, but with so much information available, finding the best way to learn data engineering and master this field can be overwhelming.

To make it easier, we have laid out a guide to the most effective ways to become a data engineer in this ever-growing technological world. So, let’s get started.

Table of contents

- What is Data Engineering?

- Best Resources to Learn Data Engineering

- Best Online Courses for Data Engineering

- Best Certifications for Data Engineers

- Best YouTube Channels for Data Engineers

- Best Books to Build Strong Foundations in Data Engineering

- A Guide to Learn Data Engineering

- Build a Strong Programming Foundation

- Gain Proficiency in Data Management and Databases

- Master Data Warehousing Solutions

- Understand ETL (Extract, Transform, Load) Processes

- Explore Big Data Technologies

- Conclusion

- FAQs

- What is data engineering, and what does a data engineer do?

- What skills are essential for a career in data engineering?

- How does data engineering differ from data science?

- What are ETL and ELT pipelines, and how do they differ?

- What are some common challenges faced in data engineering?

What is Data Engineering?

Data engineering may sound complicated, and many people assume it is when they first hear the term. In practice, the core idea is simple.

Data engineering is like organizing and storing information. Data can be anything from numbers and words to pictures and videos. Data engineers are like clever organizers who build special places (like virtual shelves and cabinets) to keep all the data safe and well-arranged.

They also make sure that when someone wants to use a specific piece of data, they can find it quickly and it’s in a form that’s easy to work with.

Best Resources to Learn Data Engineering

To become a skilled data engineer, it’s essential to make sure that you are well-equipped with both theoretical and practical knowledge.

Below is a list of books, courses, YouTube channels, and certifications to guide you:

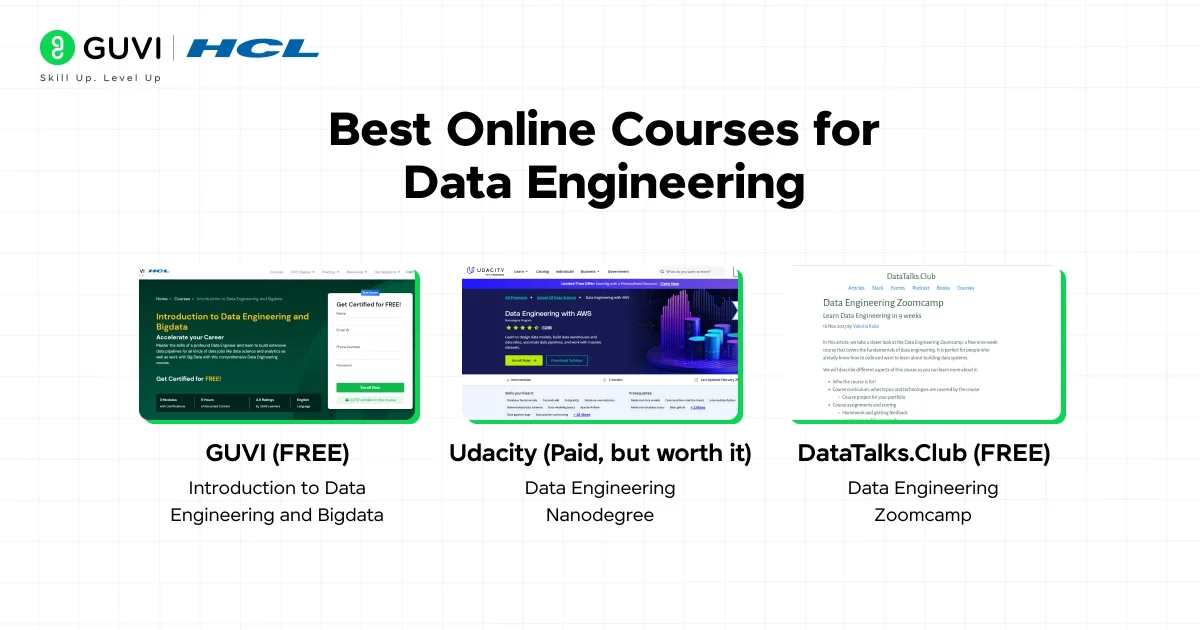

1. Best Online Courses for Data Engineering

Online courses are one of the best ways to learn data engineering because they provide structured learning, hands-on projects, and real-world applications. Here’s a list of the best online courses for data engineering.

a) Introduction to Data Engineering and Bigdata – GUVI (FREE)

GUVI’s Data Engineering course is free and suits beginners with little to no prior experience in data engineering. It provides a step-by-step introduction to databases, SQL, ETL pipelines, and cloud technologies.

What You’ll Learn:

- Basics of Data Engineering – Understanding SQL, data warehousing, and data modeling.

- Basic Python programming skills

- Introduction to Apache Spark

Who Should Take This Course?

- Absolute beginners in data engineering.

- Software developers or analysts transitioning to data engineering.

- Anyone looking for a structured & free certification.

b) Data Engineering Nanodegree – Udacity (Paid, but worth it)

This is one of the most comprehensive courses available, covering SQL, Python, Apache Spark, and Airflow. It’s designed for those who already have some programming experience and want to build real-world data pipelines.

What You’ll Learn:

- Data Modeling – How to design OLTP and OLAP databases.

- Cloud Data Warehouses – Using Amazon Redshift, S3, and AWS Glue.

- ETL Pipelines – Implementing batch and streaming ETL workflows.

Who Should Take This Course?

- Software developers looking to specialize in data engineering.

- Data analysts transitioning to data engineering roles.

- Anyone willing to invest in a project-based curriculum.

c) Data Engineering Zoomcamp – DataTalks.Club (FREE)

This free, open-source course is perfect for those who want hands-on experience with Docker, Terraform, Kafka, Airflow, and dbt.

What You’ll Learn:

- Data Orchestration with Apache Airflow.

- Real-Time Data Processing with Kafka & Spark.

- Infrastructure as Code (Terraform & Docker).

Who Should Take This Course?

- Experienced Python & SQL developers moving into data engineering.

- Aspiring cloud data engineers looking to work with AWS or GCP.

- Anyone who prefers open-source learning resources.

By taking the right course, you’ll gain the skills and hands-on experience needed to become a successful data engineer!

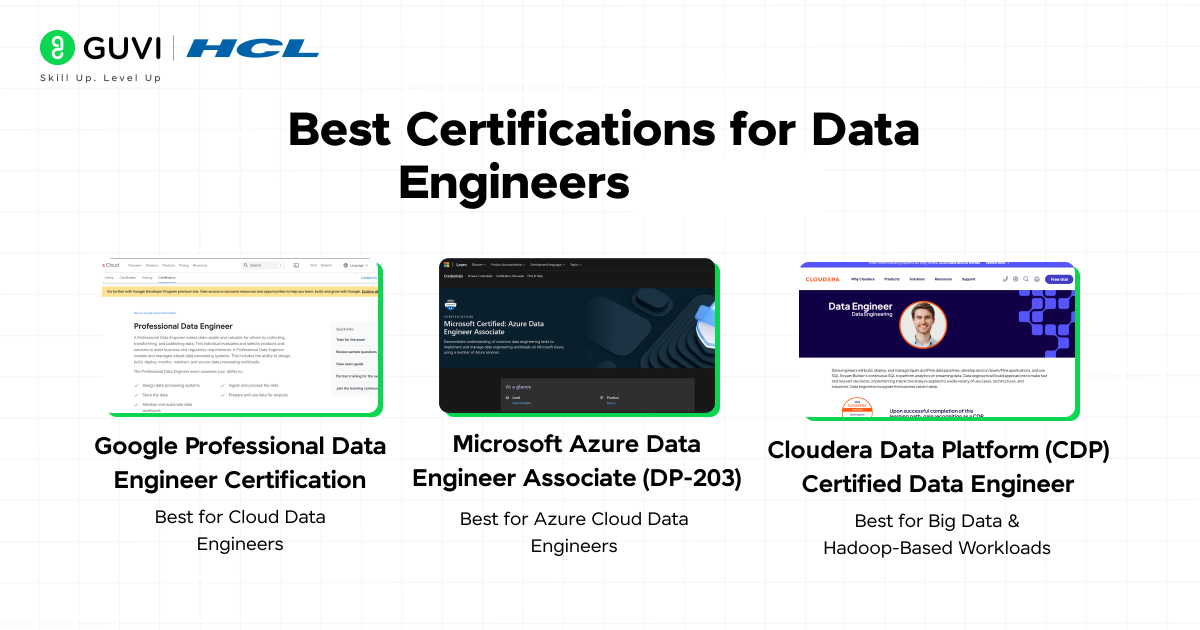

2. Best Certifications for Data Engineers

Certifications are an excellent way to validate your skills, boost your resume, and stand out in the job market as a data engineer.

Here’s a list of the best data engineering certifications, categorized based on cloud platforms, big data technologies, and general data engineering expertise.

a) Google Professional Data Engineer Certification (Best for Cloud Data Engineers)

The Google Professional Data Engineer certification is one of the most in-demand certifications for cloud-based data engineering roles. It covers BigQuery, Dataflow, Dataproc, Cloud Storage, and machine learning integrations, all of which are essential skills for working with data in Google Cloud.

What You’ll Learn:

- Google Cloud Big Data Services – BigQuery, Dataflow (Apache Beam), and Dataproc (Hadoop & Spark).

- Building Scalable Data Pipelines – Designing batch & real-time ETL pipelines in GCP.

- Machine Learning & Data Processing – Using TensorFlow & AI services with BigQuery.

- Security & Compliance – Managing IAM roles, data encryption, and access control.

Who Should Get This Certification?

- Aspiring cloud data engineers who want to work with Google Cloud.

- Big data professionals who need to manage large-scale data processing.

- Software engineers transitioning into data engineering.

b) Microsoft Azure Data Engineer Associate (DP-203) (Best for Azure Cloud Data Engineers)

The DP-203 certification is Microsoft’s top certification for data engineers, covering Azure Synapse Analytics, Data Factory, Databricks, and Cosmos DB.

What You’ll Learn:

- Azure Data Storage & Warehousing – Working with Azure Data Lake, Synapse Analytics & Cosmos DB.

- Data Pipelines in Azure – Using Azure Data Factory (ADF) for ETL workflows.

- Big Data Processing – Implementing batch & real-time data solutions with Databricks & Stream Analytics.

- Data Security & Governance – Managing access, encryption, and compliance in Azure.

Who Should Get This Certification?

- Data engineers working with Microsoft Azure.

- Cloud professionals managing big data workloads.

- ETL developers looking to expand their cloud expertise.

c) Cloudera Data Platform (CDP) Certified Data Engineer (Best for Big Data & Hadoop-Based Workloads)

This certification is ideal for big data professionals working with Hadoop, Spark, and large-scale data lake architectures.

What You’ll Learn:

- Hadoop Ecosystem – Working with HDFS, Hive, Impala, and Cloudera Manager.

- Apache Spark Processing – Writing PySpark & Scala-based big data jobs.

- Real-Time & Batch Processing – Implementing Spark Streaming & Flink-based solutions.

- Performance Optimization – Tuning big data jobs for efficiency & scalability.

Who Should Get This Certification?

- Big data engineers working with Hadoop & Spark.

- Companies using Cloudera as their primary data platform.

- Data engineers managing on-premise & hybrid cloud data lakes.

Certifications validate your skills, help with career advancement, and make you stand out to employers. Choose the one that best matches your career goals, preferred cloud platform, and level of expertise.

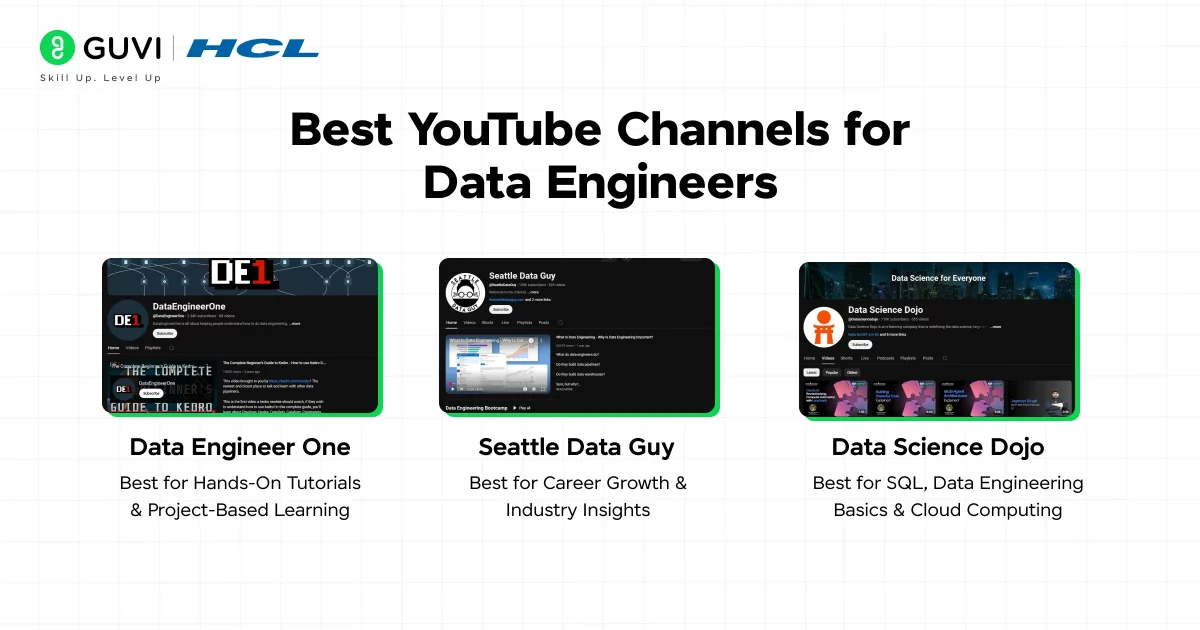

3. Best YouTube Channels for Data Engineers

YouTube is an amazing free resource for learning data engineering. It provides high-quality tutorials, real-world case studies, live coding sessions, and career advice from experienced professionals.

Here are the best YouTube channels for every aspiring and professional data engineer:

a) Data Engineer One – (Best for Hands-On Tutorials & Project-Based Learning)

Data Engineer One is a must-follow channel if you want to see real-world data engineering projects in action. It covers Apache Airflow, AWS, ETL pipelines, BigQuery, and Python for data engineering.

What You’ll Learn:

- How to Build Data Pipelines with Apache Airflow.

- Real-world ETL & ELT pipeline projects.

- Working with Cloud Data Warehouses like BigQuery & Redshift.

- Python for Data Engineering – Automating data tasks.

b) Seattle Data Guy – (Best for Career Growth & Industry Insights)

Seattle Data Guy (Ben Rogojan) shares insights into the real-life work of data engineers, career advice, and the latest trends in data architecture, big data, and cloud computing.

What You’ll Learn:

- How to Get Your First Data Engineering Job.

- Real-World Case Studies of Data Engineering Failures & Successes.

- Best Practices for Designing Scalable Data Pipelines.

- Comparisons of Tools Like Snowflake, BigQuery, Databricks, and AWS Glue.

c) Data Science Dojo – (Best for SQL, Data Engineering Basics & Cloud Computing)

This channel is great for learning SQL, data engineering fundamentals, and cloud computing with Google Cloud, AWS, and Azure.

What You’ll Learn:

- SQL Mastery – Joins, CTEs, window functions, query optimization.

- Data Engineering Fundamentals – Data modeling, ETL, and NoSQL databases.

- Cloud Data Engineering – Hands-on AWS Redshift, BigQuery, and Azure Data Factory tutorials.

- Python for Data Engineering – Working with Pandas, NumPy, and PySpark.

By following these channels, you’ll gain practical skills, industry knowledge, and career guidance, making you job-ready!

4. Best Books to Build Strong Foundations in Data Engineering

Books are one of the best ways to gain deep, structured knowledge in data engineering. Unlike online courses that focus on hands-on exercises, books explain the concepts in-depth, allowing you to build strong theoretical foundations. Here are some of the finest books that can help you understand data engineering better.

a) Fundamentals of Data Engineering – Joe Reis & Matt Housley

This book is often referred to as the ultimate starting point for anyone who wants to understand the entire data engineering ecosystem.

Unlike other books that focus only on specific tools or technologies, Fundamentals of Data Engineering teaches the principles, best practices, and evolving trends in the field.

What You’ll Learn:

- Modern Data Architecture – How to build scalable and efficient data platforms.

- ETL vs. ELT Pipelines – When to use which approach and why.

- Cloud vs. On-Prem Data Infrastructure – How cloud technologies are changing data engineering.

- Batch vs. Streaming Data Processing – Differences between Apache Spark and Kafka-based systems.

- DataOps & Data Governance – How to ensure high-quality data with automation.

Who Should Read It?

- Beginners who want a structured introduction to data engineering.

- Software developers & data analysts transitioning into data engineering.

- Experienced data engineers looking for an updated approach to modern data platforms.

b) Designing Data-Intensive Applications – Martin Kleppmann

If you want to go beyond just tools and frameworks of data engineering and understand how large-scale data systems work, this book is a must-read.

It teaches how distributed systems store, process, and analyze big data, making it one of the most recommended books for data engineers who want to deepen their understanding of databases and system design.

What You’ll Learn:

- How Databases Work – Learn about relational (SQL) and NoSQL databases in detail.

- Data Scalability Strategies – How to design systems that handle billions of records efficiently.

- Data Modeling & Storage – Learn about structured, semi-structured, and unstructured data storage.

- Replication, Partitioning, and Sharding – Techniques to ensure high availability and fault tolerance.

- Real-World Case Studies – Learn how big tech companies like Google, Facebook, and LinkedIn handle massive amounts of data.

Who Should Read It?

- Intermediate & advanced learners who already know Python and SQL.

- Software engineers & backend developers transitioning to data engineering.

- Data engineers looking to master system design for big data processing.

c) The Data Warehouse Toolkit – Ralph Kimball & Margy Ross

This book is widely regarded as a standard reference on data warehousing. If you want to work with data warehouses like Snowflake, Amazon Redshift, or Google BigQuery, it teaches the dimensional modeling concepts that carry over to those platforms.

What You’ll Learn:

- Star Schema & Dimensional Modeling – How to design databases for analytical workloads.

- Fact & Dimension Tables – The role of these tables in a well-structured data warehouse.

- Data Warehouse Architecture – Best practices for building scalable data warehouses.

- ETL Process Design – How to build efficient ETL workflows for business intelligence.

- Real-World Applications – How companies like Walmart and Amazon use data warehouses for analytics.

Who Should Read It?

- Beginners who want to understand how analytical databases work.

- Data engineers building data pipelines for business intelligence.

- BI & analytics professionals working with SQL-based reporting systems.

By reading these books, you will gain deep knowledge and be well-equipped to become a highly skilled data engineer.

A Guide to Learn Data Engineering

Learning data engineering can be a very rewarding journey, but it requires dedication, persistence, and a systematic approach. Here’s a step-by-step guide to learning data engineering:

1. Build a Strong Programming Foundation

Proficiency in programming is essential for data engineers. Focus on languages widely used in the industry:

- Python: Renowned for its simplicity and extensive libraries, Python is ideal for scripting and automation in data tasks.

- SQL: The standard language for managing and querying relational databases, SQL is indispensable for data manipulation.

Understanding data structures and algorithms will enhance your problem-solving skills and code optimization.
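To see how the two languages work together, here is a minimal sketch that uses Python’s built-in sqlite3 module to run a SQL aggregate. The table name, the function name top_customers, and the sample rows are all made up for illustration, and SQLite is only a convenient stand-in for a real database.

```python
import sqlite3


def top_customers(rows, limit=2):
    """Load (customer, amount) rows into an in-memory SQLite table and
    return the highest-spending customers using a SQL aggregate."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
    # Python handles the plumbing; SQL expresses the data manipulation.
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    result = conn.execute(
        "SELECT customer, SUM(amount) AS total "
        "FROM orders GROUP BY customer "
        "ORDER BY total DESC, customer LIMIT ?",
        (limit,),
    ).fetchall()
    conn.close()
    return result


# Hypothetical sample data, not taken from any real system.
sample_orders = [("asha", 120.0), ("ben", 40.0), ("asha", 30.0), ("chen", 75.5)]
print(top_customers(sample_orders))
```

The same split shows up in real pipelines: SQL describes what to compute, and Python decides when and where to run it.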

2. Gain Proficiency in Data Management and Databases

A deep understanding of how data is stored and retrieved is crucial:

- Relational Databases: Learn about MySQL, PostgreSQL, and Oracle. Mastery of SQL is essential for interacting with these databases.

- NoSQL Databases: Familiarize yourself with MongoDB (document store), Cassandra (wide-column store), and Redis (key-value store), which handle semi-structured or unstructured data and offer flexibility in storage.
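The difference between the two models is easier to see side by side. The sketch below stores the same user once in relational tables (using SQLite as a stand-in for MySQL or PostgreSQL) and once as a nested document, which is roughly how a document store would hold it. The table names, field names, and values are assumptions chosen for illustration, and a plain Python dict stands in for the NoSQL database.

```python
import json
import sqlite3

# Relational model: data is split into tables with a fixed schema
# and reassembled with a JOIN on a shared key.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE emails (user_id INTEGER REFERENCES users(id), email TEXT NOT NULL);
    INSERT INTO users VALUES (1, 'Asha');
    INSERT INTO emails VALUES (1, 'asha@example.com'), (1, 'asha@work.example');
""")
relational_rows = conn.execute(
    "SELECT u.name, e.email FROM users u "
    "JOIN emails e ON e.user_id = u.id ORDER BY e.email"
).fetchall()
conn.close()

# Document model: one self-contained record with nested fields.
# New fields (like 'preferences') can be added without a schema change.
user_document = {
    "_id": 1,
    "name": "Asha",
    "emails": ["asha@example.com", "asha@work.example"],
    "preferences": {"newsletter": True},
}
document_emails = sorted(user_document["emails"])

print(relational_rows)
print(json.dumps(user_document, indent=2))
```

Neither model is better in general. The relational version enforces structure and avoids duplication, while the document version keeps everything about one user in a single record.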

3. Master Data Warehousing Solutions

Data warehousing involves collecting and managing data from varied sources to provide meaningful business insights. Familiarize yourself with:

- Amazon Redshift: A fully managed data warehouse service in the cloud.

- Google BigQuery: A serverless, highly scalable, and cost-effective multi-cloud data warehouse.

- Snowflake: A cloud-based data warehousing platform known for its scalability and performance.
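Whichever platform you choose, warehouse tables are often organized as a star schema: a central fact table of events surrounded by dimension tables that describe them. Here is a small sketch of that idea, with SQLite standing in for a cloud warehouse. The table names (fact_sales, dim_product), the columns, and the figures are hypothetical.

```python
import sqlite3


def build_warehouse():
    """Create a tiny star schema: one fact table and one dimension table."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE dim_product (
            product_id INTEGER PRIMARY KEY,
            name TEXT,
            category TEXT
        );
        CREATE TABLE fact_sales (
            product_id INTEGER REFERENCES dim_product(product_id),
            quantity INTEGER,
            unit_price REAL
        );
        INSERT INTO dim_product VALUES
            (1, 'Laptop', 'Electronics'),
            (2, 'Phone', 'Electronics'),
            (3, 'Desk', 'Furniture');
        INSERT INTO fact_sales VALUES
            (1, 2, 1000.0),
            (2, 3, 500.0),
            (3, 1, 250.0);
    """)
    return conn


def revenue_by_category(conn):
    """Join facts to the product dimension and aggregate revenue."""
    return conn.execute("""
        SELECT p.category, SUM(f.quantity * f.unit_price) AS revenue
        FROM fact_sales f
        JOIN dim_product p ON p.product_id = f.product_id
        GROUP BY p.category
        ORDER BY p.category
    """).fetchall()


warehouse = build_warehouse()
print(revenue_by_category(warehouse))
```

The SQL in revenue_by_category is the kind of analytical query a warehouse is built to answer. The engine underneath changes from platform to platform, but the modeling idea stays the same.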

4. Understand ETL (Extract, Transform, Load) Processes

ETL is fundamental in data engineering:

- Extract: Collect data from various sources.

- Transform: Clean and process data into a usable format.

- Load: Store the transformed data into a database or data warehouse.

Tools like Apache NiFi, Talend, and Apache Airflow are popular for designing and managing ETL workflows.
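Before picking up one of those tools, it helps to write the three steps by hand once. The following is a minimal sketch using only Python’s standard library: it extracts rows from CSV text, transforms them by trimming and normalizing values, and loads the result into SQLite. The sample data, the column names, and the cleaning rules are assumptions made for this example.

```python
import csv
import io
import sqlite3

# Hypothetical raw input with messy whitespace, mixed case, and a blank name.
RAW_CSV = """name,signup_date,country
 Asha ,2025-01-05,in
Ben,2025-02-11,US
,2025-03-01,de
"""


def extract(text):
    """Extract: read raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim names, upper-case country codes, drop rows with no name."""
    cleaned = []
    for row in rows:
        name = row["name"].strip()
        if not name:
            continue  # skip records that fail a basic quality check
        cleaned.append((name, row["signup_date"], row["country"].strip().upper()))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users (name TEXT, signup_date TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT name, country FROM users ORDER BY name").fetchall()
print(loaded)
```

Orchestration tools add scheduling, retries, and monitoring around functions like these, but the extract, transform, and load steps themselves look much the same.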

5. Explore Big Data Technologies

Handling large datasets requires specialized tools:

- Apache Hadoop: Utilizes distributed storage and processing to manage big data.

- Apache Spark: Offers fast, in-memory data processing capabilities.

- Apache Kafka: A distributed event streaming platform capable of handling real-time data feeds.
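The idea behind these tools is to split work across many machines and then combine the partial results. The sketch below imitates the map, shuffle, and reduce steps of the MapReduce model (the processing model Hadoop popularized) in plain Python on a single machine. It does not use any Hadoop or Spark API, and the function names and sample chunks are invented for illustration.

```python
from collections import defaultdict


def map_phase(chunk):
    """Map: turn each line of a chunk into (word, 1) pairs."""
    return [(word.lower(), 1) for line in chunk for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all values that share the same key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: collapse each key's values into a single count."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(chunks):
    """Run map on every chunk, then shuffle and reduce the combined output.
    On a real cluster each chunk would be mapped on a different machine."""
    mapped = []
    for chunk in chunks:
        mapped.extend(map_phase(chunk))
    return reduce_phase(shuffle(mapped))


# Each inner list plays the role of a data block stored on a separate node.
chunks = [["spark kafka spark"], ["hadoop spark", "kafka"]]
counts = word_count(chunks)
print(counts)
```

Because each chunk is mapped independently, the map step can run in parallel, which is what lets this pattern scale to datasets that do not fit on one machine.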

Conclusion

Mastering data engineering requires a structured approach that blends foundational knowledge with hands-on experience.

Start with Python and SQL, the core languages for handling and manipulating data. Build expertise in databases (both SQL and NoSQL), ETL processes, and data warehousing to understand how data is collected, processed, and stored.

Data engineering is an ever-evolving field, and continuous learning is the key to success. If you stay consistent, work on real-world problems, and adapt to new technologies, you will be well on your way to becoming a skilled data engineer.

FAQs

What is data engineering, and what does a data engineer do?

Data engineering involves building and maintaining systems that collect, store, and process large volumes of data. Data engineers design and construct data pipelines, integrate data from various sources, and ensure the scalability and efficiency of these systems.

What skills are essential for a career in data engineering?

Key skills for data engineers include proficiency in programming languages like Python and Java, expertise in SQL and NoSQL databases, understanding of ETL (Extract, Transform, Load) processes, and familiarity with big data tools such as Apache Spark and Hadoop.

How does data engineering differ from data science?

While both fields deal with data, data engineering focuses on designing and managing the infrastructure and tools for data collection and storage. In contrast, data science involves analyzing and interpreting complex data to inform business decisions.

What are ETL and ELT pipelines, and how do they differ?

ETL (Extract, Transform, Load) involves extracting data from sources, transforming it into a suitable format, and loading it into a destination system.

ELT (Extract, Load, Transform) first loads raw data into a storage system and then transforms it as needed.

The choice between ETL and ELT depends on factors like data volume, processing requirements, and system architecture.

What are some common challenges faced in data engineering?

Data engineers often encounter challenges such as handling large and complex datasets, ensuring data quality and consistency, integrating data from diverse sources, scaling systems to accommodate growth, and staying updated with rapidly evolving technologies and tools.
