Data Engineering Syllabus 2024 | A Complete Guide

Last updated: Oct 24, 2024

Do you want to become a Data Engineer, but are confused about what to study and find yourself aimlessly browsing the internet without a clear Data Engineering syllabus?

Worry not. To ease your burden and give you a clear picture of the data engineering syllabus, we have put together everything you need to learn to build expertise. In this article, you will get an in-depth look at the data engineering syllabus and the topics you need to cover to become an expert in the field.

Table of contents

- What is Data Engineering?

- Key Roles in Data Engineering

- Data Engineering Syllabus

- Introduction to Data Engineering

- Data Storage Technologies

- Data Processing Technologies

- Data Integration and ETL

- Data Modeling and Architecture

- Data Pipeline Orchestration

- Data Quality and Testing

- Cloud Platforms and Services

- Data Security and Compliance

- Scalability and Performance

- Case Studies and Real-world Projects

- Emerging Trends in Data Engineering

- Final Project

- Conclusion

- FAQs

- What are the primary roles and responsibilities of a data engineer?

- Can I transition from software engineering to data engineering?

- What technologies are typically covered in a Data Engineering Syllabus?

- Why is Data Engineering important?

What is Data Engineering?

Data engineering is a field within data management that involves designing, constructing, and maintaining systems, pipelines, and architectures to collect, store, process, and transform raw data into usable and valuable information.

It focuses on creating the infrastructure and processes that allow organizations to effectively manage and utilize their data for various purposes, including analytics, reporting, machine learning, and decision-making.

If you want to build a deeper knowledge of data engineering concepts before moving on, you can consider enrolling in GUVI’s Big Data and Cloud Analytics Course, which lets you gain practical experience by building real-world projects and covers topics including data cleaning, data visualization, Infrastructure as Code, databases, shell scripting, orchestration, cloud services, and many more.

Additionally, if you would like to explore Data Engineering and Big Data through a Self-paced course, try GUVI’s Data Engineering and Big Data self-paced course.

Key Roles in Data Engineering

In the field of data engineering, various roles and responsibilities exist, each contributing to the design, construction, and maintenance of data pipelines, infrastructure, and systems. Here are some common roles you might find in data engineering:

- Data Engineer: Data engineers are responsible for designing, building, and maintaining data pipelines and architectures.

- Data Integration Engineer: Data integration engineers focus on integrating data from disparate sources into a unified format.

- ETL Developer: ETL (Extract, Transform, Load) developers specialize in designing and implementing ETL processes. They extract data from various sources, transform it according to business rules, and load it into target systems.

- Big Data Engineer: Big data engineers work with massive volumes of data, often using distributed computing frameworks.

It is also important to understand the skills a data engineer needs, as they give you more insight into the work a data engineer is expected to do.

Data Engineering Syllabus

A data engineering syllabus typically covers a range of topics related to the design, construction, and management of data pipelines and infrastructure to support data-driven applications and analytics.

Here’s a comprehensive syllabus that covers various aspects of data engineering.

1. Introduction to Data Engineering

This first module of the data engineering syllabus introduces you to the fundamental concepts of data engineering. It explores the crucial role data engineering plays in the modern data landscape, including its significance in supporting data-driven decision-making.

The module covers the data lifecycle from ingestion to visualization, providing a comprehensive overview of the processes involved in handling data effectively.

You will learn the following topics in this module:

- Overview of Data Engineering: Role, importance, and challenges.

- Data Engineering vs. Data Science vs. Data Analytics.

- Data Lifecycle: Ingestion, storage, processing, analysis, and visualization.
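
To make the lifecycle concrete, here is a minimal Python sketch that walks a handful of records through each stage. The sample data, table name, and function names are hypothetical, and an in-memory SQLite database plus a plain-text bar chart stand in for real storage and visualization tools.

```python
import csv
import io
import sqlite3
from statistics import mean

# Hypothetical raw input standing in for an ingested source (e.g. a CSV export).
RAW_CSV = """city,temp_c
Chennai,31
Bengaluru,24
Chennai,33
Bengaluru,
"""


def ingest(text: str) -> list[dict]:
    """Ingestion: parse raw CSV text into row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def store(rows: list[dict], conn: sqlite3.Connection) -> None:
    """Storage: persist the raw rows unchanged in a table."""
    conn.execute("CREATE TABLE readings (city TEXT, temp_c TEXT)")
    conn.executemany("INSERT INTO readings VALUES (:city, :temp_c)", rows)


def process(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Processing: drop rows with missing values and cast types."""
    cur = conn.execute("SELECT city, temp_c FROM readings WHERE temp_c != ''")
    return [(city, float(temp)) for city, temp in cur]


def analyze(records: list[tuple[str, float]]) -> dict[str, float]:
    """Analysis: average temperature per city."""
    by_city: dict[str, list[float]] = {}
    for city, temp in records:
        by_city.setdefault(city, []).append(temp)
    return {city: mean(values) for city, values in by_city.items()}


def visualize(summary: dict[str, float]) -> None:
    """Visualization: a text bar chart stands in for a dashboard."""
    for city, avg in sorted(summary.items()):
        print(f"{city:<10} {'#' * round(avg)} {avg:.1f}")


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    store(ingest(RAW_CSV), conn)
    visualize(analyze(process(conn)))
```

In practice each stage is usually a separate system, but the hand-offs between stages follow the same shape.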

2. Data Storage Technologies

The second module of the data engineering syllabus delves into various data storage solutions, including relational databases, NoSQL databases, data warehouses, and distributed file systems.

You also learn about the strengths and weaknesses of each technology, how to design efficient storage systems, and how to choose the appropriate solution based on specific use cases.

In this module, you will learn in-depth about the following:

- Relational Databases: SQL databases, schema design, normalization, and indexing.

- NoSQL Databases: Document, key-value, column-family, and graph databases.

- Data Warehouses: Concepts, star and snowflake schemas, OLAP, and data marts.

- Distributed File Systems and Object Storage: Hadoop HDFS, Google Cloud Storage, Amazon S3.
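
As a small illustration of schema design, normalization, and indexing, the sketch below uses Python's built-in `sqlite3` module. The `customers` and `orders` tables and the sample rows are made up for the example; production systems would more likely run PostgreSQL or MySQL, though the SQL is similar.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled

# Normalized design: customer details live in one table and orders reference
# them by key, instead of repeating name/email on every order row.
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL NOT NULL,
    order_date  TEXT NOT NULL
);
-- Secondary index to speed up the common "orders for a customer" lookup.
CREATE INDEX idx_orders_customer ON orders(customer_id);
""")

conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", [
    (1, "Asha", "asha@example.com"),
    (2, "Ravi", "ravi@example.com"),
])
conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", [
    (10, 1, 250.0, "2024-10-01"),
    (11, 1, 100.0, "2024-10-03"),
    (12, 2, 75.5, "2024-10-04"),
])

# Join the normalized tables back together for a per-customer total.
totals = conn.execute("""
    SELECT c.name, SUM(o.amount)
    FROM customers AS c JOIN orders AS o USING (customer_id)
    GROUP BY c.name ORDER BY c.name
""").fetchall()

# EXPLAIN QUERY PLAN reports whether SQLite would use the index for a lookup.
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT * FROM orders WHERE customer_id = ?", (1,)
).fetchall()

if __name__ == "__main__":
    print(totals)
    for row in plan:
        print(row[-1])  # last column holds the human-readable plan detail
```

Because customer details are stored once, updating an email touches a single row rather than every order, and the index trades some extra write and storage cost for faster lookups by `customer_id`.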

3. Data Processing Technologies

In this module, you are introduced to data processing paradigms such as batch processing and stream processing. This module explores how these technologies enable efficient processing of data, whether in large batches or in real-time streams.

You will learn about the technologies that make this processing efficient:

- Batch Processing: MapReduce, Apache Spark, data parallelism.

- Stream Processing: Apache Kafka, Apache Flink, event-driven architectures.

- In-Memory Computing: Redis, Apache Ignite, Memcached.
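
The MapReduce model behind batch frameworks like Hadoop and Spark, and the windowed aggregation common in stream processors, can be sketched in plain Python. This is a single-process illustration under simplifying assumptions (events arrive in order, no late data, no fault tolerance). It does not use the Hadoop, Spark, Kafka, or Flink APIs, and the function names are hypothetical.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line: str) -> list[tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs) -> dict[str, list[int]]:
    """Shuffle: group all emitted values by key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Reduce: combine each key's values into a single result."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines) -> dict[str, int]:
    # In a real framework, map calls run in parallel on separate partitions
    # and the shuffle moves data between machines.
    return reduce_phase(shuffle(chain.from_iterable(map(map_phase, lines))))


def tumbling_window_counts(events, window_seconds: int) -> dict[tuple[int, str], int]:
    """Stream-style aggregation: count events per fixed, non-overlapping window.

    `events` is an iterable of (timestamp_seconds, key) pairs in arrival order;
    results are keyed by (window_start, key).
    """
    counts: dict[tuple[int, str], int] = defaultdict(int)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)
        counts[(window_start, key)] += 1
    return dict(counts)


if __name__ == "__main__":
    print(word_count(["the cat sat", "The dog sat"]))
    print(tumbling_window_counts([(0, "click"), (30, "click"), (61, "view")], 60))
```

The batch version sees the whole dataset before producing results, while the windowed version can emit each window's counts as soon as that window closes.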

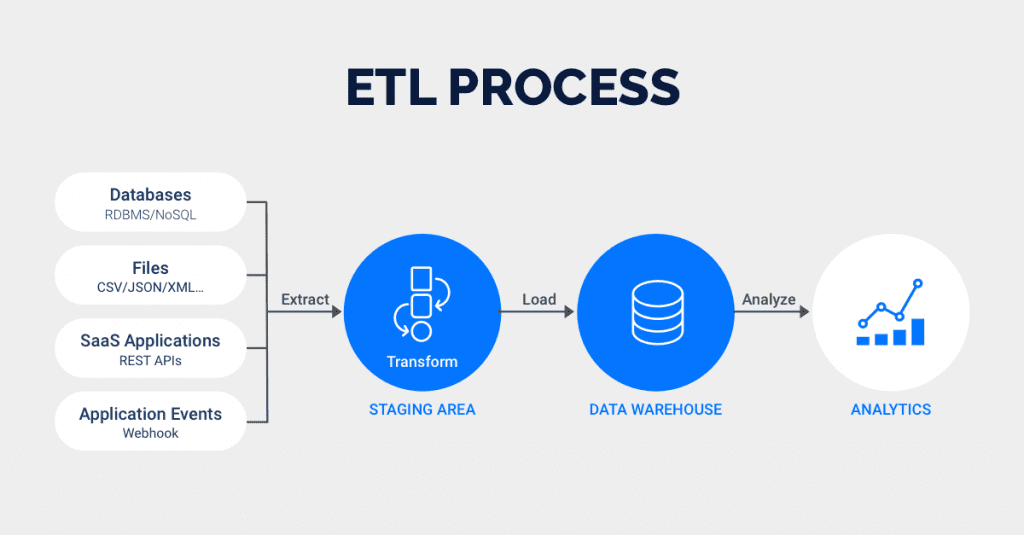

4. Data Integration and ETL

The fourth module in the data engineering syllabus covers Extract, Transform, and Load (ETL) processes, which are essential for integrating data from various sources.

You will also learn about data extraction techniques, data transformation methods, and loading data into target systems, with an emphasis on practical skills in designing and implementing ETL pipelines.

Tools and topics covered in this module include:

- Data Integration Tools: Apache NiFi, Talend, Informatica.

- Data Transformation: Data cleaning, enrichment, aggregation, and denormalization.
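
A minimal ETL pipeline in Python might look like the sketch below. The CSV content, the `STORE_REGIONS` lookup, and the `sales_by_region` table are hypothetical. Tools such as NiFi, Talend, or Informatica provide the same extract, transform, and load steps with connectors, scheduling, and monitoring on top.

```python
import csv
import io
import sqlite3

# Hypothetical source data and reference table used only for illustration.
SALES_CSV = """order_id,store_id,amount
1, S1 ,120.50
2,S2,80
3,S1,not_a_number
4,S3,42.25
"""
STORE_REGIONS = {"S1": "South", "S2": "North", "S3": "South"}


def extract(text: str) -> list[dict]:
    """Extract: read raw records from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple[str, float]]:
    """Transform: clean, enrich with a lookup, then aggregate per region."""
    totals: dict[str, float] = {}
    for row in rows:
        store_id = row["store_id"].strip()  # cleaning: trim stray whitespace
        try:
            amount = float(row["amount"])  # cleaning: reject malformed numbers
        except ValueError:
            continue
        region = STORE_REGIONS.get(store_id, "Unknown")  # enrichment
        totals[region] = totals.get(region, 0.0) + amount  # aggregation
    return sorted(totals.items())


def load(records: list[tuple[str, float]], conn: sqlite3.Connection) -> None:
    """Load: write the transformed records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_region (region TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO sales_by_region VALUES (?, ?)", records)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(transform(extract(SALES_CSV)), conn)
    print(conn.execute("SELECT * FROM sales_by_region ORDER BY region").fetchall())
```

Using `INSERT OR REPLACE` keyed on `region` makes the load step idempotent, so rerunning the pipeline overwrites the totals instead of duplicating them.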

5. Data Modeling and Architecture

In this module of the data engineering syllabus, you explore data modeling techniques, including conceptual, logical, and physical models. The module also delves into dimensional modeling for data warehouses, showing you how to design efficient schemas such as star and snowflake schemas.

This module also introduces concepts of data governance and data lineage.
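
A star schema can be sketched with SQLite: a central fact table holds measurements (quantity, revenue) plus foreign keys, while dimension tables hold descriptive attributes. The table names and data below are illustrative assumptions. In a snowflake schema, `category` would move into its own table referenced from `dim_product`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Star schema: one fact table of measurements surrounded by dimension tables.
conn.executescript("""
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    name        TEXT,
    category    TEXT
);
CREATE TABLE dim_date (
    date_key INTEGER PRIMARY KEY,   -- e.g. 20241001
    year     INTEGER,
    month    INTEGER
);
CREATE TABLE fact_sales (
    product_key INTEGER REFERENCES dim_product(product_key),
    date_key    INTEGER REFERENCES dim_date(date_key),
    quantity    INTEGER,
    revenue     REAL
);
INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
INSERT INTO dim_date VALUES (20241001, 2024, 10), (20241102, 2024, 11);
INSERT INTO fact_sales VALUES
    (1, 20241001, 2, 1800.0),
    (2, 20241001, 1, 150.0),
    (1, 20241102, 1, 900.0);
""")

# Typical dimensional query: join facts to dimensions, then group by attributes.
revenue_by_category_month = conn.execute("""
    SELECT p.category, d.month, SUM(f.revenue) AS revenue
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    JOIN dim_date    AS d ON d.date_key    = f.date_key
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()

if __name__ == "__main__":
    for row in revenue_by_category_month:
        print(row)
```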

6. Data Pipeline Orchestration

In this module, you will learn about workflow management systems such as Apache Airflow, which enable the automation and orchestration of data pipelines.

This helps you understand how to schedule, monitor, and manage dependencies between tasks in complex data workflows.
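
The core job of an orchestrator, running each task only after its upstream dependencies finish, can be sketched with Python's standard-library `graphlib` module. This is not Airflow's API; the task names and the `run_pipeline` helper are hypothetical, and real orchestrators add scheduling, retries, and monitoring on top of this ordering.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "quality_checks": {"transform"},
    "load_warehouse": {"quality_checks"},
}


def run_pipeline(dag: dict[str, set[str]], run_task) -> list[str]:
    """Run tasks in an order that respects every dependency.

    Tasks whose dependencies are all done become ready together; an
    orchestrator could run each ready batch in parallel.
    """
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises CycleError if the dependencies form a loop
    executed = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for deterministic order
        for task in ready:
            run_task(task)
            executed.append(task)
        sorter.done(*ready)
    return executed


if __name__ == "__main__":
    run_pipeline(PIPELINE, lambda task: print(f"running {task}"))
```

Modeling the pipeline as a directed acyclic graph is what lets the orchestrator detect impossible (cyclic) dependencies before anything runs.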

7. Data Quality and Testing

This module focuses on ensuring the quality of data throughout the data pipeline. You study data profiling, validation, and cleansing techniques.

You will also learn about testing strategies specific to data engineering, including unit testing and integration testing of data pipelines.
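
A simple validation check and a unit test for it might look like the following sketch. The column names and rules (unique `id`, an `@` in `email`, `age` between 0 and 120) are hypothetical examples chosen to show profiling-style checks alongside a `unittest` test case.

```python
import unittest


def validate_rows(rows, required=("id", "email", "age")) -> list[str]:
    """Return a list of human-readable problems found in the rows."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows):
        missing = [col for col in required if row.get(col) in (None, "")]
        if missing:
            problems.append(f"row {i}: missing {', '.join(missing)}")
            continue
        if row["id"] in seen_ids:
            problems.append(f"row {i}: duplicate id {row['id']}")
        seen_ids.add(row["id"])
        if "@" not in row["email"]:
            problems.append(f"row {i}: invalid email {row['email']!r}")
        if not 0 <= row["age"] <= 120:
            problems.append(f"row {i}: age {row['age']} out of range")
    return problems


class ValidateRowsTest(unittest.TestCase):
    def test_clean_rows_pass(self):
        rows = [{"id": 1, "email": "a@example.com", "age": 30}]
        self.assertEqual(validate_rows(rows), [])

    def test_bad_rows_are_reported(self):
        rows = [
            {"id": 1, "email": "a@example.com", "age": 30},
            {"id": 1, "email": "b@example.com", "age": 40},  # duplicate id
            {"id": 2, "email": "not-an-email", "age": 200},  # two problems
            {"id": 3, "email": "", "age": 25},  # missing email
        ]
        self.assertEqual(validate_rows(rows), [
            "row 1: duplicate id 1",
            "row 2: invalid email 'not-an-email'",
            "row 2: age 200 out of range",
            "row 3: missing email",
        ])
```

Save the file and run `python -m unittest your_file.py` to execute the tests. The same check can also run inside the pipeline itself, failing a batch before bad rows reach the warehouse.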

8. Cloud Platforms and Services

You are introduced to cloud computing platforms such as AWS, Google Cloud, and Azure. Here, you will learn how to leverage cloud services for data storage, processing, and analytics. The module covers managed data services like AWS Glue, Google Dataflow, and Azure Data Factory.

Key points in this module will be:

- Introduction to Cloud Computing: AWS, Google Cloud, Azure.

- Managed Data Services: AWS Glue, Google Dataflow, Azure Data Factory.

- Serverless Architectures for Data Processing.

9. Data Security and Compliance

You will explore data security best practices, including encryption of data at rest and in transit. You also learn about access control mechanisms and authentication methods.

Additionally, you will gain an understanding of data privacy regulations and compliance requirements.
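
Two of these ideas, access control and protecting personal identifiers, can be sketched with Python's standard library. The roles and permission strings are hypothetical, and keyed hashing (HMAC-SHA256) is shown as a pseudonymization technique. It is not a substitute for encrypting data at rest and in transit, which is normally handled by the storage layer and TLS.

```python
import hashlib
import hmac

# Hypothetical role-to-permission mapping; real systems keep this in an IAM
# service or the database's own grant system.
ROLE_PERMISSIONS = {
    "analyst": {"read:sales"},
    "engineer": {"read:sales", "write:sales", "read:customers"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Least privilege: deny anything not explicitly granted to the role."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash.

    The same input and key always give the same output, so records can still
    be joined, but the raw value is not exposed. This is one-way, not encryption.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    print(is_allowed("analyst", "write:sales"))
    print(pseudonymize("asha@example.com", b"demo-key-keep-me-secret"))
```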

10. Scalability and Performance

This module focuses on designing data systems that can scale to handle large volumes of data. You will learn about horizontal and vertical scaling, load balancing, and performance optimization techniques to ensure efficient data processing.
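
Horizontal scaling usually depends on splitting data across nodes and spreading work across them. The sketch below shows hash partitioning and round-robin load balancing in plain Python; the function and class names are hypothetical, and real systems such as Kafka or Spark implement their own variants of these ideas.

```python
import hashlib
from itertools import cycle


def partition_for(key: str, num_partitions: int) -> int:
    """Hash partitioning: the same key always lands on the same partition.

    A stable hash (MD5 here) is used instead of Python's built-in hash(),
    which is randomized per process for strings.
    """
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


class RoundRobinBalancer:
    """Spread incoming requests evenly across a fixed pool of workers."""

    def __init__(self, workers):
        self._next = cycle(workers)

    def pick(self):
        return next(self._next)


if __name__ == "__main__":
    print({f"user-{i}": partition_for(f"user-{i}", 4) for i in range(5)})
    balancer = RoundRobinBalancer(["worker-1", "worker-2", "worker-3"])
    print([balancer.pick() for _ in range(5)])
```

With plain modulo hashing, changing `num_partitions` moves most keys to new partitions; consistent hashing is a common refinement when nodes are added or removed frequently.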

11. Case Studies and Real-world Projects

You get to analyze real-world data engineering use cases and projects. You will also gain hands-on experience in designing and implementing end-to-end data pipelines, handling diverse data types, and addressing scalability and performance challenges.

12. Emerging Trends in Data Engineering

This module covers current trends in data engineering, such as the integration of big data and machine learning, DataOps practices, and serverless data processing. You gain insights into the evolving landscape of data engineering technologies and practices.

13. Final Project

In the culminating module, you get to work on a comprehensive data engineering project. You will apply the knowledge gained throughout the course to design, implement, and optimize a complete data pipeline. You can present your projects, showcasing your proficiency in data engineering concepts and skills.

This syllabus provides a comprehensive overview of the data engineering field, equipping students with the knowledge and skills needed to excel in designing, building, and maintaining data pipelines and infrastructure.

Kickstart your career by enrolling in GUVI’s Big Data and Cloud Analytics Course, where you will master skills such as data cleaning, data visualization, Infrastructure as Code, databases, shell scripting, orchestration, and cloud services, and build interesting real-life cloud computing projects.

Alternatively, if you want to explore Data Engineering and Big Data through a Self-paced course, try GUVI’s Data Engineering and Big Data Self-Paced course.

Conclusion

In conclusion, a well-structured data engineering syllabus serves as a guiding roadmap for anyone seeking to navigate the dynamic landscape of modern data management. Whenever you consider an online Data Engineering course, check which of these topics it covers.

As data continues to shape industries and drive innovation, a comprehensive syllabus empowers learners with the essential skills to design, construct, and manage robust data pipelines and architectures.

From foundational concepts to emerging trends, each module contributes to a holistic understanding of data engineering’s intricacies. By equipping students with the ability to tackle data integration, quality assurance, security, and scalability, a thoughtfully crafted syllabus paves the way for adept data engineers who can transform raw information into actionable insights, pushing organizations toward data-driven success in an ever-evolving digital world.

FAQs

What are the primary roles and responsibilities of a data engineer?

Data engineers design, build, and maintain data pipelines, ensuring data is collected, transformed, and stored effectively. They work with various teams to ensure data availability, quality, and accessibility.

Can I transition from software engineering to data engineering?

Yes, many skills from software engineering are transferable to data engineering. You might need to learn database management, data processing frameworks, and other relevant skills.

What technologies are typically covered in a Data Engineering Syllabus?

A syllabus might cover technologies like Apache Spark, Hadoop, SQL databases, NoSQL databases, cloud services (AWS, GCP, Azure), ETL tools (Apache NiFi, Talend), and workflow management tools (Apache Airflow).

Why is Data Engineering important?

Data engineering is crucial for managing and transforming raw data into usable insights. It enables organizations to make informed decisions, develop data-driven applications, and conduct meaningful analytics.
