Build your own personal voice assistant like Siri, Alexa using Python

Oct 22, 2024 6 Min Read 11479 Views

(Last Updated)

The world we see today feels like a sci-fi utopia, blending technology, design, and nature in a way that is harmonious and seamless. We effortlessly use a plethora of technologies. For instance, could you have imagined a decade ago that you would be able to talk to a phone, console, or speaker and have it carry out tasks from your voice commands alone, with no other action on your part? We still can’t seek companionship and fall in love with our AI operating system as shown in the movie ‘Her’, but voice assistants have come a long way and have reduced the need for computer peripherals. In this blog, we will trace the history of virtual assistants and show how you can program and build your own AI personal virtual assistant using Python.

Table of contents

- From Shoebox to Smart Speakers

- Let's get started with Personal Voice-Assistant AI development

- The components & Python Packages for Voice interface

- Voice Input/output

- NLP & Intelligent Interpretation

- Subprocesses

- Compress the speech

- Other libraries

- Writing script for Personal Voice Assistants

- Setting up Speech Engine

- 'RunAndWait' Command

- Greeting the User

- Setting up command function for our personal voice assistant

- The Ongoing Function

- Summoning Skills

- Accessing Data from Web Browsers-G-Mail, Google Chrome & YouTube

- Fetching Data with Wikipedia API

- Time Prediction

- Clicking Pictures

- To fetch latest news

- Fetching Data from web

- Wolfram Alpha API for geographical and computational questions

- Weather Forecasting

- Credits

- Subprocesses-Log Off Your System

- Wrapping up

From Shoebox to Smart Speakers

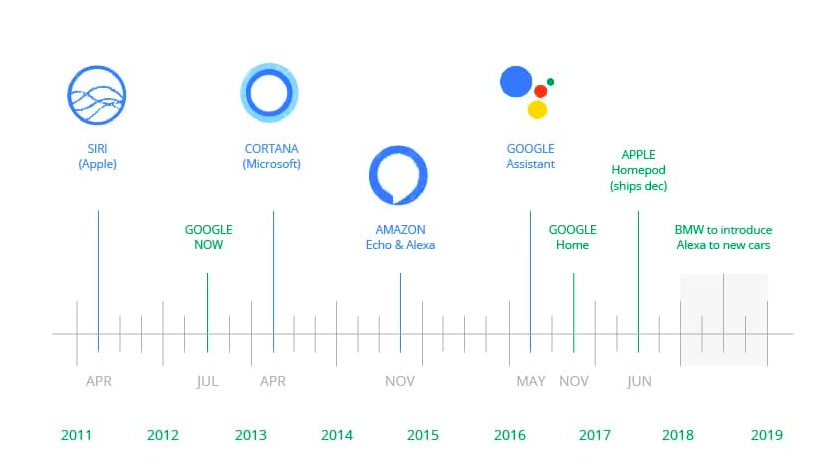

For those who don’t know, an AI virtual assistant is a piece of software that understands written or verbal commands and completes tasks assigned by the user. The first attempts at voice assistants can be traced back to the early ’60s, when IBM introduced Shoebox, one of the first digital speech recognition tools. While very primitive, it recognized 16 spoken words, including the digits 0 through 9. The next breakthrough came in the ’90s, when Dragon launched one of the first software products to offer competent voice recognition and transcription.

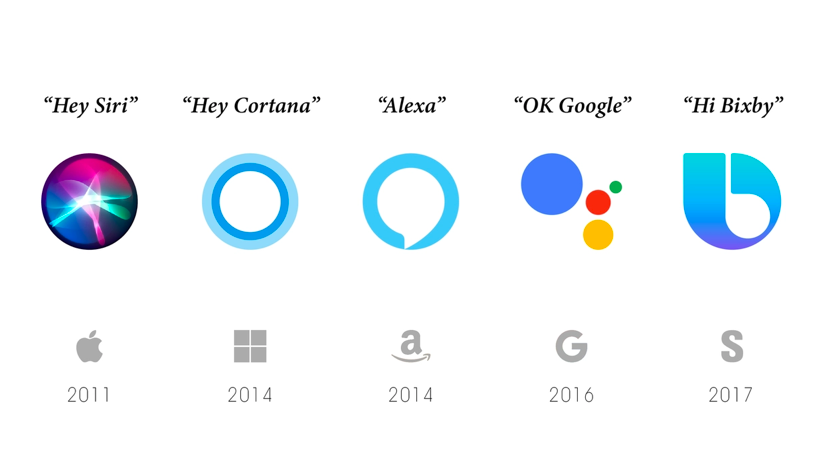

Virtual assistants became mainstream with Siri, which launched as a standalone iOS app in February 2010 and was integrated by Apple into the iPhone 4S in October 2011. The team used a mixture of Natural Language Processing and speech recognition to drive the virtual assistant innovation. Siri later gained the wake-up phrase “Hey Siri”, after which a user could ask a question, for instance, “What’s the weather like in Chennai today?”. The recognized text was then passed to NLP software to interpret. After Siri, Google Now and Microsoft’s Cortana soon followed the trend.

The next milestone was achieved by Amazon’s Alexa and its launch on the Amazon Echo, ushering in what we today call “The Smart Speaker”, along with the birth of Voicebot.ai.

The Smart Speaker will play out for years to come, but we expect that the voice assistant revolution will later morph into an ambient voice revolution in which assistants aren’t constrained by a limited set of devices or by assigned user tasks. Instead, they will be embedded into the environments we inhabit.

If you would like to explore Python programming through a Self-paced course, try GUVI’s Python Course with IIT Certification.

Let’s get started with Personal Voice-Assistant AI development

Let’s make a distinction here before we start. If you want to build voice and NLP capabilities into your own application, you have several cloud and API options. For Apple, you can use the SiriKit API, along with the $99 annual cost of registering yourself as an Apple developer and publishing on the App Store. One such example is Swiggy and its voice command to track the delivery partner. Other cloud options include Amazon’s Alexa with an AWS account and Google Now.

But in case you don’t want to lock yourself into a particular ecosystem, you can develop your own voice-assistant system. It’s just a matter of speech recognition, a pipeline, a rules engine, a query parser, and a pluggable architecture with open APIs.

The components & Python Packages for Voice interface

Now we’d like to discuss the basic technologies in AI voice assistants. Simply put, what makes one different from a visual interface and characterizes it as a voice interface?

There are a few components of a voice assistant:

Voice Input/output

It implies that the user does not need to touch their screen or GUI elements to make a request. A voice command is more than enough. Our voice assistant software will perform the given task using speech-to-text (STT): it converts the user’s spoken request into text, analyzes it, and performs it. We will be using the SpeechRecognition package to convert speech to text and the pyttsx3 package to convert text back to speech. The packages support macOS, Linux, and Windows.

NLP & Intelligent Interpretation

Our voice assistant shouldn’t be limited to certain catchphrases; the user should be free while communicating. The response is built by picking out the elements of the request that matter to your user. We will be integrating the Wolfram Alpha API to compute expert-level answers using Wolfram’s knowledge base, algorithms, and AI technology, all made possible by the Wolfram Language.

Subprocesses

Python’s standard library lets the assistant handle various system tasks such as logging off or restarting, reporting the current time, and setting alarms. We will use the subprocess module to run system commands and the os module to interact with the operating system.

Compress the speech

This feature of our voice assistant is responsible for the fast delivery of a command response to the user. We will use JSON for storing and exchanging data, for example when reading the responses returned by web APIs. It’s reliable and fast.

Other libraries

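Apart from the essential features, we will use several other Python libraries such as wikipedia, ecapture, time, datetime, requests, and others to enable more functions.

To begin with, it’s necessary to install the third-party packages mentioned above using the pip command, for example: pip install SpeechRecognition pyttsx3 wikipedia ecapture wolframalpha requests (sr.Microphone additionally needs PyAudio). The time, datetime, os, subprocess, json, and webbrowser modules ship with Python. If you want to brush up on your Python fundamentals, visit here.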

Writing script for Personal Voice Assistants

First of all, install the libraries from the terminal using the pip command, then import them at the top of the script. For the sake of clarity, we’ll name our personal voice assistant “JARVIS-One”. (Any resemblance is uncanny.)

    import speech_recognition as sr
    import pyttsx3
    import datetime
    import os
    import time
    import subprocess
    import wikipedia
    import webbrowser
    from ecapture import ecapture as ec
    import wolframalpha
    import json
    import requests

Setting up Speech Engine

We are going to use SAPI5, a Microsoft text-to-speech engine, for speech synthesis. The pyttsx3 engine object is stored in a variable named engine. We can set the voice to index 0 or 1 of the installed voices. On a typical Windows setup, index 0 is a male voice and index 1 is a female voice.

    engine = pyttsx3.init('sapi5')  # SAPI5 is available on Windows only
    voices = engine.getProperty('voices')
    # Pass the voice id itself, not the string 'voices[0].id'
    engine.setProperty('voice', voices[0].id)
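The text above says the packages support macOS, Linux, and Windows, while the 'sapi5' driver exists only on Windows. A minimal sketch of choosing a driver name per platform could look like the following. The helper name driver_for_platform is hypothetical; the driver names sapi5, nsss, and espeak are the ones pyttsx3 documents for Windows, macOS, and other platforms.

```python
import platform

# Driver names documented by pyttsx3 for each operating system.
_DRIVERS = {"Windows": "sapi5", "Darwin": "nsss"}


def driver_for_platform(system=None):
    """Return a pyttsx3 driver name for the given OS name (hypothetical helper)."""
    system = system or platform.system()
    # Anything that is not Windows or macOS falls back to eSpeak.
    return _DRIVERS.get(system, "espeak")


# Usage (requires pyttsx3 to be installed):
#   engine = pyttsx3.init(driver_for_platform())
```

Calling pyttsx3.init() with no argument should also pick the default driver for the current platform, so this helper is mainly useful when you want the choice to be explicit.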

Further, we will define a function speak that converts text to speech. The speak function takes the text as an argument, queues it on the engine, and waits until it has been spoken.

‘RunAndWait’ Command

Just as the name suggests, this function blocks while processing all currently queued commands. It invokes callbacks for the appropriate engine notifications and returns when all commands queued before the call have been emptied from the queue.

    def speak(text):
        engine.say(text)       # queue the text to be spoken
        engine.runAndWait()    # block until the queue is empty

Greeting the User

We define a wishMe function for the personal voice assistant to greet the user. The now().hour attribute extracts the hour from the current time.

If the hour is at least 0 and less than 12, the voice assistant greets you with the message “Hello <F_name>, Good Morning”.

If the hour is at least 12 and less than 18, the voice assistant greets you with the message “Hello <F_name>, Good Afternoon”.

Otherwise, it voices the message “Hello <F_name>, Good Evening”.

    def wishMe():
        hour = datetime.datetime.now().hour
        if hour >= 0 and hour < 12:
            speak("Hello F_name, Good Morning")
            print("Hello F_name, Good Morning")
        elif hour >= 12 and hour < 18:
            speak("Hello F_name, Good Afternoon")
            print("Hello F_name, Good Afternoon")
        else:
            speak("Hello F_name, Good Evening")
            print("Hello F_name, Good Evening")
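The hour ranges described above can be checked without a microphone or speech engine by separating the greeting text from the speaking. The following is a minimal sketch under that assumption; greeting_for_hour is a hypothetical helper, not part of any library.

```python
def greeting_for_hour(hour, name="F_name"):
    """Return the greeting for an hour in the range 0-23 (hypothetical helper)."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    if hour < 12:
        part = "Good Morning"
    elif hour < 18:
        part = "Good Afternoon"
    else:
        part = "Good Evening"
    return f"Hello {name}, {part}"
```

wishMe() could then call greeting_for_hour(datetime.datetime.now().hour) once and pass the result to both speak() and print(), which avoids repeating each message twice.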

Setting up command function for our personal voice assistant

Now we need to define a function takeCommand for the personal voice assistant to capture and understand human language. The microphone captures the voice input and the recognizer converts the speech to text so the assistant can respond.

We will also incorporate exception handling to deal with errors raised at run time. The recognize_google function uses Google’s speech recognition service to recognize the audio.

    def takeCommand():
        r = sr.Recognizer()
        with sr.Microphone() as source:
            print("Listening...")
            audio = r.listen(source)

        try:
            # Send the recorded audio to Google's speech recognition service
            statement = r.recognize_google(audio, language='en-in')
            print(f"user said:{statement}\n")
        except Exception:
            speak("Pardon me, please say that again")
            return "None"
        return statement

    print("Loading your AI personal assistant JARVIS-One")
    speak("Loading your AI personal assistant JARVIS-One")
    wishMe()
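Because takeCommand() returns the string "None" when nothing is recognized, the caller has to decide how often to ask again. One possible sketch is a small retry wrapper; listen_with_retries and its max_attempts parameter are hypothetical names, and the listening function is passed in so the logic can be tried without a microphone.

```python
def listen_with_retries(listen, max_attempts=3):
    """Call listen() until it returns something other than "None".

    `listen` is any zero-argument function that behaves like takeCommand().
    Returns the lower-cased statement, or None if every attempt failed.
    """
    for _ in range(max_attempts):
        statement = listen()
        if statement != "None":
            return statement.lower()
    return None
```

In the assistant this would be called as listen_with_retries(takeCommand), and a None result could be treated as "nothing heard, ask again later".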

The Ongoing Function

The main loop starts here. The command given by the user is stored in the variable statement.

    if __name__ == '__main__':
        while True:
            speak("How can I help you now?")
            statement = takeCommand().lower()
            # takeCommand() returns "None" when nothing was recognized
            if statement == "none":
                continue

The voice assistant JARVIS-One can now listen for trigger words assigned by the user.

            if "good bye" in statement or "ok bye" in statement or "stop" in statement:
                speak('your personal assistant JARVIS-one is shutting down, Good bye')
                print('your personal assistant JARVIS-one is shutting down, Good bye')
                break
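The trigger words above are matched with substring checks, and the skills in the next section extend the same if/elif chain. As an alternative, the triggers can be kept in a table and matched in one place. This is only a sketch: SKILLS, match_skill, and the skill names are hypothetical, and only a few of the article's triggers are listed.

```python
# Each entry pairs the trigger phrases with a skill name (hypothetical names).
SKILLS = [
    (("good bye", "ok bye", "stop"), "shutdown"),
    (("open youtube",), "youtube"),
    (("wikipedia",), "wikipedia"),
    (("time",), "time"),
]


def match_skill(statement, skills=SKILLS):
    """Return the name of the first skill whose trigger occurs in statement."""
    for triggers, name in skills:
        if any(trigger in statement for trigger in triggers):
            return name
    return None
```

As with the if/elif chain, the order of entries matters, and plain substring matching means a trigger like "stop" also fires on words such as "stopwatch".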

Summoning Skills

Now that we have finished setting up the voice assistant, we will build the essential skills.

1. Accessing Data from Web Browsers-G-Mail, Google Chrome & YouTube

The open_new_tab function accepts the URL to be opened as a parameter, while Python’s time.sleep function delays the execution of the program for a given number of seconds.

            elif 'open youtube' in statement:
                webbrowser.open_new_tab("https://www.youtube.com")
                speak("youtube is open now")
                time.sleep(5)
            elif 'open google' in statement:
                webbrowser.open_new_tab("https://www.google.com")
                speak("Google is open now")
                time.sleep(5)
            elif 'open gmail' in statement:
                # Use a full URL so the browser does not treat it as a local file
                webbrowser.open_new_tab("https://gmail.com")
                speak("Google Mail open now")
                time.sleep(5)

2. Fetching Data with Wikipedia API

Once we have successfully imported the wikipedia package, we will use the following code to extract data from it. The wikipedia.summary() function lets users ask for any trivia and returns a short summary, which is stored in the variable results.

            elif 'wikipedia' in statement:
                speak('Searching Wikipedia...')
                statement = statement.replace("wikipedia", "")
                results = wikipedia.summary(statement, sentences=3)
                speak("According to Wikipedia")
                print(results)
                speak(results)

3. Time Prediction

JARVIS-One can report the current time using the datetime.datetime.now() function. The time is formatted as hours, minutes, and seconds and stored in a variable named strTime.

            elif 'time' in statement:
                strTime = datetime.datetime.now().strftime("%H:%M:%S")
                speak(f"the time is {strTime}")
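A 24-hour string with seconds can sound stilted when read aloud. As a possible variation, the time can be turned into a 12-hour phrase before it is passed to speak(). The helper spoken_time below is a hypothetical name and the wording is an assumption, not the article's original behavior.

```python
import datetime


def spoken_time(moment):
    """Format a datetime as a 12-hour clock phrase, e.g. 14:05 -> '2:05 PM'."""
    hour12 = moment.hour % 12 or 12          # 0 and 12 both read as 12
    suffix = "AM" if moment.hour < 12 else "PM"
    return f"{hour12}:{moment.minute:02d} {suffix}"
```

Inside the skill, this would be used as speak(f"the time is {spoken_time(datetime.datetime.now())}").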

4. Clicking Pictures

The ec.capture() function enables JARVIS-One to click pictures from your camera. It has 3 parameters: camera index, window name, and save name.

If there are two webcams, the first has index 0 and the second has index 1. The window name can be either a string or a variable. In case you don’t want to show this window, pass False.

You can also give a name to the clicked image. If you don’t wish to save the image, pass False.

            elif "camera" in statement or "take a photo" in statement:
                ec.capture(0, "robo camera", "img.jpg")

5. To fetch latest news

JARVIS-One is programmed to open the top headlines from the Times of India by using the webbrowser module.

            elif 'news' in statement:
                webbrowser.open_new_tab("https://timesofindia.indiatimes.com/home/headlines")
                speak('Here are some headlines from the Times of India, Happy reading')
                time.sleep(6)

6. Fetching Data from web

The open_new_tab() function can also open a web search in the browser. For instance, you can search for pictures of blue dandelions, and JARVIS-One will open Google with the results for that query.

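            elif 'search' in statement:
                query = statement.replace("search", "").strip()
                # open_new_tab() needs a full URL, so build a Google search URL
                # and percent-encode the query with requests.utils.quote
                webbrowser.open_new_tab("https://www.google.com/search?q=" + requests.utils.quote(query))
                time.sleep(5)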
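Since webbrowser.open_new_tab() expects a URL rather than free text, the spoken query has to be turned into one first. A minimal sketch using only the standard library is shown below; build_search_url is a hypothetical helper, and the Google search address with its q parameter is the assumed target.

```python
from urllib.parse import urlencode


def build_search_url(statement):
    """Turn 'search blue dandelions' into a Google search URL (hypothetical helper)."""
    # Drop the first occurrence of the trigger word and surrounding spaces.
    query = statement.replace("search", "", 1).strip()
    # urlencode escapes spaces and special characters for use in a URL.
    return "https://www.google.com/search?" + urlencode({"q": query})
```

The result can be passed straight to webbrowser.open_new_tab(build_search_url(statement)).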

7. Wolfram Alpha API for geographical and computational questions

The third-party Wolfram Alpha API enables JARVIS-One to answer computational and geographical questions. However, to access the Wolfram Alpha API, you need to create an account and get a unique app ID from the official website. client is an instance of the wolframalpha.Client class, whereas the res variable stores the response returned by Wolfram Alpha.

            elif 'ask' in statement:
                speak('I can answer computational and geographical questions. What question do you want to ask now?')
                question = takeCommand()
                app_id = "Paste your unique ID here"
                # Pass your own app ID; never hard-code someone else's key
                client = wolframalpha.Client(app_id)
                res = client.query(question)
                answer = next(res.results).text
                speak(answer)
                print(answer)
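An app ID pasted into the script is easy to leak when the code is shared. One common alternative is to read it from an environment variable. The sketch below assumes a variable named WOLFRAM_APP_ID, which is a hypothetical name chosen for this example, as is the helper load_app_id.

```python
import os


def load_app_id(env=None, var_name="WOLFRAM_APP_ID"):
    """Read the Wolfram Alpha app ID from the environment (hypothetical helper)."""
    env = os.environ if env is None else env
    app_id = env.get(var_name, "").strip()
    if not app_id:
        raise RuntimeError(
            f"Set the {var_name} environment variable to your Wolfram Alpha app ID"
        )
    return app_id


# Usage (requires the wolframalpha package):
#   client = wolframalpha.Client(load_app_id())
```

The same pattern would work for the OpenWeatherMap API key used in the next skill.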

8. Weather Forecasting

With an API key from OpenWeatherMap, your personal voice assistant can report the weather. It is an online service that offers weather data for all locations. We store the city name in the city_name variable using the takeCommand() function. Here is the code.

            elif "weather" in statement:
                api_key = "Apply your unique ID"
                base_url = "https://api.openweathermap.org/data/2.5/weather"
                speak("what is the city name")
                city_name = takeCommand()
                # Let requests build and URL-encode the query string
                response = requests.get(base_url, params={"appid": api_key, "q": city_name})
                x = response.json()
                # "cod" is 200 on success; any other value means no usable data
                if str(x.get("cod")) == "200":
                    y = x["main"]
                    current_temperature = y["temp"]
                    current_humidity = y["humidity"]
                    z = x["weather"]
                    weather_description = z[0]["description"]
                    speak(" Temperature in kelvin unit is " +
                          str(current_temperature) +
                          "\n humidity in percentage is " +
                          str(current_humidity) +
                          "\n description " +
                          str(weather_description))
                    print(" Temperature in kelvin unit = " +
                          str(current_temperature) +
                          "\n humidity (in percentage) = " +
                          str(current_humidity) +
                          "\n description = " +
                          str(weather_description))
                else:
                    speak("Sorry, I could not get the weather for that city")
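The response fields used above (cod, main.temp, main.humidity, weather[0].description) can be turned into a spoken sentence in a separate function, which also makes it easy to convert Kelvin to Celsius. The sketch below is an illustration only: summarize_weather is a hypothetical helper and the sample payload is made up, not real weather data.

```python
def summarize_weather(payload):
    """Build a spoken summary from an OpenWeatherMap-style response dict."""
    if str(payload.get("cod")) != "200":
        return "Sorry, I could not find weather data for that city"
    celsius = payload["main"]["temp"] - 273.15   # the API returns Kelvin by default
    humidity = payload["main"]["humidity"]
    description = payload["weather"][0]["description"]
    return (f"Temperature is {celsius:.1f} degrees Celsius, "
            f"humidity is {humidity} percent, {description}")


# Made-up payload for illustration only.
sample = {"cod": 200, "main": {"temp": 300.15, "humidity": 70},
          "weather": [{"description": "haze"}]}
print(summarize_weather(sample))
```

OpenWeatherMap also accepts a units=metric query parameter, which would make the manual conversion unnecessary.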


9. Credits

It will add an element of fun to program JARVIS-One to answer questions such as “what can you do” and “who created you”.

            elif 'who are you' in statement or 'what can you do' in statement:
                # Adjacent string literals are joined, so each line ends with a space
                speak('I am JARVIS-one version 1 point O your personal assistant. I am programmed to do minor tasks like '
                      'opening youtube, google chrome, gmail and stackoverflow, predict time, take a photo, search wikipedia, predict weather '
                      'in different cities, get top headline news from times of india and you can ask me computational or geographical questions too!')
            elif "who made you" in statement or "who created you" in statement or "who discovered you" in statement:
                speak("I was built by F_NAME")
                print("I was built by F_NAME")

10. Subprocesses-Log Off Your System

The subprocess.call() function is used here to run the system command that logs you off. The shutdown /l command used below is specific to Windows, and it lets your AI assistant sign you out of your PC automatically.

            elif "log off" in statement or "sign out" in statement:
                speak("Ok, your pc will log off in 10 sec, make sure you exit from all applications")
                time.sleep(10)  # give the user the announced 10 seconds
                subprocess.call(["shutdown", "/l"])  # Windows-only log-off command

Wrapping up

Now that you have got the hang of it, you can build your own personal voice assistant from scratch. Similarly, you can incorporate many of the other free APIs available to enable more functionality.

In case you want to cross-check your code, visit this Git Repository. (All credit goes to the developer.) GUVI is an IIT-M incubated springboard for knowledge and has helped millions of students with their programming journey.

