From predicting market trends to optimizing business operations, data science provides the tools and techniques needed to turn raw data into actionable insights. However, successfully executing a data science project requires more than just technical skills; it demands a structured approach that encompasses various stages from problem definition to deployment and monitoring. This is where the data science life cycle comes into play.

In this blog, we will delve into a deeper understanding of the data science life cycle, exploring each stage in detail and highlighting the popular frameworks that guide these projects. We will also discuss the key roles involved in data science initiatives and provide insights on how to embark on a career as a data scientist. So, let’s dive in and explore this concept.

Table of contents

- What is the Data Science Life Cycle?

- Steps in the Data Science Life Cycle

- Popular Frameworks for the Data Science Life Cycle

- Members involved in the Data Science Lifecycle

- Conclusion

- FAQs

- Q1. What is the data science life cycle process?

- Q2. What are the 7 steps of the data science cycle?

- Q3. What are the 5 phases of the data cycle?

What is the Data Science Life Cycle?

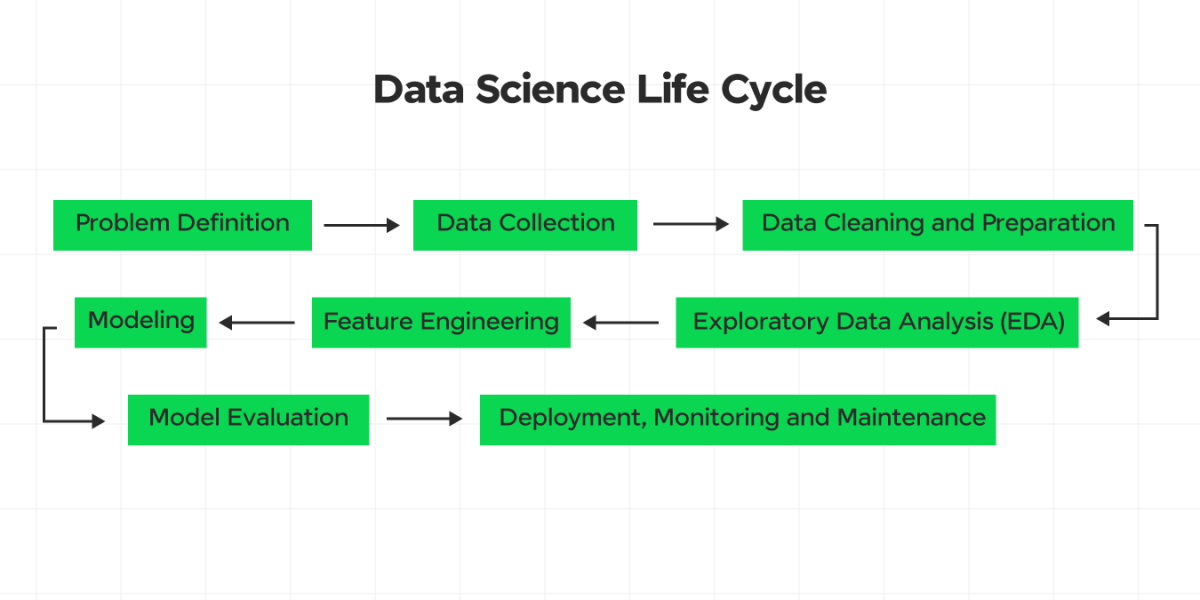

The data science life cycle is a systematic approach to managing data science projects. It encompasses a series of stages that guide data scientists from the initial problem definition to the final deployment and monitoring of solutions. The sections below walk through each of these stages in turn.

Before we move into the next section, ensure you have a good grip on data science essentials like Python, MongoDB, Pandas, NumPy, Tableau & PowerBI Data Methods. If you are looking for a detailed course on Data Science, you can join GUVI’s Data Science Course with Placement Assistance. You’ll also learn about the trending tools and technologies and work on some real-time projects. Additionally, if you want to explore Python through a self-paced course, try GUVI’s Python course.

Steps in the Data Science Life Cycle

1. Problem Definition

The first step in data science projects is to clearly define the problem you are trying to solve. This involves engaging with business stakeholders to understand their needs, challenges, and objectives. By conducting thorough stakeholder interviews, you can gather the necessary information to articulate a clear and concise problem statement.

This statement outlines the business objectives and sets the criteria for success. Additionally, formulating hypotheses that can be tested through data analysis is essential at this stage.

For instance, a retail company may want to predict which products will be popular in the next season to optimize their inventory levels.

If you’re looking for a complete guide on how to start your career as a data scientist, we have A Complete Data Scientist Roadmap for Beginners, where you’ll read about the major concepts you should know to become a data scientist.

2. Data Collection

Once the problem is defined, the next step is to gather the relevant data needed to address it. Identifying data sources is crucial; these sources could include internal databases, APIs, web scraping, or external datasets.

The process of data acquisition involves collecting data from these sources and ensuring it is in a format that can be processed. Often, this stage also involves integrating data from different sources to create a unified dataset.

For example, to predict product popularity, you might collect sales data, customer demographics, and social media trends.
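As a rough illustration of integrating two sources into one dataset, here is a minimal pandas sketch. The table contents and column names (`customer_id`, `product`, `units`, `age`) are made up for this example; real sources would be databases, APIs, or files.

```python
import pandas as pd

# Hypothetical sales records, as they might come from an internal database.
sales = pd.DataFrame({
    "customer_id": [1, 2, 2, 3],
    "product": ["scarf", "boots", "scarf", "sandals"],
    "units": [2, 1, 3, 1],
})

# Hypothetical customer demographics, as they might come from a CRM export.
customers = pd.DataFrame({
    "customer_id": [1, 2, 4],
    "age": [34, 27, 45],
})

# A left join keeps every sale, even when no demographic record matches.
# indicator=True adds a "_merge" column showing where each row came from.
dataset = sales.merge(customers, on="customer_id", how="left", indicator=True)

# Count the sales that found no matching customer record.
unmatched = int((dataset["_merge"] == "left_only").sum())
print(dataset)
print("sales without demographics:", unmatched)
```

Checking the unmatched count right after the join is a cheap way to notice when two sources do not line up as expected.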

3. Data Cleaning and Preparation

Data cleaning and preparation is a critical stage where you ensure that the data is accurate, complete, and ready for analysis. This process involves handling missing values, removing duplicates, and correcting any errors in the data.

Transforming data into the required formats or structures is also necessary to facilitate analysis. Feature selection, where you choose relevant variables that will be used in the analysis, is another important aspect of this stage.

For instance, you might handle missing sales records, normalize product names, and convert dates into a standard format.
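The cleaning steps just described could look something like the following pandas sketch. The sample rows are invented, and filling missing unit counts with the median is only one possible choice; the right strategy depends on the data.

```python
import pandas as pd

# Hypothetical raw sales export with messy names, a bad date, and a gap.
raw = pd.DataFrame({
    "product": [" Scarf", "scarf ", "BOOTS", "boots"],
    "sale_date": ["2024-01-05", "2024-01-05", "2024-02-10", "not recorded"],
    "units": [2.0, 2.0, None, 1.0],
})

clean = raw.copy()

# Normalize product names: trim whitespace and lowercase.
clean["product"] = clean["product"].str.strip().str.lower()

# Convert dates to a standard datetime type; unparseable values become NaT.
clean["sale_date"] = pd.to_datetime(
    clean["sale_date"], format="%Y-%m-%d", errors="coerce"
)

# Fill missing unit counts with the median (an assumption for this sketch).
clean["units"] = clean["units"].fillna(clean["units"].median())

# Remove rows that are exact duplicates after normalization.
clean = clean.drop_duplicates().reset_index(drop=True)

print(clean)
```

Note that the two scarf rows only become duplicates after the names are normalized, which is why the order of the steps matters.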

4. Exploratory Data Analysis (EDA)

Exploratory Data Analysis (EDA) is the stage where you delve into the data to uncover patterns, relationships, and initial insights. Conducting descriptive statistics helps in understanding the basic properties of the data, such as mean, median, and standard deviation.

Data visualization techniques, such as charts, graphs, and plots, are invaluable for visualizing data distributions and relationships. Correlation analysis helps in identifying relationships between different variables.

For example, visualizing sales trends over time and analyzing the correlation between customer age and purchasing behavior can provide valuable insights.
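A small sketch of these EDA steps in pandas, using invented figures: it computes descriptive statistics, a monthly sales trend, and the correlation between customer age and purchase amount.

```python
import pandas as pd

# Hypothetical transactions; all values are made up for illustration.
sales = pd.DataFrame({
    "sale_date": pd.to_datetime([
        "2024-01-03", "2024-01-20", "2024-02-11",
        "2024-02-25", "2024-03-08", "2024-03-30",
    ]),
    "customer_age": [22, 35, 41, 29, 53, 47],
    "amount": [20.0, 35.0, 44.0, 30.0, 58.0, 49.0],
})

# Descriptive statistics for the purchase amount.
summary = sales["amount"].agg(["mean", "median", "std"])

# Sales trend over time: total amount per calendar month.
monthly = sales.groupby(sales["sale_date"].dt.to_period("M"))["amount"].sum()

# Pearson correlation between customer age and purchase amount.
age_corr = sales["customer_age"].corr(sales["amount"])

print(summary)
print(monthly)
print("age vs amount correlation:", round(age_corr, 3))
```

The `monthly` series can be handed to any plotting library to draw the trend line; the numbers alone are often enough for a first look.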

5. Feature Engineering

Feature engineering involves creating and selecting the most relevant features for modeling. This process includes generating new features from existing data, such as creating a “season” variable from dates.

Transforming features through scaling, encoding categorical variables, and normalization is also necessary. Selecting the best features using techniques like variance thresholding, correlation analysis, or feature importance from models ensures that the most informative variables are used.

For instance, you might create features like “days since last purchase” and one-hot encode product categories.
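The three features mentioned above can be sketched in pandas as follows. The reference date `as_of`, the month-to-season mapping (Northern Hemisphere), and the sample rows are assumptions made for this example.

```python
import pandas as pd

# Hypothetical purchase history.
purchases = pd.DataFrame({
    "customer_id": [1, 1, 2],
    "purchase_date": pd.to_datetime(["2024-01-15", "2024-07-01", "2024-10-20"]),
    "category": ["outerwear", "swimwear", "outerwear"],
})

# Assumed reference date used to measure recency.
as_of = pd.Timestamp("2024-12-01")

# Assumed mapping from month number to Northern Hemisphere season.
month_to_season = {
    12: "winter", 1: "winter", 2: "winter",
    3: "spring", 4: "spring", 5: "spring",
    6: "summer", 7: "summer", 8: "summer",
    9: "autumn", 10: "autumn", 11: "autumn",
}

features = purchases.copy()

# New feature derived from the date: the season of each purchase.
features["season"] = features["purchase_date"].dt.month.map(month_to_season)

# Recency feature: days between each customer's latest purchase and as_of.
last_purchase = features.groupby("customer_id")["purchase_date"].transform("max")
features["days_since_last_purchase"] = (as_of - last_purchase).dt.days

# One-hot encode the product category into 0/1 indicator columns.
features = pd.get_dummies(features, columns=["category"], dtype=int)

print(features)
```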

6. Modeling

In the modeling stage, you build predictive or descriptive models using statistical and machine-learning techniques. Selecting appropriate algorithms, such as regression, classification, or clustering, is the first step.

Training the models on the training dataset involves applying these algorithms to learn from the data. Hyperparameter tuning, where you optimize model parameters to improve performance, is also crucial.

For example, you might train a random forest model to predict product demand based on historical sales data.
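To make the training and tuning steps concrete, here is a minimal scikit-learn sketch. It uses synthetic data in place of real sales history, and the features (price, week, promotion flag), the demand formula, and the small parameter grid are all assumptions chosen to keep the example short.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in for historical sales data (not real figures).
rng = np.random.default_rng(0)
n = 200
price = rng.uniform(5, 50, n)
week = rng.integers(1, 53, n)
promo = rng.integers(0, 2, n)
X = np.column_stack([price, week, promo])
# Assumed relationship: demand falls with price, rises with promotions.
y = 100 - 1.5 * price + 20 * promo + rng.normal(0, 5, n)

# Hold out a quarter of the rows for a final check after tuning.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Hyperparameter tuning: try a small grid with 3-fold cross-validation.
search = GridSearchCV(
    RandomForestRegressor(n_estimators=50, random_state=0),
    param_grid={"max_depth": [3, None], "min_samples_leaf": [1, 5]},
    cv=3,
    scoring="neg_root_mean_squared_error",
)
search.fit(X_train, y_train)

model = search.best_estimator_
print("best parameters:", search.best_params_)
print("R^2 on held-out data:", round(model.score(X_test, y_test), 3))
```

Tuning only on the training split keeps the held-out rows untouched, so the final score is a fairer estimate of how the model behaves on new data.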

7. Model Evaluation

Model evaluation is the stage where you assess the performance of your models to select the best one. This involves using performance metrics such as accuracy, precision, recall, F1 score, RMSE, or AUC-ROC.

Validation techniques like cross-validation and train-test split help ensure the robustness of the model. Analyzing model errors to understand their sources and implications is also essential.

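For instance, you might evaluate the random forest demand model with cross-validation and compare candidates by RMSE, since demand is a numeric target. If the problem were framed as classification instead (for example, "popular" versus "not popular"), accuracy and F1 score would be the appropriate metrics.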
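The sketch below shows one way cross-validated evaluation might look in scikit-learn. Because the demand target here is numeric, it reports RMSE; for a classification target, `scoring="accuracy"` or `scoring="f1"` could be passed instead. The synthetic data and the mean-predicting baseline are assumptions added for illustration.

```python
import numpy as np
from sklearn.dummy import DummyRegressor
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import cross_val_score

# Synthetic stand-in for historical sales data (not real figures).
rng = np.random.default_rng(1)
n = 200
price = rng.uniform(5, 50, n)
promo = rng.integers(0, 2, n)
X = np.column_stack([price, promo])
y = 100 - 1.5 * price + 20 * promo + rng.normal(0, 5, n)


def cv_rmse(estimator):
    """Return the mean RMSE across 5 cross-validation folds."""
    scores = cross_val_score(
        estimator, X, y, cv=5, scoring="neg_root_mean_squared_error"
    )
    # scikit-learn negates error metrics so that higher is always better.
    return float(-scores.mean())


forest_rmse = cv_rmse(RandomForestRegressor(n_estimators=50, random_state=0))
# Baseline that always predicts the mean of the training fold.
baseline_rmse = cv_rmse(DummyRegressor(strategy="mean"))

print("random forest RMSE:", round(forest_rmse, 2))
print("baseline RMSE:", round(baseline_rmse, 2))
```

Comparing against a trivial baseline gives the error figure some context: a model is only useful if it clearly beats the simplest possible guess.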

8. Deployment

Deployment involves implementing the model in a production environment where it can generate predictions on new data, either in real time or in scheduled batches. This stage includes exporting the trained model in a format that can be deployed, such as PMML or ONNX.

Developing APIs to integrate the model with existing systems is necessary for seamless operation. Integration testing ensures that the model works correctly within the production environment.

For example, deploying the demand prediction model as an API allows the inventory management system to call it and update stock levels accordingly.
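As a simplified picture of the API layer, the sketch below shows the request-handling logic such an endpoint might contain, using only the Python standard library. `StubDemandModel`, `handle_predict`, and the JSON field names are hypothetical; a real service would load a trained model and sit behind a web framework.

```python
import json
from typing import Tuple


class StubDemandModel:
    """Stand-in for a trained model; a real service would load one from disk."""

    def predict(self, rows):
        # Hypothetical rule: demand falls with price and rises under promotion.
        return [max(0.0, 100 - 1.5 * price + 20 * promo) for price, promo in rows]


MODEL = StubDemandModel()


def handle_predict(request_body: str) -> Tuple[int, str]:
    """Validate a JSON request and return (HTTP status, JSON response body)."""
    try:
        payload = json.loads(request_body)
        row = (float(payload["price"]), int(payload["promo"]))
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, missing fields, or wrong types all map to a 400.
        return 400, json.dumps({"error": "expected JSON with 'price' and 'promo'"})
    forecast = MODEL.predict([row])[0]
    return 200, json.dumps({"forecast_units": round(forecast, 1)})


# Example call, as the inventory system might send it.
status, body = handle_predict('{"price": 20, "promo": 1}')
print(status, body)
```

Keeping validation and prediction in a plain function makes the logic easy to cover in integration tests before it is wired to a network endpoint.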

9. Monitoring and Maintenance

The final stage of the data science life cycle is monitoring and maintenance. Continuously tracking the model’s performance over time using predefined metrics helps ensure its ongoing effectiveness. Periodically retraining the model with new data is necessary to maintain accuracy.

Setting up alert systems for significant drops in performance or other anomalies ensures timely intervention.

For example, monitoring the demand prediction model’s accuracy and retraining it monthly with new sales data helps keep it accurate and reliable.
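One simple way to track performance and raise alerts is a rolling error window, sketched below in plain Python. The `RmseMonitor` class, the window size, the threshold, and the sample predictions are all illustrative assumptions, not a prescribed design.

```python
from collections import deque
from math import sqrt


class RmseMonitor:
    """Track RMSE over the most recent predictions and flag degradation."""

    def __init__(self, window: int, alert_threshold: float):
        # deque with maxlen drops the oldest error once the window is full.
        self.squared_errors = deque(maxlen=window)
        self.alert_threshold = alert_threshold

    def record(self, predicted: float, actual: float) -> None:
        self.squared_errors.append((predicted - actual) ** 2)

    def rmse(self) -> float:
        return sqrt(sum(self.squared_errors) / len(self.squared_errors))

    def needs_attention(self) -> bool:
        # Only alert once a full window of observations is available.
        window_full = len(self.squared_errors) == self.squared_errors.maxlen
        return window_full and self.rmse() > self.alert_threshold


monitor = RmseMonitor(window=3, alert_threshold=10.0)

# Hypothetical (predicted, actual) demand pairs; the later ones drift apart.
stream = [(50, 52), (48, 47), (55, 53), (60, 40), (58, 35), (62, 30)]

alerts = []
for predicted, actual in stream:
    monitor.record(predicted, actual)
    alerts.append(monitor.needs_attention())

print(alerts)
```

In this sketch an alert would be the trigger to investigate and, if needed, retrain the model on more recent data.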

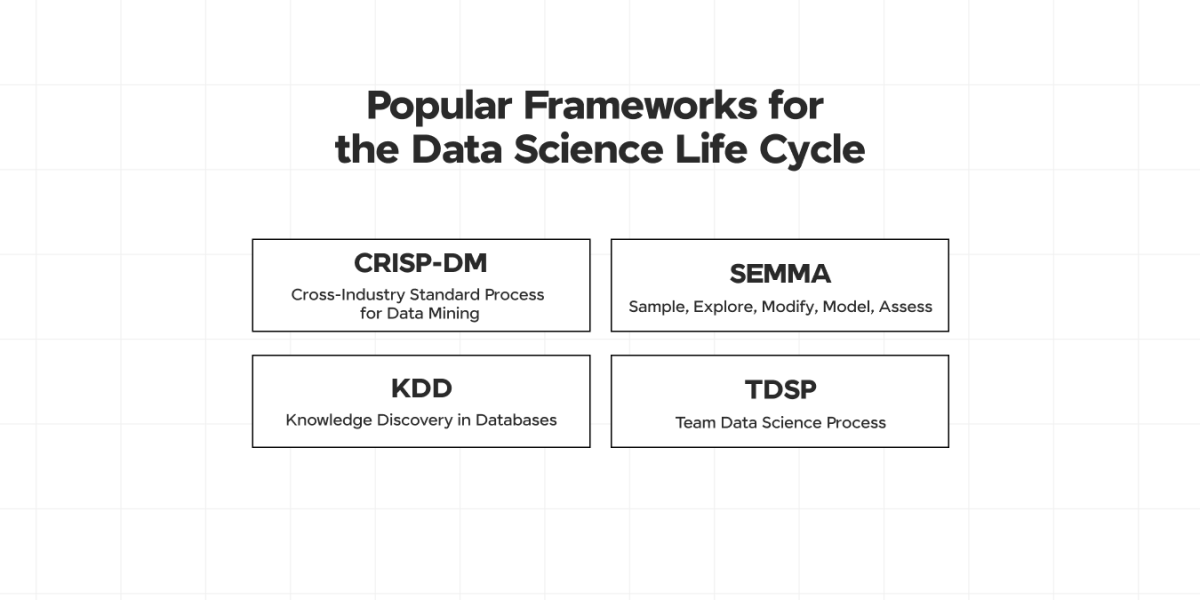

Popular Frameworks for the Data Science Life Cycle

Several data science frameworks provide structured approaches to managing data science projects. Some popular ones include:

- CRISP-DM (Cross-Industry Standard Process for Data Mining): A widely used framework that includes six phases: Business Understanding, Data Understanding, Data Preparation, Modeling, Evaluation, and Deployment.

- SEMMA (Sample, Explore, Modify, Model, Assess): Developed by SAS Institute, this framework organizes the analytical work into the five sequential steps that give it its name.

- KDD (Knowledge Discovery in Databases): Emphasizes data preparation, data mining, and the interpretation and evaluation of results.

- TDSP (Team Data Science Process): Created by Microsoft, this framework is designed to support collaborative data science efforts in teams.

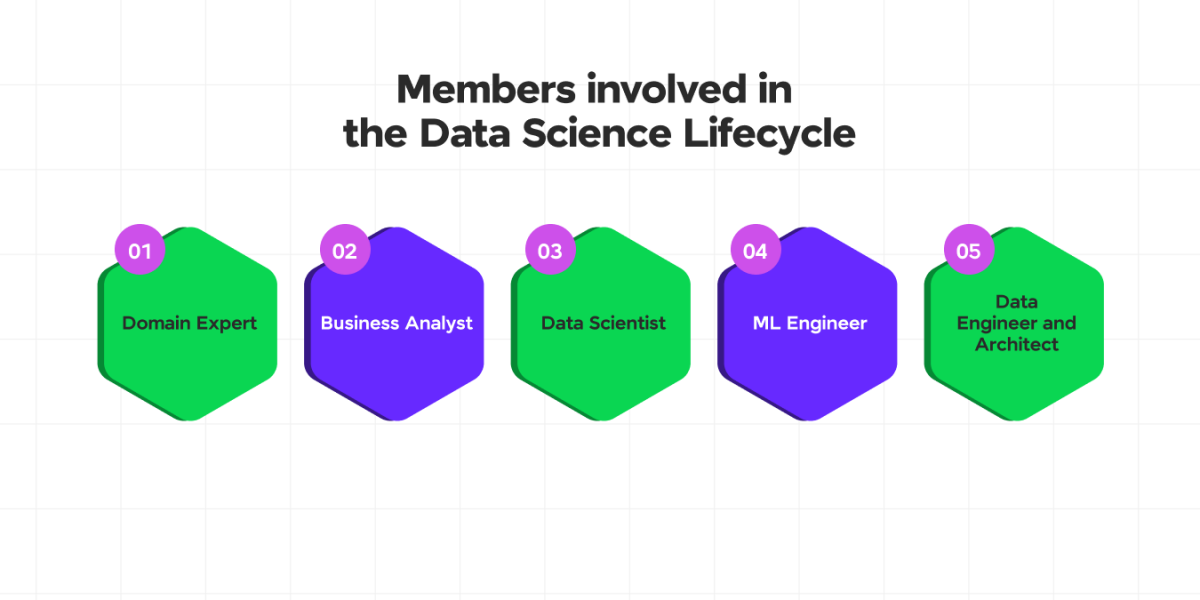

Members involved in the Data Science Lifecycle

Data science projects typically involve a variety of roles, each contributing unique expertise:

- Data Scientists: They are responsible for data analysis, modeling, and deriving actionable insights.

- Data Engineers: They handle the data pipeline, ensuring data is collected, stored, and made accessible for analysis.

- Business Analysts: They bridge the gap between technical teams and business stakeholders, translating business needs into technical requirements.

- Domain Experts: They help in providing subject matter expertise to ensure the data science solutions are relevant and accurate for the specific field.

- Project Managers: They oversee the project’s progress, manage timelines, and coordinate between different team members.


Conclusion

This guide walked through the steps of the data science life cycle, which take a project from problem definition to solution deployment and monitoring. It also covered the popular frameworks used to structure that process and the team members needed to complete a project efficiently.

FAQs

Q1. What is the data science life cycle process?

The data science life cycle is the series of steps a data scientist, or another related professional, takes to solve a problem for an organization using large amounts of data and a range of tools.

Q2. What are the 7 steps of the data science cycle?

One common seven-stage breakdown, which condenses the nine steps described in this article, is:

Stage 1: Understanding the Business Problem.

Stage 2: Data Collection.

Stage 3: Data Cleaning.

Stage 4: Exploratory Data Analysis (EDA).

Stage 5: Model Building and Evaluation.

Stage 6: Communicating Results.

Stage 7: Deployment & Maintenance.

Q3. What are the 5 phases of the data cycle?

The data life cycle comprises five phases: creation, storage, usage, archiving, and destruction.
