What is Generative AI? Everything You Need to Know

Last updated: Mar 17, 2025

Artificial Intelligence (AI) has been rapidly evolving over the past few decades, revolutionizing industries and transforming the way we live and work. Among the most exciting and potentially transformative developments in AI is the emergence of generative AI.

This powerful technology has captured the imagination of researchers, businesses, and the general public alike, promising to enhance creativity, problem-solving, and innovation.

But what exactly is generative AI? How does it work, and what are its potential applications? In this comprehensive guide, we’ll explore the world of generative AI, breaking down its core concepts, examining its various forms, and discussing both its promising future and the challenges it faces.

Table of contents

- What is Generative AI?

- How Generative AI Works

- Types of Generative AI Models

  - Generative Adversarial Networks (GANs)

  - Variational Autoencoders (VAEs)

  - Transformer-Based Models

  - Diffusion Models

  - Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) Networks

  - Flow-Based Models

  - Hybrid Models

- Top Generative AI Tools

  - OpenAI's GPT-4

  - DALL-E 2

  - Stable Diffusion

  - Midjourney

- Applications of Generative AI

- Benefits and Opportunities

- Challenges and Concerns

- The Future of Generative AI

- Conclusion

- FAQs

  - What is Generative AI?

  - How does Generative AI work?

  - What are the applications of Generative AI?

What is Generative AI?

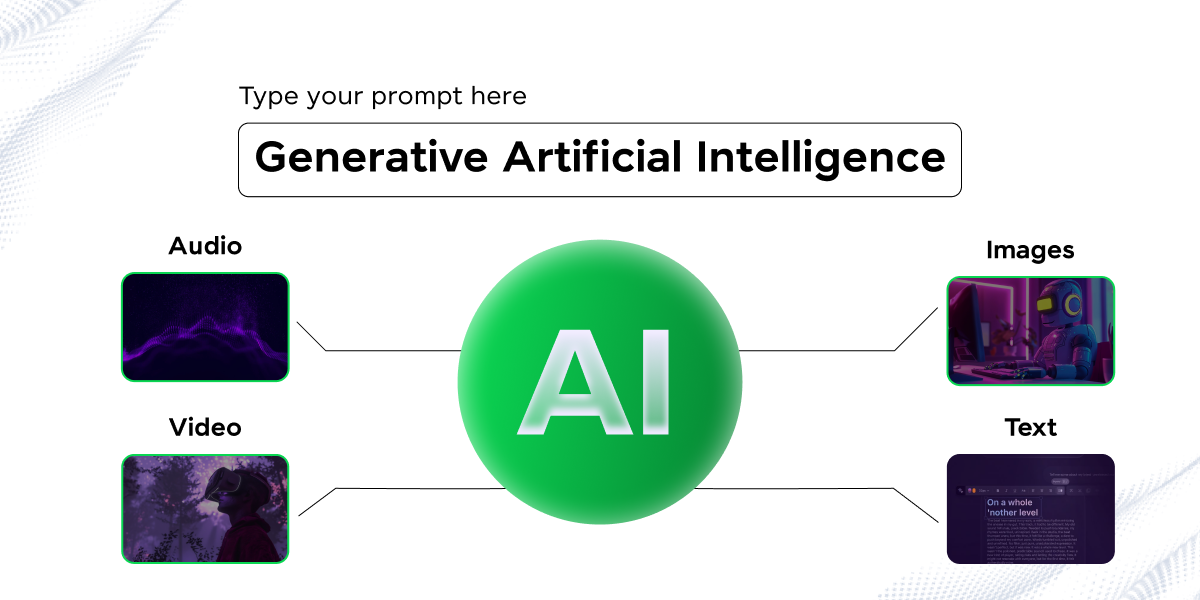

Generative AI refers to a class of artificial intelligence systems that can create new content, such as text, images, music, or even code, based on patterns and information they’ve learned from existing data. Unlike traditional AI systems that are designed to analyze or categorize existing information, generative AI has the ability to produce original outputs that didn’t previously exist.

At its core, generative AI is about creation and innovation. These systems use complex algorithms and neural networks to understand the patterns and structures within their training data and then use that understanding to generate new content that is similar in style or structure, but unique in its composition.

The field of generative AI has seen rapid advancements in recent years, driven by an increase in computational power, improvements in algorithm design, and the availability of large datasets. These developments have led to the creation of increasingly sophisticated generative models capable of producing high-quality, diverse, and often startlingly realistic outputs across a wide range of domains.

How Generative AI Works

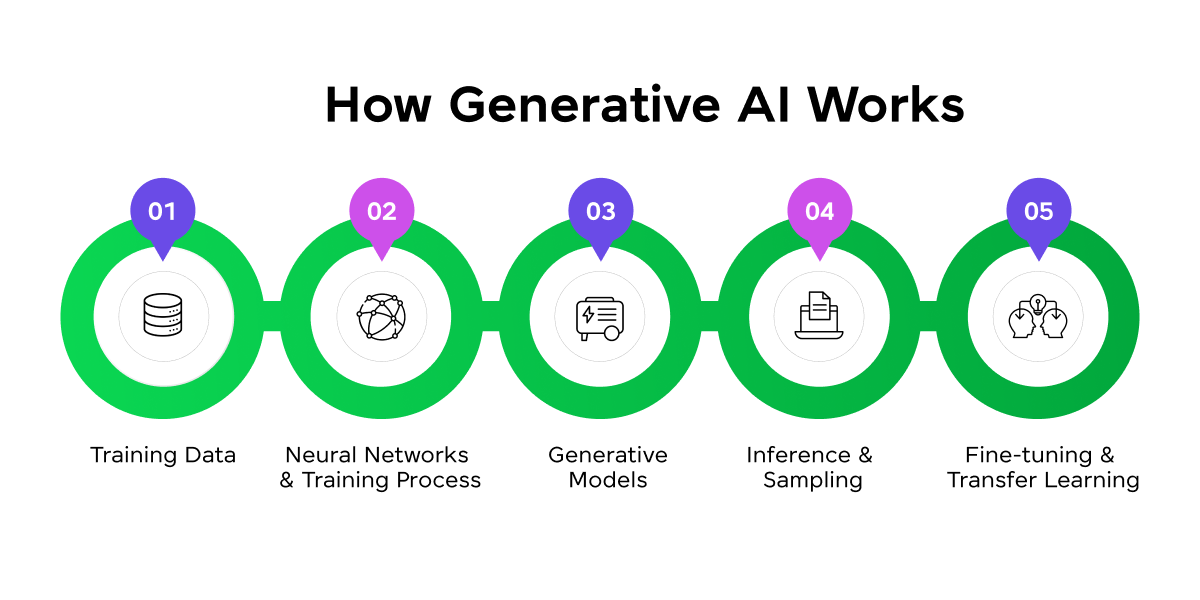

To understand how generative AI works, the process can be broken down into several key steps:

- Training Data

The first step involves gathering and preparing a vast dataset, which serves as the foundation for the generative AI model. This data is crucial as it helps the model learn patterns, structures, and relationships. The quality and diversity of the training data significantly influence the model’s performance and the range of outputs it can generate.

- Neural Networks and Training Process

The model is built using artificial neural networks, particularly deep learning architectures. These networks consist of layers of interconnected nodes (neurons), loosely inspired by how neurons in the brain connect.

During training, the model is exposed to the data and makes predictions. A process called backpropagation computes how much each connection weight contributed to the error, and an optimizer such as gradient descent then adjusts the weights to minimize the difference between the model’s predictions and the actual data.
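As a rough illustration of the weight-adjustment idea, the sketch below fits a two-parameter linear model with plain gradient descent on mean squared error. The function name `train_linear`, the toy data, and the learning rate are assumptions chosen for this example; real generative models apply the same kind of update across many layers, with gradients computed by backpropagation.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: predictions with the current parameters.
        preds = [w * x + b for x in xs]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Update step: move each parameter against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (an illustrative assumption).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = train_linear(xs, ys)
print(w, b)  # both values should end up close to the true slope and intercept
```

The loop repeats the same three steps a neural network uses: predict, measure the error gradient, and nudge the weights.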

- Generative Models

Once trained, the model can generate new content. There are several types of generative models: Generative Adversarial Networks (GANs), which use a generator and a discriminator in a competitive setup to produce realistic outputs; Variational Autoencoders (VAEs), which encode and decode data to create new, similar data; and Transformer-based models like GPT, which excel at processing sequential data for text generation.

- Inference and Sampling

In the inference stage, the model uses the patterns it has learned to generate new content. This typically involves providing a prompt to the model, which then generates new data.

The generation process often includes probabilistic sampling, where a “temperature” parameter controls the diversity of the outputs: lower values make the output more predictable, while higher values make it more varied.
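A minimal sketch of temperature-based sampling, assuming the model has already produced a raw score (logit) for each candidate token. The function names and the example scores are hypothetical and not tied to any particular library.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores into probabilities, scaled by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature=1.0, rng=random):
    """Draw one index according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
print(softmax_with_temperature(logits, temperature=0.5))  # sharper distribution
print(softmax_with_temperature(logits, temperature=2.0))  # flatter distribution
print(sample_token(logits, temperature=1.0, rng=random.Random(0)))
```

Dividing the scores by a small temperature concentrates probability on the top-scoring token, while a large temperature spreads it more evenly across the candidates.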

- Fine-tuning and Transfer Learning

Finally, many generative AI systems are first trained on large, general datasets and then fine-tuned on smaller datasets tailored to particular applications. This is a form of transfer learning: knowledge gained during general training carries over, so the model can adapt efficiently to specialized tasks or domains.

These steps collectively form the backbone of most generative AI systems, enabling them to create new and innovative content based on the data they have been trained on.

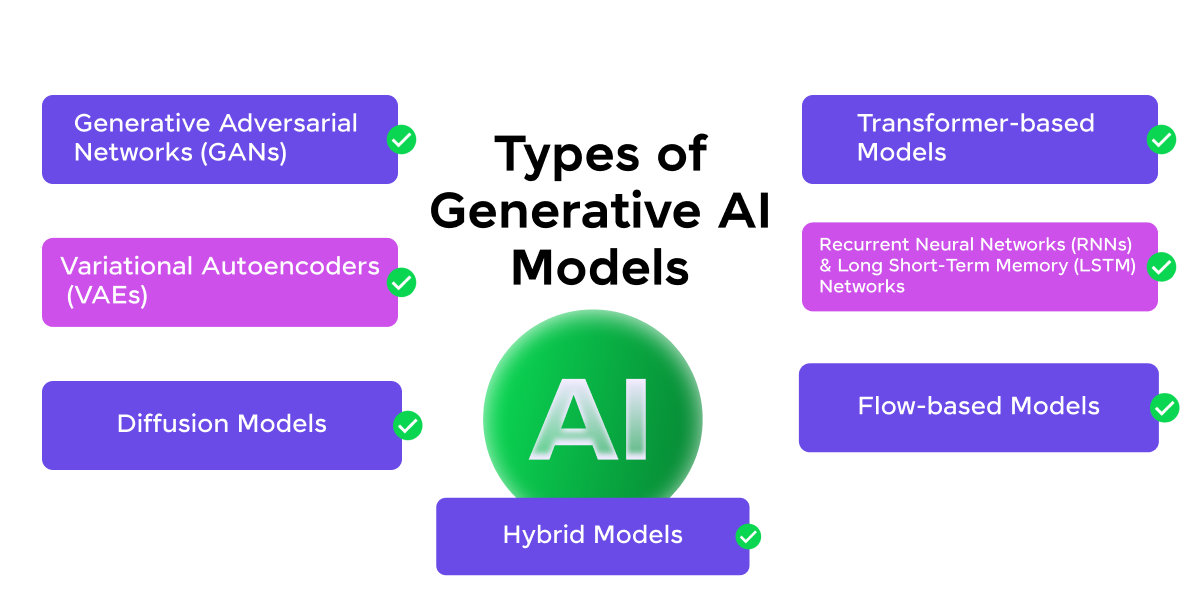

Types of Generative AI Models

Generative AI encompasses a variety of model architectures, each with its own strengths and typical applications. Here are some of the most prominent types:

1. Generative Adversarial Networks (GANs)

Introduced by Ian Goodfellow and colleagues in 2014, GANs consist of two neural networks: a generator that creates new data instances and a discriminator that evaluates their authenticity. The two networks are trained together, with the generator striving to fool the discriminator and the discriminator striving to tell real data from generated data. This competition pushes the generator toward highly realistic outputs.

Key Features:

- Adversarial training between two networks

- Ability to generate highly realistic data

- The generator improves as it learns to outsmart the discriminator, although training can be unstable

Applications:

- Image generation

- Image-to-image translation

- Super-resolution imaging

- Generating synthetic data for AI model training
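The adversarial setup can be sketched through its two loss functions. The example below assumes the discriminator outputs a probability that a sample is real; the function names and the sample probabilities are hypothetical, and the generator loss shown is the commonly used non-saturating variant rather than the only possible formulation.

```python
import math


def bce(prediction, target, eps=1e-12):
    """Binary cross-entropy for one probability and a 0/1 target."""
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_real, d_fake):
    """The discriminator wants to output 1 on real data and 0 on fakes."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """The generator wants the discriminator to output 1 on its fakes."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs on real and generated samples.
d_real = [0.9, 0.8]
d_fake = [0.2, 0.1]
print(discriminator_loss(d_real, d_fake))  # low: the discriminator is doing well
print(generator_loss(d_fake))              # high: the generator is being caught
```

The two losses pull in opposite directions on the same discriminator outputs for generated samples, which is what makes the training adversarial.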

2. Variational Autoencoders (VAEs)

VAEs are a type of autoencoder that compresses input data into a latent space and decodes it back to generate new data. Unlike regular autoencoders, VAEs learn a probabilistic mapping, allowing for the generation of new samples that resemble the training data.

Key Features:

- Learn probabilistic mappings between data and latent space

- Generate new samples by sampling from the latent space

- More stable training than GANs, though generated images are often blurrier

Applications:

- Image generation

- Data compression

- Anomaly detection
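Two pieces commonly found in VAE implementations are sampling from the latent distribution and a penalty that keeps that distribution close to a standard normal. The sketch below assumes the encoder has already produced a mean and a log-variance per latent dimension; the function names are illustrative.

```python
import math
import random


def reparameterize(mu, log_var, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, diag(sigma^2)) and N(0, I)."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


# Hypothetical encoder outputs for a two-dimensional latent space.
mu = [0.5, -1.0]
log_var = [0.0, -0.5]
z = reparameterize(mu, log_var, rng=random.Random(0))
print(z)
print(kl_to_standard_normal(mu, log_var))
```

Writing the sample as `mu + sigma * eps` keeps the randomness in `eps`, so gradients can still flow through `mu` and `log_var` during training.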

3. Transformer-Based Models

Transformers, introduced in 2017, revolutionized natural language processing (NLP) by using self-attention mechanisms to process sequential data. These models handle long-range dependencies effectively and scale well, making them the backbone of state-of-the-art language models.

Key Features:

- Self-attention mechanisms for processing sequential data

- Handle long-range dependencies better than RNNs

- Scalable and capable of parallel processing

Applications:

- Text generation

- Language translation

- Summarization

- Question-answering

- Code generation
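The self-attention mechanism can be sketched as scaled dot-product attention. This simplified version omits the learned query, key, and value projections and the multiple heads that real Transformers use, and the function names are assumptions for illustration.

```python
import math


def softmax(row):
    """Turn a list of scores into weights that sum to 1."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d)) V for lists of vectors."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted sum of the value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, values))
                        for i in range(len(values[0]))])
    return outputs, weights


# Self-attention: the same toy sequence serves as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(x, x, x)
print(weights)
print(outputs)
```

Every position attends to every other position in a single step, which is why long-range dependencies are easier to capture than in a model that processes tokens one at a time.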

4. Diffusion Models

Diffusion models are generative models that iteratively transform noise into structured outputs, such as images, by reversing a process of gradually adding noise. They are known for their ability to produce high-quality, realistic images.

Key Features:

- Reverse a gradual noising process

- Operate on a Markov chain of steps

- Use score-based modeling to estimate data distribution gradients

Applications:

- Image generation

- Image editing

- Audio generation
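The gradual noising process that diffusion models learn to reverse can be sketched as follows. The linear schedule and its default values are a commonly used choice assumed here for illustration, and the function names are hypothetical; the learned denoising network, which is the hard part, is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variance added at each step, increasing linearly (needs num_steps >= 2)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t: the product of (1 - beta_s) for all steps s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng=random):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n
          for x, n in zip(x0, noise)]
    return xt, noise


alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
x0 = [1.0, -2.0, 0.5]  # stand-in for image pixel values
x_early, _ = add_noise(x0, 10, alpha_bars, rng=random.Random(0))
x_late, _ = add_noise(x0, 999, alpha_bars, rng=random.Random(0))
print(x_early)  # still close to x0
print(x_late)   # almost pure noise
```

A trained diffusion model is typically asked to predict the `noise` term from `xt` and `t`, which lets it undo the corruption one step at a time.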

5. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) Networks

RNNs are neural networks designed to handle sequential data, like time series and text. LSTMs are a type of RNN that addresses the limitations of basic RNNs, particularly the vanishing gradient problem, by introducing specialized gates that manage memory over long sequences.

Key Features:

- Process sequential data with an internal state

- LSTMs use gates (input, forget, output) to manage long-term dependencies

- Suitable for tasks where the order of information is crucial

Applications:

- Text generation

- Music generation

- Predictive typing

- Time series forecasting
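The role of the three gates can be sketched with a single LSTM time step. To keep it readable, this version uses scalar inputs and states, and the `params` layout is an assumption made for the example; practical implementations use weight matrices and vectors.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step with scalar input, hidden state, and cell state.

    params maps each of "i", "f", "o", "g" to a (w_x, w_h, bias) tuple.
    """
    def pre_activation(name):
        w_x, w_h, bias = params[name]
        return w_x * x + w_h * h_prev + bias

    i = sigmoid(pre_activation("i"))    # input gate: how much new info to write
    f = sigmoid(pre_activation("f"))    # forget gate: how much old memory to keep
    o = sigmoid(pre_activation("o"))    # output gate: how much memory to expose
    g = math.tanh(pre_activation("g"))  # candidate value to write
    c = f * c_prev + i * g              # updated cell state (long-term memory)
    h = o * math.tanh(c)                # updated hidden state (output)
    return h, c


# Hypothetical, untrained parameters used only to run the step.
params = {"i": (0.5, 0.1, 0.0), "f": (0.3, 0.2, 1.0),
          "o": (0.4, 0.1, 0.0), "g": (0.8, 0.3, 0.0)}
h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.5]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

Because the cell state is updated additively (`f * c_prev + i * g`), information can persist across many steps when the forget gate stays close to 1.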

6. Flow-Based Models

Flow-based models learn invertible transformations between simple distributions and complex data distributions. These models allow for exact likelihood computation, making them useful for both generation and density estimation.

Key Features:

- Invertible transformations between distributions

- Exact likelihood computation

- Dual capabilities for generation and density estimation

Applications:

- Image generation

- Audio synthesis

- Anomaly detection
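Exact likelihood computation follows from the change-of-variables rule. The sketch below uses the simplest possible invertible transformation, a one-dimensional affine map with a standard normal base distribution; the function names and parameter values are illustrative, and real flow-based models stack many learned invertible layers.

```python
import math


def forward(z, scale, shift):
    """Map a base sample z to data space: x = scale * z + shift."""
    return scale * z + shift


def inverse(x, scale, shift):
    """Map a data point back to the base space."""
    return (x - shift) / scale


def log_likelihood(x, scale, shift):
    """Exact log p(x) via change of variables with a standard normal base."""
    z = inverse(x, scale, shift)
    log_base = -0.5 * (z * z + math.log(2.0 * math.pi))
    # The Jacobian of the inverse map is 1 / scale, so add log|1 / scale|.
    return log_base - math.log(abs(scale))


scale, shift = 2.0, 3.0  # hypothetical "learned" parameters
x = forward(0.5, scale, shift)
print(x, inverse(x, scale, shift))
print(log_likelihood(x, scale, shift))
```

The same transformation serves both purposes: run it forward on random base samples to generate data, or run it backward on real data to score how likely that data is.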

7. Hybrid Models

Hybrid models combine the capabilities of multiple generative AI architectures, such as GANs, VAEs, and Transformers, to create more robust and flexible models that can handle complex tasks more effectively.

Key Features:

- Enhanced generation quality by combining the strengths of different models

- Improved training stability

- Flexibility to tailor architecture to specific tasks

Applications:

- Image generation and editing

- Text-to-image and image-to-text tasks

- Video generation and analysis

- Speech and audio processing

Many cutting-edge generative AI systems combine multiple model types or architectural innovations. For example, some models combine the strengths of GANs and VAEs, while others might integrate Transformer-like attention mechanisms into other architectures.

Top Generative AI Tools

Here’s a brief overview of some top Generative AI (GenAI) tools, highlighting their key features and capabilities:

1. OpenAI’s GPT-4

GPT-4 is a large language model in OpenAI’s Generative Pre-trained Transformer series. It is capable of understanding and generating human-like text and is designed for a wide range of applications, from content creation to coding assistance.

Features

- Natural Language Understanding: GPT-4 excels at comprehending and generating contextually relevant text across various topics.

- Versatile Applications: It can be used for writing essays, summarizing content, answering questions, and even generating code.

- Customizability: Users can fine-tune GPT-4 for specific tasks, making it adaptable to different industries.

2. DALL-E 2

Also developed by OpenAI, DALL-E 2 is an advanced image generation tool that creates images from textual descriptions. It leverages deep learning to produce high-quality, original images based on the input text.

Features

- Text-to-Image Generation: DALL-E 2 can generate detailed and contextually accurate images from simple text prompts.

- Creative Flexibility: The tool allows users to create imaginative and complex visuals that might not exist in the real world.

- Editing Capabilities: Users can refine or alter images by providing new instructions, enabling iterative creativity.

3. Stable Diffusion

Stable Diffusion is an open-source AI model designed for generating high-quality images from text descriptions. It’s particularly noted for its efficiency and ability to run on consumer-grade hardware.

Features

- High-Quality Image Generation: The tool produces visually stunning images with attention to detail.

- Customizability: Users can fine-tune the model on specific datasets or adjust parameters like “sampling steps” to influence the creative output.

- Accessibility: Unlike many high-performance models, Stable Diffusion is optimized to run on less powerful devices, making it more accessible to a broader audience.

4. Midjourney

Midjourney is an AI-powered image generation tool designed to create artistic and visually appealing images based on text prompts. It’s widely used by designers, artists, and creators for generating concept art and illustrations.

Features

- Artistic Focus: Midjourney emphasizes creating images with an artistic and imaginative touch, often producing stylized or surreal visuals.

- Ease of Use: The tool is user-friendly, with a simple interface that allows users to quickly generate images by inputting descriptive text.

- Community and Collaboration: Midjourney has a strong community of users who share and collaborate on creative projects, making it a popular choice for collaborative artistic endeavors.

These tools are leading the charge in Generative AI, each offering unique capabilities that cater to various creative and professional needs.

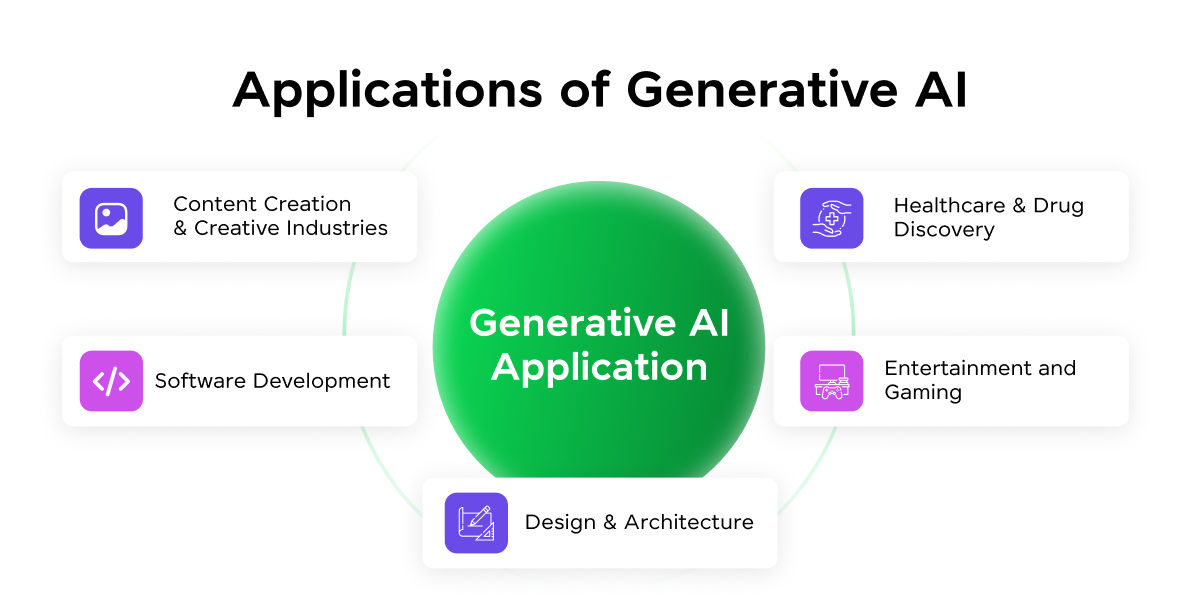

Applications of Generative AI

Generative AI is transforming industries with its wide range of applications. Here are five major areas where it’s making a significant impact:

- Content Creation and Creative Industries

  - Text Generation: AI can write articles, stories, poetry, and screenplays, revolutionizing content creation.

  - Image and Video Creation: Tools like DALL-E and Midjourney generate stunning images from text, while emerging technologies enable video generation from simple prompts.

  - Music Composition: AI can create original compositions in various styles, offering new possibilities in music production.

- Design and Architecture

  - Generative Design: AI generates multiple design options based on parameters, aiding in architecture and product design.

  - 3D Modeling: AI assists in creating intricate 3D models for use in architecture, gaming, and product design.

- Healthcare and Drug Discovery

  - Drug Design: AI accelerates the discovery of new drugs by generating and evaluating potential compounds.

  - Medical Imaging: Generative models create synthetic medical images for training, or improve diagnostic accuracy by augmenting existing images.

- Entertainment and Gaming

  - Procedural Content Generation: AI dynamically creates game levels, quests, and narratives, enhancing player experience.

  - Character and Environment Design: AI generates diverse and unique game assets, streamlining the creative process.

- Software Development

  - Code Generation: AI assists developers by generating code snippets or complete functions from natural language descriptions.

  - Automated Testing: AI generates test cases and scenarios, improving the efficiency and accuracy of software testing.

These application areas showcase the transformative potential of generative AI across various sectors, driving innovation and enhancing productivity.

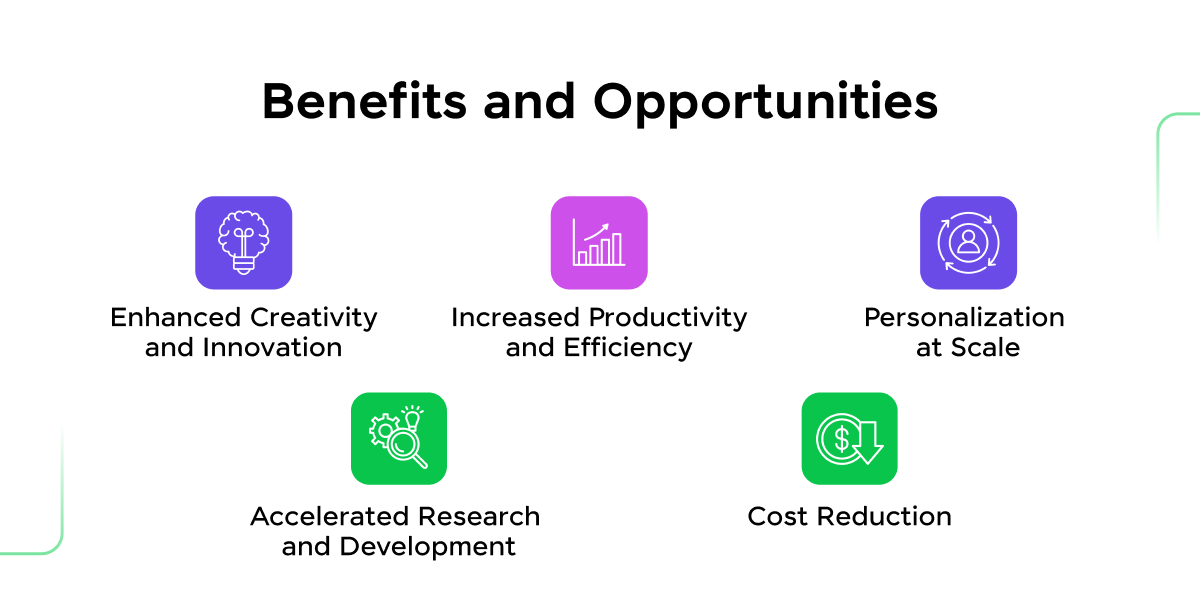

Benefits and Opportunities

Generative AI is unlocking significant advantages across various sectors, with some of the most important benefits being:

- Enhanced Creativity and Innovation

Generative AI acts as a catalyst for creativity, enabling the generation of novel ideas, designs, and solutions that might not have been conceived by humans alone. This can lead to innovations in areas like art, design, and scientific research.

- Increased Productivity and Efficiency

By automating routine creative and analytical tasks, generative AI enhances productivity. For example, in content creation, AI can generate initial drafts, allowing human creators to focus on refining and making high-level creative decisions.

- Personalization at Scale

Generative AI allows for the creation of highly personalized content and experiences on a massive scale, which is transformative for industries like marketing, education, and entertainment where tailored experiences are key.

- Accelerated Research and Development

In sectors such as drug discovery and materials science, generative AI accelerates R&D by proposing and evaluating new compounds or materials, potentially speeding up breakthroughs and reducing costs.

- Cost Reduction

Through the automation of tasks and processes, generative AI can significantly reduce costs in industries ranging from content production to product design and testing, offering substantial financial benefits.

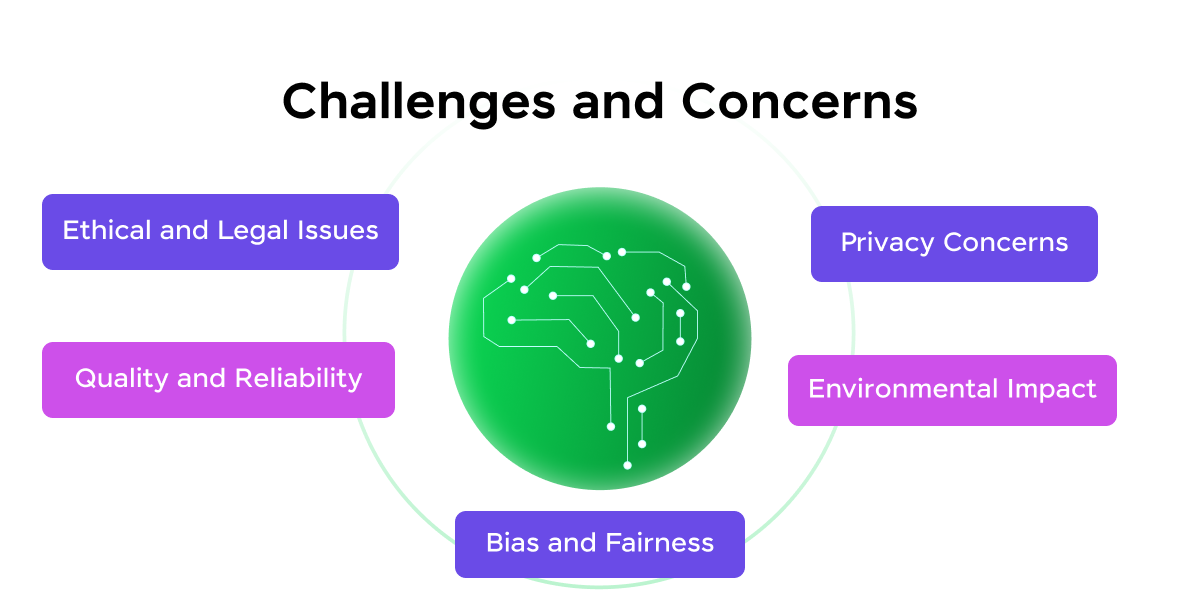

Challenges and Concerns

Generative AI, while promising, faces several significant challenges and ethical concerns:

- Ethical and Legal Issues: The use of existing works to train AI models raises questions about copyright infringement and fair use, challenging existing legal frameworks.

- Quality and Reliability: Generative AI models can produce information that sounds plausible but is incorrect or nonsensical, impacting the reliability of the generated content.

- Bias and Fairness: If the data used to train generative models is biased, the resulting outputs can perpetuate or amplify these biases, leading to fairness issues in generated content.

- Privacy Concerns: The extensive data required to train generative models raises concerns about data privacy, consent, and potential misuse, such as targeted phishing or impersonation.

- Environmental Impact: Training large generative models demands significant computational power, contributing to high energy consumption and raising environmental concerns.

The Future of Generative AI

The future of Generative AI (GenAI) is poised to be transformative, shaping both technological and non-technological domains in profound ways. In the tech world, GenAI is driving advancements in software development, creative industries, and data analysis.

For instance, the global Generative AI market is expected to grow from $20.9 billion in 2024 to $136.7 billion by 2030, reflecting a compound annual growth rate (CAGR) of 36.7%.
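The growth rate can be sanity-checked with the standard compound annual growth rate formula, using only the figures quoted above. The helper name `cagr` is an assumption for this example.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market size in billions of dollars, as quoted in the text.
rate = cagr(20.9, 136.7, 2030 - 2024)
print(f"{rate:.2%}")
```

The result lands within about a tenth of a percentage point of the quoted 36.7%, so the three figures are consistent with one another up to rounding.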

This growth is fueled by its applications in content creation, personalized marketing, and innovative product designs. In the non-tech world, GenAI is revolutionizing sectors such as healthcare, where it accelerates drug discovery and enhances diagnostic accuracy, and education, where it tailors learning experiences to individual needs.

As GenAI continues to evolve, its impact on daily life and business operations is becoming increasingly evident. With major tech companies like OpenAI and Google investing heavily in AI research and development, the technology is set to become even more integrated into our lives. The rise of AI-driven tools for art, music, and writing highlights its growing role in creative expression and content creation.

Conclusion

The journey of generative AI is just beginning, and its full impact is yet to be realized. As researchers, developers, policymakers, and users, we all have a role to play in guiding this technology toward outcomes that benefit humanity while mitigating potential risks.

By staying informed, engaging in open dialogue, and approaching generative AI with both enthusiasm and responsibility, we can work towards a future where this powerful technology serves as a force for positive change and progress.

FAQs

What is Generative AI?

Generative AI refers to algorithms that can create new content, such as text, images, or music, by learning patterns from existing data. It’s used in various applications like chatbots, content creation, and art generation.

How does Generative AI work?

Generative AI uses models like Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformers to generate new data. These models learn from large datasets and create outputs that resemble the training data in style or structure.

What are the applications of Generative AI?

Generative AI is widely used in fields like art, entertainment, design, and healthcare. It can create realistic images, write coherent text, compose music, and even assist in drug discovery by generating potential molecular structures.
