A Complete Data Scientist Roadmap for Beginners

Mar 07, 2025 (Last Updated)

Do you want to pursue a career in Data Science? If so, the sheer volume of information online has probably left you unsure where to start. Deciding to become a Data Scientist is easy; figuring out how to get there is the hard part.

This guide lays out a Data Science roadmap: the skills, tools, and steps you need to build a successful career in the field.

Table of contents

- What Does a Data Scientist Do?

- Roles and Responsibilities of a Data Scientist

- Data Scientist Roadmap

  - Programming Language

  - Maths and Statistics

  - Machine Learning and Natural Language Processing

  - Data Collection and Cleaning

  - Key Tools for Data Science

  - Git and GitHub

- Average Salaries of Data Scientists in India

- Conclusion

- FAQs

  - What are the key skills required to become a data scientist?

  - How long does it take to become a data scientist?

  - Do I need a degree to become a data scientist?

  - Which tools and languages should I learn first as a beginner?

  - How can I gain practical experience as a beginner in data science?

What Does a Data Scientist Do?

A data scientist is a skilled professional who leverages their expertise in statistics, mathematics, computer science, and domain knowledge to extract meaningful insights from large and complex datasets.

Additionally, data scientists play a vital role in designing and deploying data-driven applications and predictive models to address real-world problems across industries, contributing significantly to data-driven decision-making processes and business growth.

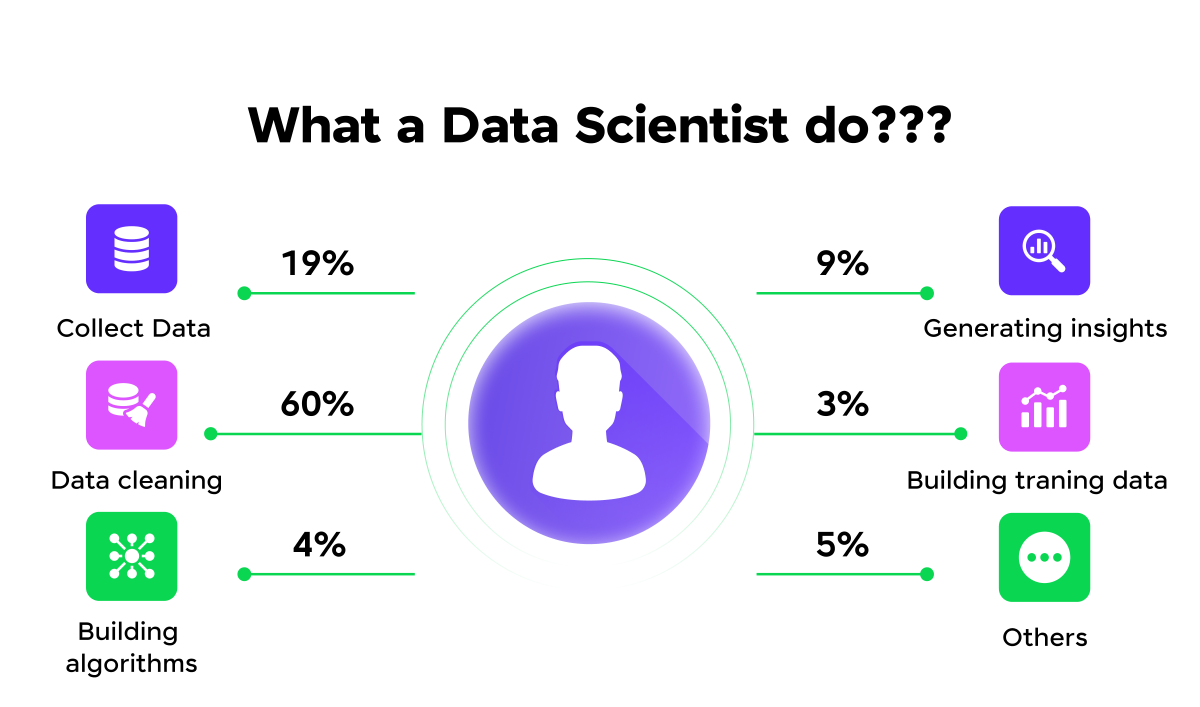

Roles and Responsibilities of a Data Scientist

- Data Collection: Gather relevant data from various sources, including databases, APIs, and web scraping.

- Data Cleaning: Clean and preprocess the data to remove noise, handle missing values, and ensure data quality.

- Data Preparation: Transform and structure the data, making it suitable for analysis and model building.

- Model Development: Design and develop advanced analytical models, including machine learning algorithms, to solve specific business problems.

- Data Analysis: Apply statistical techniques and analytical models to identify patterns, trends, and correlations in the data.

- Data Visualization: Create visualizations and dashboards to present insights and findings in an understandable and actionable manner.

- Model Evaluation: Test and validate models to ensure accuracy and reliability, using techniques like cross-validation and A/B testing.

- Collaboration: Work closely with other teams, including data engineers, analysts, and business stakeholders, to align data science efforts with business objectives.

- Continuous Learning: Stay updated with the latest advancements in data science, machine learning, and artificial intelligence to apply cutting-edge techniques.

Data Scientist Roadmap

A roadmap shows the route to a destination. Here, the destination is becoming a Data Scientist, and the roadmap below lays out the steps you can follow to get there.

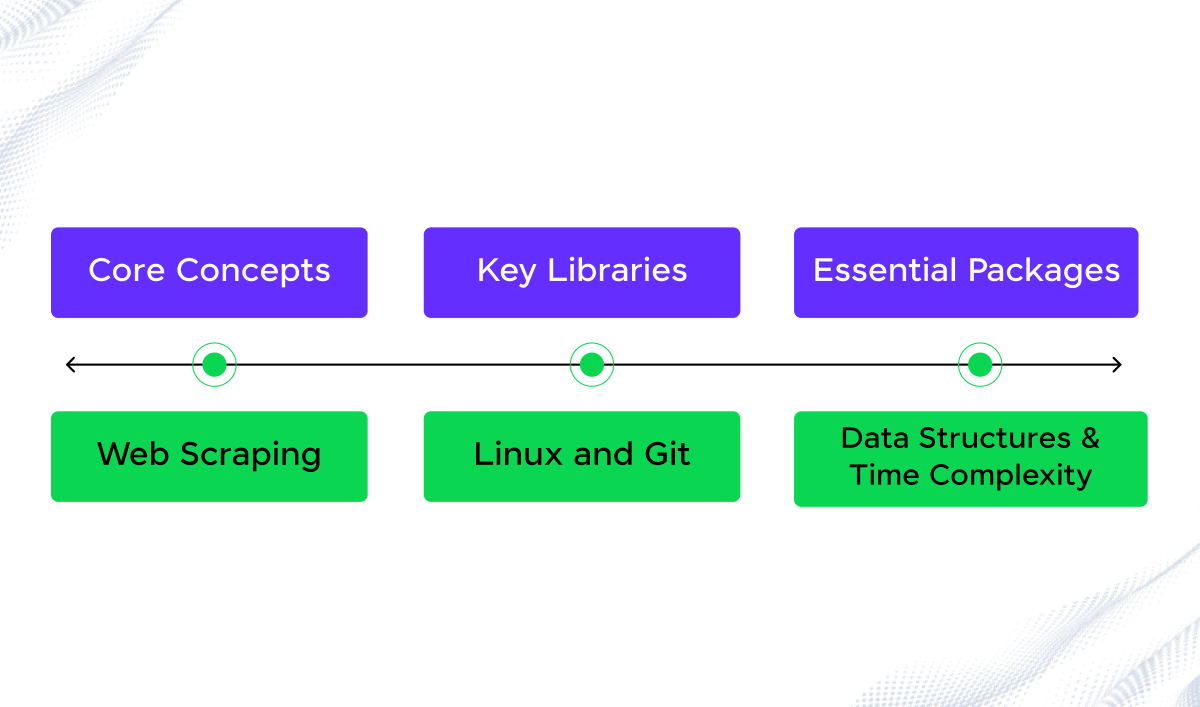

1. Programming Language

To build a solid foundation in data science, you need a strong grasp of programming concepts, particularly data structures and algorithms. Different languages suit different tasks: Python and R are the most popular choices, while Java, Scala, and C++ show up in performance-critical systems and big data pipelines (Apache Spark, for example, is written in Scala).

Python is often the go-to language for data science due to its versatility and extensive libraries. You’ll need to be familiar with:

- Core Concepts: Lists, sets, tuples, and dictionaries, along with function definitions.

- Key Libraries: NumPy for numerical computations, Pandas for data manipulation, and Matplotlib/Seaborn for data visualization.
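As a small illustration of these core concepts, here is a sketch that uses only Python's standard library; the order records and the function name `summarize_orders` are hypothetical. Tuples hold fixed-shape records, a list keeps them in order, a set removes duplicate customers (and gives average O(1) membership checks), and a dict accumulates totals. In real projects the same summary is usually a short Pandas `groupby`.

```python
from collections import Counter


def summarize_orders(orders):
    """Summarize (customer, product, amount) tuples with core built-in containers."""
    # Set comprehension: each customer appears once, however many orders they placed
    unique_customers = {customer for customer, _, _ in orders}

    # Dict: running total of revenue per customer
    revenue_by_customer = {}
    for customer, _, amount in orders:
        revenue_by_customer[customer] = revenue_by_customer.get(customer, 0) + amount

    # Counter (a dict subclass) tallies how often each product was ordered
    product_counts = Counter(product for _, product, _ in orders)

    return {
        "n_orders": len(orders),
        "unique_customers": sorted(unique_customers),
        "revenue_by_customer": revenue_by_customer,
        "top_product": product_counts.most_common(1)[0][0],
    }


# Hypothetical orders: a list of tuples, one tuple per order
orders = [
    ("asha", "laptop", 55000),
    ("ravi", "mouse", 700),
    ("asha", "mouse", 700),
    ("meena", "keyboard", 1500),
]
summary = summarize_orders(orders)
print(summary)
```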

R is another powerful language in the data science toolkit, particularly for statistical analysis and data visualization. Key areas include:

- Core Concepts: Understanding vectors, lists, data frames, matrices, and arrays.

- Essential Packages: dplyr for data manipulation, ggplot2 for visualization, and Shiny for building interactive web applications.

In addition to programming, knowledge of databases is crucial. Understanding SQL is essential for querying relational databases, while familiarity with MongoDB can be beneficial for handling non-relational data.
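A low-effort way to practise SQL without installing a database server is Python's built-in `sqlite3` module. The sketch below assumes a hypothetical `sales` table and shows the clauses you will use most often: aggregate functions with `GROUP BY`, filtering groups with `HAVING`, and sorting with `ORDER BY`.

```python
import sqlite3

# In-memory SQLite database; the table and rows are hypothetical examples
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (region, product, amount) VALUES (?, ?, ?)",
    [
        ("South", "laptop", 55000.0),
        ("South", "mouse", 700.0),
        ("North", "laptop", 52000.0),
        ("North", "keyboard", 1500.0),
        ("East", "mouse", 700.0),
    ],
)

# Total revenue per region, keeping only regions above a threshold.
# The ? placeholder keeps the value out of the SQL string itself.
query = """
    SELECT region, COUNT(*) AS n_sales, SUM(amount) AS revenue
    FROM sales
    GROUP BY region
    HAVING SUM(amount) > ?
    ORDER BY revenue DESC
"""
rows = conn.execute(query, (10000,)).fetchall()
for region, n_sales, revenue in rows:
    print(region, n_sales, revenue)
conn.close()
```

The `East` region is dropped by `HAVING` because its total is below the threshold; the same query would run largely unchanged against PostgreSQL or MySQL, apart from their own placeholder syntax in the client library.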

Other critical skills include:

- Data Structures and Time Complexity: Important for optimizing algorithms and ensuring efficient data processing.

- Web Scraping: Extracting data from websites using Python or R.

- Linux and Git: Proficiency in Linux commands and version control with Git is also valuable in managing projects and collaborating with teams effectively.
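To make the web scraping point concrete, the sketch below extracts table rows from HTML with the standard library's `html.parser.HTMLParser`. The page is hard-coded and the class name `TableCellParser` is hypothetical; in practice many people fetch pages with `requests` and parse them with Beautiful Soup, and you should check a site's terms and `robots.txt` before scraping it.

```python
from html.parser import HTMLParser


class TableCellParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])  # start a new row
        elif tag == "td":
            self._in_cell = True
            self.rows[-1].append("")  # start a new cell in the current row

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False

    def handle_data(self, data):
        # Only keep text that sits inside a <td>; header <th> text is ignored
        if self._in_cell:
            self.rows[-1][-1] += data.strip()


# A hard-coded page stands in for one downloaded from a website
html = """
<table>
  <tr><th>City</th><th>Temp</th></tr>
  <tr><td>Chennai</td><td>34</td></tr>
  <tr><td>Pune</td><td>29</td></tr>
</table>
"""
parser = TableCellParser()
parser.feed(html)
data = [row for row in parser.rows if row]  # the header row has no <td> cells
print(data)
```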

Learning these concepts and tools will equip you with the necessary skills to thrive in the data science field.

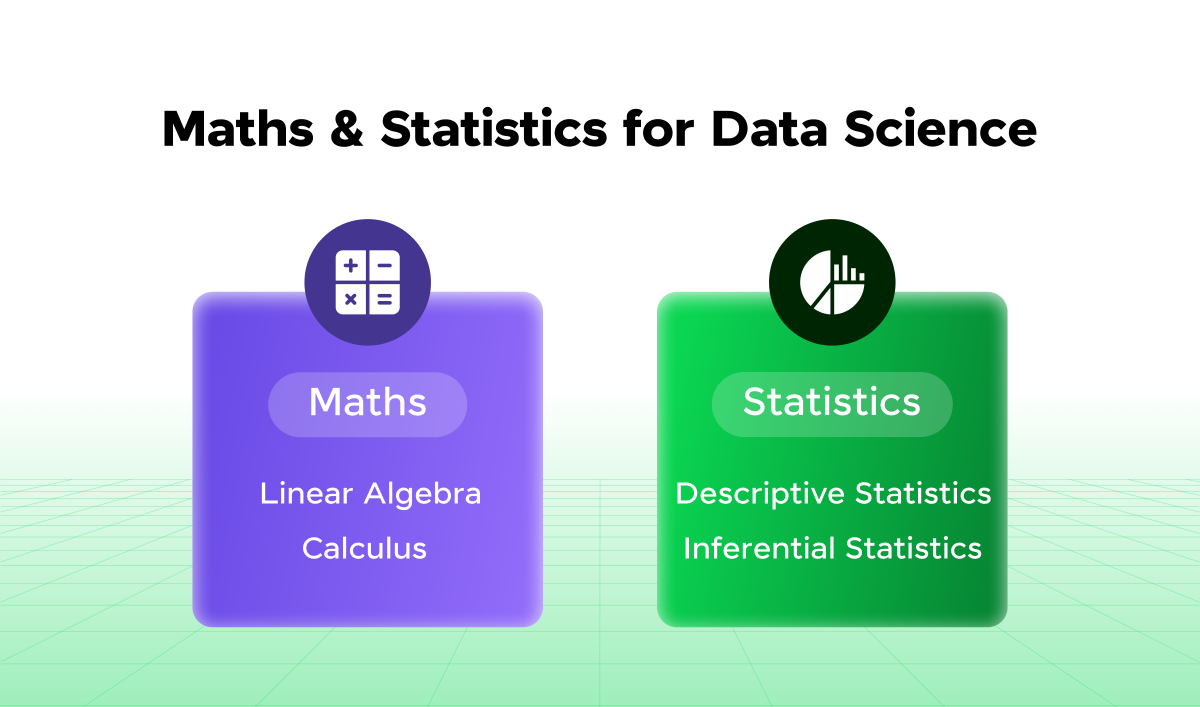

2. Maths and Statistics

- Linear Algebra: Understand vector spaces, matrices, eigenvalues, and eigenvectors, crucial for data manipulation and machine learning algorithms.

- Calculus: Learn concepts like differentiation, integration, and partial derivatives, essential for optimization and understanding model training processes.

- Probability Theory: Explore probability distributions, random variables, and Bayes’ theorem to assess uncertainties and make predictions.

- Descriptive Statistics: Master measures of central tendency (mean, median, mode), dispersion (variance, standard deviation), and data visualization techniques.

- Inferential Statistics: Study hypothesis testing, confidence intervals, and p-values to make data-driven decisions and validate models.

- Regression Analysis: Learn about linear and logistic regression, helping to model relationships between variables and predict outcomes.

- Optimization Techniques: Understand gradient descent and other optimization algorithms used to minimize loss functions in machine learning models.

- Hypothesis Testing: Apply statistical tests like t-tests, chi-square tests, and ANOVA to validate assumptions and compare datasets.
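Several of these ideas fit in a few lines of Python. The sketch below computes descriptive statistics with the standard `statistics` module, then fits a simple linear regression by gradient descent on the mean squared error, which ties together calculus (the gradients), optimization, and regression. The data, learning rate, and epoch count are illustrative assumptions; for real work you would typically use a library such as scikit-learn.

```python
import statistics


def describe(values):
    """Central tendency and dispersion using the standard library."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "variance": statistics.variance(values),  # sample variance (n - 1 denominator)
        "stdev": statistics.stdev(values),
    }


def fit_line_gd(xs, ys, lr=0.05, epochs=5000):
    """Fit y = w*x + b by batch gradient descent on the mean squared error.

    MSE = (1/n) * sum((w*x + b - y)**2), so
    dMSE/dw = (2/n) * sum(err * x) and dMSE/db = (2/n) * sum(err).
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient to reduce the loss
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


stats = describe([2, 3, 3, 5, 7])
print(stats)

xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]  # generated from y = 2x + 1
w, b = fit_line_gd(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Because the toy data lies exactly on y = 2x + 1, the fitted values should come out very close to w = 2 and b = 1. A learning rate that is too large for the data's scale would make the updates overshoot and diverge instead.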

3. Machine Learning and Natural Language Processing

Machine Learning:

- Supervised Learning: Learn algorithms like linear regression, decision trees, and support vector machines for tasks like classification and regression.

- Unsupervised Learning: Explore clustering techniques such as k-means and hierarchical clustering, and dimensionality reduction methods like PCA (Principal Component Analysis).

- Neural Networks: Understand the architecture and functioning of neural networks, including feedforward networks, convolutional networks (CNNs), and recurrent networks (RNNs).

- Model Evaluation: Master techniques such as cross-validation, confusion matrices, ROC curves, and precision-recall metrics to evaluate model performance.

- Ensemble Methods: Study methods like Random Forest, Gradient Boosting, and Bagging, which combine multiple models to improve predictive accuracy.
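Evaluation metrics are worth computing by hand at least once. This sketch derives confusion-matrix counts, precision, recall, F1, and accuracy for a binary classifier from made-up labels; the function name `binary_metrics` is hypothetical, and scikit-learn offers the same metrics as `confusion_matrix`, `precision_score`, `recall_score`, and `f1_score` in `sklearn.metrics`.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Confusion-matrix counts and derived metrics for a binary classifier."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # correctly flagged
            else:
                fp += 1  # false alarm
        elif actual == positive:
            fn += 1  # missed positive
        else:
            tn += 1  # correctly ignored
    # Precision: of everything predicted positive, how much was right
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: of everything actually positive, how much was found
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(y_true)
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Hypothetical labels: 1 = spam, 0 = not spam
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Accuracy alone can look good on imbalanced data (for example, when almost every email is legitimate), which is why precision and recall are usually reported alongside it.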

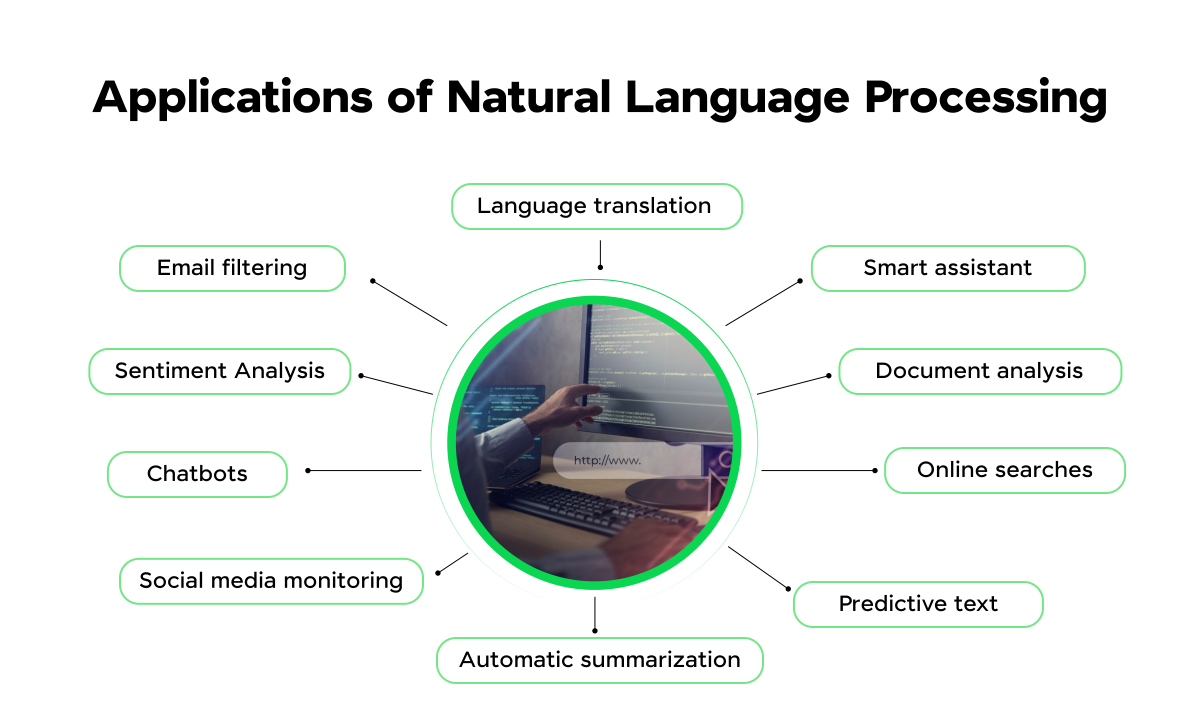

Natural Language Processing (NLP):

- Tokenization: Break down text into words, sentences, or subwords, which form the basic units for analysis in NLP tasks.

- Part-of-Speech Tagging: Assign grammatical categories (nouns, verbs, adjectives) to words in a sentence, aiding in syntactic analysis and understanding context.

- Sentiment Analysis: Detect and categorize opinions expressed in text, identifying sentiment as positive, negative, or neutral.

- Named Entity Recognition (NER): Identify and classify entities such as names, organizations, locations, and dates within text data.

- Text Classification: Apply machine learning algorithms to categorize text into predefined categories, useful in tasks like spam detection and topic identification.

- Language Models: Understand how language models estimate the likelihood of words in context, from LSTM-based recurrent models to Transformer models such as BERT and GPT, enabling tasks like translation, summarization, and text generation.

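- Text Representations: Explore techniques for turning text into vectors. Word2Vec and GloVe learn dense word embeddings that capture semantic meaning and relationships between words, while TF-IDF builds sparse vectors that weight each term by how often it appears in a document and how rare it is across the corpus.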

- Text Preprocessing: Learn techniques such as stemming, lemmatization, and stop-word removal to clean and normalize text data for analysis.
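Tokenization, stop-word removal, and TF-IDF can be sketched with the standard library alone. This is a minimal illustration, assuming a tiny hypothetical stop-word list and the plain tf × log(N / df) variant of TF-IDF; it is not how any particular library computes it.

```python
import math
import re
from collections import Counter

# A tiny, hypothetical stop-word list; real projects use fuller lists such as NLTK's
STOP_WORDS = {"a", "an", "and", "is", "it", "of", "the", "to"}


def preprocess(text):
    """Lowercase, split into alphabetic tokens, and drop stop words."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def tf_idf(documents):
    """Return one {term: weight} dict per document.

    tf  = term count / number of tokens in the document
    idf = log(N / number of documents containing the term)
    """
    docs = [preprocess(d) for d in documents]
    n_docs = len(docs)
    # Document frequency: count each term at most once per document
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


corpus = [
    "The movie is great and the acting is great",
    "The movie is boring",
    "A great soundtrack, but the movie is long",
]
weights = tf_idf(corpus)
for doc_weights in weights:
    print({term: round(w, 3) for term, w in doc_weights.items()})
```

A term that appears in every document, like "movie" here, gets an IDF of log(1) = 0 and therefore zero weight, which matches the intuition that very common words carry little information. scikit-learn's `TfidfVectorizer` uses a smoothed IDF and L2 normalization by default, so its numbers will differ.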

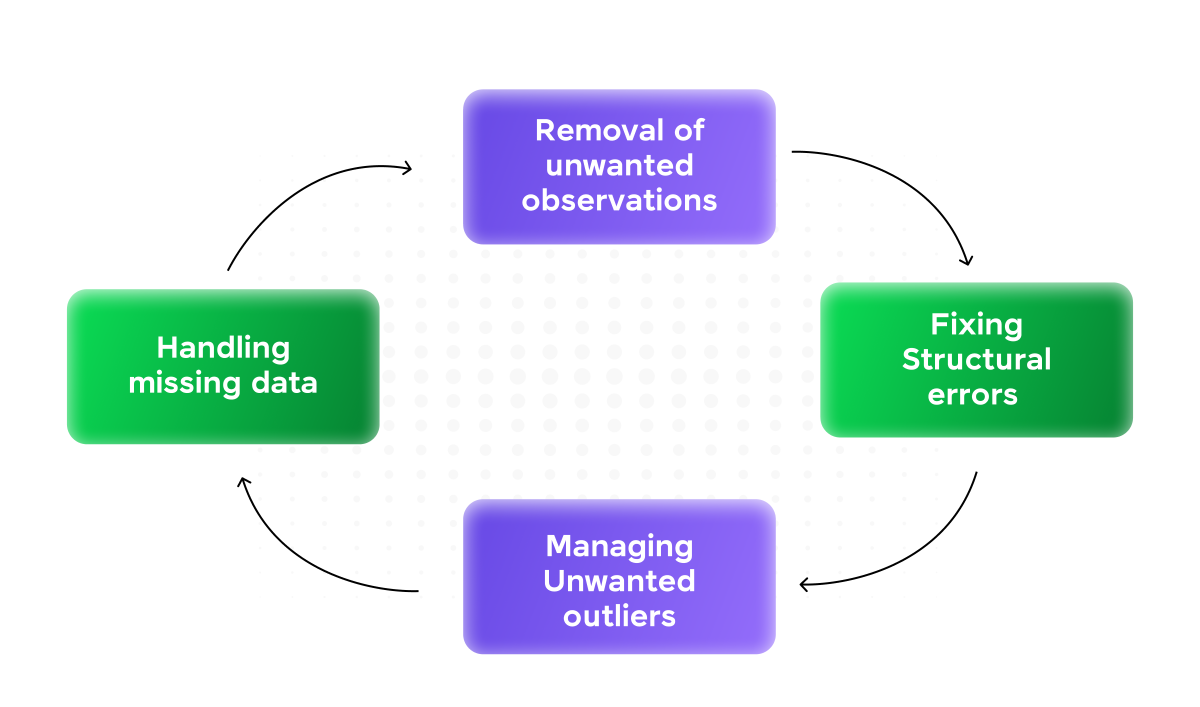

4. Data Collection and Cleaning

Data collection and cleaning are critical stages in the data science process. Data collection involves gathering relevant data from various sources, such as databases, APIs, web scraping, or sensor networks.

It is essential to ensure data quality, reliability, and completeness during this phase. Once collected, the data often requires cleaning to address issues like missing values, duplicates, inconsistencies, and outliers, which can adversely affect analysis and modeling.

Data cleaning techniques involve imputing missing values, resolving inconsistencies, and handling outliers to create a clean, reliable dataset.

Proper data collection and cleaning lay the foundation for accurate analysis and modeling, ensuring that the insights drawn from the data are valid and trustworthy.
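The cleaning steps described above can be sketched on a few hypothetical records using only the standard library. The records, the function name `clean_records`, and the choice to treat outliers as missing are all illustrative assumptions; capping or simply flagging outliers are equally common choices. With Pandas, the same steps map roughly to `str.strip`/`str.title`, `drop_duplicates`, `Series.quantile`, and `fillna`.

```python
import statistics


def clean_records(records):
    """Normalize text, drop duplicates, treat IQR outliers as missing, impute medians."""
    # 1. Normalize inconsistent text (stray spaces, mixed case); copies leave input intact
    normalized = [{**r, "city": r["city"].strip().title()} for r in records]

    # 2. Drop exact duplicate rows, keeping the first occurrence
    seen, deduped = set(), []
    for r in normalized:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            deduped.append(r)

    # 3. Outlier fences from the 1.5 * IQR rule on the observed (non-missing) ages
    observed = [r["age"] for r in deduped if r["age"] is not None]
    q1, _, q3 = statistics.quantiles(observed, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr

    # 4. Treat outliers as missing, then fill every gap with the median of valid ages
    fill = statistics.median([a for a in observed if low <= a <= high])
    for r in deduped:
        if r["age"] is None or not low <= r["age"] <= high:
            r["age"] = fill
    return deduped


raw = [
    {"id": 1, "city": "Chennai ", "age": 29},
    {"id": 2, "city": "chennai", "age": None},
    {"id": 2, "city": "chennai", "age": None},  # exact duplicate
    {"id": 3, "city": "Bengaluru", "age": 34},
    {"id": 4, "city": "Mumbai", "age": 31},
    {"id": 5, "city": "Delhi", "age": 240},  # likely a data-entry error
]
cleaned = clean_records(raw)
for row in cleaned:
    print(row)
```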

5. Key Tools for Data Science

Data science relies on a variety of tools that facilitate data manipulation, analysis, visualization, and modeling. Here are some key tools commonly used in data science:

- Jupyter Notebooks: Interactive web-based environments that allow data scientists to create and share documents containing code, visualizations, and explanatory text. They are widely used for data exploration and prototyping.

- SQL (Structured Query Language): A language for managing and querying relational databases. SQL is essential for data retrieval and data manipulation tasks.

- Apache Hadoop: An open-source framework for distributed storage (HDFS) and batch processing (MapReduce) of datasets too large for a single machine, spread across a cluster of commodity servers.

- Apache Spark: Another open-source distributed computing system that provides fast and scalable data processing, machine learning, and graph processing capabilities.

- Tableau: A data visualization tool that enables users to create interactive and visually appealing dashboards and reports.

- Scikit-learn: A machine learning library for Python, providing various algorithms for classification, regression, clustering, and more.

- Excel: Widely used for basic data analysis and visualization due to its accessibility and familiarity.

- Git: Version control system used for tracking changes in code and collaborating with others in data science projects.

- Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP): Cloud computing platforms that offer various services for data storage, processing, and machine learning.

- NLTK (Natural Language Toolkit): A Python library for natural language processing tasks.

6. Git and GitHub

Git and GitHub are integral tools in data science that provide version control and collaboration capabilities, facilitating efficient and organized project development.

Git

Git is a distributed version control system that allows data scientists to track changes in their code and project files over time. Each project lives in a repository, which stores the files together with their complete revision history, and every collaborator's clone holds a full copy of it.

As data scientists work on the project, Git records each modification, creating a detailed history of changes. This version control functionality offers several benefits in data science.

First, it lets data scientists review and revert to previous versions of their code, providing a safety net against mistakes. Second, Git supports collaboration among multiple team members: each member can create their own branch to work on a specific feature or experiment independently, and later merge those changes back into the main codebase.

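Branching keeps work in progress isolated, and when two branches change the same lines, Git flags a merge conflict that must be resolved before the merge completes, which keeps the main codebase coherent. Overall, Git improves productivity, accountability, and organization in data science projects.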

Features of Git:

- Version Control: Tracks changes in files over time, allowing you to revert to previous versions.

- Branching and Merging: Enables the creation of branches to work on different features or fixes independently, which can later be merged back.

- Distributed System: Every developer has a complete history of the project, enabling offline work and reducing reliance on a central server.

- Staging Area: Allows you to stage changes before committing, providing better control over which changes are included in a commit.

- Commit History: Maintains a detailed log of every change, recording who made it, when, and (through the commit message) why, which provides transparency and accountability.

GitHub

GitHub is a web-based hosting service that utilizes Git for managing repositories. It serves as a social platform for data scientists and developers, allowing them to share their work, collaborate, and contribute to open-source projects.

In data science, GitHub provides several advantages. Data scientists can create both public and private repositories, depending on whether they want to showcase their projects to the public or keep them private for proprietary work.

Public repositories offer an excellent opportunity for data scientists to showcase their data science projects, build their portfolios, and establish their expertise within the data science community.

Collaboration becomes more accessible through GitHub, as team members can contribute to projects remotely, propose changes, and discuss ideas through issues and pull requests.

Features of GitHub:

- Repository Hosting: Stores Git repositories in the cloud, making collaboration easier and more accessible.

- Pull Requests: Facilitates code review and discussion before merging changes into the main branch, promoting collaboration.

- Issues and Project Management: Includes tools for tracking bugs, feature requests, and project progress within a repository.

- GitHub Actions: Automates workflows like CI/CD, testing, and deployments directly within your repository.

- Collaboration Tools: Provides features like wikis, discussions, and code reviews, fostering teamwork and knowledge sharing.

By leveraging Git and GitHub, data scientists can build a strong professional presence, engage in the data science community, and efficiently collaborate on data-driven projects.

Kickstart your Data Science journey by enrolling in GUVI’s Data Science Course, where you will master technologies like MongoDB, Tableau, Power BI, and Pandas, and build interesting real-life projects.

Alternatively, if you would like to explore Python through a Self-paced course, try GUVI’s Python certification course.

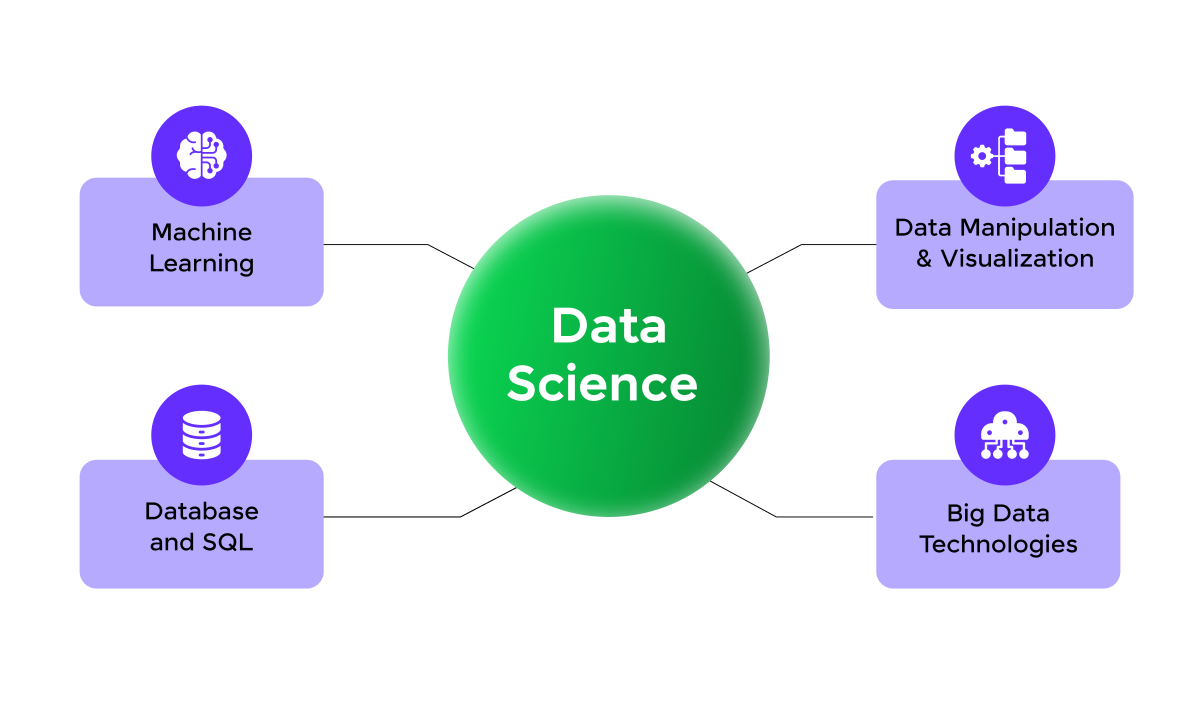

Who is a Data Scientist?

A data scientist is a professional who utilizes data analysis, machine learning, statistical modeling, and various other techniques to extract meaningful insights and knowledge from large and complex datasets.

Data science is an interdisciplinary field that combines knowledge from various domains, including statistics, mathematics, computer science, and domain expertise.

Becoming a Data Scientist in India requires proficiency in a variety of technologies and tools that are commonly used in the industry. Here are some of the top technologies you should consider mastering to excel in this field:

- Machine Learning: Familiarity with various machine learning algorithms, techniques, and frameworks to build predictive models and perform data-driven tasks.

- Data Manipulation and Visualization: Knowledge of data manipulation libraries (e.g., Pandas) and visualization tools (e.g., Matplotlib, Seaborn) to handle and present data effectively.

- Database and SQL: Understanding of database systems and the ability to work with SQL to extract and manipulate data.

- Big Data Technologies: Familiarity with big data tools and frameworks like Apache Spark can be beneficial for handling large-scale data processing.

Average Salaries of Data Scientists in India

Data science is one of the most sought-after careers in India, with competitive salaries reflecting the growing demand for skilled professionals in this field. The average salary for data scientists in India varies significantly based on experience, as follows:

| Experience Level | Average Annual Salary (INR) |
|---|---|
| Entry-Level (0-2 years) | ₹4,00,000 – ₹8,00,000 |
| Mid-Level (2-5 years) | ₹8,00,000 – ₹15,00,000 |
| Senior-Level (5-10 years) | ₹15,00,000 – ₹25,00,000 |
| Lead/Manager (10+ years) | ₹25,00,000 – ₹50,00,000+ |

Conclusion

The data scientist roadmap offers a clear, step-by-step guide for aspiring data scientists to build a strong foundation in mathematics, programming, and statistics. By mastering essential tools and techniques, you can unlock exciting career opportunities and thrive in the evolving, data-driven world.

FAQs

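What are the key skills required to become a data scientist?

Essential skills include programming (Python/R), statistics, data wrangling, machine learning, and data visualization.

How long does it take to become a data scientist?

It typically takes 6 months to 2 years, depending on prior knowledge, dedication, and the learning path chosen.

Do I need a degree to become a data scientist?

A degree in a related field helps, but it is not mandatory. Practical skills and project experience often carry more weight.

Which tools and languages should I learn first as a beginner?

Start with Python or R for programming and SQL for databases, along with tools like Jupyter Notebooks, TensorFlow, and Tableau.

How can I gain practical experience as a beginner in data science?

Build personal projects, take part in Kaggle competitions, and look for internships or freelance work.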
