Top AWS Data Engineer Interview Questions and Answers

Last Updated: Mar 06, 2025 | 8 Min Read

In today’s data-driven world, the role of a Data Engineer has become increasingly important, with AWS leading the charge in cloud-based data solutions. As more organizations run their data platforms on AWS, the demand for skilled AWS Data Engineers continues to grow, and solid AWS data engineering skills are a strong asset for a career in the field.

One important step in this journey is acing the interview process. To help you prepare thoroughly, we’ve compiled the top AWS Data Engineer interview questions and answers in this blog. From data storage and processing to security and scalability, we’ll cover the key concepts and best practices that every AWS Data Engineer should know.

So, let’s explore how you can confidently ace your next AWS Data Engineer interview!

Table of contents

- What is AWS?

- Top AWS Data Engineer Interview Questions and Answers

- A. Data Storage and Retrieval

- B. Data Processing

- C. Data Analytics

- D. Data Security and Compliance

- Conclusion

- FAQs

- Why are AWS Data Engineer interviews important?

- How can I prepare effectively?

- What challenges can I expect, and how do I overcome them?

What is AWS?

AWS stands for Amazon Web Services. It is a comprehensive cloud computing platform provided by Amazon, offering a broad set of global cloud-based products and services. AWS allows individuals, companies, and governments to access servers, storage, databases, networking, software, analytics, and other services over the internet on a pay-as-you-go basis.

Some of the most popular AWS services include:

- Elastic Compute Cloud (EC2): Provides scalable virtual servers in the cloud.

- Simple Storage Service (S3): Offers scalable object storage for data backup, archive, and application hosting.

- Relational Database Service (RDS): Provides managed relational database services like MySQL, PostgreSQL, Oracle, and SQL Server.

- Lambda: Enables running code without provisioning or managing servers (serverless computing).

- Elastic Beanstalk: Simplifies the deployment and management of applications in the AWS cloud.

- CloudFront: A content delivery network (CDN) service for fast and secure content delivery.

- CloudWatch: Monitoring service for AWS resources and applications.

AWS provides a global infrastructure organized into Regions located around the world. Each Region contains multiple isolated Availability Zones, and each Availability Zone consists of one or more data centers. This makes it easier for businesses to deploy applications and serve customers globally. AWS offers a wide range of services for various use cases, including web hosting, backup and storage, machine learning, Internet of Things (IoT), and more.

Now that we have covered the fundamentals of AWS, let’s turn to the specifics of AWS Data Engineering through a curated selection of top interview questions and answers.

Top AWS Data Engineer Interview Questions and Answers

In this section, we will go through some of the top interview questions, with answers that will help you succeed in your AWS Data Engineer interviews.

A. Data Storage and Retrieval

Data storage and retrieval lie at the heart of modern information management, serving as the foundation for efficient data processing and analysis.

1. How does Amazon S3 differ from Amazon RDS?

Amazon S3 (Simple Storage Service) and Amazon RDS (Relational Database Service) are two different services in AWS with distinct purposes.

Amazon S3

- Object storage service for storing and retrieving any amount of data as objects (files).

- Highly scalable, durable, and cost-effective for storing static data like backups, logs, media files, and archives.

- Data is accessed via a web services interface (HTTP/HTTPS).

- Provides features like versioning, lifecycle policies, and cross-region replication.

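- Not suited to transactional workloads or low-latency, row-level queries. Structured files (for example, CSV or Parquet) can be stored in S3, but they are typically queried through services such as Amazon Athena rather than by S3 itself.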

Amazon RDS

- Managed relational database service that supports various database engines like MySQL, PostgreSQL, Oracle, and SQL Server.

- Ideal for storing structured data in a tabular format and performing complex queries using SQL.

- Handles tasks like provisioning, patching, backup, and recovery automatically.

- Supports features like read replicas, multi-AZ deployments, and encryption at rest.

- Best suited to online transaction processing (OLTP) workloads. Heavy online analytical processing (OLAP) workloads are usually a better fit for a data warehouse such as Amazon Redshift.
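The difference in access model can be illustrated with a small local sketch. It does not call AWS at all: a plain dictionary stands in for an S3 bucket (objects addressed by key), and an in-memory SQLite database stands in for an RDS engine (rows queried with SQL inside a transaction). The key names and the `orders` table are made up for illustration.

```python
import sqlite3

# Stand-in for an S3 bucket: a flat mapping from object key to bytes.
# Real S3 is reached over HTTPS; this dict only mimics the key -> object model.
bucket = {
    "logs/2025/03/06/app.log": b"GET /index.html 200\n",
    "backups/db-2025-03-06.sql.gz": b"placeholder bytes",
}

# Object storage retrieves whole objects by key, or lists keys by prefix.
log_keys = sorted(key for key in bucket if key.startswith("logs/"))

# Stand-in for an RDS database: an in-memory SQLite database.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)
with conn:  # the inserts commit together as one transaction
    conn.executemany(
        "INSERT INTO orders (customer, amount) VALUES (?, ?)",
        [("alice", 30.0), ("bob", 12.5), ("alice", 7.5)],
    )

# A relational engine answers row-level questions with SQL.
totals = conn.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()

print(log_keys)
print(totals)
```

The object store can only hand back or list whole objects, while the relational side can aggregate, filter, and update individual rows transactionally.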

2. Explain the concept of data partitioning in Amazon Redshift.

Amazon Redshift does not partition its native tables the way many relational databases do. Instead, it spreads and orders data using distribution styles and sort keys, and it supports partitioning for external tables queried through Redshift Spectrum. In an interview, "data partitioning in Redshift" usually refers to this combination of techniques for dividing large datasets so that queries touch less data and run in parallel.

In Amazon Redshift, this can be achieved using the following methods:

a. Distribution styles: Redshift offers different distribution styles (AUTO, EVEN, KEY, or ALL) to distribute table rows across compute nodes and their slices. Choosing a distribution key that matches common join columns reduces data movement between nodes.

b. Sort keys: A sort key defines the order in which rows are stored on disk. Redshift tracks the minimum and maximum values in each data block, so a filter on the sort key (for example, a date range) lets it skip blocks that cannot match.

c. Columnar storage and compression: Redshift automatically stores data in a columnar format and applies compression, which can significantly reduce the storage requirements and improve query performance, especially for analytical workloads.

d. External table partitioning (Redshift Spectrum): External tables over data in Amazon S3 can be partitioned by one or more columns, such as date or region. Queries that filter on the partition columns read only the matching partitions.

With these techniques, Amazon Redshift can:

- Eliminate unnecessary data scans by reading only the relevant blocks or partitions.

- Improve query performance by processing data in parallel across slices and nodes.

- Simplify data management tasks like adding or removing date ranges in partitioned external tables.

- Optimize storage utilization and cost by compressing and storing data more efficiently.

Choosing distribution styles, sort keys, and partitions that match the query workload can significantly enhance query performance, reduce storage costs, and facilitate efficient data management for large datasets.
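As a minimal sketch of how a distribution style is declared, the helper below builds a Redshift `CREATE TABLE` statement that uses KEY distribution together with a sort key (a Redshift table attribute that controls the on-disk row order). The `sales` table, its columns, and the function name `build_sales_ddl` are hypothetical; the function only produces the SQL text and does not connect to a cluster.

```python
def build_sales_ddl(dist_key: str, sort_key: str) -> str:
    """Return a CREATE TABLE statement with KEY distribution and a sort key."""
    return (
        "CREATE TABLE sales (\n"
        "    sale_id     BIGINT,\n"
        "    customer_id BIGINT,\n"
        "    sale_date   DATE,\n"
        "    amount      DECIMAL(12, 2)\n"
        ")\n"
        "DISTSTYLE KEY\n"
        f"DISTKEY ({dist_key})\n"   # rows with the same key land on the same slice
        f"SORTKEY ({sort_key});"    # rows are stored in this order on disk
    )


ddl = build_sales_ddl(dist_key="customer_id", sort_key="sale_date")
print(ddl)
```

Distributing on `customer_id` would suit joins on that column, and sorting on `sale_date` would suit date-range filters; a different workload might call for different choices.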

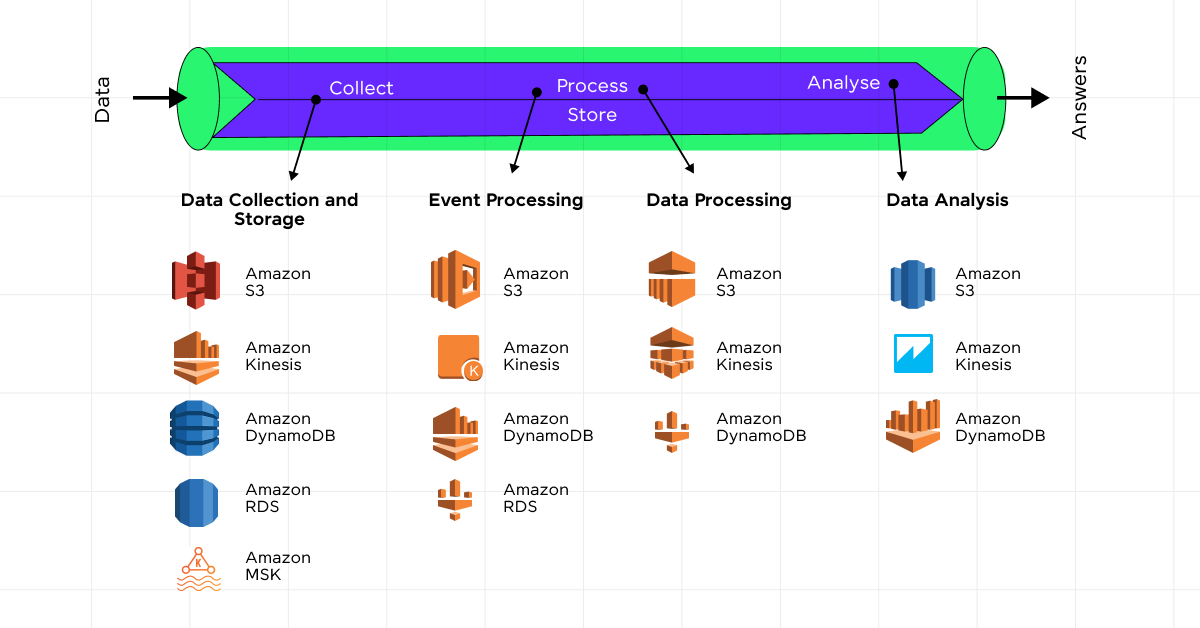

B. Data Processing

Data processing is the transformative engine that converts raw data into actionable insights, driving informed decision-making and innovation across industries.

1. What is Amazon EMR, and how does it work?

Amazon EMR (Elastic MapReduce) is a cloud-based big data processing service provided by AWS. It is used for running large-scale distributed data processing frameworks like Apache Hadoop, Apache Spark, Apache HBase, Apache Flink, and others on the AWS infrastructure.

How Amazon EMR works:

- Cluster Creation: Users create an EMR cluster by specifying the required resources (EC2 instances, instance types, and configurations) and the desired big data processing framework (e.g., Hadoop, Spark).

- Data Storage: EMR integrates with other AWS services like Amazon S3 for data storage and Amazon DynamoDB for NoSQL databases. Input data is typically stored in S3, and EMR directly accesses it from there.

- Processing: EMR automatically provisions and configures the specified big data framework on the EC2 instances within the cluster. Users can submit data processing jobs (e.g., MapReduce jobs, Spark applications) to the cluster for execution.

- Scaling: EMR allows dynamic scaling of the cluster by adding or removing EC2 instances based on the workload requirements, providing elasticity and cost optimization.

- Monitoring and Management: EMR provides monitoring and management capabilities through AWS Management Console, APIs, and integrations with other AWS services like CloudWatch for monitoring and AWS Data Pipeline for workflow management.

- Output and Termination: After processing, the output data can be stored back in Amazon S3 or other AWS services. Once the job is complete, the EMR cluster can be automatically terminated to save costs, or it can be kept running for further processing.

EMR simplifies the deployment and management of big data processing frameworks on the AWS cloud, providing a scalable, reliable, and cost-effective solution for processing large datasets.
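The workflow above (create a cluster, run a job, terminate) can be sketched as a single request. The function below only builds a parameter dictionary in the shape expected by the `run_job_flow` operation of the boto3 EMR client; it does not call AWS. The release label, instance types, bucket paths, and the helper name `build_emr_request` are assumptions chosen for illustration, and the two role names are the EMR default roles, which must already exist in the account.

```python
def build_emr_request(name: str, script_uri: str, log_uri: str) -> dict:
    """Build parameters for a transient Spark cluster that runs one step."""
    return {
        "Name": name,
        "ReleaseLabel": "emr-7.1.0",          # assumed release; pick a current one
        "Applications": [{"Name": "Spark"}],  # framework to install on the cluster
        "LogUri": log_uri,                    # where cluster logs are written in S3
        "Instances": {
            "InstanceGroups": [
                {"Name": "Primary", "InstanceRole": "MASTER",
                 "InstanceType": "m5.xlarge", "InstanceCount": 1},
                {"Name": "Core", "InstanceRole": "CORE",
                 "InstanceType": "m5.xlarge", "InstanceCount": 2},
            ],
            # Terminate the cluster automatically once all steps have finished.
            "KeepJobFlowAliveWhenNoSteps": False,
        },
        "Steps": [
            {
                "Name": "spark-job",
                "ActionOnFailure": "TERMINATE_CLUSTER",
                "HadoopJarStep": {
                    "Jar": "command-runner.jar",
                    "Args": ["spark-submit", "--deploy-mode", "cluster", script_uri],
                },
            }
        ],
        "JobFlowRole": "EMR_EC2_DefaultRole",  # instance profile for the EC2 nodes
        "ServiceRole": "EMR_DefaultRole",      # role assumed by the EMR service
    }


request = build_emr_request(
    name="nightly-etl",
    script_uri="s3://example-bucket/jobs/etl.py",
    log_uri="s3://example-bucket/emr-logs/",
)
print(request["Steps"][0]["HadoopJarStep"]["Args"])
```

With boto3 installed and credentials configured, the dictionary could be submitted with `boto3.client("emr").run_job_flow(**request)`.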

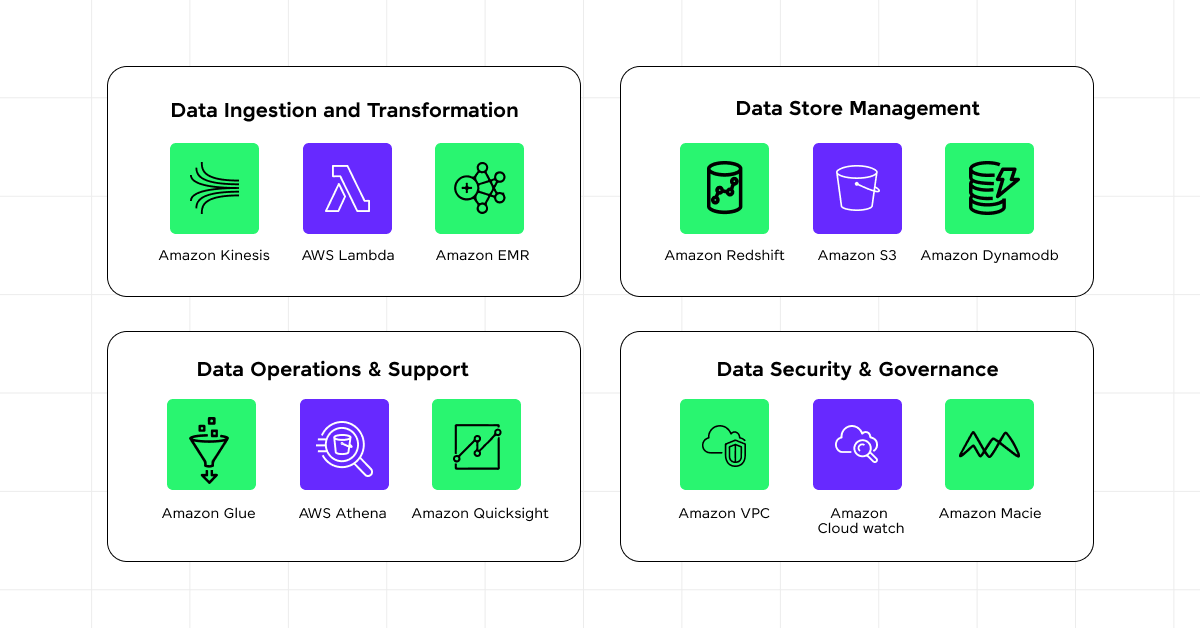

2. Discuss the difference between Amazon Kinesis and AWS Glue.

Amazon Kinesis and AWS Glue are two different services in AWS, each serving a distinct purpose in the data processing and analytics domain.

Amazon Kinesis

- A fully managed service for real-time data streaming and processing.

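- The Kinesis family includes Kinesis Data Streams, Amazon Data Firehose (formerly Kinesis Data Firehose), Kinesis Video Streams, and Amazon Managed Service for Apache Flink (formerly Kinesis Data Analytics).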

- Kinesis Data Streams enables real-time ingestion, processing, and analysis of large streams of data records.

- Kinesis Data Firehose is used for capturing, transforming, and loading streaming data into various destinations like Amazon S3, Amazon Redshift, and more.

- Kinesis is primarily used for building real-time data processing pipelines, capturing and analyzing IoT data, and processing large-scale data streams.

AWS Glue

- A fully managed extract, transform, and load (ETL) service for data integration.

- Provides a serverless Apache Spark environment for running ETL jobs.

- Automatically discovers and catalogs data sources (databases, S3 buckets, etc.) using a crawler feature.

- Generates and executes data transformation code (in Apache Spark) based on a visual editor or scripting interface.

- Simplifies and automates the process of moving and transforming data between various data stores and formats.

- Primarily used for building data lakes, data warehousing, and ETL pipelines for batch processing of data.

Amazon Kinesis is focused on real-time data streaming and processing, while AWS Glue is designed for batch-oriented data integration and ETL tasks. Kinesis is suitable for applications that require continuous processing of data streams, while Glue is more appropriate for scheduled or on-demand data transformations and loading into data warehouses or data lakes.
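The streaming-versus-batch distinction can be shown with plain Python, independent of either service. In this sketch, which uses made-up sensor events, the streaming function emits an updated result as each record arrives (the style a Kinesis consumer would follow), while the batch function reads the whole dataset and returns one result (the style of a scheduled Glue job). Neither function uses the Kinesis or Glue APIs.

```python
from typing import Iterable, Iterator


def stream_running_max(events: Iterable[dict]) -> Iterator[tuple]:
    """Streaming style: yield the current maximum per device after every record."""
    current_max = {}
    for event in events:
        device, temp = event["device"], event["temp"]
        if device not in current_max or temp > current_max[device]:
            current_max[device] = temp
        yield device, current_max[device]


def batch_max(events: list) -> dict:
    """Batch style: scan the full dataset, then return one final result."""
    result = {}
    for event in events:
        device, temp = event["device"], event["temp"]
        result[device] = max(temp, result.get(device, float("-inf")))
    return result


events = [
    {"device": "d1", "temp": 21.5},
    {"device": "d2", "temp": 19.0},
    {"device": "d1", "temp": 23.0},
]

streamed = list(stream_running_max(events))  # one output per incoming record
batched = batch_max(events)                  # one output for the whole batch

print(streamed)
print(batched)
```

The streaming version has an answer available after every record, at the cost of keeping state between records; the batch version is simpler but only produces its answer once the whole input has been read.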

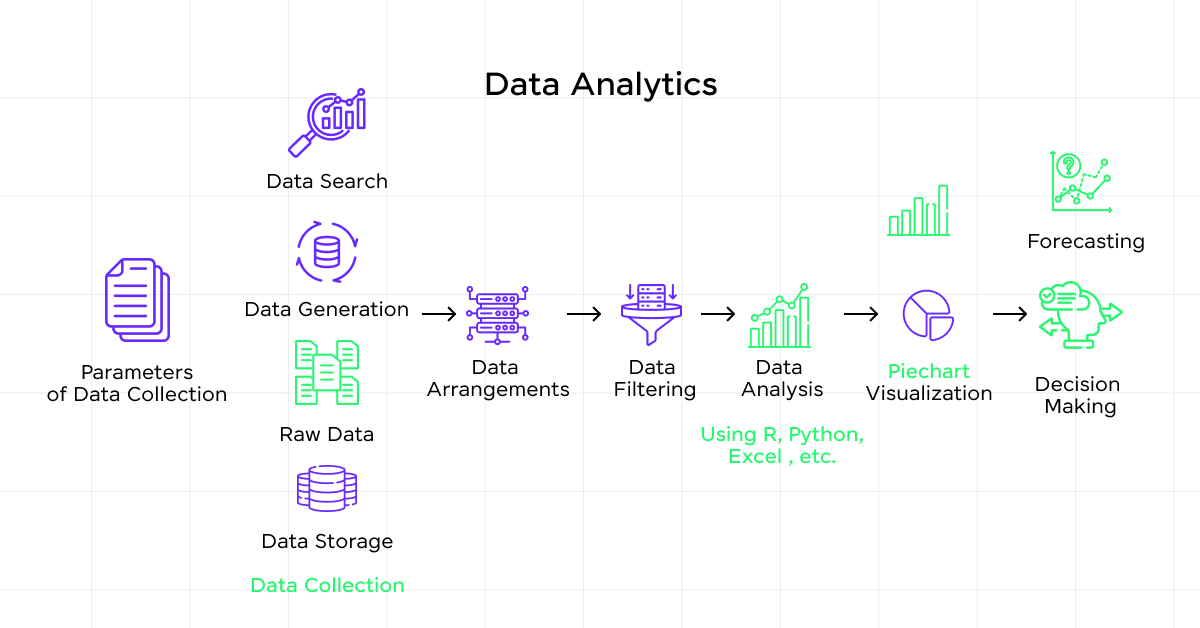

C. Data Analytics

Data analytics is the cornerstone of deriving meaningful insights from vast datasets, empowering organizations to make informed decisions and gain a competitive edge in today’s data-driven world.

1. How do you optimize data queries in Amazon Athena?

Amazon Athena is an interactive query service that allows you to analyze data stored in Amazon S3 using standard SQL. Here are some ways to optimize data queries in Amazon Athena:

- Partitioning and Bucketing: Partition your data in Amazon S3 based on frequently queried columns (e.g., date, type, region). This allows Athena to scan only the relevant partitions, reducing the amount of data scanned and improving query performance. Bucketing can further optimize queries by organizing data within partitions.

- Compression: Compress your data with codecs such as Snappy, GZIP, or ZSTD, ideally inside a columnar format like Parquet or ORC. Compressed data takes less storage and reduces the amount of data Athena has to scan, which lowers cost and leads to faster query execution times.

- Columnar Storage: Use columnar data formats like Parquet or ORC, which store data by columns instead of rows. Columnar storage is more efficient for analytical queries as it reduces the amount of data read.

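- Caching (Query Result Reuse): Athena writes query results to an Amazon S3 location, and recent engine versions can reuse the stored results of an identical earlier query for a configurable period instead of rescanning the data. Repeated queries on unchanged data return faster and scan no additional data.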

- Joining and Filtering: Optimize your queries by filtering data early in the query plan and performing joins on smaller datasets. This reduces the amount of data that needs to be processed and improves query efficiency.

- Workgroup Configurations: Use Athena workgroups to separate teams or workloads, set limits on the amount of data a query or workgroup can scan, choose the query result location, and select the engine (Athena SQL, which is based on Trino and Presto, or Apache Spark).

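- Cost-Based Optimizer (CBO): Athena’s cost-based optimizer uses table and column statistics to choose efficient join orders and execution plans. Generating statistics for frequently joined tables in the AWS Glue Data Catalog gives the optimizer the information it needs.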

- Query Optimization: Use techniques like query simplification, predicate pushdown, and partition pruning to optimize your queries and reduce the amount of data processed.

Regular monitoring and analysis of query performance using Athena’s query monitoring and logging features can help identify and address performance bottlenecks.
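Partition pruning, the first technique in the list above, can be sketched locally. The example lays out data under Hive-style `key=value` prefixes in S3, a layout Athena can map to partition columns, and then shows which prefixes a query filtered on `dt` would need to read. The bucket name, the `events` table, and both helper functions are hypothetical, and nothing here calls Athena or S3.

```python
from datetime import date


def partition_prefix(table_root: str, day: date, region: str) -> str:
    """Build a Hive-style partition prefix such as .../dt=2025-03-06/region=eu/."""
    return f"{table_root}/dt={day.isoformat()}/region={region}/"


def prune(prefixes: list, day: date) -> list:
    """Keep only the prefixes that a filter on dt = <day> would need to scan."""
    wanted = f"/dt={day.isoformat()}/"
    return [prefix for prefix in prefixes if wanted in prefix]


root = "s3://example-bucket/events"
prefixes = [
    partition_prefix(root, date(2025, 3, d), region)
    for d in (5, 6)
    for region in ("eu", "us")
]

# A query that filters on the partition column only touches matching prefixes.
query = (
    "SELECT region, COUNT(*) AS events "
    "FROM events WHERE dt = '2025-03-06' GROUP BY region"
)
selected = prune(prefixes, date(2025, 3, 6))

print(query)
print(selected)
```

Here two of the four prefixes are skipped entirely. Since Athena charges by the amount of data scanned, skipping partitions reduces cost as well as run time.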

2. Explain the architecture of Amazon QuickSight.

Amazon QuickSight is a cloud-based business intelligence (BI) service that allows users to create interactive dashboards, reports, and visualizations from various data sources. The architecture of Amazon QuickSight can be broken down into the following components:

1. Data Sources: QuickSight can connect to various data sources, including AWS services (e.g., Amazon Athena, Amazon Redshift, Amazon RDS), on-premises databases, SaaS applications, and flat files stored in Amazon S3 or other cloud storage services.

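2. Data Ingestion: Data can be imported into SPICE (Super-fast, Parallel, In-memory Calculation Engine), QuickSight’s in-memory engine, which parallelizes and optimizes data ingestion and calculation for faster analytics. Data is ingested and cached in SPICE so that dashboards can be queried without going back to the source each time.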

3. Data Preparation: QuickSight provides data preparation capabilities like joining data sources, applying calculations, and transforming data. Users can create custom data sets and manage data refreshes.

4. Visualization and Dashboard Creation: QuickSight offers a user-friendly interface for creating visualizations (charts, graphs, tables) and building interactive dashboards. Users can apply filters, add annotations, and customize the appearance of visualizations.

5. Access and Sharing: Dashboards and reports can be accessed through the QuickSight web application or mobile apps. QuickSight supports user permissions, row-level security, and sharing capabilities for collaboration.

6. Analytics and Machine Learning: QuickSight integrates with AWS machine learning services like Amazon SageMaker for advanced analytics and predictions. Users can incorporate forecasting and anomaly detection into their dashboards.

7. Security and Compliance: QuickSight uses AWS Identity and Access Management (IAM) for authentication and authorization. Data is encrypted in transit and at rest, and QuickSight supports various compliance standards.

8. Serverless Architecture: QuickSight is a fully managed, serverless service, which means users don’t have to provision or manage any infrastructure. AWS handles scaling, availability, and updates to the service automatically.

QuickSight’s architecture is designed to provide a scalable, secure, and high-performance platform for data visualization and business intelligence, using AWS’s cloud infrastructure and integrating with other AWS services for data processing and machine learning capabilities.

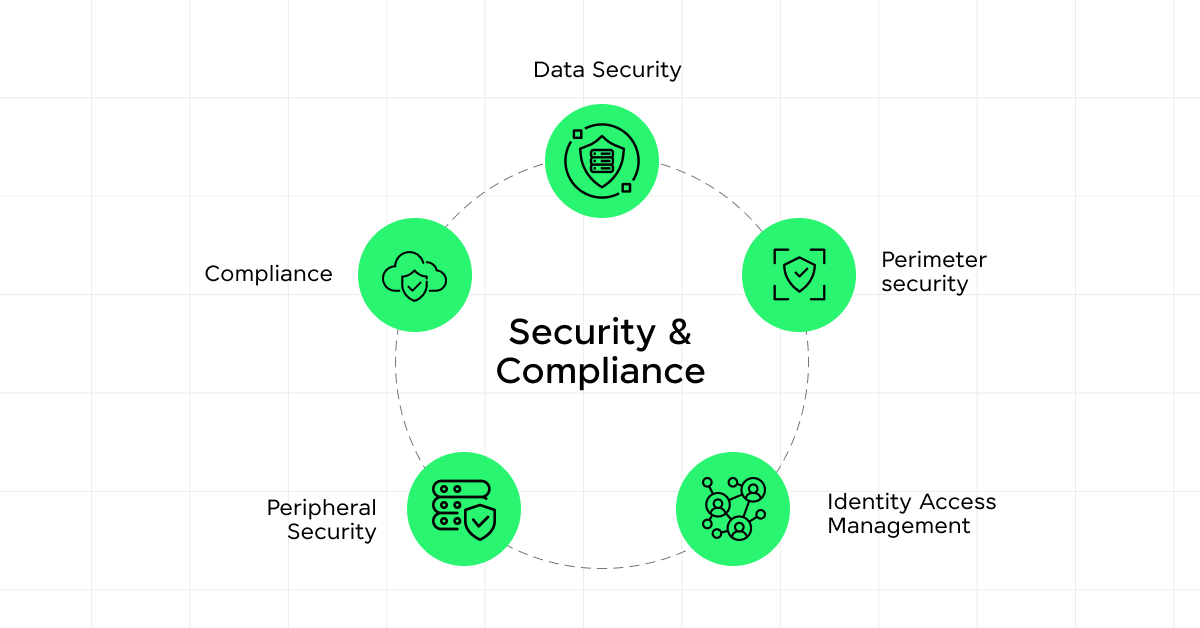

D. Data Security and Compliance

Data security and compliance are important in safeguarding sensitive information and maintaining trust in the digital age.

1. How does AWS IAM help in securing data?

AWS Identity and Access Management (IAM) is a core service that plays an important role in securing data and resources within the AWS ecosystem. Here’s how IAM helps in securing data:

A. User and Group Management

IAM allows the creation of users, groups, and roles with specific permissions, enabling fine-grained access control to AWS resources, including data stored in services like Amazon S3, Amazon RDS, and Amazon Redshift. Users can be assigned individual or group-based policies, ensuring that only authorized personnel can access or modify data.

B. Access Policies and Permissions

IAM policies define the specific actions that users, groups, or roles can perform on AWS resources, including data-related operations like reading, writing, or deleting objects in Amazon S3 or managing database instances in Amazon RDS. These policies can be attached to users, groups, or roles, providing granular control over data access and operations.

C. Multi-Factor Authentication (MFA)

IAM supports multi-factor authentication, which adds an extra layer of security by requiring users to provide a one-time password or code in addition to their regular credentials. This helps prevent unauthorized access to data, even if the user’s credentials are compromised.

D. Temporary Security Credentials

Through IAM roles and the AWS Security Token Service (STS), AWS can issue temporary security credentials (an access key ID, a secret access key, and a session token) with limited permissions and validity periods, allowing secure access to data for specific use cases or applications. This minimizes the risk of long-term credentials being compromised.

E. Logging and Auditing

IAM integrates with other AWS services like AWS CloudTrail, which records API calls made within the AWS account. Management events are logged by default, while data events such as object-level reads and writes in Amazon S3 must be enabled explicitly. This audit trail helps monitor and investigate any unauthorized or suspicious activity related to data access.

By implementing IAM best practices, organizations can enforce the principle of least privilege, ensuring that users and applications have only the necessary permissions to access and operate on data, reducing the risk of unauthorized access or data breaches.
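A least-privilege policy can be sketched as a small JSON document. The helper below builds an identity-based IAM policy that allows listing and reading objects under one S3 prefix and nothing else. The bucket name, the prefix, and the function name `read_only_policy` are illustrative assumptions; the code only constructs the document and does not attach it to any user or role.

```python
import json


def read_only_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy granting read-only access to a single S3 prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is a bucket-level action, narrowed to the prefix.
                "Sid": "ListPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                # Reading is an object-level action on keys under the prefix.
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


policy = read_only_policy(bucket="example-data-lake", prefix="curated/sales")
print(json.dumps(policy, indent=2))
```

Because IAM denies anything not explicitly allowed, a principal holding only this policy cannot write or delete objects, and cannot read outside `curated/sales/`.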

2. Discuss the features of AWS Key Management Service (KMS).

AWS Key Management Service (KMS) is a secure and centralized service that simplifies the creation, management, and usage of cryptographic keys. It is designed to provide a highly available and scalable key management solution for encrypting and decrypting data across various AWS services and applications. Here are the key features of AWS KMS:

A. Key Creation and Management

KMS allows you to create, import, rotate, and securely store cryptographic keys, including symmetric and asymmetric keys. Keys are protected by hardware security modules (HSMs) validated under the FIPS 140 program (FIPS 140-2 at first, and FIPS 140-3 more recently), providing robust protection against unauthorized access.

B. Encryption and Decryption

KMS enables encryption and decryption operations using the managed keys, supporting symmetric AES-256 keys as well as asymmetric RSA and elliptic curve (ECC) keys. Many AWS services integrate with KMS to encrypt data at rest (e.g., in Amazon S3, Amazon EBS, Amazon RDS), typically through envelope encryption, in which KMS protects the data keys that encrypt the data itself. Encryption in transit is generally handled separately by protocols such as TLS rather than by KMS.

C. Key Policies and Access Control

KMS allows you to define key policies that control who can access and use specific keys, as well as the operations they can perform (e.g., encrypt, decrypt, create keys). Integration with AWS IAM enables granular access control over keys based on users, groups, or roles.

D. Auditing and Logging

KMS integrates with AWS CloudTrail, providing detailed logs of all key usage and management activities, which can be used for auditing and compliance purposes.

E. Key Rotation and Re-encryption

KMS supports automatic key rotation, which generates new cryptographic material for a KMS key on a regular schedule (yearly by default) while keeping the older material available so that existing ciphertext can still be decrypted. To move data that is already encrypted to a different key, the ReEncrypt operation decrypts the ciphertext and encrypts it again under the new key entirely within KMS, so the plaintext is never exposed to the caller.

By using AWS KMS, organizations can centrally manage and securely store their encryption keys, simplify key management processes, and maintain control over their data encryption across multiple AWS services and applications, while adhering to industry best practices and compliance standards.
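Key policies, described under "Key Policies and Access Control" above, are JSON documents attached to the key itself. The sketch below builds one that lets the account administer the key through IAM and lets a single role use it for encryption and decryption. The account ID is the placeholder used in AWS documentation, and the role name and the function name `key_policy` are hypothetical; the code only constructs the document.

```python
import json


def key_policy(account_id: str, role_name: str) -> dict:
    """Build a KMS key policy with one administrative and one usage statement."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Lets the account delegate key permissions through IAM policies.
                "Sid": "AllowAccountAdministration",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": "kms:*",
                "Resource": "*",
            },
            {
                # Lets one role use the key for cryptographic operations only.
                "Sid": "AllowUseOfTheKey",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:role/{role_name}"},
                "Action": [
                    "kms:Encrypt",
                    "kms:Decrypt",
                    "kms:GenerateDataKey",
                    "kms:DescribeKey",
                ],
                "Resource": "*",  # in a key policy, "*" refers to this key
            },
        ],
    }


policy_doc = key_policy(account_id="111122223333", role_name="etl-job-role")
print(json.dumps(policy_doc, indent=2))
```

Unlike an identity-based IAM policy, a key policy names its principals explicitly, which is why each statement carries a `Principal` element.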

Conclusion

For further study and enrichment, we recommend exploring additional resources such as AWS documentation, online courses, and community forums. Additionally, consider participating in hands-on projects and networking with fellow professionals to deepen your understanding and broaden your horizons in AWS Data Engineering.

With dedication and determination, the sky’s the limit in your journey towards becoming a proficient AWS Data Engineer. Good luck!

FAQs

Why are AWS Data Engineer interviews important?

AWS Data Engineer interviews assess technical skills, problem-solving abilities, and familiarity with AWS services. They reflect real-world demands and open doors to rewarding career opportunities in data engineering within AWS environments.

How can I prepare effectively?

Use the AWS documentation, practice coding exercises, review common interview questions, and take part in mock interviews. Prioritize hands-on experience with AWS services through personal projects and labs so that you can demonstrate practical skills and proficiency.

What challenges can I expect, and how do I overcome them?

Stay composed, break down complex problems, and communicate your reasoning clearly. Draw on past experiences, emphasize collaboration and adaptability, and keep learning so that you can handle difficult questions and prepare for future opportunities.
