Best Practices for Prompt Engineering in 2025

Oct 27, 2025 (Last Updated)

AI is a major trend today and will remain one in the coming years. More than 35% of companies worldwide already use AI to get better outcomes across many domains. But do you know how to use it effectively, or how to communicate with it?

If not, prompt engineering is the key. It is a must-have skill for every professional who wants to get the best out of AI. If you’re new to this concept and want to explore some of the best practices for prompt engineering, read on to gain a deeper understanding. Let’s begin:

Table of contents

- What is Prompt Engineering?

- Best Practices for Prompt Engineering

- Wrapping Up

- FAQs

  - How do you do perfect prompt engineering?

  - What is the best practice for AI prompts?

  - What skills are needed for prompt engineering?

  - What is the main responsibility of prompt engineers?

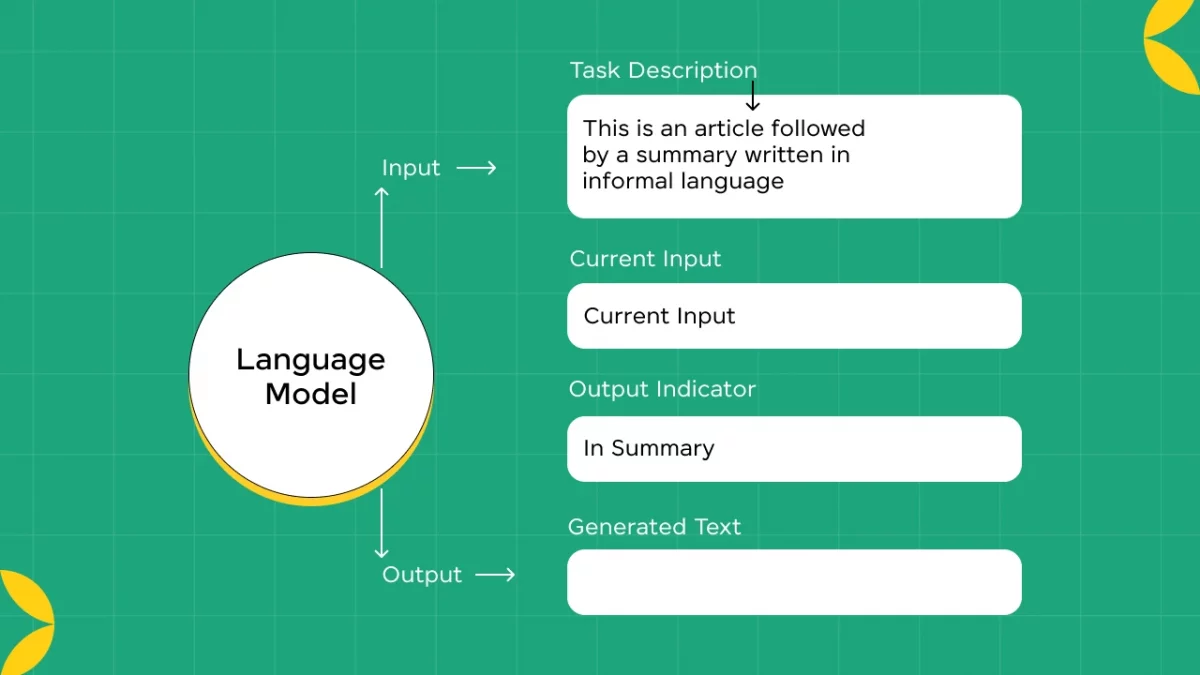

What is Prompt Engineering?

Prompt engineering is a critical aspect of Natural Language Processing (NLP) that involves crafting effective prompts or instructions to guide AI models in generating desired outputs. With the rise of large language models like GPT-3, prompt engineering has become increasingly important for leveraging the full potential of these models in various applications such as text generation, language translation, question answering, and more.

Effective prompt engineering can significantly enhance the performance and usability of AI systems, leading to better outcomes in real-world scenarios.

Continuous experimentation, iteration, and validation are key to refining prompt strategies and achieving desired outcomes in real-world scenarios. As NLP technology continues to evolve, mastering the art of prompt engineering will be increasingly valuable for unlocking the full capabilities of AI-powered applications.

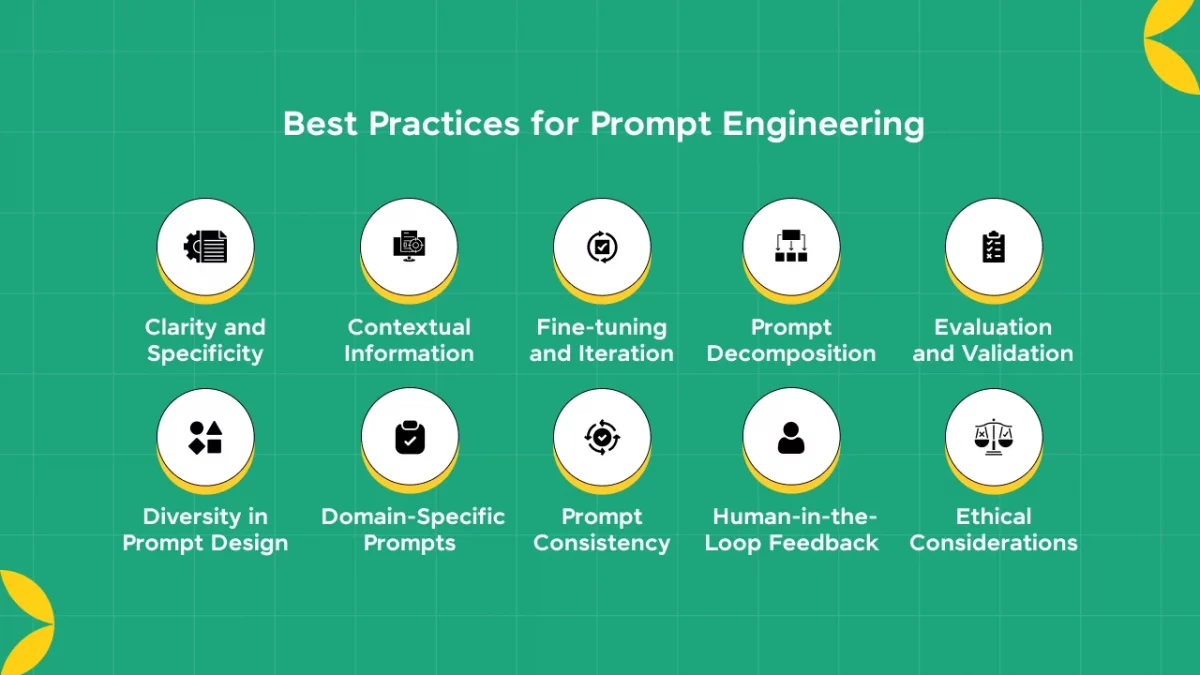

Best Practices for Prompt Engineering

Now let’s look at some of the best practices prompt engineers should follow to get accurate output:

1. Clarity and Specificity

Clear and specific prompts provide unambiguous guidance to AI models, reducing the likelihood of generating irrelevant or nonsensical outputs. Specificity helps in defining the task more precisely, enabling the model to focus its attention on relevant information.

For example, instead of asking for a “summary of a book,” a clearer and more specific prompt would be “Summarize the plot and main themes of ‘To Kill a Mockingbird’ by Harper Lee.”

2. Contextual Information

Contextual information provides additional cues or constraints to help the model understand the task requirements better. This can include background information, relevant facts, or specific parameters that influence the desired output. For instance, when asking for a weather forecast, providing contextual details such as location, date, and time enhances the accuracy and relevance of the generated forecast.

Example: “Based on the customer’s recent inquiry about a delayed shipment, provide an empathetic response and offer a discount code for their next purchase.”
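One practical way to supply context is to keep the instruction fixed and fill in the situation-specific details from data you already have. Here is a minimal Python sketch of that idea for the delayed-shipment example; the `Inquiry` fields, the `build_support_prompt` helper, and the sample values are all hypothetical and not tied to any particular AI service.

```python
from dataclasses import dataclass


@dataclass
class Inquiry:
    # Hypothetical fields describing the customer's recent inquiry
    customer_name: str
    order_id: str
    days_delayed: int
    discount_code: str


def build_support_prompt(inquiry: Inquiry) -> str:
    """Combine a fixed instruction with context about this specific customer."""
    context = (
        f"Customer: {inquiry.customer_name}\n"
        f"Order: {inquiry.order_id}\n"
        f"Issue: shipment delayed by {inquiry.days_delayed} days\n"
        f"Discount code to offer: {inquiry.discount_code}"
    )
    instruction = (
        "Based on the customer's recent inquiry about a delayed shipment, "
        "write an empathetic response and offer the discount code above "
        "for their next purchase."
    )
    return f"Context:\n{context}\n\nTask:\n{instruction}"


# Hypothetical sample inquiry
print(build_support_prompt(Inquiry("Priya", "A-1042", 3, "SORRY10")))
```

Separating the context block from the task block makes it easy to see exactly which facts the model was given when you review a response later.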

3. Fine-tuning and Iteration

Fine-tuning involves adapting pre-trained models to specific tasks or domains by providing task-specific examples or data. Iterative refinement of prompts based on the model’s outputs allows developers to progressively improve performance and address shortcomings. Fine-tuning and iteration are essential for optimizing model performance and achieving desired outcomes in real-world applications.

Example: Initial: “Generate a scientific abstract.” Refined: “Summarize key findings of a research paper on renewable energy technologies.”
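Fine-tuning changes the model itself and is not shown here; the iteration half, though, can be sketched as a loop that checks each output and tightens the prompt when a check fails. This is a minimal Python illustration under stated assumptions: `fake_model` is a hypothetical stand-in for a real model call, and the single `on_topic` check and its fix text are made up for the renewable-energy example above.

```python
from typing import Callable, Dict, List, Tuple


def refine_prompt(
    prompt: str,
    model: Callable[[str], str],
    checks: Dict[str, Callable[[str], bool]],
    fixes: Dict[str, str],
    max_rounds: int = 3,
) -> Tuple[str, List[Tuple[str, List[str]]]]:
    """Run the prompt; after each round with failed checks, append the matching fixes."""
    history: List[Tuple[str, List[str]]] = []
    for round_no in range(1, max_rounds + 1):
        output = model(prompt)
        failed = [name for name, check in checks.items() if not check(output)]
        history.append((prompt, failed))  # keep every version for comparison
        if not failed or round_no == max_rounds:
            break
        # Refine: add the instruction that addresses each failed check
        prompt = prompt + " " + " ".join(fixes[name] for name in failed)
    return prompt, history


# Hypothetical stand-in for a real model: it stays generic until the prompt names the topic
def fake_model(prompt: str) -> str:
    if "renewable energy" in prompt:
        return "Summary of key findings on renewable energy technologies."
    return "A generic scientific abstract."


# Hypothetical check and the prompt fix applied when it fails
checks = {"on_topic": lambda output: "renewable energy" in output.lower()}
fixes = {"on_topic": "Summarize key findings of a research paper on renewable energy technologies."}

final_prompt, history = refine_prompt("Generate a scientific abstract.", fake_model, checks, fixes)
print(final_prompt)
```

Keeping the full `history` lets you compare prompt versions side by side instead of only seeing the last one.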

4. Prompt Decomposition

Breaking down complex tasks into smaller, more manageable sub-tasks or prompts helps the model better understand and address each component individually. Prompt decomposition facilitates clearer communication of task requirements and allows for more focused processing, leading to improved overall performance.

For instance, decomposing a text generation task into prompts for introduction, body paragraphs, and conclusion can help maintain coherence and structure in the generated text.

Example: Instead of one prompt for a contract, decompose: “Define parties involved,” “Specify terms and conditions,” “Outline payment terms.”
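The contract example can be sketched in Python as one focused prompt per sub-task, each sharing the same background context, with the answers joined in order afterwards. The sub-task list comes from the example above; the prompt wording, the `decompose` and `draft_contract` helpers, and `echo_model` (a stand-in for a real model call) are hypothetical.

```python
from typing import Callable, List

# Sub-tasks from the contract example above
SUB_TASKS = [
    "Define parties involved",
    "Specify terms and conditions",
    "Outline payment terms",
]


def decompose(context: str, sub_tasks: List[str]) -> List[str]:
    """Turn one large request into one focused prompt per sub-task."""
    return [
        f"{context}\n\nWrite only this section of the contract: {task}."
        for task in sub_tasks
    ]


def draft_contract(context: str, model: Callable[[str], str]) -> str:
    """Run each sub-prompt separately and join the sections in order."""
    sections = [model(p) for p in decompose(context, SUB_TASKS)]
    return "\n\n".join(sections)


# Hypothetical stand-in for a real model: echoes back the requested section
def echo_model(prompt: str) -> str:
    return "[Section] " + prompt.rsplit(": ", 1)[-1]


print(draft_contract("Service agreement between a design agency and a retail client.", echo_model))
```

Because every sub-prompt repeats the shared context, each section can be generated, reviewed, or regenerated on its own without losing track of the overall contract.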

5. Evaluation and Validation

Regular evaluation and validation of model outputs against predefined criteria are crucial for assessing performance and identifying areas for improvement. Evaluation metrics such as accuracy, coherence, and relevance can provide quantitative insights into model performance, while human evaluation allows for qualitative assessment of output quality. Continuous evaluation and validation help ensure that the model meets the desired standards and objectives.

Example: Use metrics like accuracy, precision, and recall to assess a sentiment analysis model’s performance against labeled reviews.
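For the sentiment example, the three metrics can be computed directly from the model’s labels and the human labels. Here is a small Python sketch, assuming two labels ("positive"/"negative") and treating "positive" as the class whose precision and recall we measure; the sample labels are hypothetical.

```python
from typing import Dict, List


def evaluate(predicted: List[str], actual: List[str], positive: str = "positive") -> Dict[str, float]:
    """Accuracy over all labels, plus precision and recall for the chosen class."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("predicted and actual must be non-empty and the same length")
    pairs = list(zip(predicted, actual))
    tp = sum(p == positive and a == positive for p, a in pairs)  # true positives
    fp = sum(p == positive and a != positive for p, a in pairs)  # false positives
    fn = sum(p != positive and a == positive for p, a in pairs)  # false negatives
    correct = sum(p == a for p, a in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical model predictions vs. human-labeled reviews
predicted = ["positive", "negative", "positive", "positive", "negative"]
actual = ["positive", "negative", "negative", "positive", "positive"]
print(evaluate(predicted, actual))
```

For these five hypothetical reviews, three labels match (accuracy 3/5), and precision and recall for "positive" both come out to 2/3. Running the same labeled set after every prompt change gives you a like-for-like comparison.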

6. Diversity in Prompt Design

Experimenting with diverse prompt designs allows developers to explore different approaches and strategies for eliciting desired responses from the model. Variations in prompt structure, format, language, and style can influence the model’s behavior and output, providing insights into the effectiveness of different prompt designs. Diversity in prompt design fosters creativity and innovation in prompt engineering, leading to more robust and versatile AI systems.

Example: Experiment with open-ended (“Write a story about…”) and constrained prompts (“Incorporate these words into a poem:…”) to stimulate creativity.
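To experiment systematically rather than ad hoc, you can generate every combination of prompt structure and style and run them all on the same input. This Python sketch adapts the two structures from the example above (adding a topic placeholder); the style variations and the `prompt_variants` helper are hypothetical.

```python
from itertools import product
from typing import Dict, List

# The two prompt structures from the example, adapted with a {topic} placeholder
STRUCTURES: Dict[str, str] = {
    "open_ended": "Write a story about {topic}.",
    "constrained": "Incorporate these words into a poem about {topic}: {words}.",
}
# Hypothetical style variations to combine with each structure
STYLES: List[str] = ["", " Keep it under 100 words.", " Use a playful tone."]


def prompt_variants(topic: str, words: List[str]) -> Dict[str, str]:
    """Build every structure x style combination so their outputs can be compared."""
    variants: Dict[str, str] = {}
    for (name, template), style in product(STRUCTURES.items(), STYLES):
        key = name if not style else f"{name}|{style.strip()}"
        # str.format ignores keyword arguments that a template does not use
        variants[key] = template.format(topic=topic, words=", ".join(words)) + style
    return variants


for key, prompt in prompt_variants("the sea", ["tide", "salt", "lantern"]).items():
    print(f"{key}: {prompt}")
```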

7. Domain-Specific Prompts

Tailoring prompts to specific domains or tasks enhances the model’s understanding and performance in domain-specific contexts. Domain-specific prompts may incorporate specialized terminology, constraints, or requirements relevant to the task at hand. By aligning prompts with the target domain, developers can improve the accuracy, relevance, and usefulness of the model’s outputs for domain-specific applications.

Example: For medical diagnosis support, build the prompt around the patient’s demographics, symptoms, and medical history, tailored to the specific condition being assessed.
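A domain-specific prompt for the medical example might use structured patient fields, clinical terminology, and explicit constraints on what the model should and should not do. The Python sketch below is illustrative only: the `PatientCase` fields, the `clinical_prompt` helper, and the constraint wording (including not presenting output as a final diagnosis) are assumptions, not a validated clinical template.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PatientCase:
    # Hypothetical structure; a real system would follow its own clinical schema
    age: int
    sex: str
    symptoms: List[str]
    history: List[str] = field(default_factory=list)


def clinical_prompt(case: PatientCase, condition_focus: str) -> str:
    """Frame the request with domain fields, terminology, and explicit constraints."""
    history = "; ".join(case.history) if case.history else "none reported"
    return (
        "You are assisting a licensed clinician.\n"
        f"Patient: {case.age}-year-old {case.sex}\n"
        f"Presenting symptoms: {', '.join(case.symptoms)}\n"
        f"Relevant medical history: {history}\n"
        f"Focus: assess findings relevant to {condition_focus}.\n"
        "List differential considerations with brief reasoning for each, "
        "and flag anything that needs urgent attention. "
        "Do not present the output as a final diagnosis."
    )


# Hypothetical patient case
case = PatientCase(54, "male", ["chest pain", "shortness of breath"], ["hypertension"])
print(clinical_prompt(case, "cardiovascular conditions"))
```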

8. Prompt Consistency

Maintaining consistency in prompt formulation across different tasks or iterations promotes reproducibility and comparability of results. Consistent prompts provide a standardized framework for evaluating model performance and tracking changes over time. By adhering to consistent prompt design practices, developers can ensure reliability and integrity in the evaluation and optimization of AI models.

Example: Keep appointment scheduling prompts consistent by always asking for the same fields: date, time, and reason for the appointment.
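A simple way to enforce consistency is a single shared template that every request must fill. This Python sketch uses the standard library’s `string.Template`, whose `substitute()` raises `KeyError` when a placeholder has no value, so an incomplete request fails loudly instead of producing a slightly different prompt. The template wording and the `appointment_prompt` helper are hypothetical.

```python
from string import Template

# One shared template reused for every scheduling request (wording is hypothetical)
APPOINTMENT_PROMPT = Template(
    "Schedule an appointment.\n"
    "Date: $date\n"
    "Time: $time\n"
    "Reason for appointment: $reason\n"
    "Reply with a one-line confirmation."
)


def appointment_prompt(fields: dict) -> str:
    """Fill the shared template; substitute() raises KeyError if a field is missing."""
    return APPOINTMENT_PROMPT.substitute(fields)


print(appointment_prompt({"date": "2025-11-03", "time": "10:30", "reason": "follow-up visit"}))
```

If you would rather leave missing fields visible as `$placeholders` instead of failing, `safe_substitute()` does that, at the cost of letting inconsistent prompts through.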

9. Human-in-the-Loop Feedback

Incorporating feedback from human annotators or end-users enables the validation of model outputs and refinement of prompt strategies based on real-world use cases and preferences. Human-in-the-loop feedback provides valuable insights into the usability, relevance, and quality of generated outputs, guiding iterative improvements in prompt design and model performance.

By engaging with human feedback, developers can ensure that AI systems meet user expectations and address real-world needs effectively.

Example: In a language learning app, have users rate the accuracy and helpfulness of generated exercises, and use those ratings to refine the prompts.
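Once users rate outputs, the ratings can be grouped by prompt version to decide which version to keep. Here is a minimal Python sketch, assuming a hypothetical 1 to 5 scale for accuracy and helpfulness and made-up version names and ratings; weighting the two scores equally is a design choice you may want to change.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Optional, Tuple

# Hypothetical user ratings: (prompt_version, accuracy 1-5, helpfulness 1-5)
RATINGS: List[Tuple[str, int, int]] = [
    ("v1", 3, 2),
    ("v1", 4, 3),
    ("v2", 4, 4),
    ("v2", 5, 4),
]


def summarize(ratings: List[Tuple[str, int, int]]) -> Dict[str, Dict[str, float]]:
    """Average the accuracy and helpfulness ratings for each prompt version."""
    grouped: Dict[str, List[Tuple[int, int]]] = defaultdict(list)
    for version, accuracy, helpfulness in ratings:
        grouped[version].append((accuracy, helpfulness))
    return {
        version: {
            "accuracy": mean(a for a, _ in scores),
            "helpfulness": mean(h for _, h in scores),
            "n": len(scores),
        }
        for version, scores in grouped.items()
    }


def best_version(summary: Dict[str, Dict[str, float]], min_ratings: int = 2) -> Optional[str]:
    """Pick the version with the highest combined average, ignoring thinly rated ones."""
    eligible = {v: s for v, s in summary.items() if s["n"] >= min_ratings}
    if not eligible:
        return None
    return max(eligible, key=lambda v: eligible[v]["accuracy"] + eligible[v]["helpfulness"])


print(best_version(summarize(RATINGS)))
```

The `min_ratings` threshold keeps a version with one lucky rating from winning; in practice you would set it much higher than 2.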

10. Ethical Considerations

Considering ethical implications in prompt design is essential for ensuring responsible and ethical AI deployment. Ethical considerations may include addressing biases, promoting fairness and equity, and mitigating potential societal impacts of AI-generated outputs.

By incorporating ethical principles into prompt engineering practices, developers can help mitigate risks and ensure that AI systems uphold ethical standards and values in their interactions with users and society at large.

Example: Write job application prompts in neutral language that avoids gendered terms and stereotypes, so that all candidates get equitable opportunities.
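A lightweight automated check can catch some gendered wording before a prompt is used, although it is no substitute for human review. This Python sketch scans a prompt against a small word list and suggests neutral alternatives; the list itself is a hypothetical, deliberately short example, and a real review would rely on a vetted and much broader one.

```python
import re
from typing import Dict, List, Tuple

# Small illustrative list (hypothetical); real reviews need a vetted, broader list
NEUTRAL_ALTERNATIVES: Dict[str, str] = {
    "salesman": "salesperson",
    "chairman": "chairperson",
    "manpower": "workforce",
    "he or she": "they",
    "his or her": "their",
}


def flag_gendered_terms(prompt: str) -> List[Tuple[str, str]]:
    """Return (term, suggestion) pairs for each listed term found in the prompt."""
    found = []
    for term, suggestion in NEUTRAL_ALTERNATIVES.items():
        # \b word boundaries avoid matching inside longer words
        if re.search(rf"\b{re.escape(term)}\b", prompt, flags=re.IGNORECASE):
            found.append((term, suggestion))
    return found


print(flag_gendered_terms("Write a job ad for a Salesman; he or she must travel."))
```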

Wrapping Up

By adhering to these best practices, developers can effectively engineer prompts that guide AI models toward generating accurate, relevant, and contextually appropriate outputs across a wide range of applications and domains. Each best practice contributes to effective prompt engineering and facilitates the development of robust and reliable AI systems.

FAQs

How do you do perfect prompt engineering?

To write an effective prompt, follow these steps:

1. Be specific and clear

2. Give clear instructions

3. Provide an example of what you want

4. Provide relevant data if needed

5. Specify the expected output format
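The five steps above can be combined into a reusable prompt builder. This is a minimal Python sketch; the section labels ("Task", "Instructions", "Example", "Data", "Output format") and the `build_prompt` helper are hypothetical choices, not a standard format, and empty parts are simply left out.

```python
from typing import List, Optional


def build_prompt(
    task: str,
    instructions: List[str],
    example: Optional[str] = None,
    data: Optional[str] = None,
    output_format: Optional[str] = None,
) -> str:
    """Assemble a prompt from the five steps; parts left empty are skipped."""
    parts = [f"Task: {task}"]  # step 1: a specific, clear task
    if instructions:  # step 2: explicit instructions
        parts.append("Instructions:\n" + "\n".join(f"- {step}" for step in instructions))
    if example:  # step 3: an example of what you want
        parts.append(f"Example:\n{example}")
    if data:  # step 4: supporting data, only when needed
        parts.append(f"Data:\n{data}")
    if output_format:  # step 5: the expected output format
        parts.append(f"Output format: {output_format}")
    return "\n\n".join(parts)


print(build_prompt(
    "Summarize the plot and main themes of 'To Kill a Mockingbird' by Harper Lee.",
    ["Keep it under 150 words.", "Name at least two themes."],
    output_format="Two short paragraphs: plot first, then themes.",
))
```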

What is the best practice for AI prompts?

One of the most important practices is to be very clear about the output you expect. Give the AI instructions as plainly as if you were explaining the task to a five-year-old.

What skills are needed for prompt engineering?

Skills needed for prompt engineering:

- Writing

- Knowledge of prompting techniques

- Understanding your end-users

What is the main responsibility of prompt engineers?

The main responsibility of a prompt engineer is to develop, refine, and optimize the text prompts given to AI models so that they produce accurate results.
