Python Libraries for Data Science: What Top Companies Actually Use in 2025

Last updated: Apr 08, 2025 | 7 min read

Python rules the data science world with its rich ecosystem of 137,000+ libraries. The sheer number of these data science tools has pushed Python to become the third most popular programming language among developers globally.

Knowing Python and what its many libraries can do is therefore a crucial step for your career and a key to landing roles at top companies.

To make this simpler, this blog covers the Python libraries for data science that top companies use right now. You’ll learn which tools deserve space in your technical toolkit and how to make the most of them.

Table of contents

- What Makes Python the Top Choice for Data Science

- Why companies prefer Python

- Python vs other programming languages

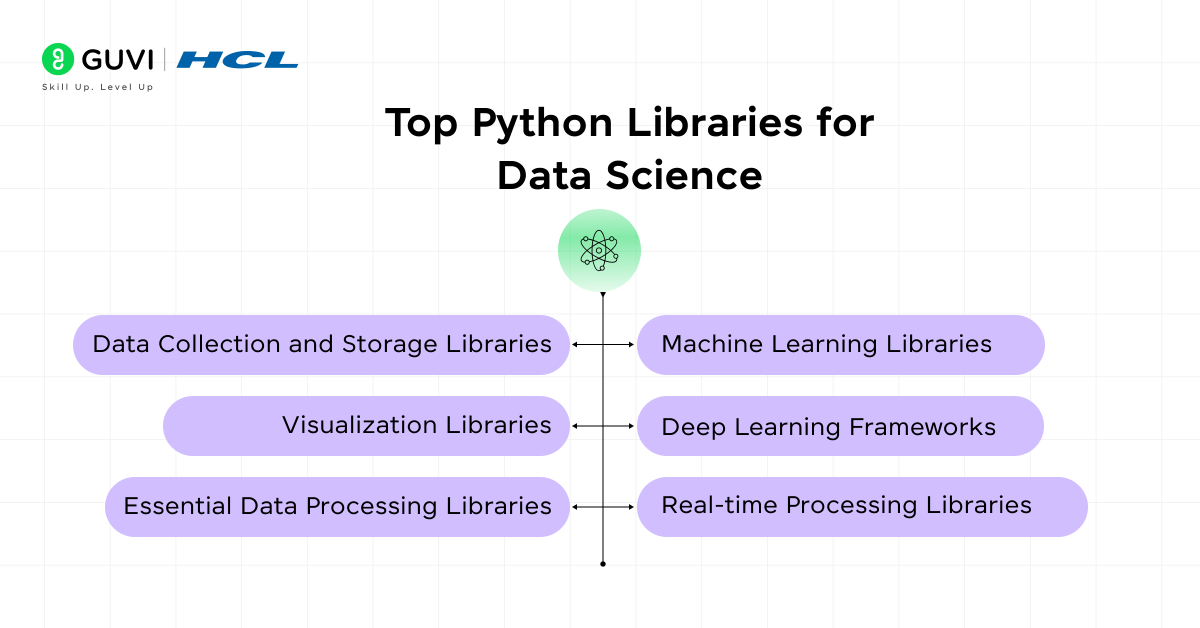

- The Top Python Libraries for Data Science

- 1) Data Collection and Storage Libraries

- 2) Essential Data Processing Libraries

- 3) Visualization Libraries

- 4) Machine Learning Libraries

- 5) Deep Learning Frameworks

- 6) Real-time Processing Libraries

- Concluding Thoughts…

- FAQs

- Q1. What are the most essential Python libraries for data science?

- Q2. How does Python compare to other programming languages for data science?

- Q3. What are the key differences between TensorFlow and PyTorch?

- Q4. How can data scientists handle real-time processing and deployment?

What Makes Python the Top Choice for Data Science

Tech giants around the world now use Python as their go-to programming language for data science. A recent survey of 1,000 data scientists shows that Python has taken the lead over R as the most popular language to analyze data.

Why companies prefer Python

Facebook, Google, Netflix, and Spotify depend on Python to power their data science operations. Companies choose Python because its syntax is easy to read and understand. Python programs are typically 3-5 times shorter than equivalent Java programs and 5-10 times shorter than equivalent C++ programs.

Python’s open-source foundation means there are no licensing fees, and users get access to a vast collection of libraries. The language works well with web applications, cloud computing platforms, and Hadoop – the most popular open-source big data platform.

Key advantages that make Python the preferred choice:

- Code syntax that reads like everyday language

- Works on Windows, Linux, and Unix systems

- Dynamic typing speeds up development

- Rich tools for data manipulation and analysis

- Works great with machine learning frameworks

Python vs other programming languages

Python stands out from other programming languages in several ways. Java programs generally run faster, but Python programs take much less time to develop. Python’s dynamic typing costs some runtime performance, because types are checked while the program runs, but it offers more flexibility than Java’s strict rules for variables.

Python beats R in general-purpose applications and machine learning tasks. R still dominates statistical work, but Python has become the favorite for AI and deep learning projects. This is because developers create most new AI/ML tools in Python first.

JavaScript’s object model is prototype-based, whereas Python offers class-based object-oriented programming with multiple inheritance. Python puts clear, maintainable code first, unlike Perl, which emphasizes built-in support for application tasks such as regular expressions and text processing.

The Top Python Libraries for Data Science

Python does more than just data science: it is also widely used for web development, automation, and software testing. This flexibility and its vast library ecosystem help data scientists connect their work with larger technical systems. Let’s look at the top libraries you should know to excel in a data science career.

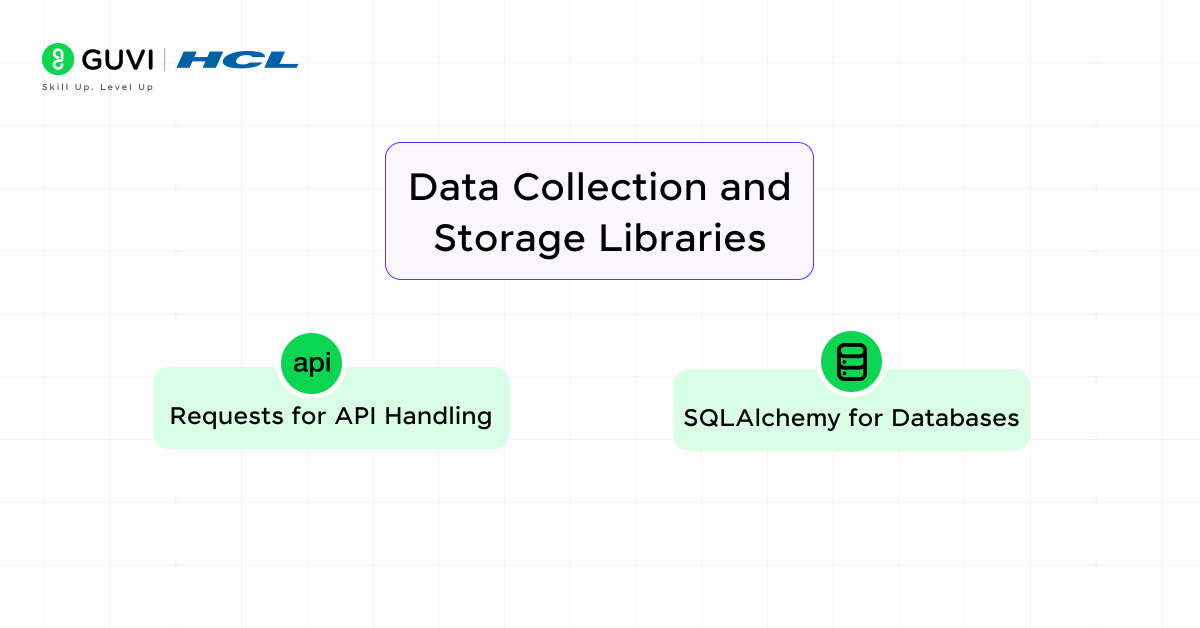

1) Data Collection and Storage Libraries

Data collection and storage are the foundations of any data science project. Python developers rely on two significant libraries to handle these tasks: Requests for API interactions and SQLAlchemy for database management.

a) Requests for API Handling

APIs (Application Programming Interfaces) are essential for accessing real-time data from web services, cloud platforms, and internal systems. The Requests library is the most popular choice for handling API communication in Python. It simplifies sending HTTP requests, retrieving JSON or XML responses, and handling authentication securely.

Key features of Requests:

- Simple syntax for making GET, POST, PUT, and DELETE requests.

- Automatic handling of sessions, cookies, and redirects.

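- Support for authentication, including built-in HTTP Basic and Digest auth, API keys sent as headers or query parameters, and OAuth through the companion requests-oauthlib package.

- Clear exceptions for failed requests, plus a per-request timeout setting.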
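
The features above can be sketched in a few lines. This is a minimal illustration, not a production client: the endpoint `https://api.example.com/v1/items`, the helper name `fetch_json`, and the bearer-token header are assumptions made for the example, and a real API may expect a different authentication scheme.

```python
import requests

API_URL = "https://api.example.com/v1/items"  # hypothetical endpoint, for illustration only


def fetch_json(url, params=None, api_key=None, timeout=10.0, session=None):
    """GET a URL and return the decoded JSON body, raising on HTTP errors."""
    headers = {"Accept": "application/json"}
    if api_key:
        # Assumes the service accepts a bearer token; real APIs vary.
        headers["Authorization"] = f"Bearer {api_key}"
    # Reuse a Session when one is supplied (keeps cookies and connections).
    http = session if session is not None else requests
    response = http.get(url, params=params, headers=headers, timeout=timeout)
    response.raise_for_status()  # turns 4xx/5xx responses into requests.HTTPError
    return response.json()


# Build (but do not send) a request to see how query parameters are encoded.
preview = requests.Request("GET", API_URL, params={"page": 2, "q": "data"}).prepare()
print(preview.url)
```

Calling `fetch_json(API_URL, params={"page": 2})` would send the real request. Wrapping that call in `try/except requests.RequestException` catches timeouts, connection errors, and HTTP errors in one place.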

b) SQLAlchemy for Databases

Most companies rely on structured databases to store and manage large volumes of data efficiently. SQLAlchemy is a powerful Python library that provides an Object Relational Mapper (ORM) and SQL toolkit, making database interactions more flexible and scalable.

Key features of SQLAlchemy:

- ORM allows developers to interact with databases using Python objects instead of writing raw SQL queries.

- Supports multiple database backends, including PostgreSQL, MySQL, SQLite, and Microsoft SQL Server.

- Efficient connection pooling for handling high-performance applications.

- Pairs with Alembic, its companion migration tool, for database migrations and schema changes.
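
As a rough sketch of the ORM workflow described above, the example below maps a Python class to a table, inserts rows, and queries them back. The `Reading` model and its columns are hypothetical, and an in-memory SQLite database stands in for a real backend such as PostgreSQL.

```python
from sqlalchemy import Column, Integer, String, create_engine, select
from sqlalchemy.orm import Session, declarative_base

Base = declarative_base()


class Reading(Base):
    """Hypothetical table of sensor readings, used only for this example."""
    __tablename__ = "readings"

    id = Column(Integer, primary_key=True)
    sensor = Column(String, nullable=False)
    value = Column(Integer, nullable=False)


# In-memory SQLite keeps the sketch self-contained; swap the URL for a real database.
engine = create_engine("sqlite:///:memory:")
Base.metadata.create_all(engine)

with Session(engine) as session:
    session.add_all([
        Reading(sensor="a", value=3),
        Reading(sensor="b", value=7),
        Reading(sensor="a", value=5),
    ])
    session.commit()

    # The query is built from Python objects rather than a raw SQL string.
    stmt = select(Reading).where(Reading.sensor == "a").order_by(Reading.value)
    values_for_a = [row.value for row in session.execute(stmt).scalars()]

print(values_for_a)
```

Because only the connection URL names the backend, the same model and query code should carry over to other supported databases with little or no change.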

By combining Requests for data retrieval and SQLAlchemy for structured storage, companies can build efficient data pipelines that streamline the entire data science workflow.

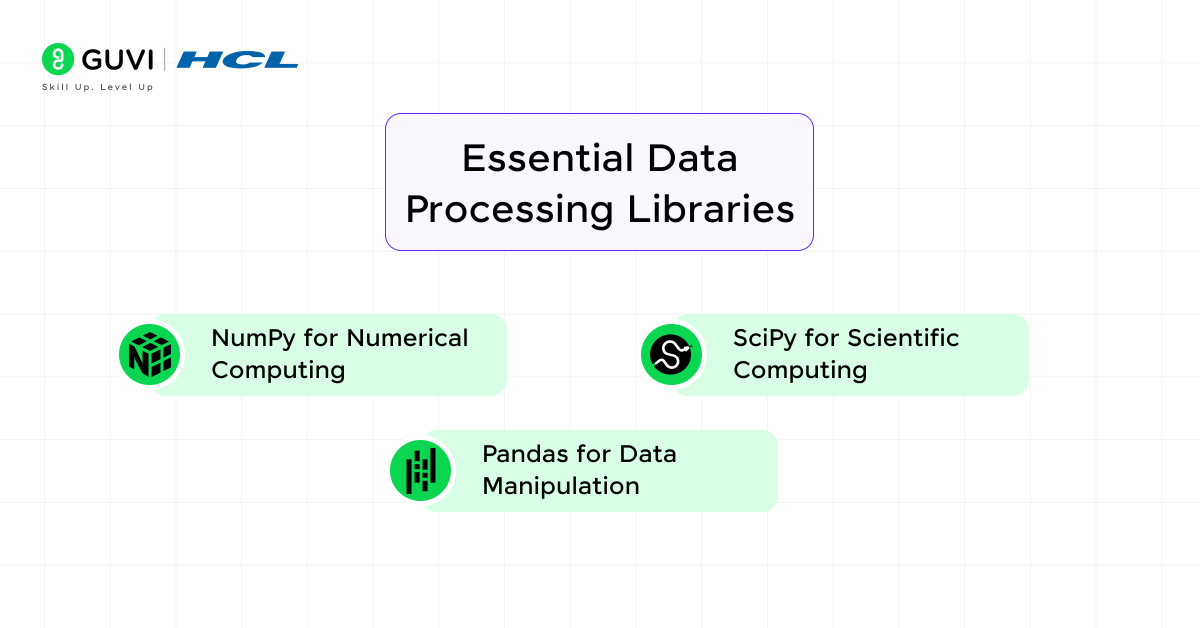

2) Essential Data Processing Libraries

Python’s core processing libraries are the foundation of any data-driven project. They handle the heavy lifting of data manipulation and numerical work, from simple calculations on structured datasets to complex scientific computations. Let’s explore the essential data processing libraries that top companies rely on in 2025.

a) NumPy for Numerical Computing

NumPy (Numerical Python) is the fundamental package for numerical computing in Python. It provides support for large, multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these data structures efficiently. NumPy is highly optimized for performance, making it a critical tool for handling numerical data in data science and machine learning applications.

Key Features of NumPy:

- Efficient array operations with ndarray, enabling fast computations.

- Built-in mathematical functions, including linear algebra and statistical operations.

- Broadcasting mechanism that allows operations on arrays of different shapes.

- Seamless integration with other scientific computing libraries like SciPy and Pandas.

- Support for vectorized operations, reducing the need for explicit loops and improving performance.
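
Broadcasting and vectorization are easier to see in code. The small array below is made-up data; the sketch centers each column on its mean and squares every element without writing an explicit loop.

```python
import numpy as np

data = np.array([[1.0, 2.0, 3.0],
                 [4.0, 5.0, 6.0]])

# Column means have shape (3,); broadcasting stretches them across both rows.
col_means = data.mean(axis=0)
centered = data - col_means

# Vectorized: one expression squares every element in compiled code.
squares = data ** 2

# The equivalent explicit loop, shown only for comparison.
loop_squares = [[v * v for v in row] for row in data]

print(centered)
print(np.allclose(squares, loop_squares))
```

On arrays this small the difference is negligible, but on large arrays the vectorized form is usually much faster than the Python loop.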

b) Pandas for Data Manipulation

Pandas is the go-to library for working with structured data, such as spreadsheets, CSV files, and SQL tables. It provides powerful data structures like DataFrames and Series, allowing users to clean, transform, and analyze datasets with ease. Pandas is widely used in data science, financial analysis, and business intelligence.

Key Features of Pandas:

- Provides DataFrame and Series objects for structured data manipulation.

- Supports reading and writing data from multiple sources, including CSV, Excel, and SQL databases.

- Built-in functions for handling missing data, filtering, and aggregating large datasets.

- Time-series functionality for analyzing trends and seasonal patterns.

- Highly optimized with NumPy integration for efficient numerical operations.
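
A short sketch of a typical Pandas workflow: load CSV data, handle a missing value, and aggregate by group. The CSV text and column names are invented for illustration, and filling the gap with zero is just one possible policy.

```python
import io

import pandas as pd

# Inline CSV keeps the example self-contained; pd.read_csv also accepts file paths.
csv_text = "region,revenue\nnorth,120\nsouth,\nnorth,80\nsouth,50\n"
sales = pd.read_csv(io.StringIO(csv_text))

# The empty field is parsed as NaN (a missing value).
missing_before = int(sales["revenue"].isna().sum())

# Treat missing revenue as zero, then total revenue per region.
sales["revenue"] = sales["revenue"].fillna(0.0)
totals = sales.groupby("region")["revenue"].sum()

print(missing_before)
print(totals)
```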

c) SciPy for Scientific Computing

SciPy builds on NumPy and provides additional functionality for scientific computing, including optimization, interpolation, integration, and signal processing. It is essential for researchers and engineers working on complex mathematical models, simulations, and algorithm development.

Key Features of SciPy:

- Advanced linear algebra and optimization functions for numerical computations.

- Statistical and probabilistic modeling tools for data science applications.

- Signal processing capabilities for filtering, transformation, and feature extraction.

- Interpolation and integration functions for mathematical and scientific analysis.

- Built-in image processing modules for computer vision and medical imaging applications.
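
To illustrate the optimization and integration features listed above, here is a minimal sketch using two toy functions chosen for this example: it finds the minimum of a parabola and computes a definite integral numerically.

```python
from scipy import integrate, optimize


def cost(x):
    """Toy objective with its minimum at x = 2."""
    return (x - 2.0) ** 2 + 1.0


# Optimization: search for the x that minimizes a one-dimensional function.
result = optimize.minimize_scalar(cost)

# Integration: area under x^2 between 0 and 3, which is 9 analytically.
area, abs_error = integrate.quad(lambda x: x ** 2, 0.0, 3.0)

print(result.x, result.fun)
print(area, abs_error)
```

`quad` returns both the estimate and an error bound, which gives a quick check on whether the numerical result can be trusted.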

These essential data processing libraries—NumPy, Pandas, and SciPy—are indispensable tools in data science. They empower companies to handle vast amounts of data efficiently, perform complex computations, and derive valuable insights for decision-making in 2025.


3) Visualization Libraries

Data scientists must know how to create compelling visualizations. Python offers powerful visualization libraries that enable professionals to create a wide range of charts, graphs, and plots. Two of the most widely used libraries for visualization in data science are Matplotlib and Seaborn.

a) Matplotlib

Matplotlib is the most fundamental and widely used plotting library in Python. It provides extensive customization options, allowing users to create static, animated, and interactive visualizations. Whether it’s line plots, bar charts, scatter plots, or histograms, Matplotlib offers full control over every element of a graph, making it a preferred choice for data scientists and engineers.

Key Features of Matplotlib:

- Highly Customizable – Users can modify colors, labels, line styles, grid settings, and more.

- Supports Multiple Plot Types – Includes basic charts like line plots, bar graphs, histograms, and more complex visualizations.

- Integration with Pandas and NumPy – Works seamlessly with data structures like Pandas DataFrames and NumPy arrays.

- Interactive and Animated Plots – Supports interactive backends for zooming and panning, along with animated visualizations.

- Export in Multiple Formats – Save visualizations as PNG, SVG, PDF, and other formats.
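
The customization and export options above can be sketched as follows. The data is a made-up quadratic, and the non-interactive `Agg` backend is an assumption so that the example also runs on machines without a display.

```python
import io

import matplotlib

matplotlib.use("Agg")  # render off-screen; no window is opened
import matplotlib.pyplot as plt

x = [0, 1, 2, 3, 4]
y = [v ** 2 for v in x]

fig, ax = plt.subplots(figsize=(4, 3))
ax.plot(x, y, color="tab:blue", linestyle="--", marker="o", label="y = x^2")
ax.set_xlabel("x")
ax.set_ylabel("y")
ax.set_title("A customized line plot")
ax.grid(True)
ax.legend()

# Save to an in-memory buffer; a file path such as "plot.png" works the same way.
buffer = io.BytesIO()
fig.savefig(buffer, format="png")
plt.close(fig)
png_bytes = buffer.getvalue()
```

Passing `format="svg"` or `format="pdf"` to `savefig` produces the other export formats.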

b) Seaborn for Statistical Visualization

Seaborn is built on top of Matplotlib and provides a higher-level, more aesthetically pleasing interface for creating statistical visualizations. It is specifically designed for analyzing relationships between variables, making it highly useful in data science applications like regression analysis and categorical data visualization.

Key Features of Seaborn:

- Built-in Statistical Functions – Supports visualizing distributions, correlations, and regression models.

- Beautiful Default Styles – Offers pre-defined themes for polished and professional-looking plots.

- Automatic Data Aggregation – Simplifies working with large datasets by grouping and summarizing data automatically.

- Seamless Integration with Pandas – Works effortlessly with Pandas DataFrames, making it easy to visualize structured data.

- Specialized Plots for Data Analysis – Includes heatmaps, violin plots, pair plots, and categorical plots that are commonly used in data science.
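
As a small sketch of the statistical plots mentioned above, the example below draws a correlation heatmap from a Pandas DataFrame. The columns (`hours`, `score`, `noise`) are synthetic data generated for this illustration only.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; no window is opened
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Synthetic data: score depends on hours, noise is unrelated.
rng = np.random.default_rng(0)
hours = rng.uniform(0, 10, size=50)
frame = pd.DataFrame({
    "hours": hours,
    "score": 5 * hours + rng.normal(0, 2, size=50),
    "noise": rng.normal(size=50),
})

corr = frame.corr()

fig, ax = plt.subplots(figsize=(4, 3))
# annot=True prints each correlation value inside its cell.
sns.heatmap(corr, annot=True, vmin=-1, vmax=1, cmap="coolwarm", ax=ax)
ax.set_title("Correlation matrix")
plt.close(fig)
```

Because Seaborn draws onto a regular Matplotlib `Axes`, the Matplotlib calls for titles, labels, and saving still apply.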

These visualization libraries work well together. Matplotlib provides the essential foundation with extensive customization options. Seaborn adds efficient statistical visualization capabilities. Together they give data scientists powerful tools to create insightful visualizations that drive project decisions.

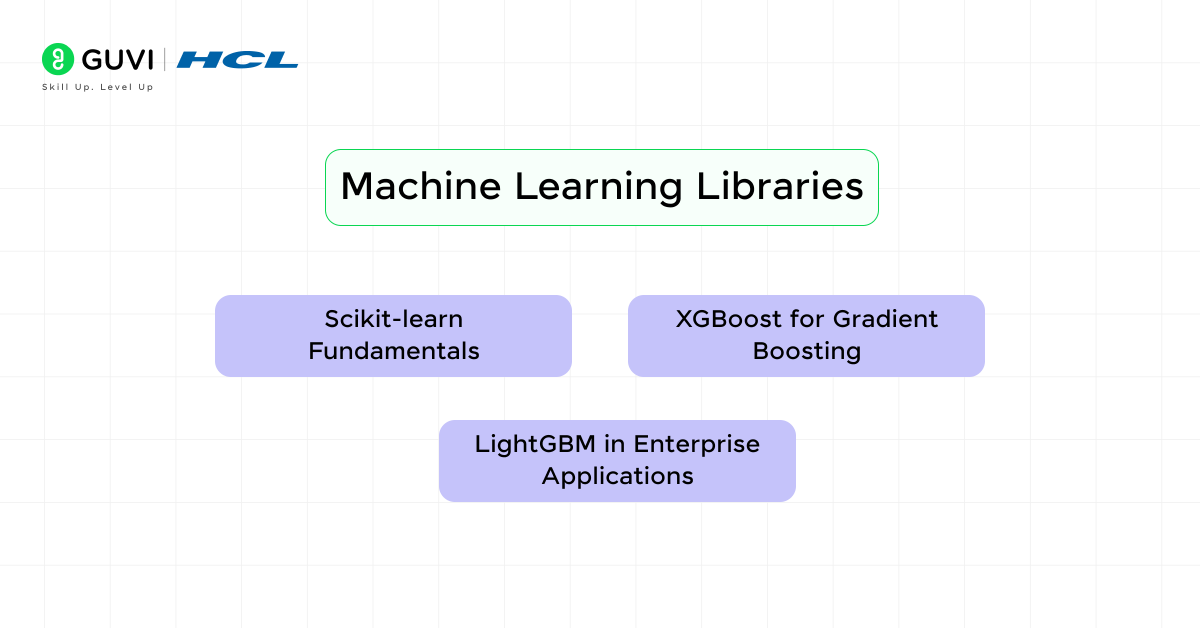

4) Machine Learning Libraries

Python developers need strong, flexible libraries to handle complex machine learning tasks in production. Let’s look at three Python libraries that have become the gold standard for implementing machine learning solutions in real-world environments.

a) Scikit-learn Fundamentals

Scikit-learn is one of the most popular and user-friendly machine learning libraries in Python. It provides simple and efficient tools for building models, handling data preprocessing, and performing various machine learning tasks such as classification, regression, and clustering. The library is built on top of NumPy, SciPy, and Matplotlib, making it well-integrated with the Python data science ecosystem.

Key Features:

- Easy-to-use API: Provides a clean and intuitive interface for applying machine learning algorithms.

- Wide range of algorithms: Supports classification, regression, clustering, and dimensionality reduction techniques.

- Model selection tools: Includes hyperparameter tuning, cross-validation, and performance metrics.

- Scalability: Works efficiently for small- to medium-scale datasets.

- Integration with other libraries: Works seamlessly with Pandas, NumPy, and Matplotlib.
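
The preprocessing, model-building, and model-selection tools above fit together as in this minimal sketch. The choice of the bundled iris dataset, a standard scaler, and logistic regression is an assumption made for illustration, not a recommendation for any particular problem.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# A pipeline refits the scaler inside each training fold,
# so no information leaks from the held-out fold.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# 5-fold cross-validation returns one accuracy score per fold.
scores = cross_val_score(model, X, y, cv=5)
mean_accuracy = scores.mean()

print(scores)
print(mean_accuracy)
```

Most estimators share the same `fit`/`predict` interface, so replacing `LogisticRegression` with another classifier should leave the rest of the code unchanged.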

b) XGBoost for Gradient Boosting

XGBoost (Extreme Gradient Boosting) is a powerful machine learning library known for its efficiency and accuracy in predictive modeling. It is widely used in data science competitions and real-world applications that require high-performance models. XGBoost is optimized for speed and scalability, making it a go-to choice for structured data problems.

Key Features:

- Gradient boosting framework: Uses boosting techniques to improve model accuracy.

- Regularization techniques: Prevents overfitting and enhances generalization.

- Parallel computation: Utilizes multi-core processing for fast training.

- Handling of missing values: Automatically manages missing data efficiently.

- Supports multiple languages: Offers interfaces for Python, R, C++, and Java.

c) LightGBM in Enterprise Applications

LightGBM (Light Gradient Boosting Machine) is an advanced gradient boosting framework designed for high-performance machine learning. Developed by Microsoft, it is optimized for speed and efficiency, making it suitable for large-scale enterprise applications. LightGBM is widely used for ranking, classification, and regression tasks in industries like finance, healthcare, and e-commerce.

Key Features:

- Faster training speed: Uses histogram-based algorithms for quicker computations.

- Lower memory usage: Requires less RAM compared to other boosting libraries.

- Highly scalable: Supports distributed learning for handling big data.

- Native categorical feature support: Can split on categorical columns directly, with no need for one-hot encoding.

- Better accuracy for large datasets: Efficient for high-dimensional data processing.

Companies across various industries rely on these tools to build accurate and scalable machine learning models, making Python the preferred language for data science in 2025.

5) Deep Learning Frameworks

Deep learning frameworks are at the forefront of Python’s data science ecosystem. TensorFlow and PyTorch stand out as the two dominant players that help organizations build and deploy sophisticated neural networks at scale.

a) TensorFlow Ecosystem

TensorFlow, developed by Google, is one of the most comprehensive deep learning frameworks, providing an end-to-end ecosystem for building AI applications. It offers flexible computation using both CPUs and GPUs, making it scalable for everything from research experiments to production-level AI systems.

Key Features of TensorFlow:

- Keras Integration: Built-in high-level API (Keras) for easy and fast model development.

- TensorFlow Extended (TFX): End-to-end platform for deploying ML models in production.

- TensorFlow Lite: Optimized for mobile and edge devices.

- TensorFlow.js: Enables deep learning models to run in web browsers.

- AutoML & TensorFlow Hub: Pre-trained models and tools for efficient transfer learning.

b) PyTorch Adoption Trends

PyTorch, developed by Facebook (now Meta), has gained massive popularity in the research community and is increasingly being adopted in production systems. It is known for its dynamic computation graph, which makes model experimentation more intuitive and flexible. PyTorch is widely used in academia, reinforcement learning, and generative AI applications.

Key Features of PyTorch:

- Dynamic Computation Graphs: Allows flexible model building and debugging.

- TorchScript: Enables easy deployment of models from research to production.

- Distributed Training: Supports multi-GPU training for large-scale AI projects.

- ONNX Compatibility: Enables interoperability with other AI frameworks.

- PyTorch Lightning: A high-level wrapper that simplifies deep learning workflows.
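
The dynamic computation graph is the feature that most shapes how PyTorch code is written, so a tiny sketch may help. The `piecewise` function is a made-up example: ordinary Python control flow decides which operations are recorded, and autograd differentiates whichever branch actually ran.

```python
import torch


def piecewise(x):
    """Toy function whose graph depends on the input value."""
    if x.item() > 0:
        return x ** 2 + 2 * x  # derivative: 2x + 2
    return -x                  # derivative: -1


# Positive input: the quadratic branch is recorded and differentiated.
x_pos = torch.tensor(3.0, requires_grad=True)
piecewise(x_pos).backward()
grad_positive = x_pos.grad.item()

# Negative input: a different graph is built on this call.
x_neg = torch.tensor(-1.0, requires_grad=True)
piecewise(x_neg).backward()
grad_negative = x_neg.grad.item()

print(grad_positive, grad_negative)
```

Because the graph is rebuilt on every call, standard Python debugging tools such as `print` and `pdb` work inside model code.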

Each framework fills a distinct role in the data science ecosystem. TensorFlow excels at production tasks through TensorFlow Serving and supports mobile platforms well. PyTorch has become the favorite choice of researchers because its flexible, accessible design suits academics and developers doing experimental work.

6) Real-time Processing Libraries

Modern data science teams need robust tools to scale computation and deploy models. Ray for distributed computing and Streamlit for deployment are two libraries that meet these requirements.

a) Ray for Distributed Computing

Ray is an open-source framework that allows for distributed computing, making it easier to scale machine learning and deep learning applications across multiple machines. It provides a simple API for parallel processing, which significantly improves computational efficiency when handling large datasets or training complex models.

Key Features of Ray:

- Parallel Execution: Ray enables parallel processing across multiple CPUs and GPUs, reducing computation time.

- Task Scheduling: It dynamically manages computing resources, ensuring optimal performance for large-scale tasks.

- Integration with AI Frameworks: Supports TensorFlow, PyTorch, and Scikit-learn, allowing easy deployment of AI models.

- Fault Tolerance: Automatically recovers from node failures, ensuring stability in distributed applications.

- Scalability: Easily scales workloads from a single laptop to cloud-based clusters.

b) Streamlit for Deployment

Streamlit is an open-source Python library that simplifies the deployment of data science and machine learning models into interactive web applications. It allows data scientists to create visually appealing dashboards and applications with minimal coding effort.

Key Features of Streamlit:

- Easy Deployment: Convert Python scripts into web applications without requiring front-end development skills.

- Real-time Interactivity: Allows dynamic updates to models and visualizations based on user inputs.

- Seamless Integration: Works well with Pandas, Matplotlib, Plotly, and other data science libraries.

- Minimal Code: Requires only a few lines of Python code to build fully functional apps.

- Cloud & Local Hosting: Apps run locally with a single command (streamlit run) and can also be deployed to cloud platforms.

Ray and Streamlit solve two big challenges in the data science workflow. Ray scales computations efficiently through distributed computing. Streamlit makes sharing and deploying models easier. Together, these libraries help data scientists build and deploy scalable solutions.

Concluding Thoughts…

Python libraries have turned data science from a specialized field into an accessible career path, with powerful tools for every task. These libraries take care of everything from simple data processing to advanced deep learning applications and make complex analysis easier to handle.

The field grows faster each day. Data science success comes from becoming skilled at these libraries and developing strong analytical and problem-solving abilities.

You should start with simple tools like NumPy and Pandas, then move to advanced ones based on your interests and project needs. Your hands-on experience with these libraries will take you further than theoretical knowledge alone.

FAQs

Q1. What are the most essential Python libraries for data science?

The most essential Python libraries for data science include NumPy for numerical computing, Pandas for data manipulation, Matplotlib and Seaborn for visualization, Scikit-learn for machine learning, and TensorFlow or PyTorch for deep learning. These libraries form the core toolkit for most data science tasks.

Q2. How does Python compare to other programming languages for data science?

Python is widely preferred for data science due to its simple syntax, extensive library ecosystem, and versatility. It offers advantages like shorter development time compared to Java, better general-purpose capabilities than R, and more comprehensive object-oriented programming support than JavaScript. This makes Python an ideal choice for various data science applications.

Q3. What are the key differences between TensorFlow and PyTorch?

TensorFlow excels in production environments with robust deployment options and scalability. PyTorch, on the other hand, is favored in research settings for its flexibility and user-friendly design. TensorFlow offers better support for mobile platforms, while PyTorch provides dynamic computational graphs and is increasingly popular in academic research.

Q4. How can data scientists handle real-time processing and deployment?

For real-time processing, data scientists can use Ray, a framework for distributed computing that scales Python and AI applications across multiple machines. For deployment, Streamlit offers a simple way to create and share web applications, allowing data scientists to quickly deploy models and create interactive data visualizations without extensive web development knowledge.
