Data science now shapes decision-making in almost every business. Without data-driven insights, it is hard for a business to withstand the heavy competition of the current age.

But how do you find patterns and identify trends in data? That’s where a key concept, clustering, comes into the picture. It is an important topic that you shouldn’t miss when you are learning data science.

If you don’t know much about it, don’t worry. You are not alone, and this article will guide you through the world of clustering in data science and give you a solid understanding of the domain!

So, without further ado, let us get started!

Table of contents

- Understanding Clustering in Data Science

- Why Clustering in Data Science Matters?

- 4 Key Techniques of Clustering in Data Science

- K-Means Clustering

- Hierarchical Clustering

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

- Gaussian Mixture Models (GMM)

- Steps in the Clustering Process

- Data Preprocessing

- Choosing the Right Algorithm

- Determining the Number of Clusters

- Running the Clustering Algorithm

- Evaluating the Results

- Interpreting and Using Clusters

- Best Practices for Clustering in Data Science

- Standardize Your Data

- Visualize Your Clusters

- Experiment with Different Algorithms

- Use Domain Knowledge

- Handle Outliers Carefully

- Evaluate and Validate Your Clusters

- Iterate and Refine

- Conclusion

- FAQs

- How does clustering differ from classification?

- How does the Silhouette Score help in clustering?

- What is the Expectation-Maximization algorithm in Gaussian Mixture Models?

- Can clustering algorithms be used for real-time data?

Understanding Clustering in Data Science

The best way to learn any new concept is to start with its definition. So let us look at the textbook definition of clustering in data science and understand what it means.

Clustering is a fundamental technique in data science, used for finding patterns and structures in data. It involves grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar to each other than to those in other groups (clusters).

If that definition makes sense to you, well and good. But to truly grasp clustering, think about how you naturally group things in your daily life.

For example, when you organize your closet, you might group clothes by type: shirts, pants, and jackets. Within these categories, you might further group them by color or season. This process of grouping similar items together based on certain characteristics is exactly what clustering in data science is.

In the world of technology, clustering in data science is about finding these natural groupings in a dataset. Imagine you have a large set of customer data with various attributes like age, income, and purchasing behavior.

By applying clustering, you can discover which customers are similar in these aspects and group them together. This helps you understand your customers better and tailor your marketing strategies accordingly.

Why Clustering in Data Science Matters?

Clustering in data science has numerous applications across various fields:

- Customer Segmentation: Businesses use clustering to group customers with similar behaviors, enabling targeted marketing strategies.

- Image Segmentation: In computer vision, clustering helps in dividing an image into regions for easier analysis and processing.

- Anomaly Detection: Identifying unusual patterns in data, which could indicate fraud or system failures.

- Social Network Analysis: Understanding community structures within social networks.

4 Key Techniques of Clustering in Data Science

Now that we have covered the definition of clustering in data science, it is time to learn the techniques used to perform it.

It is important to have a basic understanding of data science before going through this section. If you don’t, consider enrolling in a professionally certified online Data Science course from a recognized institution that can help you get started and also provide you with an industry-grade certificate!

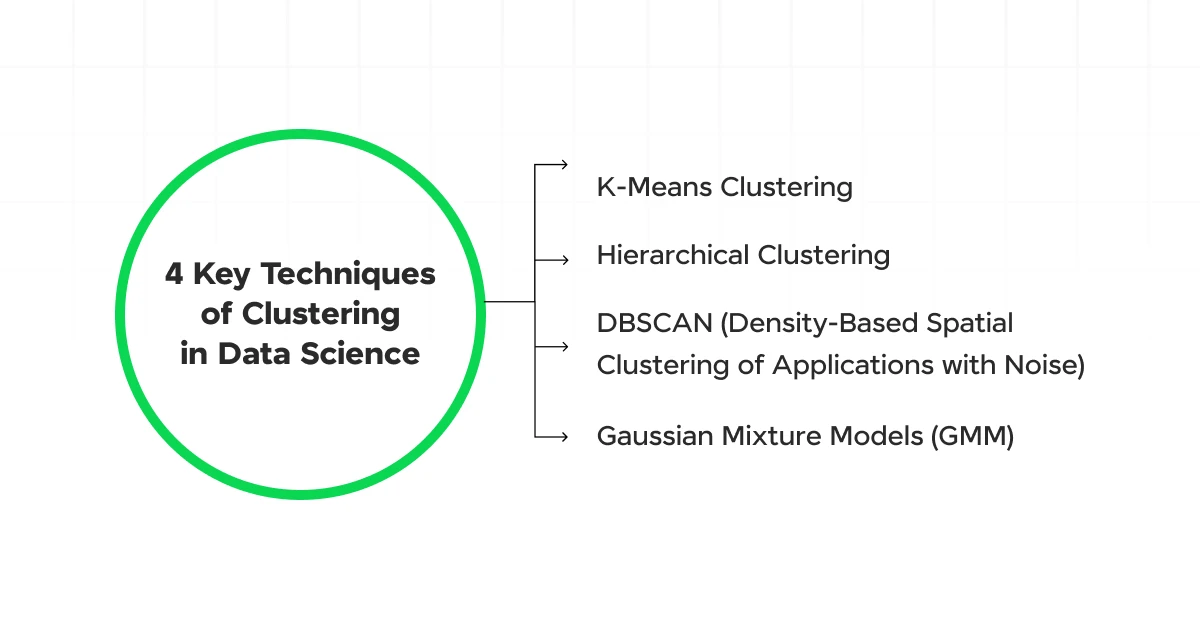

Let us now dive into some key techniques of clustering in data science that you might come across.

1. K-Means Clustering

- How it Works: Imagine you have a bunch of data points on a map. K-Means clustering helps you group these points into K clusters. Think of K as the number of groups you want. The algorithm starts by placing K central points, called centroids (often by picking K data points at random), and assigns each data point to its nearest centroid. It then moves each centroid to the mean of the points assigned to it and reassigns the points, repeating these two steps until the assignments stop changing.

- Why Use It: It’s straightforward and works well for large datasets.

- Things to Keep in Mind: You need to decide the number of clusters (K) beforehand, which can be tricky. Also, the results can vary depending on where the initial centroids are placed.
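To make the assign-then-update loop concrete, here is a minimal from-scratch sketch of K-Means (Lloyd's algorithm) in plain Python. It assumes each data point is a tuple of numbers and picks the initial centroids at random, so, as noted above, a different `seed` can lead to a different result. The function name and the toy customer data are hypothetical; in practice you would usually use a library implementation such as scikit-learn's `KMeans`.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=0):
    """Minimal K-Means (Lloyd's algorithm) on a list of equal-length numeric tuples."""
    rng = random.Random(seed)
    # Initialisation: use k randomly chosen data points as the starting centroids.
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # no point changed cluster, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


# Hypothetical customers: (age, annual income in thousands).
customers = [(25, 30), (27, 32), (26, 31), (60, 90), (62, 88), (61, 91)]
labels, centroids = kmeans(customers, k=2)
print(labels)
print(centroids)
```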

2. Hierarchical Clustering

- How it Works: This technique builds a tree of clusters. There are two main types: agglomerative (bottom-up) and divisive (top-down). In the more common agglomerative approach, you start by treating each data point as its own cluster and repeatedly merge the two closest clusters until you end up with a single cluster or a set number of clusters. The divisive approach works the other way around: it starts with all points in one cluster and repeatedly splits it.

- Why Use It: You don’t need to specify the number of clusters upfront, and it gives you a cool tree diagram (dendrogram) to visualize the clusters.

- Things to Keep in Mind: It can be slow and resource-intensive on large datasets, because the basic approach has to compare the distances between every pair of clusters as it goes.
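The sketch below shows the agglomerative (bottom-up) idea in plain Python. It assumes single linkage, meaning the distance between two clusters is the distance between their closest pair of points; other linkage rules (complete, average, Ward) are equally common. The function names are hypothetical, and the brute-force search over all cluster pairs is deliberately naive, which also shows why hierarchical clustering slows down on large datasets. The `merges` list records the information a dendrogram would draw.

```python
import math
from itertools import combinations


def single_linkage(cluster_a, cluster_b):
    """Cluster distance = distance between the closest pair of their points."""
    return min(math.dist(p, q) for p in cluster_a for q in cluster_b)


def agglomerative(points, n_clusters):
    """Naive bottom-up clustering: start from singletons, merge the closest pair."""
    clusters = [[p] for p in points]  # every point starts as its own cluster
    merges = []  # (cluster_a, cluster_b, distance) for each merge, as in a dendrogram
    while len(clusters) > n_clusters:
        # Brute-force search over all pairs of current clusters.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: single_linkage(clusters[ij[0]], clusters[ij[1]]),
        )
        merges.append((clusters[i], clusters[j], single_linkage(clusters[i], clusters[j])))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # combinations gives i < j, so index i is unaffected
    return clusters, merges


points = [(0, 0), (0, 1), (1, 0), (8, 8), (8, 9), (9, 8)]
clusters, merges = agglomerative(points, n_clusters=2)
print(clusters)
for a, b, d in merges:
    print(f"merged {a} + {b} at distance {d:.2f}")
```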

3. DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

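- How it Works: DBSCAN groups together points that are closely packed based on a distance metric. It starts with an arbitrary point and finds all points within a certain distance (epsilon) of it. If there are at least a minimum number of points (minPts) in that neighborhood, the point is a core point and starts a cluster, which then grows through the neighborhoods of every other core point it reaches. If not, the point is marked as noise for now; it can still join a cluster later as a border point if it lies within epsilon of a core point. This continues until every point is either clustered or marked as noise.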

- Why Use It: It’s great for finding clusters of varying shapes and sizes and is robust to outliers (noise).

- Things to Keep in Mind: You need to set the distance (epsilon) and the minimum number of points (minPts), which can require some trial and error.
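Here is a minimal, unoptimized DBSCAN sketch in plain Python that follows the steps described above. It assumes Euclidean distance and counts the point itself toward `min_pts` (the same convention scikit-learn's `DBSCAN` uses for `min_samples`). The function names and toy data are hypothetical. Noise is labeled `-1`.

```python
import math

NOISE = -1


def region_query(points, i, eps):
    """Indices of all points within eps of points[i], including i itself."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    labels = [None] * len(points)  # None means "not visited yet"
    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # provisional: may later become a border point
            continue
        cluster_id += 1  # i is a core point, so it starts a new cluster
        labels[i] = cluster_id
        seeds = [j for j in neighbors if j != i]
        while seeds:
            j = seeds.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # former noise becomes a border point
            if labels[j] is not None:
                continue  # already assigned; border points are not expanded
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                seeds.extend(j_neighbors)  # j is also a core point: keep growing
    return labels


points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (50, 50)]
print(dbscan(points, eps=1.5, min_pts=3))  # [0, 0, 0, 0, 1, 1, 1, -1]
```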

4. Gaussian Mixture Models (GMM)

- How it Works: Gaussian Mixture Models assume that your data is generated from a mix of several Gaussian distributions (bell curves). Each cluster is represented by one Gaussian distribution, and the model uses the Expectation-Maximization (EM) algorithm to estimate the parameters of these distributions (their means, covariances, and mixing weights) so that their combination fits the data as well as possible.

- Why Use It: It’s flexible and can model clusters of different shapes and sizes. Plus, it gives you probabilities for each point belonging to a cluster.

- Things to Keep in Mind: It’s more complex and computationally intensive than some other methods, and you still need to specify the number of clusters.
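To show what the EM algorithm is doing, here is a small sketch of a one-dimensional Gaussian mixture fitted with EM in plain Python. It is a simplification under stated assumptions: the data are single numbers rather than vectors, the caller supplies the starting means, variances, and weights, it runs a fixed number of iterations instead of checking for convergence, and it has no safeguard for a component that ends up with no points. The function names are hypothetical; scikit-learn's `GaussianMixture` is the usual library choice for real data.

```python
import math


def normal_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def gmm_1d(xs, means, variances, weights, n_iter=50):
    """Fit a 1-D Gaussian mixture with EM, starting from the given parameters."""
    k = len(means)
    means, variances, weights = list(means), list(variances), list(weights)
    resp = []
    for _ in range(n_iter):
        # E-step: probability (responsibility) that each component generated each x.
        resp = []
        for x in xs:
            dens = [weights[c] * normal_pdf(x, means[c], variances[c]) for c in range(k)]
            total = sum(dens)
            resp.append([d / total for d in dens])
        # M-step: re-estimate each component from the responsibility-weighted points.
        for c in range(k):
            r = [row[c] for row in resp]
            n_c = sum(r)
            means[c] = sum(ri * x for ri, x in zip(r, xs)) / n_c
            var = sum(ri * (x - means[c]) ** 2 for ri, x in zip(r, xs)) / n_c
            variances[c] = max(var, 1e-6)  # floor keeps a component from collapsing
            weights[c] = n_c / len(xs)
    return means, variances, weights, resp


# Hypothetical 1-D data around two centres, with rough starting guesses.
xs = [1.0, 1.2, 0.8, 1.1, 0.9, 5.0, 5.2, 4.8, 5.1, 4.9]
means, variances, weights, resp = gmm_1d(xs, [0.0, 6.0], [1.0, 1.0], [0.5, 0.5])
hard_labels = [max(range(len(row)), key=row.__getitem__) for row in resp]
print(means, weights, hard_labels)
```

Each row of `resp` holds the per-cluster probabilities mentioned above; taking the largest entry in a row gives a hard cluster assignment.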

By understanding these key techniques of clustering in data science, you can choose the one that best fits your data and your specific problem. Each method has its strengths and weaknesses, so it’s often worth trying a few different approaches to see which one works best for you.

Steps in the Clustering Process

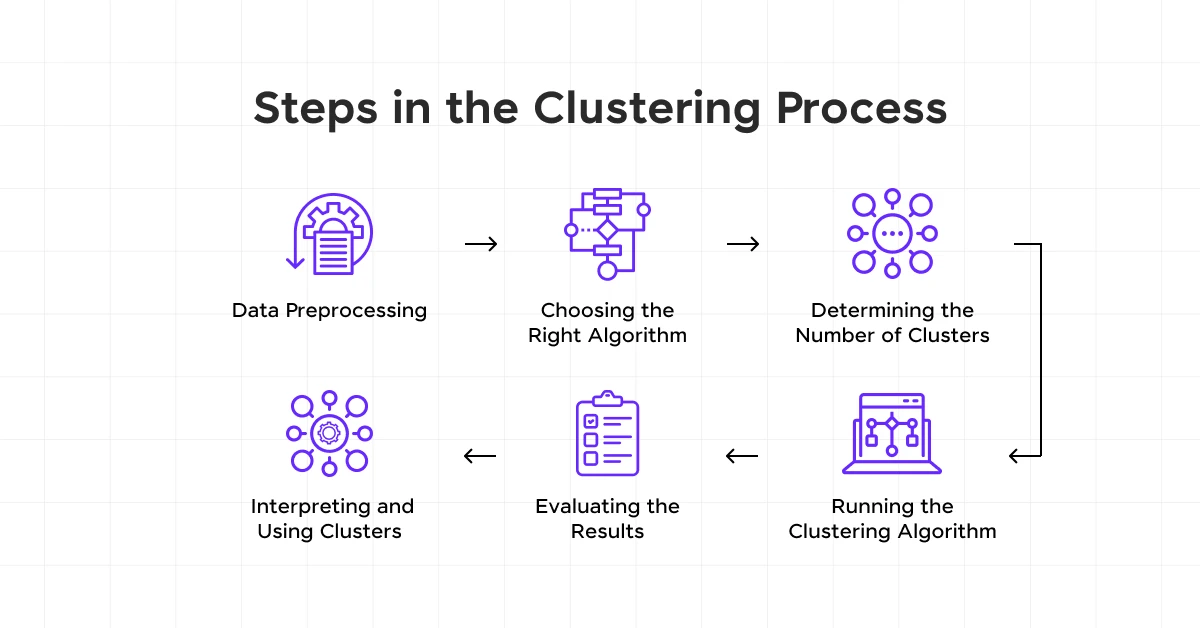

Just like the overall data science process, clustering in data science has a process of its own that you need to follow!

Here are the steps you’ll typically follow when performing clustering in data science.

1. Data Preprocessing

- Before you start clustering, you need to clean your data. This means handling any missing values, removing duplicates, and normalizing your data. Normalizing puts every feature on a comparable scale so that no feature dominates the result simply because its values are larger.

- Why It Matters: Clean, normalized data ensures that the clustering algorithm performs well and gives you meaningful clusters.
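A minimal preprocessing sketch in plain Python, assuming rows are tuples of numbers and missing values are stored as `None`. It drops incomplete and duplicate rows (imputing missing values is a common alternative to dropping them) and then standardizes each feature to zero mean and unit standard deviation (z-scores). The helper names and data are hypothetical; scikit-learn's `StandardScaler` handles the scaling part for you.

```python
import statistics


def clean(rows):
    """Drop rows containing a missing value (None) and exact duplicates, keeping order."""
    seen = set()
    cleaned = []
    for row in rows:
        if any(v is None for v in row) or row in seen:
            continue
        seen.add(row)
        cleaned.append(row)
    return cleaned


def standardize(rows):
    """Rescale each column to mean 0 and (population) standard deviation 1."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]
    return [
        # A constant column has std 0; map it to 0 instead of dividing by zero.
        tuple((v - m) / s if s > 0 else 0.0 for v, m, s in zip(row, means, stds))
        for row in rows
    ]


# Hypothetical raw customer rows: (age, annual income).
raw = [(25, 30000), (35, 60000), (35, 60000), (45, None), (45, 90000)]
rows = clean(raw)
print(rows)
print(standardize(rows))
```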

2. Choosing the Right Algorithm

- Decide which clustering method to use based on your data and what you want to achieve. Common methods include K-Means, Hierarchical Clustering, DBSCAN, and Gaussian Mixture Models.

- Why It Matters: Different algorithms work better for different types of data and objectives. Picking the right one can make a big difference in your results.

3. Determining the Number of Clusters

- For methods like K-Means, you need to decide how many clusters (K) you want. Techniques like the Elbow Method or the Silhouette Score can help you find a good number.

- Why It Matters: Choosing the right number of clusters helps ensure that your groups are meaningful and not too broad or too specific.
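The Elbow Method plots WCSS against K and looks for the point where adding more clusters stops helping much. Reading the elbow by eye is common; the sketch below instead assumes one simple automatic rule: pick the K whose WCSS lies farthest below the straight line joining the first and last points of the curve. The function name and the WCSS values are hypothetical; in practice you would get them by running K-Means once for each candidate K.

```python
def elbow_k(wcss_by_k):
    """Return the K whose WCSS lies farthest below the chord joining the first and
    last points of the elbow curve. wcss_by_k maps each K to its WCSS."""
    ks = sorted(wcss_by_k)
    k_first, k_last = ks[0], ks[-1]
    w_first, w_last = wcss_by_k[k_first], wcss_by_k[k_last]
    slope = (w_last - w_first) / (k_last - k_first)

    def gap_below_chord(k):
        # Vertical gap between the chord and the curve. For a fixed chord it is
        # proportional to the perpendicular distance, so both pick the same K.
        return w_first + slope * (k - k_first) - wcss_by_k[k]

    return max(ks, key=gap_below_chord)


# Hypothetical WCSS values from running K-Means with K = 1..6.
wcss_by_k = {1: 100.0, 2: 40.0, 3: 15.0, 4: 12.0, 5: 10.0, 6: 9.0}
print(elbow_k(wcss_by_k))  # 3
```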

4. Running the Clustering Algorithm

- Apply the chosen clustering algorithm to your data. This involves setting up the algorithm with your data and any necessary parameters (like the number of clusters) and letting it run.

- Why It Matters: This step is where the magic happens. The algorithm will group your data points into clusters based on the criteria you set.

5. Evaluating the Results

- After clustering, you need to evaluate how good your clusters are. You can use metrics like Within-Cluster Sum of Squares (WCSS), Silhouette Coefficient, or domain-specific validations to assess the quality of the clusters.

- Why It Matters: Evaluation helps you understand if your clusters make sense and if the algorithm did a good job. It’s a reality check to ensure your results are useful.
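Here is a from-scratch sketch of two of the metrics named above, assuming points are numeric tuples and `labels` holds one cluster label per point. WCSS is the total squared distance from each point to its cluster's mean (lower means tighter clusters). The silhouette of a point is (b - a) / max(a, b), where a is its mean distance to the other points in its own cluster and b is its mean distance to the points of the nearest other cluster; the score averages this over all points and ranges from -1 to 1. The function names are hypothetical; scikit-learn provides `silhouette_score` for real work.

```python
import math
from collections import defaultdict


def wcss(points, labels):
    """Within-Cluster Sum of Squares: squared distances to each cluster's mean."""
    groups = defaultdict(list)
    for p, lab in zip(points, labels):
        groups[lab].append(p)
    total = 0.0
    for members in groups.values():
        centre = [sum(dim) / len(members) for dim in zip(*members)]
        total += sum(math.dist(p, centre) ** 2 for p in members)
    return total


def silhouette_score(points, labels):
    """Mean silhouette (b - a) / max(a, b) over all points."""
    clusters = sorted(set(labels))
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    scores = []
    for i, p in enumerate(points):
        # Mean distance from p to the other points of every cluster.
        mean_dist = {}
        for c in clusters:
            dists = [math.dist(p, q) for j, q in enumerate(points)
                     if labels[j] == c and j != i]
            if dists:
                mean_dist[c] = sum(dists) / len(dists)
        if labels[i] not in mean_dist:
            scores.append(0.0)  # a point alone in its cluster gets silhouette 0
            continue
        a = mean_dist[labels[i]]
        b = min(d for c, d in mean_dist.items() if c != labels[i])
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


pts = [(0, 0), (0, 1), (10, 0), (10, 1)]
print(wcss(pts, [0, 0, 1, 1]), silhouette_score(pts, [0, 0, 1, 1]))  # good split
print(wcss(pts, [0, 1, 0, 1]), silhouette_score(pts, [0, 1, 0, 1]))  # poor split
```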

6. Interpreting and Using Clusters

- Look at the clusters and interpret what they mean in the context of your data. Use these insights to inform business decisions, refine models, or detect patterns.

- Why It Matters: The end goal of clustering is to gain insights that can help you make better decisions or understand your data better. This step turns your clusters into actionable information.
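One simple way to start interpreting clusters is to profile them: count the members of each cluster and average every feature within it. The sketch below assumes rows of numeric features and one label per row; the function name, feature names, and customer data are hypothetical.

```python
from collections import defaultdict
from statistics import fmean


def cluster_profiles(rows, labels, feature_names):
    """Summarise each cluster by its size and the mean of every feature."""
    groups = defaultdict(list)
    for row, lab in zip(rows, labels):
        groups[lab].append(row)
    profiles = {}
    for lab in sorted(groups):
        members = groups[lab]
        profile = {"size": len(members)}
        # zip(*members) turns the cluster's rows into one tuple per feature.
        for name, values in zip(feature_names, zip(*members)):
            profile[name] = fmean(values)
        profiles[lab] = profile
    return profiles


# Hypothetical customers: (age, income in thousands, monthly spend) plus cluster labels.
rows = [(25, 30, 5), (27, 34, 7), (60, 90, 40), (58, 86, 38)]
labels = [0, 0, 1, 1]
for lab, profile in cluster_profiles(rows, labels, ["age", "income_k", "spend"]).items():
    print(lab, profile)
```

Reading the profiles side by side (for example, younger customers with lower spend versus older customers with higher spend) is where domain knowledge turns the numbers into named segments.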

By following these steps, you can apply clustering in data science effectively and turn your data into meaningful groups.

If you don’t know already, Python is one of the most widely used programming languages for data science. If your Python concepts are not very clear yet and you are determined to improve, consider enrolling in GUVI’s Self-Paced Python course, which lets you learn at your own pace!

Best Practices for Clustering in Data Science

To get the most out of clustering in data science, here are some best practices you should follow. This is the last stop in our journey through the world of clustering in data science!

1. Standardize Your Data

- Make sure all your features (data points’ characteristics) are on a similar scale. This usually means normalizing or standardizing your data.

- Clustering algorithms often rely on distances between data points. If your data isn’t standardized, features with larger scales can dominate the clustering process, leading to misleading results.
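A small worked example of this problem in plain Python. The customer data are hypothetical (age in years, annual income in dollars). Before scaling, income differences dwarf age differences, so the nearest neighbor of the 25-year-old turns out to be the 62-year-old with a similar income; after min-max scaling each feature to the range [0, 1], age counts too and the nearest neighbor becomes the 27-year-old. Min-max scaling is one option here; standardizing to z-scores is the other common choice. The function names are hypothetical.

```python
import math


def min_max_scale(rows):
    """Rescale every column to the range [0, 1]."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        # A constant column (hi == lo) is mapped to 0 to avoid dividing by zero.
        tuple((v - lo) / (hi - lo) if hi > lo else 0.0
              for v, lo, hi in zip(row, lows, highs))
        for row in rows
    ]


def nearest(rows, i):
    """Index of the row closest (Euclidean distance) to rows[i]."""
    return min((j for j in range(len(rows)) if j != i),
               key=lambda j: math.dist(rows[i], rows[j]))


# Hypothetical customers: (age in years, annual income in dollars).
customers = [(25, 50_000), (62, 51_000), (27, 58_000), (45, 120_000)]
print(nearest(customers, 0))                 # raw: income dominates the distance
print(nearest(min_max_scale(customers), 0))  # scaled: age matters as well
```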

2. Visualize Your Clusters

- After clustering, use visualization tools like scatter plots, dendrograms, and heatmaps to see your clusters.

- Visualizing helps you understand the structure of your clusters and can highlight any issues or patterns you might not see from just numbers.

3. Experiment with Different Algorithms

- Try multiple clustering algorithms to see which one works best for your data. Each algorithm has its own strengths and weaknesses.

- Different algorithms can produce different results. By experimenting, you can find the one that provides the most meaningful clusters for your specific problem.

4. Use Domain Knowledge

- Apply your understanding of the field or industry to interpret the clusters. Know what makes sense and what doesn’t in the context of your data.

- Domain knowledge can help you validate the clusters and ensure they make practical sense. It also helps in naming and understanding the clusters better.

5. Handle Outliers Carefully

- Identify and decide how to handle outliers in your data. Sometimes they can be removed, or you might need to use algorithms that can handle them well, like DBSCAN.

- Outliers can skew your clusters and lead to incorrect interpretations. Properly handling them ensures your clusters are accurate and meaningful.
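One common way to flag candidate outliers in a single numeric feature is the interquartile range (IQR) rule, sketched below in plain Python. The fence at 1.5 times the IQR is a convention rather than a law, and the function name and income values are hypothetical; flagged points still deserve a domain check before you drop them.

```python
import statistics


def iqr_outliers(values, factor=1.5):
    """Indices of values outside [Q1 - factor*IQR, Q3 + factor*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # the three quartile cut points
    iqr = q3 - q1
    low, high = q1 - factor * iqr, q3 + factor * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]


# Hypothetical monthly incomes (in thousands) with one extreme value.
incomes = [42, 45, 47, 50, 52, 55, 58, 400]
print(iqr_outliers(incomes))  # [7]
```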

6. Evaluate and Validate Your Clusters

- Use evaluation metrics like the Silhouette Score, Davies-Bouldin Index, or cross-validation methods to assess the quality of your clusters.

- Evaluation ensures that the clusters you’ve created are actually good and useful. It helps you refine the clustering process and improve your results.
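The Davies-Bouldin Index compares each cluster with its most similar neighbor: for clusters i and j it computes (s_i + s_j) / d(c_i, c_j), where s is the average distance of a cluster's points to its centroid and d is the distance between centroids, then averages the worst case over all clusters. Lower values mean tighter, better-separated clusters. The sketch assumes Euclidean distance; the function name and toy points are hypothetical, and scikit-learn offers `davies_bouldin_score` for real work.

```python
import math
from collections import defaultdict


def davies_bouldin(points, labels):
    """Davies-Bouldin index: lower values mean tighter, better-separated clusters."""
    groups = defaultdict(list)
    for p, lab in zip(points, labels):
        groups[lab].append(p)
    if len(groups) < 2:
        raise ValueError("Davies-Bouldin needs at least two clusters")
    centroids, scatter = {}, {}
    for lab, members in groups.items():
        centre = [sum(dim) / len(members) for dim in zip(*members)]
        centroids[lab] = centre
        # Scatter s: average distance from the cluster's points to its centroid.
        scatter[lab] = sum(math.dist(p, centre) for p in members) / len(members)
    total = 0.0
    for i in groups:
        # Similarity to the worst (most overlapping) other cluster.
        total += max(
            (scatter[i] + scatter[j]) / math.dist(centroids[i], centroids[j])
            for j in groups
            if j != i
        )
    return total / len(groups)


pts = [(0, 0), (0, 1), (10, 0), (10, 1)]
print(davies_bouldin(pts, [0, 0, 1, 1]))  # well separated: low score
print(davies_bouldin(pts, [0, 1, 0, 1]))  # poor split: high score
```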

7. Iterate and Refine

- Don’t settle on the first clustering result. Iterate by adjusting parameters, trying different algorithms, and refining your data preprocessing steps.

- Clustering is often an iterative process. Refining your approach can lead to better, more meaningful clusters.

By following these best practices, you’ll be better equipped to use clustering in data science effectively.

If you want to learn more about clustering in data science and its applications, consider enrolling in GUVI’s Certified Data Science Course, which gives you not only theoretical knowledge but also practical experience through real-world projects.

If you wish to explore more, have a look at the Future of Data Science!

Conclusion

In conclusion, clustering in data science is a powerful technique for finding patterns and structures within data. By following best practices like standardizing data, visualizing clusters, experimenting with different algorithms, and leveraging domain knowledge, you can make the most of this method.

Whether you’re segmenting customers, identifying anomalies, or exploring any dataset, clustering in data science provides valuable insights to inform better decisions.

FAQs

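How does clustering differ from classification?

Clustering groups unlabeled data into clusters that the algorithm discovers on its own, while classification assigns data to predefined categories using a model trained on labeled examples.

How does the Silhouette Score help in clustering?

The Silhouette Score measures how similar a data point is to its own cluster compared to the nearest other cluster. It ranges from -1 to 1, and a higher average score indicates better-defined clusters.

What is the Expectation-Maximization algorithm in Gaussian Mixture Models?

The Expectation-Maximization (EM) algorithm iteratively estimates the parameters of the Gaussian distributions in a GMM. The E-step estimates how likely each point is to belong to each Gaussian, and the M-step updates each Gaussian's mean, variance, and weight from those probabilities, repeating until the fit stops improving.

Can clustering algorithms be used for real-time data?

Yes. Some clustering algorithms, such as online K-Means and stream clustering methods, are designed to update clusters incrementally as new data arrives.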
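As a rough illustration of online K-Means, the sketch below updates the model one point at a time: each new point is assigned to its nearest centroid, and that centroid moves toward the point by a step of 1/n, where n is the number of points it has received so far, so each centroid stays the running mean of its points. The class name and starting centroids are hypothetical; scikit-learn's `MiniBatchKMeans` with `partial_fit` is a library option for streaming data.

```python
import math


class OnlineKMeans:
    """Sequential K-Means: centroids are updated one incoming point at a time."""

    def __init__(self, initial_centroids):
        self.centroids = [list(c) for c in initial_centroids]
        self.counts = [0] * len(self.centroids)  # points received by each centroid

    def update(self, point):
        """Assign one new point, nudge its centroid, and return the cluster index."""
        k = min(range(len(self.centroids)),
                key=lambda c: math.dist(point, self.centroids[c]))
        self.counts[k] += 1
        step = 1.0 / self.counts[k]  # 1/n step keeps the centroid a running mean
        # Note: a centroid's first point replaces its initial position entirely.
        self.centroids[k] = [c + step * (x - c) for c, x in zip(self.centroids[k], point)]
        return k


# Hypothetical stream of 2-D readings arriving one at a time.
model = OnlineKMeans(initial_centroids=[(0, 0), (10, 10)])
for reading in [(1, 1), (9, 9), (1, 3), (11, 11)]:
    print(reading, "-> cluster", model.update(reading))
print(model.centroids)
```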
