Top 30 Data Engineering Interview Questions and Answers

Last updated: Mar 29, 2025

Are you nervous about preparing for a data engineering interview and unsure what kind of questions to expect? With the rapid evolution of data infrastructure, tools, and cloud technologies, data engineering interview questions have become increasingly multifaceted, ranging from SQL queries to system design challenges.

But worry not: if you know what is coming, you can prepare for it and ace it. Whether you’re a fresher stepping into the world of data, an intermediate-level engineer polishing your skills, or a senior looking to scale your expertise, understanding the most relevant and frequently asked questions can give you a strategic edge.

In this article, we’ve curated 30 of the most important data engineering interview questions, broken down by experience level, to help you approach your next opportunity with confidence. So, take a deep breath and let us start our journey in understanding the questions that you might expect in your next data engineering interview!

Table of contents

- Data Engineering Interview Questions and Answers: Fresher Level (0–1 Year of Experience)

- What is Data Engineering? How is it different from Data Science?

- What is a Data Pipeline?

- Explain the ETL process.

- What is the difference between OLTP and OLAP systems?

- What are the different types of databases used in data engineering?

- What are normalization and denormalization in databases?

- SQL Coding Question: Write a query to fetch the second highest salary from an "employees" table.

- What is the difference between INNER JOIN and LEFT JOIN in SQL?

- What are primary keys and foreign keys?

- What is data warehousing, and why is it important?

- Data Engineering Interview Questions and Answers: Intermediate Level (1–3 Years of Experience)

- What is the role of a data engineer in a modern data stack?

- What is partitioning in databases or data lakes?

- Explain how batch processing differs from stream processing. Give use cases.

- What tools are commonly used for stream processing?

- What is Apache Spark? Why is it popular among data engineers?

- Python Coding Question:

- What is schema evolution in Big Data?

- How do you handle data quality issues in pipelines?

- What are some commonly used orchestration tools?

- What is the CAP theorem, and how does it apply to distributed systems?

- Data Engineering Interview Questions and Answers: Advanced Level (3+ Years of Experience)

- Design a real-time analytics pipeline for a ride-sharing app.

- How would you optimize a slow-running Spark job?

- How do you ensure data lineage and observability in a complex pipeline?

- Explain data lake vs. data warehouse architecture. When to use each?

- What is Delta Lake, and how does it improve upon traditional data lakes?

- Advanced SQL Question:

- How would you handle slowly changing dimensions (SCD) in data warehousing?

- Explain the concept of backpressure in stream processing. How can you handle it?

- What are the best practices for managing infrastructure as code in data engineering?

- How do you ensure security and compliance in your data pipelines?

- Conclusion

Data Engineering Interview Questions and Answers: Fresher Level (0–1 Year of Experience)

If you’re just starting your career in data engineering, employers will primarily assess your understanding of foundational concepts: databases, SQL, ETL workflows, and basic architecture. This section covers beginner-friendly questions that test your grasp of core principles and your ability to apply them in real-world scenarios.

1. What is Data Engineering? How is it different from Data Science?

Data engineering is the discipline of designing, building, and maintaining systems and infrastructure that enable the collection, storage, and processing of large volumes of data efficiently.

While data science is focused on extracting insights from data using statistical methods and machine learning, data engineering is about ensuring that the data is clean, reliable, and accessible for such analysis.

2. What is a Data Pipeline?

A data pipeline is a set of processes that move data from one system to another — typically from a data source (like an API or database) to a storage or analytics system.

It usually involves:

- Extracting data from a source.

- Transforming it into a usable format.

- Loading it into a storage system or database.

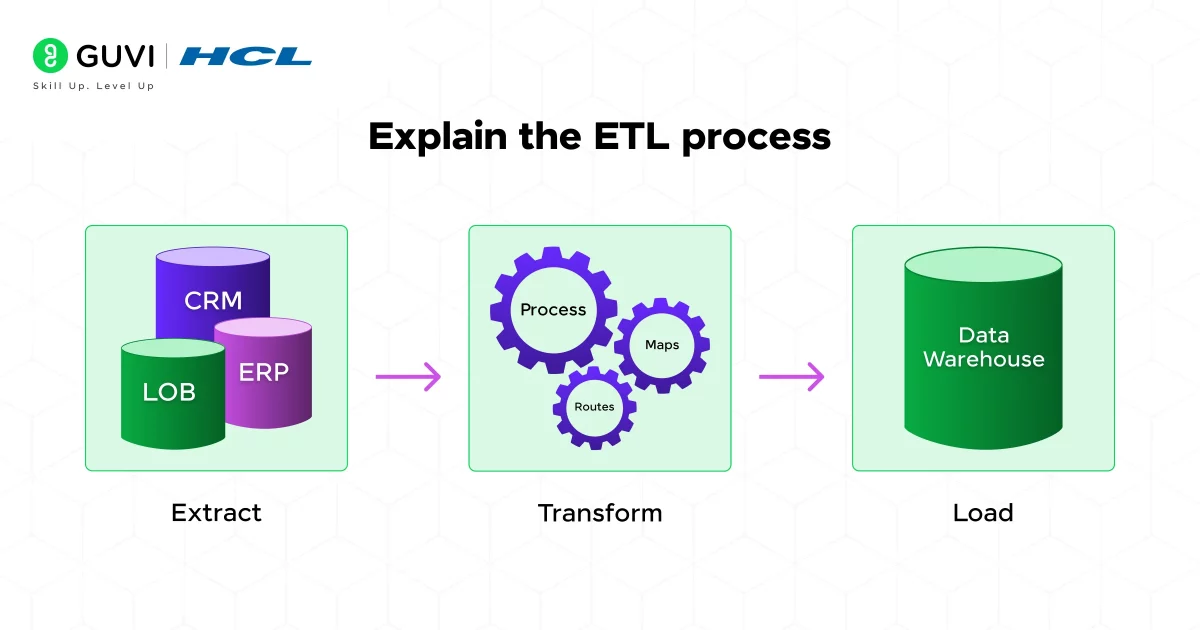

3. Explain the ETL process.

ETL stands for Extract, Transform, Load — a traditional process used to prepare data for analysis.

- Extract: Pull data from various sources (APIs, databases, flat files).

- Transform: Clean and process the data (e.g., remove nulls, convert formats, aggregate).

- Load: Insert the transformed data into a target system like a data warehouse.
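The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the inline CSV text, the `orders` table, and the in-memory SQLite database (standing in for a data warehouse) are all assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; a real pipeline would read from an API, database, or file.
RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,,usd
3,5.00,eur
"""

def extract(text):
    """Extract: parse CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows with a missing amount, convert types, normalize case."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip rows where the amount is empty
        cleaned.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
    return cleaned

def load(rows, conn):
    """Load: insert the cleaned rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, currency TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT order_id, amount, currency FROM orders ORDER BY order_id").fetchall()
print(loaded)
```

Keeping each stage as its own function makes it easier to test the transform logic separately from the source and the target.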

4. What is the difference between OLTP and OLAP systems?

- OLTP (Online Transaction Processing):

  - Used for handling high volumes of small, quick transactions.

  - Examples: banking systems, order-entry apps.

  - Prioritizes speed and accuracy.

- OLAP (Online Analytical Processing):

  - Used for complex queries on large datasets.

  - Supports reporting and analytics.

  - Examples: BI dashboards, financial forecasting.

5. What are the different types of databases used in data engineering?

Here are the main types:

- Relational Databases (SQL): Structured schema, uses tables (e.g., MySQL, PostgreSQL).

- NoSQL Databases: Schema-less or flexible schemas.

  - Document-based: MongoDB

  - Key-value stores: Redis

  - Wide-column stores: Cassandra

- Columnar Databases: Optimized for analytics (e.g., Amazon Redshift, Google BigQuery).

- Time-Series Databases: Used for time-stamped data (e.g., InfluxDB, TimescaleDB).

Each is suited for specific use cases based on performance, scalability, and structure.

6. What are normalization and denormalization in databases?

- Normalization: Organizing data to reduce redundancy and improve data integrity.

  Example: Splitting customer and order details into separate tables and linking them via foreign keys.

- Denormalization: Combining tables to reduce joins and improve read performance, often used in analytics systems.

7. SQL Coding Question: Write a query to fetch the second highest salary from an “employees” table.

```sql
SELECT MAX(salary)
FROM employees
WHERE salary < (SELECT MAX(salary) FROM employees);
```

Explanation:

- The subquery gets the highest salary.

- The outer query finds the maximum salary that’s less than that — effectively, the second highest.
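As a quick check of the subquery approach, here is a small sketch using Python's built-in `sqlite3` module. The sample names and salaries are made up for illustration. It also shows two edge cases interviewers often ask about: ties at the top salary, and a table with no second-highest value.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Asha", 90000), ("Ben", 120000), ("Chen", 120000), ("Dev", 75000)],
)

QUERY = """
SELECT MAX(salary)
FROM employees
WHERE salary < (SELECT MAX(salary) FROM employees)
"""

# Two employees tie at 120000; the query still returns the next distinct salary.
second_highest = conn.execute(QUERY).fetchone()[0]
print(second_highest)

# With only one distinct salary left, there is no second highest,
# so MAX() over zero rows yields NULL (None in Python).
conn.execute("DELETE FROM employees WHERE salary < 120000")
no_second = conn.execute(QUERY).fetchone()[0]
print(no_second)
```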

Alternate method using LIMIT (MySQL):

```sql
SELECT DISTINCT salary
FROM employees
ORDER BY salary DESC
LIMIT 1 OFFSET 1;
```

8. What is the difference between INNER JOIN and LEFT JOIN in SQL?

- INNER JOIN: Returns only matching rows from both tables.

- LEFT JOIN: Returns all rows from the left table and matching rows from the right table. If no match, NULLs are returned for the right table columns.

Example:

```sql
-- Inner Join
SELECT * FROM orders
INNER JOIN customers ON orders.customer_id = customers.id;

-- Left Join
SELECT * FROM orders
LEFT JOIN customers ON orders.customer_id = customers.id;
```

Use LEFT JOIN when you want all records from one table, even if related records don’t exist in the other.
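To see the difference on actual rows, the sketch below runs both joins against an in-memory SQLite database. The sample data is hypothetical; order 3 deliberately points at a customer ID that does not exist.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER, name TEXT);
CREATE TABLE orders (id INTEGER, customer_id INTEGER);
INSERT INTO customers VALUES (10, 'Asha'), (11, 'Ben');
INSERT INTO orders VALUES (1, 10), (2, 11), (3, 99);
""")

# INNER JOIN keeps only orders that have a matching customer.
inner_rows = conn.execute("""
SELECT orders.id, customers.name
FROM orders
INNER JOIN customers ON orders.customer_id = customers.id
ORDER BY orders.id
""").fetchall()

# LEFT JOIN keeps every order; unmatched customer columns come back as NULL (None).
left_rows = conn.execute("""
SELECT orders.id, customers.name
FROM orders
LEFT JOIN customers ON orders.customer_id = customers.id
ORDER BY orders.id
""").fetchall()

print(inner_rows)
print(left_rows)
```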

9. What are primary keys and foreign keys?

- Primary Key: A unique identifier for each record in a table. Cannot be NULL.

- Foreign Key: A field that creates a relationship between two tables. It refers to a primary key in another table.

Example:

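```sql
-- Customers table: id is the primary key
CREATE TABLE customers (
    id INT PRIMARY KEY,
    name VARCHAR(100)
);

-- Orders table: customer_id is a foreign key referencing customers.id
CREATE TABLE orders (
    id INT PRIMARY KEY,
    customer_id INT,
    FOREIGN KEY (customer_id) REFERENCES customers (id)
);
```

This setup ensures referential integrity between tables.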
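Referential integrity is easiest to understand by watching a bad insert get rejected. The following sketch uses Python's `sqlite3` module with made-up sample rows. Note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`; most other relational databases enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite checks foreign keys only when this is enabled
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("""
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL,
    FOREIGN KEY (customer_id) REFERENCES customers (id)
)
""")

conn.execute("INSERT INTO customers VALUES (1, 'Asha')")
conn.execute("INSERT INTO orders VALUES (100, 1)")  # valid: customer 1 exists

try:
    conn.execute("INSERT INTO orders VALUES (101, 42)")  # invalid: no customer 42
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(rejected, order_count)
```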

10. What is data warehousing, and why is it important?

A data warehouse is a central repository of integrated data from various sources, structured for querying and analysis.

Key benefits:

- Handles large-scale analytics.

- Supports decision-making.

- Enables historical data analysis.

Popular data warehousing tools: Snowflake, Amazon Redshift, Google BigQuery.

Data Engineering Interview Questions and Answers: Intermediate Level (1–3 Years of Experience)

At the intermediate level, the focus shifts to practical experience: designing pipelines, working with distributed systems, managing data quality, and optimizing workflows.

You’ll also encounter more hands-on coding and tooling questions. This section dives into the concepts and technologies that working professionals are expected to handle with confidence.

11. What is the role of a data engineer in a modern data stack?

In a modern data stack, a data engineer’s role includes:

- Building and managing scalable data pipelines (ETL/ELT).

- Maintaining data quality, reliability, and integrity.

- Integrating multiple data sources — APIs, databases, logs, etc.

- Enabling analytics and BI by preparing data for analysts and data scientists.

- Optimizing storage and compute resources in cloud environments.

- Automating workflows using orchestration tools like Apache Airflow or Prefect.

They act as the bridge between raw data and usable business intelligence.

12. What is partitioning in databases or data lakes?

Partitioning refers to splitting large datasets into smaller, more manageable chunks, typically based on a column like date, region, or category.

Types:

- Horizontal Partitioning: Splitting rows (e.g., data by year).

- Vertical Partitioning: Splitting columns (less common in analytics).
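Horizontal partitioning in a data lake is often expressed as a directory layout such as `event_date=2025-03-28/region=EU/`. The sketch below groups records into partitions of that shape and then reads back only the matching ones (partition pruning). The record fields and helper names are hypothetical, and the "storage" is just a Python dict.

```python
from collections import defaultdict

# Hypothetical event records; field names are illustrative only.
events = [
    {"event_id": 1, "event_date": "2025-03-28", "region": "EU"},
    {"event_id": 2, "event_date": "2025-03-28", "region": "US"},
    {"event_id": 3, "event_date": "2025-03-29", "region": "EU"},
    {"event_id": 4, "event_date": "2025-03-28", "region": "EU"},
]

def partition_path(record, keys=("event_date", "region")):
    """Build a partition path such as event_date=2025-03-28/region=EU."""
    return "/".join(f"{key}={record[key]}" for key in keys)

def partition_records(records, keys=("event_date", "region")):
    """Group rows by their partition path (horizontal partitioning)."""
    partitions = defaultdict(list)
    for record in records:
        partitions[partition_path(record, keys)].append(record)
    return dict(partitions)

def prune(partitions, prefix):
    """Partition pruning: read only partitions whose path starts with the prefix."""
    return [row for path, rows in partitions.items() if path.startswith(prefix) for row in rows]

partitions = partition_records(events)
march_28 = prune(partitions, "event_date=2025-03-28/")
print(sorted(partitions))
print(sorted(row["event_id"] for row in march_28))
```

A query that filters on the partition column only touches the matching directories, which is where most of the performance benefit comes from.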

13. Explain how batch processing differs from stream processing. Give use cases.

| Aspect | Batch Processing | Stream Processing |
| --- | --- | --- |
| Data Arrival | Data comes in chunks | Continuous flow of data |
| Latency | High (minutes to hours) | Low (milliseconds to seconds) |
| Tools | Apache Spark, AWS Glue | Apache Kafka, Apache Flink, Spark Streaming |
| Use Cases | Daily sales reports, monthly billing | Fraud detection, real-time alerts |

Real Example:

- Batch: Aggregating daily sales across branches every midnight.

- Stream: Detecting fraudulent credit card activity in real time.
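The contrast can be shown without any framework. In this simplified sketch, the batch function sees the whole dataset and returns one final result, while the stream function updates its state per event and emits a running total immediately. The sales tuples are invented sample data, and a Python generator stands in for a real stream processor.

```python
from collections import defaultdict

# Hypothetical (branch, amount) sales events.
sales = [("north", 120.0), ("south", 80.0), ("north", 30.0)]

def batch_totals(records):
    """Batch: process the complete dataset, then return the final totals once."""
    totals = defaultdict(float)
    for branch, amount in records:
        totals[branch] += amount
    return dict(totals)

def stream_totals(records):
    """Stream: update state for each event and emit the running total right away."""
    totals = defaultdict(float)
    for branch, amount in records:
        totals[branch] += amount
        yield branch, totals[branch]

batch_result = batch_totals(sales)
stream_result = list(stream_totals(sales))
print(batch_result)
print(stream_result)
```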

14. What tools are commonly used for stream processing?

Some popular tools for stream processing include:

- Apache Kafka – Distributed event streaming platform (for message ingestion).

- Apache Flink – Low-latency stream processing engine.

- Spark Streaming – Micro-batch processing using Spark.

- Apache Pulsar – Pub-sub + queue-based messaging system.

- Kafka Streams / ksqlDB – Lightweight stream processing directly on Kafka topics.

These tools enable real-time analytics, event-driven applications, and alerting systems.

15. What is Apache Spark? Why is it popular among data engineers?

Apache Spark is an open-source, distributed computing engine designed for big data processing.

Why it’s popular:

- Supports large-scale batch and stream processing.

- In-memory computation for faster processing than traditional Hadoop MapReduce.

- High-level APIs in Python (PySpark), Java, Scala, and R.

- Rich libraries: Spark SQL, MLlib, GraphX, Spark Streaming.

16. Python Coding Question:

Write a script to remove duplicate rows from a CSV file using pandas.

```python
import pandas as pd

# Load CSV
df = pd.read_csv('data.csv')

# Drop duplicates
df_cleaned = df.drop_duplicates()

# Save back to new file
df_cleaned.to_csv('cleaned_data.csv', index=False)
```

17. What is schema evolution in Big Data?

Schema evolution is the ability of a data system to handle schema changes (e.g., adding/removing fields) without breaking pipelines.

It’s critical in big data file formats and platforms such as:

- Apache Avro, Apache Parquet

- Delta Lake

- BigQuery
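The core idea can be sketched in plain Python: a reader with a newer schema fills in defaults for fields that older records lack and ignores fields it does not know about. This is a conceptual illustration only, loosely modeled on how reader/writer schema resolution behaves in formats like Avro; it does not use any Avro or Parquet API, and the field names are hypothetical.

```python
# Reader schema: field name -> default used when an older record lacks the field.
# Assumption for this example: "country" was added in a later schema version.
READER_SCHEMA = {"user_id": None, "email": None, "country": "unknown"}

def resolve(record, reader_schema=READER_SCHEMA):
    """Project a record onto the reader schema.

    Missing fields get their default (backward compatibility);
    fields the reader does not know are dropped (forward compatibility).
    """
    return {field: record.get(field, default) for field, default in reader_schema.items()}

old_record = {"user_id": 1, "email": "a@example.com"}  # written before "country" existed
new_record = {"user_id": 2, "email": "b@example.com", "country": "IN", "plan": "pro"}

resolved = [resolve(old_record), resolve(new_record)]
print(resolved)
```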

18. How do you handle data quality issues in pipelines?

Data quality issues can be handled through:

- Validation Rules: Check for nulls, data types, and ranges.

- Data Profiling: Analyze source data for anomalies before ingestion.

- Monitoring & Alerts: Track unexpected drops/spikes in data volume.

- Automated Testing: Use tools like Great Expectations or Deequ.

- Quarantine Bad Data: Send invalid rows to a separate location for review.

Good data pipelines include logging, alerts, and retries to handle data quality proactively.
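As a rough illustration of validation rules plus quarantine, the sketch below checks each row for nulls, types, and ranges, then routes failing rows to a separate list along with the reasons. The rules, field names, and the 0 to 10,000 range are assumptions for the example; tools like Great Expectations or Deequ express similar checks declaratively.

```python
def validate(row):
    """Return a list of rule violations for one row (an empty list means valid)."""
    errors = []
    if row.get("user_id") is None:
        errors.append("user_id is null")
    amount = row.get("amount")
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        errors.append("amount is not numeric")
    elif not 0 <= amount <= 10_000:
        errors.append("amount out of range")
    return errors

def split_rows(rows):
    """Separate valid rows from quarantined rows, keeping the reasons for review."""
    valid, quarantined = [], []
    for row in rows:
        errors = validate(row)
        if errors:
            quarantined.append({"row": row, "errors": errors})
        else:
            valid.append(row)
    return valid, quarantined

rows = [
    {"user_id": 1, "amount": 25.0},
    {"user_id": None, "amount": 10.0},
    {"user_id": 3, "amount": -5},
    {"user_id": 4, "amount": "12"},
]
valid, quarantined = split_rows(rows)
print(len(valid), [item["errors"] for item in quarantined])
```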

19. What are some commonly used orchestration tools?

Orchestration tools manage the execution order, dependencies, and monitoring of data workflows.

Popular ones:

- Apache Airflow – Python-based DAG (Directed Acyclic Graph) workflow tool.

- Prefect – Modern alternative to Airflow with a simpler, cloud-native design.

- Luigi – Workflow tool developed by Spotify, good for ETL pipelines.

- Dagster – Focuses on software engineering best practices for data pipelines.

These tools allow you to schedule, retry, monitor, and log data jobs efficiently.
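What an orchestrator does at its core (resolve dependencies, run tasks in order, retry failures) can be approximated with the standard library. The sketch below uses `graphlib.TopologicalSorter` (Python 3.9+); the task names, the flaky task, and the `run_pipeline` helper are hypothetical, and real tools add scheduling, logging, and distributed execution on top.

```python
from graphlib import TopologicalSorter

# Hypothetical DAG: each task maps to the set of tasks it depends on.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_data": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_data"},
}

def run_pipeline(dag, tasks, max_retries=2):
    """Run tasks in dependency order, retrying a failed task up to max_retries times."""
    executed = []
    for name in TopologicalSorter(dag).static_order():
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                executed.append((name, attempt))
                break
            except Exception:
                if attempt == max_retries + 1:
                    raise  # retries exhausted: fail the whole run

    return executed

calls = {"join_data": 0}

def flaky_join():
    """Simulated task that fails on its first attempt and succeeds afterwards."""
    calls["join_data"] += 1
    if calls["join_data"] == 1:
        raise RuntimeError("transient failure")

tasks = {name: (lambda: None) for name in dag}
tasks["join_data"] = flaky_join

executed = run_pipeline(dag, tasks)
order = [name for name, _ in executed]
print(executed)
```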

20. What is the CAP theorem, and how does it apply to distributed systems?

The CAP Theorem states that a distributed system can only guarantee two out of three of the following at a given time:

- Consistency – Every node sees the same data at the same time.

- Availability – Every request gets a response (even if not the latest).

- Partition Tolerance – The system continues to operate despite network failures.

Implication:

- Network partitions cannot be ruled out in a distributed system, so in practice the trade-off is between consistency and availability while a partition lasts.

- For example:

  - CP: HBase (consistent, but may reject requests during a partition)

  - AP: Cassandra (available, but eventually consistent)

Understanding CAP is crucial when designing high-availability and fault-tolerant systems.

Data Engineering Interview Questions and Answers: Advanced Level (3+ Years of Experience)

Senior-level interviews are designed to evaluate how well you can architect scalable systems, manage end-to-end data platforms, and make strategic decisions.

You’ll be tested on stream processing, system design, infrastructure as code, and compliance practices. This section is for those aiming to lead data initiatives or step into more specialized roles.

21. Design a real-time analytics pipeline for a ride-sharing app.

A ride-sharing app generates data from drivers, passengers, locations, payments, and more. A real-time pipeline would look like:

Ingestion:

- Kafka collects location updates, trip events, and payments in real-time.

Stream Processing:

- Apache Flink or Spark Streaming processes location events to calculate live ETAs, surge pricing, or driver heatmaps.

Storage:

- Hot Storage: Redis for caching live data (e.g., nearby drivers).

- Cold Storage: S3/Data Lake or Delta Lake for historical trip data.

Analytics & Visualization:

- Presto/Trino or Druid for interactive dashboards.

- BI tools like Superset, Looker, or Tableau.

Monitoring:

- Prometheus + Grafana for pipeline health.

This system ensures low-latency decisions (e.g., matching rider and driver) and deep analytics (e.g., route optimization).

22. How would you optimize a slow-running Spark job?

Optimizing a Spark job involves several tuning techniques:

- Use Partitioning Wisely

- Avoid Wide Transformations

- Cache Strategically

- Optimize Joins

- Use Efficient Formats

- Tune Executors

- Monitor with Spark UI

23. How do you ensure data lineage and observability in a complex pipeline?

Data Lineage tracks how data flows across systems — essential for debugging, auditing, and compliance.

Ways to implement:

- Metadata Tracking

- Pipeline Graphs

- Column-level Lineage

24. Explain data lake vs. data warehouse architecture. When to use each?

| Feature | Data Lake | Data Warehouse |
| --- | --- | --- |
| Data Type | Raw (structured, semi-structured, unstructured) | Structured data only |
| Storage Format | Files (Parquet, Avro, CSV, JSON) | Tables |
| Cost | Cheaper (object storage) | Expensive (compute + storage) |
| Use Cases | ML/AI, raw data archiving | BI, dashboarding, reporting |
| Examples | S3 + Athena, Delta Lake | Snowflake, BigQuery, Redshift |

When to use:

- Use a data lake when you need flexibility and are processing raw logs, images, or JSONs.

- Use a warehouse for structured reporting and SQL-based analytics.

Modern setups often combine both as a lakehouse architecture.

25. What is Delta Lake, and how does it improve upon traditional data lakes?

Delta Lake is an open-source storage layer that brings ACID transactions to data lakes.

Improvements:

- ACID Compliance: Safe concurrent reads/writes.

- Schema Enforcement/Evolution: Prevents bad data from corrupting pipelines.

- Time Travel: Query historical versions of data (like Git for data).

- Upserts/Merges: Efficient support for CDC (Change Data Capture).

26. Advanced SQL Question:

Write a query to find users who made more than 3 purchases in the last 30 days.

```sql
-- Interval syntax shown is PostgreSQL-style; adjust it for your SQL dialect
SELECT user_id
FROM purchases
WHERE purchase_date >= CURRENT_DATE - INTERVAL '30 days'
GROUP BY user_id
HAVING COUNT(*) > 3;
```

27. How would you handle slowly changing dimensions (SCD) in data warehousing?

SCD refers to how changes in dimensional data (e.g., customer address) are tracked over time.

Common types:

- Type 1 (Overwrite): Replace old value. No history.

- Type 2 (Add Row): Add a new row with a timestamp/version. Keeps history.

- Type 3 (Add Column): Add a new column for the previous value.
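Type 2 is the one interviewers most often ask you to implement. A minimal sketch, assuming a dimension held as a list of dicts with hypothetical `valid_from`, `valid_to`, and `is_current` columns: the current row is closed out and a new current row is appended, so history is preserved. In a warehouse this is usually done with a MERGE statement instead.

```python
from datetime import date

def apply_scd2(dimension, customer_id, new_address, effective):
    """Type 2 update: close the current row and append a new current row.

    Returns a new list; the input rows are not modified.
    """
    updated = []
    for row in dimension:
        if row["customer_id"] == customer_id and row["is_current"]:
            if row["address"] == new_address:
                return dimension  # nothing changed, so no new version is needed
            row = {**row, "valid_to": effective, "is_current": False}
        updated.append(row)
    updated.append({
        "customer_id": customer_id,
        "address": new_address,
        "valid_from": effective,
        "valid_to": None,
        "is_current": True,
    })
    return updated

dim = [{
    "customer_id": 1,
    "address": "12 Lake Rd",
    "valid_from": date(2024, 1, 1),
    "valid_to": None,
    "is_current": True,
}]
dim_v2 = apply_scd2(dim, 1, "7 Hill St", date(2025, 3, 1))
for row in dim_v2:
    print(row)
```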

28. Explain the concept of backpressure in stream processing. How can you handle it?

Backpressure occurs when the data producer sends events faster than the consumer can process them, leading to queue buildup or system crashes.

How to handle it:

- Buffering & Throttling: Temporarily store and slow down input.

- Autoscaling: Increase processing power dynamically (e.g., via Kubernetes).

- Rate Limiting: Apply limits at the ingestion layer (e.g., Kafka client quotas).

- Async Processing: Use non-blocking frameworks to decouple stages.

Tools like Apache Flink have built-in backpressure handling mechanisms and expose metrics.
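The buffering idea can be demonstrated with a bounded queue from the standard library. In this sketch, the producer's `put` call blocks whenever the buffer is full, which slows the producer to the consumer's pace instead of letting the queue grow without limit. The buffer size, the delay, and the function names are illustrative choices, not taken from any streaming framework.

```python
import queue
import threading
import time

SENTINEL = object()  # marks the end of the stream

def consumer(buffer, processed, delay):
    """Slow consumer: takes one item at a time and simulates processing work."""
    while True:
        item = buffer.get()
        if item is SENTINEL:
            break
        time.sleep(delay)
        processed.append(item)

def run_pipeline(events, maxsize=2, delay=0.01):
    """Push events through a bounded buffer and report the deepest queue seen."""
    buffer = queue.Queue(maxsize=maxsize)
    processed = []
    worker = threading.Thread(target=consumer, args=(buffer, processed, delay))
    worker.start()
    max_depth = 0
    for event in events:
        buffer.put(event)  # blocks while the buffer is full, slowing the producer down
        max_depth = max(max_depth, buffer.qsize())
    buffer.put(SENTINEL)
    worker.join()
    return processed, max_depth

processed, max_depth = run_pipeline(list(range(10)))
print(processed, max_depth)
```

Blocking the producer is only one policy; dropping or sampling events is an alternative when losing some data is acceptable.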

29. What are the best practices for managing infrastructure as code in data engineering?

Infrastructure as Code (IaC) ensures your environment is reproducible, versioned, and auditable.

Best Practices:

- Use tools like Terraform, Pulumi, or CloudFormation

- Modularize your code

- Version Control

- State Management

IaC enables team collaboration, rollback capability, and audit compliance.

30. How do you ensure security and compliance in your data pipelines?

Security and compliance are non-negotiable in data engineering, especially with sensitive PII or financial data.

Ensure security by:

- Data Encryption

- Access Control

- Auditing & Logging

- Compliance Standards

- Data Masking & Anonymization

- Security in Code
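For the masking and anonymization point, a small sketch of two common techniques: partially masking an email for display, and replacing it with a keyed hash so records can still be joined without exposing the raw value. The key, field names, and helper functions are hypothetical; in practice the key would come from a secrets manager, never from source code.

```python
import hashlib
import hmac

# Assumption for the demo only: a real key is loaded from a secrets manager.
SECRET_KEY = b"demo-only-key"

def pseudonymize(value, key=SECRET_KEY):
    """Replace a value with a keyed SHA-256 digest: stable for joins, raw value not exposed."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

record = {"user_id": 42, "email": "priya@example.com", "amount": 19.99}
safe_record = {
    **record,
    "email": mask_email(record["email"]),
    "user_key": pseudonymize(record["email"]),
}
print(safe_record["email"], safe_record["user_key"][:12])
```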

These 30 data engineering interview questions and answers give you a strong foundation to prep from, whether you’re starting or advancing to senior roles.

If you want to learn more about data engineering and gain enough knowledge to ace the interview, consider enrolling in GUVI’s Free Data Engineering Course where you will learn about all the different components of the data pipeline, data warehouses, data marts, data lakes, big data stores, and much more.

Conclusion

In conclusion, interviewing for a data engineering role demands more than theoretical knowledge. It requires a solid understanding of how to build, scale, and maintain reliable data systems in real-world environments.

By mastering these 30 data engineering interview questions and answers, you’re not just preparing to pass interviews; you’re also strengthening the core skills needed to thrive in a fast-paced, data-driven world.
