10 Interesting Data Science Kubernetes Projects To Upskill Your Knowledge

Mar 12, 2025 (Last Updated)

Data science relies on many platforms for processing data, training models, and serving them, and one of the most important is Kubernetes, the open-source system for orchestrating containerized applications. Using Kubernetes to run data science workloads is what we call data science Kubernetes.

If you want to showcase your skills in this area, the most direct way is through data science Kubernetes projects, since practical learning sticks better than theory alone.

In this article, we will look at 10 interesting data science Kubernetes projects that can help you upskill and strengthen your resume.

So, without further ado, let’s get started on data science Kubernetes projects.

Table of contents

- What is meant by Data Science Kubernetes?
- 10 Interesting Data Science Kubernetes Projects
  - Deploying a Machine Learning Model with Kubernetes
  - Building a Scalable ETL Pipeline
  - Distributed Training of Deep Learning Models
  - Real-time Data Processing with Kubernetes and Kafka
  - Data Versioning and Experiment Tracking
  - Building a Recommender System
  - Setting Up a Data Science Workflow with Kubeflow
  - Implementing a Data Science CI/CD Pipeline
  - Creating a Data Lake with Kubernetes
  - Automated Hyperparameter Tuning
- Getting Started with Data Science Kubernetes Projects
  - Step 1: Set Up Your Development Environment
  - Step 2: Learn Kubernetes Basics
  - Step 3: Choose a Project and Plan Your Approach
  - Step 4: Learn and Use Kubernetes Tools for Data Science
  - Step 5: Join the Community and Seek Help
- Conclusion
- FAQs
  - How does Kubernetes improve model deployment in data science?
  - What is the role of containerization in data science Kubernetes projects?
  - What are the security considerations when using Kubernetes for data science?
  - What are some challenges in using Kubernetes for data science?

What is meant by Data Science Kubernetes?

Before we jump into data science Kubernetes projects, let us first understand what is meant by data science Kubernetes and why it is important for you as a data scientist.

Data Science Kubernetes refers to using Kubernetes, a powerful platform for managing containerized applications, to run and manage data science workflows efficiently.

It involves containerizing data science models and applications to ensure they run consistently across different environments.

Kubernetes automates deployment, scaling, and resource management, making it easier to handle large datasets, scale processing tasks, and serve models to users.

By combining the automation and scalability of Kubernetes with data science tasks, you can create robust, scalable, and efficient data science applications that are easier to develop, deploy, and maintain. That is why implementing data science Kubernetes projects can give you an edge over others.


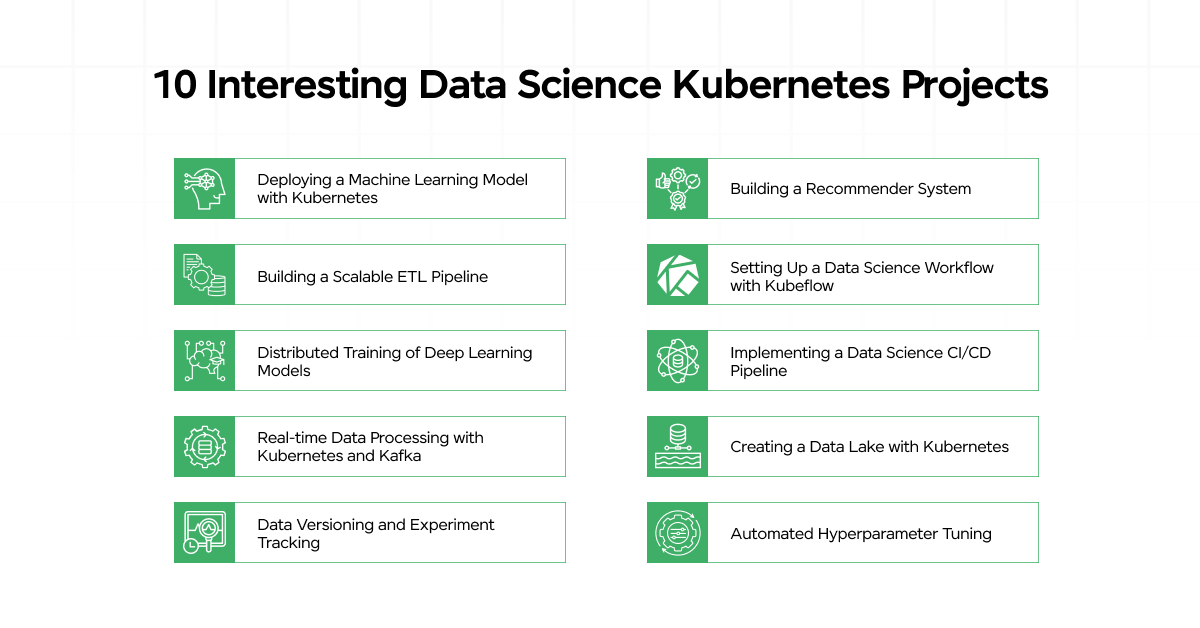

10 Interesting Data Science Kubernetes Projects

Now that you understand what data science Kubernetes is, it is time to see the data science Kubernetes projects that can help you develop as a data scientist.

Before we move any further, you should know the basics of data science and Kubernetes. If you don’t, consider enrolling in a professionally certified online Data Science Course offered by a recognized institution that teaches you the basics and strengthens your foundation.

Here’s a list of 10 interesting data science Kubernetes projects:

1. Deploying a Machine Learning Model with Kubernetes

Let’s start our journey into data science Kubernetes projects by deploying machine learning models.

Deploying machine learning models is a critical step in turning your data science project from a concept into a real-world application.

Kubernetes can simplify this process by managing your model’s lifecycle, scaling it based on demand, and ensuring high availability.

Steps to get started:

- Containerize your machine-learning model using Docker.

- Create a Kubernetes deployment file to specify how your model should be deployed.

- Set up a service to expose your model’s API.

- Use a Kubernetes HorizontalPodAutoscaler to add or remove replicas automatically during traffic spikes.
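The steps above can be sketched in Python as a small script that generates the Deployment, Service, and HorizontalPodAutoscaler manifests for a containerized model. This is a minimal sketch, not a production setup: the app name, image URL, port, resource requests, and the 70% CPU target are hypothetical placeholders, and CPU-based autoscaling assumes the cluster runs the Kubernetes Metrics Server.

```python
import json


def model_manifests(name: str, image: str, port: int = 8080,
                    min_replicas: int = 2, max_replicas: int = 10,
                    cpu_target: int = 70) -> list[dict]:
    """Build Deployment, Service, and HorizontalPodAutoscaler manifests for a model API."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": min_replicas,
            "selector": {"matchLabels": labels},  # must match the pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # CPU requests are needed for CPU-utilization autoscaling.
                        "resources": {"requests": {"cpu": "250m", "memory": "512Mi"}},
                    }]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": labels,                              # routes to the model pods
            "ports": [{"port": 80, "targetPort": port}],     # port 80 -> container port
        },
    }
    hpa = {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": name},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {"name": "cpu",
                             "target": {"type": "Utilization",
                                        "averageUtilization": cpu_target}},
            }],
        },
    }
    return [deployment, service, hpa]


if __name__ == "__main__":
    # A v1 List wraps several objects in one JSON document for kubectl.
    doc = {"apiVersion": "v1", "kind": "List",
           "items": model_manifests("model-api", "registry.example.com/model-api:0.1")}
    print(json.dumps(doc, indent=2))
```

Saved under a hypothetical name such as `make_manifests.py`, the output can be applied with `python make_manifests.py > model.json` followed by `kubectl apply -f model.json`, since kubectl accepts JSON as well as YAML. Generating all three objects from one function keeps their labels and ports consistent.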

2. Building a Scalable ETL Pipeline

Extract, Transform, and Load (ETL) processes are essential for data preprocessing. Kubernetes can help in creating scalable ETL pipelines that can handle large datasets efficiently.

Key components:

- Extraction: Use tools like Apache NiFi or custom Python scripts in containers to extract data from various sources.

- Transformation: Utilize Spark or Dask within Kubernetes pods to clean and transform the data.

- Loading: Load the transformed data into a data warehouse or a data lake.
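As a minimal sketch of the three stages, the script below keeps extract, transform, and load as separate functions so each could be packaged into a container and run as a Kubernetes Job or CronJob. The CSV text and the in-memory SQLite table are hypothetical stand-ins for a real source and a real warehouse or data lake; at scale, the transform step is where Spark or Dask would take over.

```python
import csv
import io
import sqlite3


def extract(raw_csv: str) -> list[dict]:
    """Extract: parse raw CSV text (stand-in for an API, file, or database source)."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop rows with a missing amount, normalize names, cast types."""
    cleaned = []
    for row in rows:
        amount = (row.get("amount") or "").strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append((row["customer"].strip().lower(), float(amount)))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> int:
    """Load: write cleaned rows to a table (stand-in for a warehouse or data lake)."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    raw = "customer,amount\n Alice ,10.5\nBob,\nCAROL,3\n"
    conn = sqlite3.connect(":memory:")
    print(load(transform(extract(raw)), conn))  # prints 2 (Bob has no amount)
    conn.close()
```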

3. Distributed Training of Deep Learning Models

Deep learning models often require significant computational resources. Kubernetes can help distribute the training process across multiple nodes, reducing training time.

How to approach this:

- Use Kubernetes to deploy TensorFlow or PyTorch distributed training jobs.

- Configure the cluster with GPU support if needed.

- Use a Kubernetes Job to manage the training run.
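One way to sketch this is an Indexed Job, where Kubernetes runs one pod per worker and sets the `JOB_COMPLETION_INDEX` environment variable in each pod, which the training script can use as its rank. This is a simplified sketch under stated assumptions: the image name and worker count are hypothetical, the GPU limit assumes the NVIDIA device plugin is installed, and real PyTorch or TensorFlow distributed training also needs the workers to find each other (for example a `MASTER_ADDR` for PyTorch), which operators such as the Kubeflow Training Operator handle for you.

```python
import json


def training_job(name: str, image: str, workers: int, gpus_per_worker: int = 1) -> dict:
    """Build an Indexed Job that runs `workers` training pods at once.

    Kubernetes sets JOB_COMPLETION_INDEX (0..workers-1) in each pod, which the
    training script can read as its rank; WORLD_SIZE tells it how many workers exist.
    """
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "completionMode": "Indexed",  # one unique index per pod
            "completions": workers,       # the Job completes when every index succeeds
            "parallelism": workers,       # start all workers together
            "backoffLimit": 2,            # retries allowed before the Job is marked failed
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{
                        "name": "trainer",
                        "image": image,
                        "env": [{"name": "WORLD_SIZE", "value": str(workers)}],
                        # Assumes GPU nodes run the NVIDIA device plugin.
                        "resources": {"limits": {"nvidia.com/gpu": str(gpus_per_worker)}},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image name; the output can be piped to `kubectl apply -f -`.
    job = training_job("ddp-train", "registry.example.com/trainer:0.1", workers=4)
    print(json.dumps(job, indent=2))
```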

4. Real-time Data Processing with Kubernetes and Kafka

Real-time data processing is crucial for applications like fraud detection, stock trading, and real-time analytics. Kubernetes can be used to manage the infrastructure required for real-time data processing.

Implementation steps:

- Set up Kafka on Kubernetes to handle real-time data streams.

- Use tools like Flink or Spark Streaming to process data in real time.

- Deploy microservices that consume the processed data for further analysis or storage.
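The processing step can be illustrated with a tumbling-window count, the kind of aggregation Flink and Spark Streaming provide built in. In this sketch, a plain Python iterable of `(timestamp, card_id)` pairs stands in for messages read from a Kafka topic, and the 60-second window and the threshold of three events are hypothetical fraud-detection settings.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def flag_bursts(events: Iterable[tuple[float, str]], window_s: float = 60.0,
                max_events: int = 3) -> Iterator[tuple[int, str, int]]:
    """Count events per card in fixed (tumbling) windows and yield
    (window_index, card, count) the first time a card exceeds max_events
    within a window. Events are assumed to arrive in timestamp order."""
    current_window = None
    counts: dict[str, int] = defaultdict(int)
    for ts, card in events:
        window = int(ts // window_s)
        if window != current_window:
            counts.clear()  # a new window starts: reset all counters
            current_window = window
        counts[card] += 1
        if counts[card] == max_events + 1:  # report each card once per window
            yield window, card, counts[card]
```

In a deployed version, the loop body would run inside a consumer microservice, and flagged cards would be published to another topic or written to storage for further analysis.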

5. Data Versioning and Experiment Tracking

Next up on our list of data science Kubernetes projects, we have data versioning and experiment tracking. Keeping track of different versions of your datasets and experiments is essential for reproducibility in data science. Kubernetes can help manage these aspects effectively.

Tools to use:

- DVC (Data Version Control): Use DVC to version your datasets and track changes.

- MLflow: Deploy MLflow on Kubernetes to track your experiments, models, and results.
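DVC tracks a dataset by recording an MD5 hash of its contents in small metafiles, and MLflow records the parameters and metrics of each run. The stdlib sketch below illustrates the same idea without using either tool’s API: it hashes a dataset file and appends one JSON line per experiment, so every result can be traced back to the exact data it used. The file names and record fields are hypothetical.

```python
import hashlib
import json
import time
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Content hash of a dataset file, read in chunks so large files need little memory."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def log_run(log_path: Path, data_path: Path, params: dict, metrics: dict) -> dict:
    """Append one experiment record (data hash, params, metrics) as a JSON line."""
    record = {
        "timestamp": time.time(),
        "data_md5": file_md5(data_path),  # changes whenever the data changes
        "params": params,
        "metrics": metrics,
    }
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record
```

On Kubernetes, the log file would need to live on persistent storage (for example a PersistentVolume) so records survive pod restarts.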


6. Building a Recommender System

Recommender systems are widely used in e-commerce, streaming services, and social media. Kubernetes can help deploy a scalable and efficient recommender system.

Project outline:

- Preprocess and clean your dataset using a Kubernetes ETL pipeline.

- Train a recommendation model using collaborative filtering or deep learning techniques.

- Deploy the model using Kubernetes, ensuring it can scale to meet user demands.
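For the training step, item-based collaborative filtering is a simple starting point: items are compared by the ratings users gave them, and unseen items are scored by their similarity to what a user already rated. This sketch works on plain dictionaries of hypothetical ratings; in the project, it would run as a Kubernetes Job on the output of the ETL pipeline, and the resulting recommendations would be served by a Deployment.

```python
import math
from collections import defaultdict


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors keyed by user id."""
    dot = sum(a[u] * b[u] for u in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recommend(ratings: dict[str, dict[str, float]], user: str, k: int = 2) -> list[str]:
    """Item-based collaborative filtering: score each unseen item by its similarity
    to the items the user already rated, weighted by those ratings."""
    # Invert user -> {item: rating} into item -> {user: rating} vectors.
    by_item: dict[str, dict[str, float]] = defaultdict(dict)
    for u, items in ratings.items():
        for item, r in items.items():
            by_item[item][u] = r
    seen = ratings.get(user, {})
    scores = {}
    for candidate, vec in by_item.items():
        if candidate in seen:
            continue  # only recommend items the user has not rated
        scores[candidate] = sum(r * cosine(vec, by_item[item]) for item, r in seen.items())
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda i: (-scores[i], i))[:k]
```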

7. Setting Up a Data Science Workflow with Kubeflow

Kubeflow is an open-source platform built on Kubernetes, specifically designed for machine learning workflows. It can help manage end-to-end ML workflows, from data preparation to model deployment.

Key features:

- Use Kubeflow Pipelines to orchestrate complex workflows.

- Manage Jupyter notebooks for interactive data analysis.

- Deploy trained models with KServe (formerly KFServing).
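Kubeflow Pipelines runs a workflow as a directed acyclic graph (DAG) in which each step executes in its own container. The stdlib sketch below shows only the scheduling idea, not the Kubeflow Pipelines SDK: it groups a hypothetical set of steps into stages whose dependencies are all satisfied, so the steps within one stage could run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
PIPELINE = {
    "ingest": set(),
    "validate": {"ingest"},
    "profile": {"ingest"},
    "featurize": {"validate"},
    "train": {"featurize"},
    "evaluate": {"train", "profile"},
    "deploy": {"evaluate"},
}


def plan_stages(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group steps into stages: every step in a stage has all of its dependencies
    in earlier stages, so an orchestrator could run a stage's steps in parallel."""
    ts = TopologicalSorter(dag)
    ts.prepare()  # also raises CycleError if the graph has a cycle
    stages = []
    while ts.is_active():
        ready = sorted(ts.get_ready())
        stages.append(ready)
        ts.done(*ready)  # mark the whole stage as finished
    return stages
```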

8. Implementing a Data Science CI/CD Pipeline

Continuous Integration and Continuous Deployment (CI/CD) are essential practices in software development, and they are equally important in data science to ensure that models and data pipelines are always up-to-date and reliable. Kubernetes can help automate and manage these processes effectively.

Steps to set up:

- Use tools like Jenkins or GitLab CI to set up a CI/CD pipeline for your data science projects.

- Containerize your data preprocessing, model training, and deployment steps.

- Create Kubernetes jobs and deployments to automate these steps whenever new data is available or when there are updates to your codebase.

- Integrate monitoring tools like Prometheus and Grafana to keep track of your pipeline’s performance and health.
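A common step in such a pipeline is a quality gate that blocks deployment when a newly trained model is worse than the one in production. This is a minimal sketch: the metric name, the 0.005 margin, and the JSON metrics files are hypothetical, and the script relies on the convention that CI tools such as Jenkins and GitLab CI treat a non-zero exit code as a failed step.

```python
import json
import sys


def should_promote(candidate: dict, baseline: dict, metric: str = "accuracy",
                   min_gain: float = 0.0) -> bool:
    """True if the candidate's metric is at least the baseline's plus min_gain."""
    return candidate[metric] >= baseline[metric] + min_gain


def gate(candidate_path: str, baseline_path: str, min_gain: float = 0.005) -> int:
    """Compare two metrics files and return a process exit code (0 = promote)."""
    with open(candidate_path, encoding="utf-8") as f:
        candidate = json.load(f)
    with open(baseline_path, encoding="utf-8") as f:
        baseline = json.load(f)
    ok = should_promote(candidate, baseline, min_gain=min_gain)
    print("promote" if ok else "reject")
    return 0 if ok else 1  # a non-zero code fails the CI step


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python gate.py candidate_metrics.json baseline_metrics.json
    sys.exit(gate(sys.argv[1], sys.argv[2]))
```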

9. Creating a Data Lake with Kubernetes

A data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale. Kubernetes can help you manage and scale your data lake infrastructure efficiently.

Implementation outline:

- Use MinIO to set up scalable, S3-compatible object storage on Kubernetes, optionally adding a table format such as Apache Hudi on top of it for record-level updates.

- Ingest data from various sources into your data lake using Kafka or Apache NiFi.

- Use Kubernetes to deploy processing engines like Apache Spark or Presto for analyzing the data stored in your data lake.

- Implement access controls and security measures to protect your data.
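Organizing object keys by partition is one common way to keep a data lake queryable as it grows. This sketch assumes a Hive-style layout (`key=value` path segments), which Spark and Presto can use to skip partitions that a query’s date filter rules out. The `raw/` prefix, dataset name, and file name are hypothetical, and with MinIO the returned string would be the object name inside a bucket.

```python
from datetime import datetime, timezone


def partition_key(dataset: str, event_time: datetime, file_name: str) -> str:
    """Build a Hive-style partitioned object key such as
    raw/events/year=2025/month=03/day=12/part-0.json, using the UTC date."""
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    t = event_time.astimezone(timezone.utc)  # partition by UTC so all writers agree
    return f"raw/{dataset}/year={t.year:04d}/month={t.month:02d}/day={t.day:02d}/{file_name}"
```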

10. Automated Hyperparameter Tuning

Hyperparameter tuning is a critical step in improving the performance of your machine-learning models. Kubernetes can help automate this process, making it more efficient and scalable.

How to approach this:

- Use tools like Katib, which is a Kubernetes-native project for automated hyperparameter tuning.

- Define the search space for your hyperparameters and configure the tuning strategy (e.g., grid search, random search, or Bayesian optimization).

- Deploy Katib on your Kubernetes cluster and run your hyperparameter tuning experiments in parallel.

- Analyze the results to identify the best hyperparameters for your model.
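Katib runs each trial as a separate Kubernetes workload and supports strategies such as random search. The stdlib sketch below shows random search itself, not Katib’s API: a thread pool stands in for parallel trial pods, and the objective function you pass in replaces real model training.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def sample(space: dict[str, tuple[float, float]], rng: random.Random) -> dict[str, float]:
    """Draw one configuration uniformly from each parameter's [low, high] range."""
    return {name: rng.uniform(low, high) for name, (low, high) in space.items()}


def random_search(objective, space, trials: int = 20, parallel: int = 4, seed: int = 0):
    """Random search: sample all configurations up front, evaluate them in parallel,
    and return the (score, config) pair with the highest score."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    configs = [sample(space, rng) for _ in range(trials)]
    with ThreadPoolExecutor(max_workers=parallel) as pool:
        scores = list(pool.map(objective, configs))  # results keep input order
    return max(zip(scores, configs), key=lambda pair: pair[0])
```

For example, with the toy objective `lambda c: -(c["lr"] - 0.1) ** 2` and the space `{"lr": (0.0, 1.0)}`, a few hundred trials should return a learning rate close to 0.1, where that objective peaks.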

These 10 data science Kubernetes projects offer plenty of opportunities to explore how data science and Kubernetes work together.

By implementing these, you’ll gain valuable experience and insights into how Kubernetes can streamline and enhance your data science workflows.


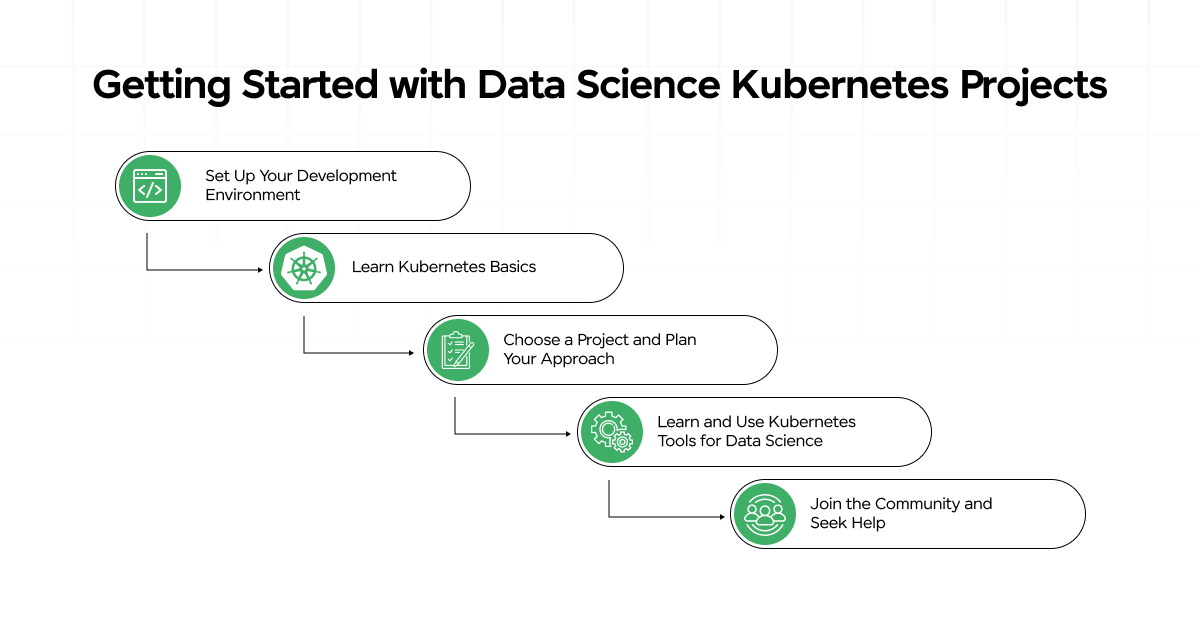

Getting Started with Data Science Kubernetes Projects

The previous section covered the data science Kubernetes projects; this section explains how to get started with them.

Starting your data science journey with these data science Kubernetes projects can seem daunting at first, but with a structured approach, you’ll be well on your way to utilizing Kubernetes for your data science workflows.

Here’s a step-by-step guide to get you started on data science Kubernetes projects:

Step 1: Set Up Your Development Environment

Before diving into the projects, ensure you have the necessary tools and environment set up.

- Install Docker: Docker is essential for containerizing your applications. Install Docker and ensure it’s running properly.

- Set up a Kubernetes cluster: You can use Minikube for local development or a managed cloud service such as Google Kubernetes Engine (GKE), Amazon EKS, or Azure Kubernetes Service (AKS) for a more scalable setup.

- Install kubectl: kubectl is the command-line tool for interacting with your Kubernetes cluster.
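A small preflight script can confirm the tools are installed before you start. This sketch only checks that each command is on your `PATH`; it does not verify that Docker is running or that kubectl can reach a cluster (`kubectl cluster-info` does the latter). The tool list is an assumption: drop `minikube` if you use a managed cloud cluster.

```python
import shutil

# minikube is only needed for a local cluster.
REQUIRED_TOOLS = ("docker", "kubectl", "minikube")


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which) -> list[str]:
    """Return the tools that cannot be found on PATH."""
    return [t for t in tools if which(t) is None]


if __name__ == "__main__":
    missing = missing_tools()
    print("All tools found" if not missing else "Missing: " + ", ".join(missing))
```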

Step 2: Learn Kubernetes Basics

Familiarize yourself with Kubernetes concepts such as Pods, Deployments, Services, and ConfigMaps.

Step 3: Choose a Project and Plan Your Approach

Select a project from the list of data science Kubernetes projects and outline the steps needed to complete it. Let’s take “Deploying a Machine Learning Model with Kubernetes” as an example.

Project Outline:

- Containerize the Model:
  - Create a Dockerfile for your ML model.
  - Build and test the Docker image locally.
- Deploy to Kubernetes:
  - Apply the Deployment and Service files to your Kubernetes cluster using `kubectl apply -f <file-name>.yaml`.
  - Verify the deployment using `kubectl get pods` and `kubectl get services`.
- Test and Monitor:
  - Test your deployed model’s API to ensure it’s working correctly.
  - Set up monitoring tools like Prometheus and Grafana to track the performance and health of your deployment.
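For the “Test and Monitor” step, a quick smoke test can send one request to the model and check the reply. This sketch assumes a hypothetical `/predict` endpoint that accepts `{"features": [...]}` and returns `{"prediction": <number>}`; adjust both to your API. To reach the Service from your machine, `kubectl port-forward service/<service-name> 8080:80` forwards a local port to it.

```python
import json
import urllib.request


def check_prediction(body: bytes) -> bool:
    """Validate a response of the assumed form {"prediction": <number>}."""
    try:
        payload = json.loads(body)
    except ValueError:  # covers JSONDecodeError and undecodable bytes
        return False
    if not isinstance(payload, dict):
        return False
    value = payload.get("prediction")
    # bool is a subclass of int, so exclude it explicitly.
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def smoke_test(url: str, features: list[float], timeout: float = 5.0) -> bool:
    """POST one feature vector to the model endpoint and validate the reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps({"features": features}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # urlopen raises HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status == 200 and check_prediction(resp.read())
```

With the port-forward running, `smoke_test("http://localhost:8080/predict", [5.1, 3.5, 1.4, 0.2])` would exercise the whole path from Service to pod.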

Step 4: Learn and Use Kubernetes Tools for Data Science

Familiarize yourself with data science tools and frameworks that integrate well with Kubernetes, such as:

- Kubeflow: For managing end-to-end machine learning workflows.

- MLflow: For tracking experiments, model versioning, and deployment.

- Apache Spark on Kubernetes: For scalable data processing.

Step 5: Join the Community and Seek Help

Join Kubernetes and data science communities to seek help, share your progress, and learn from others.

By following these 5 steps, you can easily get started with data science Kubernetes projects!

If you want to learn more about Kubernetes and data science, consider enrolling in GUVI’s Certified Data Science Course, which gives you not only theoretical knowledge but also practical experience through real-world projects.


Conclusion

In conclusion, combining data science with Kubernetes gives your projects improved scalability, automation, and resource management.

By utilizing Kubernetes for tasks like model deployment, ETL pipelines, and real-time data processing, you can streamline complex workflows and ensure consistent, efficient operation.

These data science Kubernetes projects provide a practical starting point for exploring the vast potential of Data Science Kubernetes, empowering you to build robust, scalable applications that can handle diverse data challenges.


FAQs

How does Kubernetes improve model deployment in data science?

Kubernetes automates the deployment process, ensuring that models are consistently deployed across different environments and can be easily scaled based on demand.

What is the role of containerization in data science Kubernetes projects?

Containerization packages applications and their dependencies into isolated containers, ensuring consistent performance across different environments.

What are the security considerations when using Kubernetes for data science?

Implement access controls, use secure images, and regularly update Kubernetes and its components to address security concerns.

What are some challenges in using Kubernetes for data science?

Challenges include the initial learning curve, managing complex configurations, and ensuring security and compliance.
