20 Important Data Science Technologies That You Should Know

Mar 15, 2025 (Last Updated)

If you are interested in data science and want to explore it further, the first thing to keep in mind is that it is a vast field that combines statistics, computer science, and domain expertise to extract meaningful insights from data.

To work across this combination of fields, you need to learn the core data science technologies that practitioners rely on.

So that you do not have to hunt around the internet for them, this article compiles 20 widely used data science technologies that professionals are learning today. Let us get started!

Table of contents

- What does a Data Scientist Do?
- 20 Data Science Technologies That You Need to Learn
  - Python
  - R
  - SQL
  - Hadoop
  - Spark
  - Tableau
  - TensorFlow
  - Keras
  - PyTorch
  - Jupyter Notebook
  - D3.js
  - MATLAB
  - Excel
  - RapidMiner
  - SAS
  - BigML
  - H2O.ai
  - KNIME
  - Git
  - Airflow
- Why You Need to Learn These 20 Data Science Technologies?
  - Versatility in Tools
  - Comprehensive Skill Set
  - Handling Big Data
  - Efficient Data Processing
  - Advanced Analytics and Machine Learning
  - Data Visualization
  - Experimentation and Model Building
  - Collaboration and Version Control
- Conclusion
- FAQs
  - What is the difference between Hadoop and Spark?
  - How does Excel still play a role in data science?
  - What unique feature does KNIME offer to data scientists?
  - Why should you consider learning D3.js for data visualization?

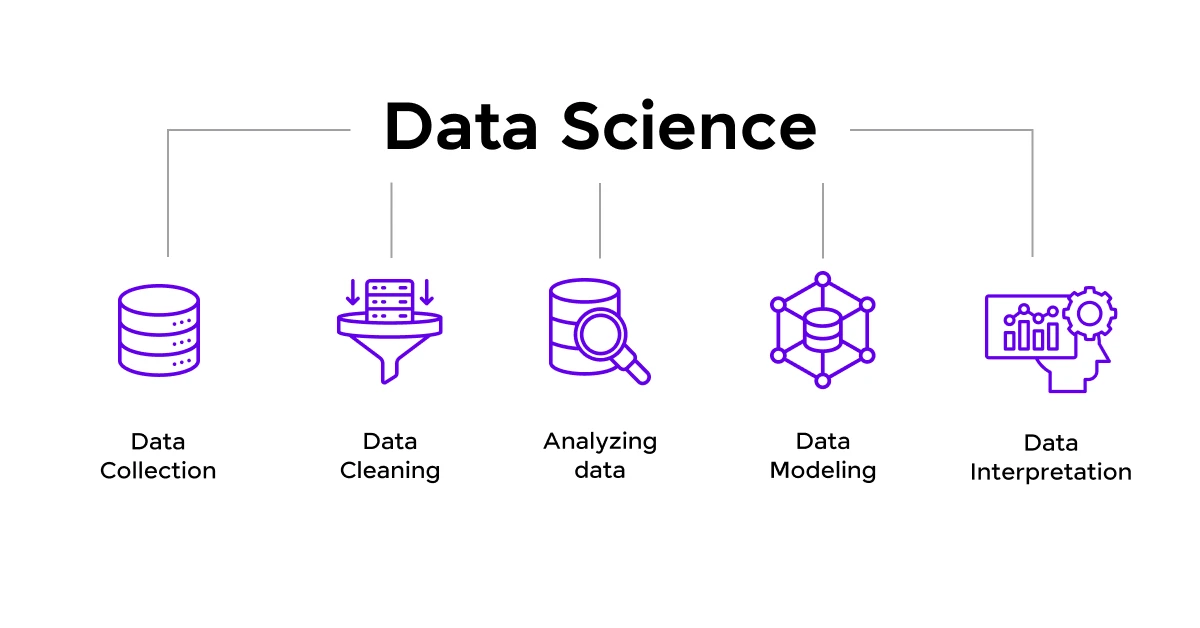

What does a Data Scientist Do?

As mentioned earlier, data science is a vast field that spans many subjects, and the technologies covered in this article touch all of them. To see why those technologies matter, you first need to understand what a data scientist does.

Now, as a data scientist, your job involves working with lots of data to find useful insights.

Your everyday job starts by gathering data from different sources, cleaning it up, and making sure it’s accurate. Then, you analyze the data using statistical methods and machine learning techniques to uncover patterns and trends.

You’ll build models to predict future outcomes and create visualizations to share your findings clearly. Part of your role is to turn complex data into easy-to-understand business strategies.

You’ll also design experiments to test ideas, ensure data is secure, and work closely with other teams to understand their needs and help them make data-driven decisions.

This is the routine work of a data scientist. To do it efficiently, you need to learn the data science technologies covered in the next section.

20 Data Science Technologies That You Need to Learn

The role of a data scientist is crucial and for you to excel in that, you need to learn the data science technologies mentioned below.

But before we go any further, if you want to learn and explore more about Data Science and its functionalities, consider enrolling in a professionally certified online Data Science Course that teaches you everything about data and helps you get started as a data scientist.

Let us now look at the 20 data science technologies, each with a short description of its features. Together they give you working knowledge across several areas and help you stand out, because you can draw on the right tool for each kind of problem.

1. Python

You’ve probably heard of Python. It’s one of the most popular programming languages in the world, and for a good reason.

Python is easy to learn and incredibly versatile. With libraries like NumPy for numerical data, Pandas for data manipulation, and Scikit-Learn for machine learning, Python makes it straightforward to work with data. If you’re new to coding, Python is a great place to start.
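As a rough illustration of why Python reads well for data work, here is a minimal sketch that summarizes a small list of daily sales figures. It uses only the standard library so it runs without installing anything. The data and the `summarize` helper are made up for this example. With NumPy or Pandas installed, the same summary would typically be a single method call on an array or DataFrame.

```python
import statistics


def summarize(values):
    """Return basic descriptive statistics for a list of numbers."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
    }


# Hypothetical daily sales figures, invented for this example.
daily_sales = [120, 135, 150, 110, 160]

summary = summarize(daily_sales)
print(summary)
```

Even in this small form, the code reads close to plain English, which is a large part of Python's appeal for beginners.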

2. R

R might look a bit intimidating at first, but it’s a powerful tool for statistical analysis. If you love data visualization and statistical models, R is your friend.

It has a vast array of packages, such as ggplot2 for creating polished visuals and dplyr for data manipulation. R was designed by statisticians, so if you’re delving into heavy statistical analysis, this is the language you’ll want to learn.

3. SQL

SQL stands for Structured Query Language, and it’s the standard language for querying and managing relational databases. Think of it as a tool that helps you talk to databases to fetch the data you need.

Whether you’re working with a small database or a massive one, SQL helps you retrieve and manipulate data efficiently. You’ll often use SQL to prepare data before analysis.
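To make the idea of "talking to a database" concrete, the sketch below runs a typical preparation query from Python using the built-in `sqlite3` module. The `orders` table, its columns, and the sample rows are all hypothetical. The SQL itself (`GROUP BY`, `SUM`, `ORDER BY`) is standard and should carry over to most relational databases.

```python
import sqlite3

# In-memory database so the example leaves no files behind.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("alice", 30.0), ("bob", 12.5), ("alice", 20.0), ("carol", 45.0)],
)

# Aggregate: total spend per customer, highest first.
rows = conn.execute(
    """
    SELECT customer, SUM(amount) AS total
    FROM orders
    GROUP BY customer
    ORDER BY total DESC
    """
).fetchall()
conn.close()

for customer, total in rows:
    print(customer, total)
```

A summary table like this is often the starting point for further analysis in Python or R.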

4. Hadoop

When you hear “big data,” think Hadoop. This framework helps you store and process huge amounts of data across many computers.

Imagine you have a massive spreadsheet that’s too big for one computer to handle. Hadoop splits it up and processes it on multiple machines, making it manageable.
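The split-and-process idea can be sketched with a word count in the style of MapReduce, the processing model Hadoop is classically associated with. This is a single-process toy in plain Python, not Hadoop's API. The function names and sample lines are invented, and a real cluster would run the map step on different machines in parallel.

```python
from collections import defaultdict


def split_into_chunks(lines, n_chunks):
    """Divide the input into roughly equal chunks, one per simulated worker."""
    return [lines[i::n_chunks] for i in range(n_chunks)]


def map_phase(chunk):
    """Emit a (word, 1) pair for every word in a chunk."""
    return [(word.lower(), 1) for line in chunk for word in line.split()]


def shuffle(mapped_chunks):
    """Group all emitted values by key across chunks."""
    grouped = defaultdict(list)
    for pairs in mapped_chunks:
        for key, value in pairs:
            grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the counts collected for each word."""
    return {key: sum(values) for key, values in grouped.items()}


# Hypothetical input, invented for this example.
lines = [
    "big data needs big tools",
    "hadoop splits big data",
    "tools process data",
]

chunks = split_into_chunks(lines, n_chunks=2)
mapped = [map_phase(chunk) for chunk in chunks]  # parallel on a real cluster
word_counts = reduce_phase(shuffle(mapped))
print(word_counts)
```

Because each chunk is mapped independently, adding more machines lets the same logic scale to inputs that no single computer could hold.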

5. Spark

Apache Spark is like Hadoop’s faster, more flexible cousin. It can handle large-scale data processing, but it does so much faster by keeping data in memory.

This makes it well suited to iterative workloads and near-real-time stream processing. If you’re dealing with large datasets and need speed, Spark is your go-to tool.

6. Tableau

Tableau is all about making data beautiful and understandable. It’s a data visualization tool that helps you create interactive and shareable dashboards.

Whether you’re presenting your findings to a team or just trying to make sense of complex data, Tableau makes it easy to visualize data in a clear and compelling way.

7. TensorFlow

Developed by Google, TensorFlow is a powerful library for deep learning. Think of deep learning as a way for computers to learn patterns from data using layered neural networks, which are loosely inspired by the brain.

TensorFlow helps you build and train complex neural networks, which can be used for tasks like image and speech recognition.

8. Keras

Keras is a high-level, easier-to-use API for building neural networks, most commonly used with TensorFlow. If working with TensorFlow directly feels too complex, Keras simplifies the process.

It’s user-friendly and allows you to prototype quickly, making it a great starting point for deep learning projects.

9. PyTorch

PyTorch, created by Facebook (now Meta), is another deep-learning framework. It’s known for its dynamic computation graph: the graph is built as your code runs, which makes models easier to debug and modify. If you need to change your model architecture on the fly, PyTorch is very flexible and user-friendly.
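To show what a dynamic computation graph means in practice, here is a toy sketch in plain Python. It is not PyTorch's API. The `Value` class and `model` function are hypothetical stand-ins that record operations as they execute and then compute gradients by walking that record backwards. In PyTorch, tensors created with `requires_grad=True` and a call to `.backward()` play the corresponding roles.

```python
class Value:
    """A scalar that records the operations applied to it (toy autodiff)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None  # pushes self.grad to the parents

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        """Order the recorded graph topologically, then apply the chain rule."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


def model(x, w, use_extra_layer):
    """Ordinary Python control flow decides the graph's shape on each call."""
    y = x * w
    if use_extra_layer:
        y = y * w + x
    return y


x, w = Value(2.0), Value(3.0)
out = model(x, w, use_extra_layer=True)
out.backward()
print(out.data, x.grad, w.grad)
```

The `if` inside `model` is the key point: the graph is whatever the code happened to execute on that call, so changing the architecture is just a matter of changing ordinary Python.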

10. Jupyter Notebook

Jupyter Notebooks are fantastic for documenting your data science process. They allow you to combine code, visualizations, and narrative text in one place.

This makes them perfect for sharing your work with others or keeping a clear record of your experiments and results.

11. D3.js

If you love web-based visualizations, D3.js is for you. This JavaScript library lets you create stunning, interactive visuals right in your web browser. It’s a bit more technical than some other visualization tools, but it offers incredible flexibility and control.

12. MATLAB

MATLAB is a programming platform designed for engineers and scientists. It’s particularly strong in matrix operations and technical computing.

If you’re working on complex mathematical calculations, simulations, or algorithm development, MATLAB is incredibly powerful.

13. Excel

Don’t underestimate Excel! It’s a powerful tool for data analysis, especially for small to medium-sized datasets.

Excel is great for quick data manipulation, creating charts, and performing basic statistical analysis. Plus, it’s user-friendly and widely used in many industries.

14. RapidMiner

RapidMiner is a data science platform that integrates data preparation, machine learning, deep learning, text mining, and predictive analytics.

It’s designed to be user-friendly and doesn’t require much coding, making it accessible for those who might be less technically inclined.

15. SAS

SAS (Statistical Analysis System) is a software suite used for advanced analytics. It’s been around for a long time and is trusted by many industries for its reliability in data analysis, business intelligence, and predictive analytics.

16. BigML

BigML provides a user-friendly machine learning platform that automates the process of building and deploying models.

It’s great for those who want to focus on solving business problems rather than getting bogged down in the technical details of machine learning.

17. H2O.ai

H2O, developed by the company H2O.ai, is an open-source platform that makes it easy to build machine learning models. It supports many algorithms and can handle large datasets efficiently. If you’re looking to implement machine learning in your projects, H2O is a solid choice.

18. KNIME

KNIME (Konstanz Information Miner) is an open-source data analytics platform. It’s highly visual, allowing you to create data workflows by dragging and dropping components. KNIME is excellent for those who prefer a more graphical approach to data analysis.

19. Git

Git is a version control system that helps you keep track of changes in your code. It’s essential for collaborating with others and managing different versions of your projects. Git ensures that you can always go back to a previous version if something goes wrong.

20. Airflow

Apache Airflow is a tool for automating and scheduling data workflows. If you have complex data pipelines that need to run regularly, Airflow helps you manage and monitor them efficiently. It’s particularly useful for ensuring that data processing tasks are executed in the correct order.
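The "correct order" guarantee comes from treating a pipeline as a graph of tasks and their dependencies. The sketch below illustrates that idea with Python's standard-library `graphlib` module (Python 3.9+). It is not Airflow's API, and the task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "clean": {"extract"},
    "train_model": {"clean"},
    "build_report": {"clean"},
    "publish": {"train_model", "build_report"},
}


def run_pipeline(dependencies):
    """Visit each task only after everything it depends on has been visited."""
    executed = []
    for task in TopologicalSorter(dependencies).static_order():
        # A real scheduler would launch the task here; this sketch records it.
        executed.append(task)
    return executed


order = run_pipeline(dependencies)
print(order)
```

A scheduler such as Airflow layers scheduling, retries, and monitoring on top of this kind of dependency ordering.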

Each of these data science technologies plays a unique role in the data science landscape, and understanding when and how to use them will make you a more effective and versatile data professional. Happy learning!

If you are just getting started with Python and want to excel in it, consider joining GUVI’s Python course which lets you learn and get certified at your own pace.

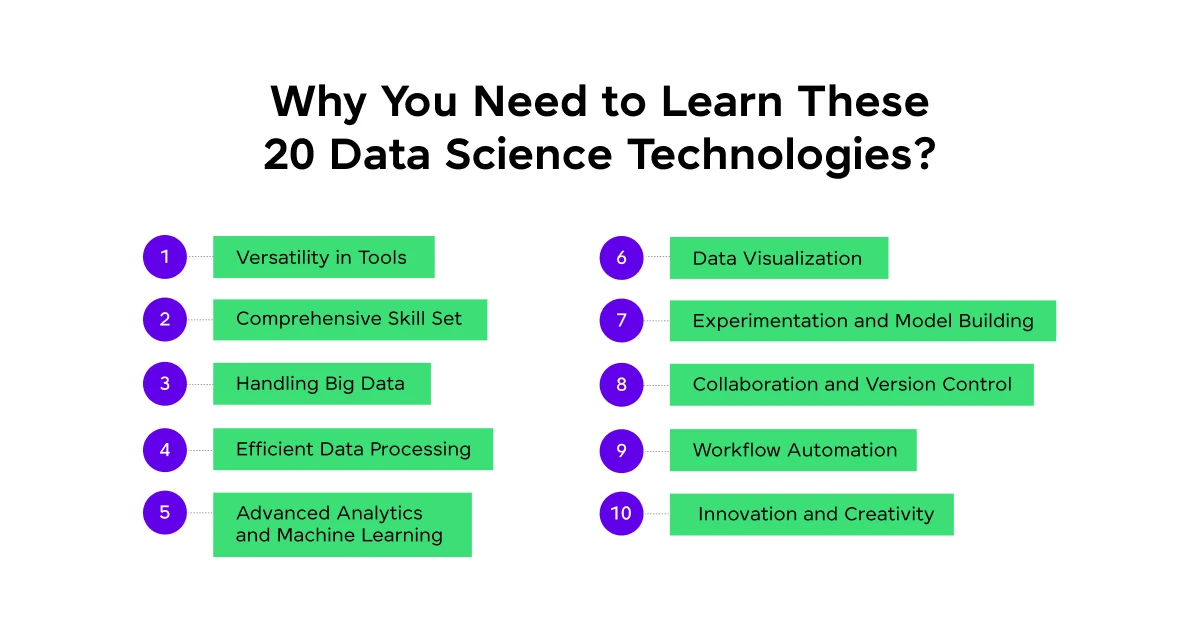

Why You Need to Learn These 20 Data Science Technologies?

After seeing this long list, you may have questions like, “Why should I learn all these? Is it really necessary? What if I learn just one or two and skip the others?” This section answers those questions.

Here are the reasons why you should consider mastering the above-mentioned data science technologies:

1. Versatility in Tools

Imagine you’re a chef. Would you stick to just one knife in your kitchen? Probably not. The same goes for data science. Different projects demand different tools, and knowing a variety of technologies allows you to pick the right one for the task.

For example, Python might be great for general-purpose programming, but when you need advanced statistical analysis, R could be your best bet. Each technology discussed above has its strengths, and being versatile helps you handle a wider range of challenges.

2. Comprehensive Skill Set

Employers value candidates who bring a lot to the table. By building skills across these 20 data science technologies, you make yourself more employable and more valuable to your team.

Companies often look for data scientists who can handle a range of tasks, from data cleaning with SQL to building complex machine learning models with TensorFlow or PyTorch.

3. Handling Big Data

We live in an era of big data. The sheer volume of data being generated every day is staggering, and traditional tools can’t handle it all. Technologies like Hadoop and Spark are designed to process massive datasets efficiently.

4. Efficient Data Processing

Data science technologies like SQL, Pandas, and Jupyter Notebooks make data manipulation and analysis more efficient.

SQL is essential for database management, while Pandas is perfect for data manipulation in Python. Jupyter Notebooks allow you to document your process and share it with others, making your workflow transparent and reproducible.

5. Advanced Analytics and Machine Learning

Machine learning and deep learning are at the heart of data science. Data science technologies like TensorFlow, Keras, and PyTorch enable you to build sophisticated models that can predict outcomes, classify data, and even recognize images and speech.

6. Data Visualization

Data is only as good as the insights you can draw from it. Data science technologies like Tableau, D3.js, and Matplotlib (a Python plotting library) help you create compelling visualizations that make complex data understandable and actionable.

7. Experimentation and Model Building

RapidMiner, SAS, and BigML offer platforms for building and testing models without getting too deep into the coding.

8. Collaboration and Version Control

Git is essential for version control, especially when working in a team. It helps you keep track of changes, collaborate with others, and manage different versions of your code. This is crucial for maintaining the integrity and reproducibility of your work.

We hope these reasons are enough for you to get started with these data science technologies and master them along the way.

If you want to learn more about Data science and its implementation in the real world, then consider enrolling in GUVI’s Certified Data Science Course which not only gives you theoretical knowledge but also practical knowledge with the help of real-world projects.

Conclusion

In conclusion, mastering these 20 data science technologies will help anyone looking to thrive in this dynamic field.

These data science technologies not only help you process and analyze data more efficiently but also enable you to create compelling visualizations, build sophisticated models, and automate workflows.

By equipping yourself with this diverse toolkit, you position yourself at the forefront of innovation, ready to tackle complex data challenges and drive impactful business decisions.

FAQs

What is the difference between Hadoop and Spark?

Hadoop is designed for distributed storage and processing of big data, while Spark offers faster processing by keeping data in memory, which makes it better suited to iterative and near-real-time analysis.

How does Excel still play a role in data science?

Excel is user-friendly and widely used for quick data analysis, manipulation, and visualization, particularly with small to medium-sized datasets.

What unique feature does KNIME offer to data scientists?

KNIME’s visual workflow interface allows for easy drag-and-drop data processing and analysis, making it accessible for those who prefer graphical tools over coding.

Why should you consider learning D3.js for data visualization?

D3.js allows you to create highly customized, interactive web-based visualizations, offering greater control and flexibility than many other tools.
