What is a Decision Tree in Machine Learning – A Step-by-Step Guide

Oct 22, 2024 (Last Updated)

In machine learning, decision trees stand out as one of the most intuitive tools for both classification and regression tasks. They produce clear, interpretable results that mimic human decision-making. In this step-by-step guide, we’ll explore what decision trees are, how they work, and how to build, prune, and evaluate them. Let’s begin!

Table of contents

- What are Decision Trees?

- What is a Decision Tree in Machine Learning – A Step-by-Step Guide

  - How Decision Trees Work

  - Building a Decision Tree

  - Pruning Decision Trees

  - Evaluating Decision Tree Performance

  - Advantages and Disadvantages of Decision Trees

  - Decision Tree Algorithms

- Conclusion

- FAQs

  - What are the key advantages of using decision trees in machine learning?

  - How do decision trees handle missing values in the dataset?

  - What are some common techniques to prevent overfitting in decision trees?

What are Decision Trees?

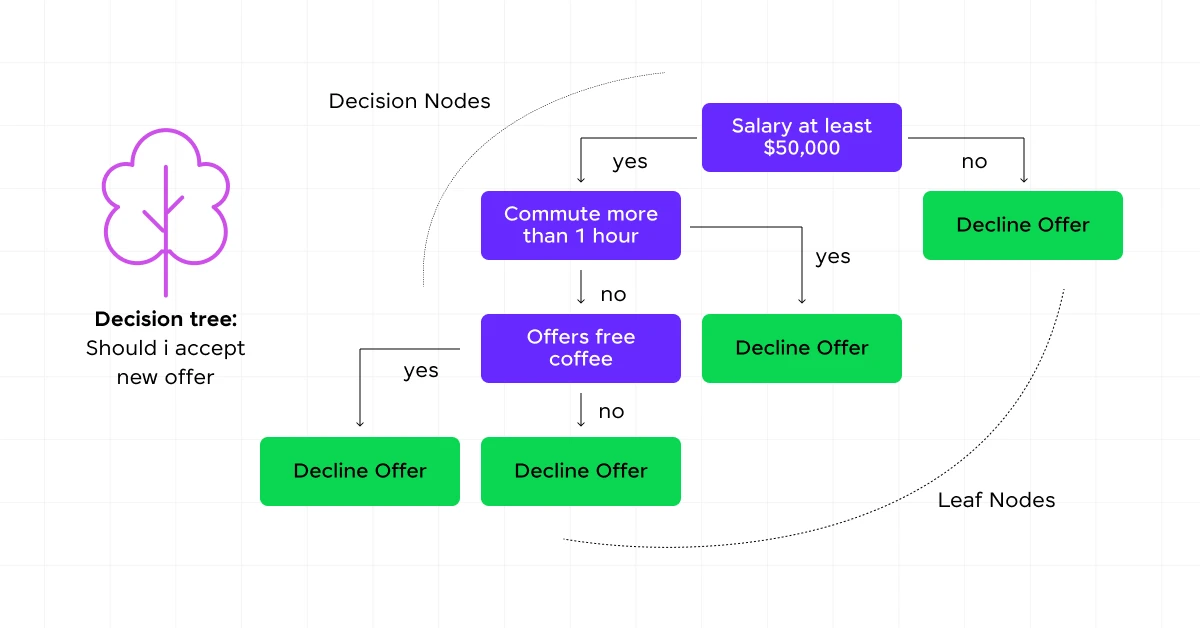

Decision trees are flowchart-like structures that model decisions and their possible consequences. They start with a single node (the root) and branch out into possible outcomes, ultimately leading to a final decision or prediction (the leaves).

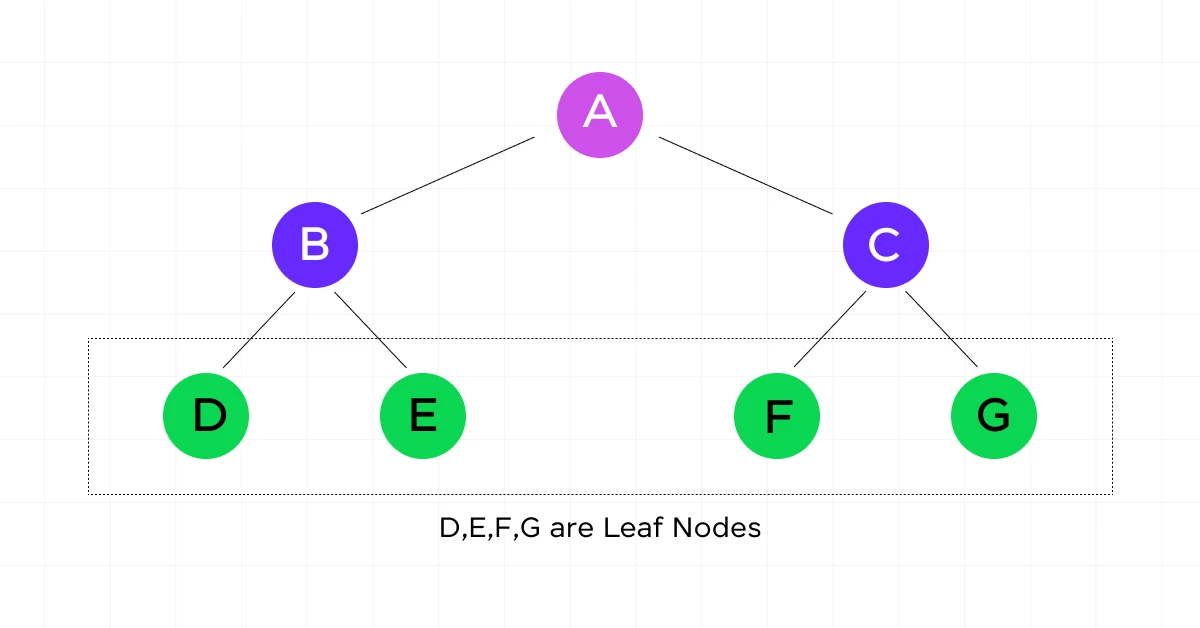

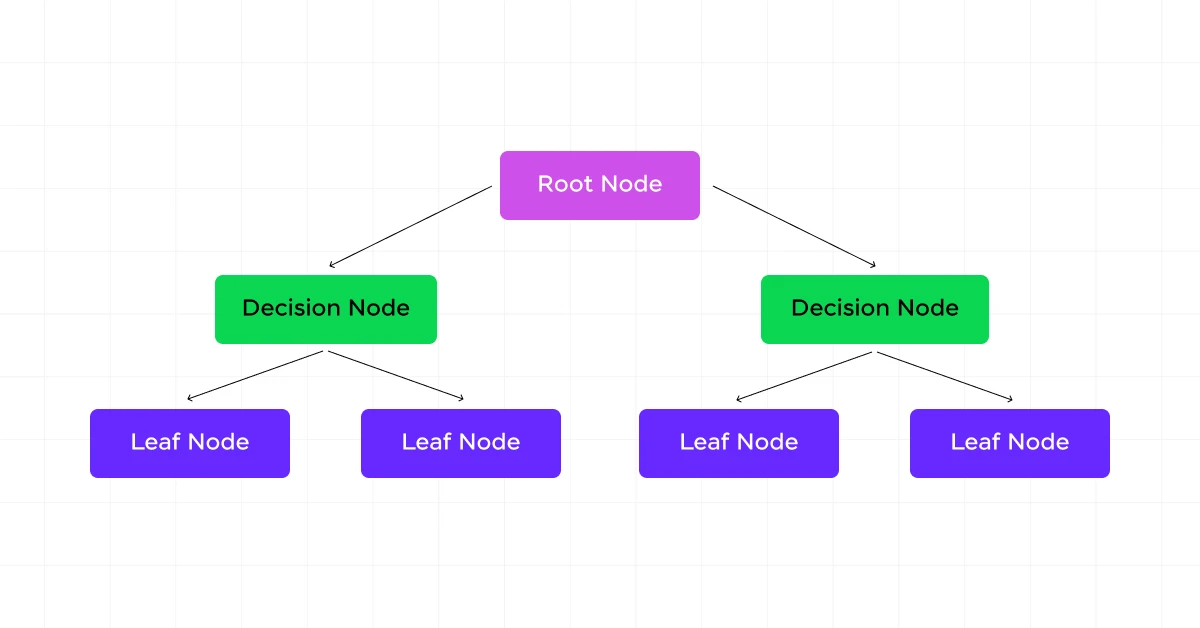

The basic structure of a decision tree includes:

- Root Node: The topmost node, representing the entire dataset.

- Internal Nodes: Decision points where the data is split based on certain conditions.

- Branches: The outcomes of a decision, connecting nodes.

- Leaf Nodes: Terminal nodes that represent the final output or decision.

Now, let’s explore how decision trees are used in machine learning with a step-by-step guide.

What is a Decision Tree in Machine Learning – A Step-by-Step Guide

Let’s explore how decision trees work, and how to build, prune, and evaluate them.

How Decision Trees Work

Decision trees work by recursively splitting the dataset into subsets, choosing at each node the attribute (and split point) that best separates the target values. The goal is to create subsets that are as homogeneous as possible with respect to the target variable. This process continues until a stopping criterion is met, such as reaching a maximum depth, dropping below a minimum number of samples in a node, or finding no split that improves purity.

For classification tasks, the decision tree predicts the class label of a new instance by traversing from the root to a leaf node, following the path determined by the decision rules at each internal node. For regression tasks, the prediction is typically the average target value of the training instances that reach a particular leaf node.
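To make the root-to-leaf traversal concrete, here is a minimal sketch in Python. The tree is written by hand as nested dictionaries, and the feature names, thresholds, and the `predict` helper are all illustrative assumptions, not part of any library.

```python
# Hypothetical hand-built tree: internal nodes hold a feature, a threshold and
# two children; leaf nodes hold only a prediction.
tree = {
    "feature": "income",
    "threshold": 50_000,
    "left": {"prediction": "no"},          # income <= 50,000
    "right": {                             # income > 50,000
        "feature": "age",
        "threshold": 30,
        "left": {"prediction": "yes"},     # age <= 30
        "right": {"prediction": "no"},     # age > 30
    },
}

def predict(node, instance):
    """Walk from the root to a leaf, applying one decision rule per internal node."""
    while "prediction" not in node:
        if instance[node["feature"]] <= node["threshold"]:
            node = node["left"]
        else:
            node = node["right"]
    return node["prediction"]

print(predict(tree, {"income": 72_000, "age": 25}))  # prints "yes"
```

For a regression tree, the same traversal applies; each leaf would simply store the average target value of its training instances instead of a class label.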

The key to understanding how decision trees work lies in grasping the concept of information gain and entropy (for classification) or variance reduction (for regression). These metrics guide the tree-building process by determining the best splits at each node.
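As a rough illustration of these two quantities, the sketch below computes entropy and information gain in plain Python. The function names and the toy labels are assumptions made for this example.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a non-empty list of class labels."""
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())

def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child subsets."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted

# Toy node with 5 "yes" and 5 "no" labels, split into two mostly pure children.
parent = ["yes"] * 5 + ["no"] * 5
children = [["yes"] * 4 + ["no"], ["yes"] + ["no"] * 4]

print(round(entropy(parent), 3))                     # 1.0
print(round(information_gain(parent, children), 3))  # 0.278
```

A perfectly mixed node has an entropy of 1 bit (for two classes), and a pure node has an entropy of 0, so a higher information gain means the split produced purer children.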

Building a Decision Tree

Building a decision tree involves several steps:

a) Feature Selection

Choose the most important feature to split the data at each node. This is typically done using metrics like:

- Information Gain: Measures the reduction in entropy after a dataset is split on an attribute.

- Gini Impurity: Measures the probability of misclassifying a randomly chosen element if it were labeled at random according to the class distribution in the node.

- Variance Reduction: Used for regression trees to minimize the variance in the target variable.
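The last two metrics in this list can be sketched in a few lines of Python. The helper names and sample values below are illustrative assumptions.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    total = len(labels)
    return 1.0 - sum((c / total) ** 2 for c in Counter(labels).values())

def variance(values):
    """Population variance of the target values in a node."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

def variance_reduction(parent, children):
    """Parent variance minus the size-weighted variance of the child subsets."""
    total = len(parent)
    weighted = sum(len(child) / total * variance(child) for child in children)
    return variance(parent) - weighted

print(gini(["yes", "yes", "no", "no"]))                       # 0.5
print(variance_reduction([1, 2, 9, 10], [[1, 2], [9, 10]]))   # 16.0
```

In both cases a lower impurity (or variance) in the children means a better split; a pure classification node has a Gini impurity of 0.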

b) Splitting

Once the best feature is selected, the data is split into subsets. For categorical features, this might involve creating a branch for each category. For continuous features, a threshold is chosen to create binary splits.
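One common way to choose a threshold for a continuous feature is to try the midpoints between adjacent observed values and keep the one with the lowest weighted impurity. The sketch below does this with Gini impurity; the function names and the income data are made up for illustration.

```python
from collections import Counter

def gini(labels):
    total = len(labels)
    return 1.0 - sum((c / total) ** 2 for c in Counter(labels).values())

def best_threshold(values, labels):
    """Return (threshold, weighted_gini) of the best binary split on one numeric feature."""
    unique = sorted(set(values))
    best = (None, float("inf"))
    for low, high in zip(unique, unique[1:]):
        threshold = (low + high) / 2  # midpoint between adjacent observed values
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best

# Hypothetical data: annual income and whether the customer bought the product.
incomes = [20_000, 35_000, 48_000, 52_000, 70_000, 90_000]
bought = ["no", "no", "no", "yes", "yes", "yes"]

print(best_threshold(incomes, bought))  # (50000.0, 0.0)
```

Here the midpoint between 48,000 and 52,000 separates the two classes perfectly, so the weighted impurity drops to 0.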

c) Recursive Partitioning

Steps a and b are repeated recursively for each subset until a stopping criterion is met.

d) Assigning Leaf Nodes

Once splitting stops, the majority class (for classification) or average value (for regression) of the remaining instances is assigned to the leaf node.

The process of building a decision tree aims to maximize the homogeneity of the target variable within each subset. This is achieved by choosing splits that result in the highest information gain or the largest reduction in impurity.

Let’s consider a simple example: Suppose we’re building a decision tree to predict whether a customer will purchase a product based on their age and income. We start with the root node containing all customers. We then evaluate which feature (age or income) provides the best split. Let’s say income gives the highest information gain.

We create two branches: one for “income <= Rs. 50,000” and another for “income > Rs. 50,000”. We then repeat this process for each of these new nodes, possibly splitting on age next. This continues until we reach our stopping criteria.
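The whole procedure can be sketched as a small recursive function. This is a simplified illustration, not a production implementation: the customer data is invented so that income wins at the root, the splits are always binary, and `best_split` and `build_tree` are hypothetical helper names.

```python
from collections import Counter
from math import log2

def entropy(labels):
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())

def best_split(rows, labels, features):
    """Return (feature, threshold, gain) for the split with the highest information gain."""
    base = entropy(labels)
    best = (None, None, 0.0)
    for feature in features:
        values = sorted({row[feature] for row in rows})
        for low, high in zip(values, values[1:]):
            threshold = (low + high) / 2
            left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
            weighted = (len(left) * entropy(left) + len(right) * entropy(right)) / len(labels)
            if base - weighted > best[2]:
                best = (feature, threshold, base - weighted)
    return best

def build_tree(rows, labels, features, depth=0, max_depth=3):
    feature, threshold, _ = best_split(rows, labels, features)
    # Stop when no split improves purity or the depth limit is reached,
    # and assign the majority class to the leaf.
    if feature is None or depth >= max_depth:
        return {"prediction": Counter(labels).most_common(1)[0][0]}

    def subset(go_left):
        keep = [(r, y) for r, y in zip(rows, labels) if (r[feature] <= threshold) == go_left]
        return [r for r, _ in keep], [y for _, y in keep]

    left_rows, left_labels = subset(True)
    right_rows, right_labels = subset(False)
    return {
        "feature": feature,
        "threshold": threshold,
        "left": build_tree(left_rows, left_labels, features, depth + 1, max_depth),
        "right": build_tree(right_rows, right_labels, features, depth + 1, max_depth),
    }

# Invented customers: (age, income in Rs.) and whether they purchased.
rows = [
    {"age": 22, "income": 25_000}, {"age": 27, "income": 40_000},
    {"age": 31, "income": 45_000}, {"age": 24, "income": 60_000},
    {"age": 29, "income": 75_000}, {"age": 33, "income": 55_000},
    {"age": 50, "income": 80_000}, {"age": 58, "income": 95_000},
]
labels = ["no", "no", "no", "yes", "yes", "yes", "no", "no"]

tree = build_tree(rows, labels, ["age", "income"])
print(tree["feature"], tree["threshold"])                    # income 50000.0
print(tree["right"]["feature"], tree["right"]["threshold"])  # age 41.5
```

With this data the root splits on income at Rs. 50,000, the low-income branch becomes a "no" leaf, and the high-income branch splits on age next.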

Pruning Decision Trees

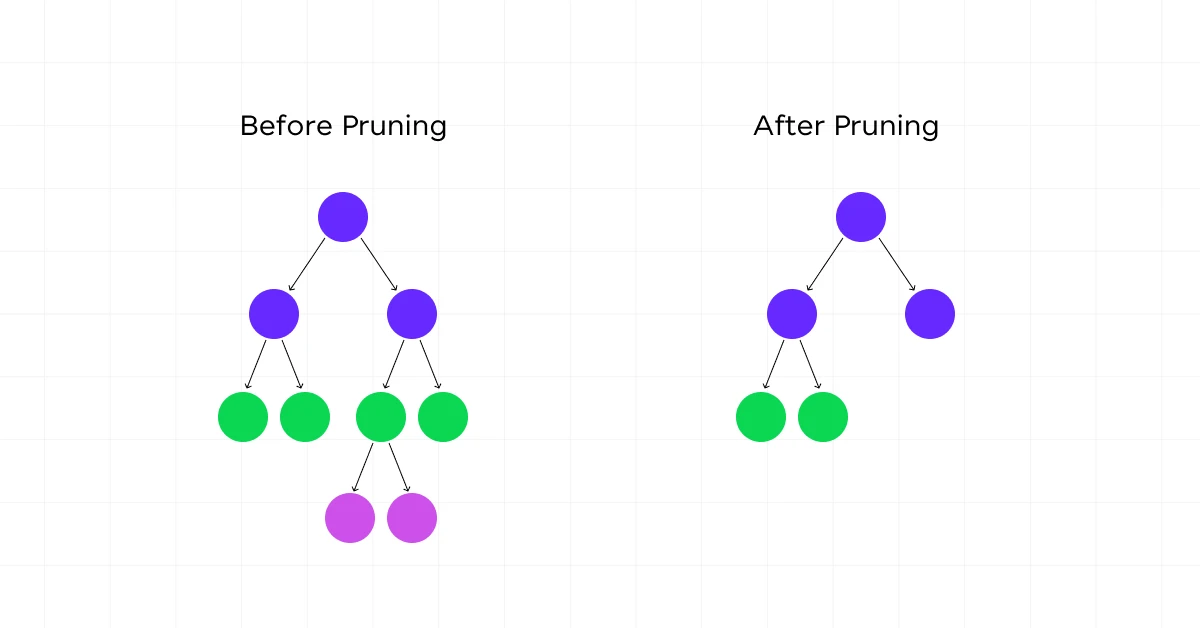

While decision trees are powerful, they can easily overfit the training data, leading to poor generalization on unseen data. Pruning reduces the size of a decision tree by removing sections that contribute little predictive power.

There are two main approaches to pruning:

a) Pre-pruning (Early Stopping)

- Set constraints before tree construction begins.

- Examples include setting a maximum tree depth, a minimum number of samples required to split an internal node, or a minimum number of samples required to be at a leaf node.

- While computationally efficient, it can be challenging to choose the right parameters without prior knowledge.

b) Post-pruning

- Build the full tree first, then remove branches that don’t provide significant predictive power.

- Common methods include:

  - Reduced Error Pruning: Starting from the leaves, replace a subtree with a leaf labeled with its most common class. If accuracy on a held-out validation set doesn’t decrease, keep the change.

  - Cost Complexity Pruning: Balance tree size against misclassification rate using a complexity parameter that penalizes each additional leaf.

Pruning helps to combat overfitting by reducing the complexity of the final classifier, which often improves predictive accuracy on unseen data. It’s an important step in the decision tree-building process, especially when dealing with noisy or limited data.
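Here is a minimal sketch of reduced error pruning, assuming a tree stored as nested dictionaries in which every internal node also records the majority class of its training instances under a `"majority"` key. The tree, the validation data, and the helper names are all invented for this example.

```python
def predict(node, row):
    while "prediction" not in node:
        node = node["left"] if row[node["feature"]] <= node["threshold"] else node["right"]
    return node["prediction"]

def accuracy(tree, rows, labels):
    return sum(predict(tree, r) == y for r, y in zip(rows, labels)) / len(labels)

def prune(node, root, rows, labels):
    """Bottom-up reduced error pruning against a validation set (modifies the tree in place)."""
    if "prediction" in node:
        return
    prune(node["left"], root, rows, labels)
    prune(node["right"], root, rows, labels)
    before = accuracy(root, rows, labels)
    saved = dict(node)
    node.clear()
    node["prediction"] = saved["majority"]    # tentatively turn the subtree into a leaf
    if accuracy(root, rows, labels) < before:
        node.clear()
        node.update(saved)                    # pruning hurt validation accuracy: restore

# Hypothetical overgrown tree; the deepest split on income is assumed to be noise.
tree = {
    "feature": "income", "threshold": 50_000, "majority": "no",
    "left": {"prediction": "no"},
    "right": {
        "feature": "age", "threshold": 41.5, "majority": "yes",
        "left": {"prediction": "yes"},
        "right": {
            "feature": "income", "threshold": 90_000, "majority": "no",
            "left": {"prediction": "no"},
            "right": {"prediction": "yes"},
        },
    },
}

val_rows = [
    {"income": 30_000, "age": 40}, {"income": 60_000, "age": 25},
    {"income": 70_000, "age": 30}, {"income": 85_000, "age": 55},
    {"income": 95_000, "age": 60},
]
val_labels = ["no", "yes", "yes", "no", "no"]

prune(tree, tree, val_rows, val_labels)
print(tree["right"]["right"])                 # {'prediction': 'no'}
print(accuracy(tree, val_rows, val_labels))   # 1.0
```

Only the deepest split is removed here: collapsing it raises validation accuracy from 0.8 to 1.0, while collapsing either of the two higher nodes would lower it, so they are restored.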

Evaluating Decision Tree Performance

Evaluating the performance of a decision tree model is important to understand its effectiveness and to compare it with other models. Several metrics and techniques are commonly used:

a) Accuracy: The proportion of correct predictions (both true positives and true negatives) among the total number of cases examined. While intuitive, accuracy can be misleading for imbalanced datasets.

b) Precision and Recall: Precision is the ratio of true positives to all positive predictions, while recall (also known as sensitivity) is the ratio of true positives to all actual positive cases. These metrics are particularly useful when class imbalance is present.

c) F1 Score: The harmonic mean of precision and recall, providing a single score that balances both metrics.

d) Confusion Matrix: A table that describes the performance of a classification model by showing the counts of true positives, true negatives, false positives, and false negatives.

e) ROC Curve and AUC: The Receiver Operating Characteristic (ROC) curve plots the true positive rate against the false positive rate at various threshold settings. The Area Under the Curve (AUC) provides an aggregate measure of performance across all possible classification thresholds.

f) Mean Squared Error (MSE) or Mean Absolute Error (MAE): For regression trees, these metrics measure the average squared or absolute differences between predicted and actual values.

g) Cross-validation: A technique to assess how the results of a statistical analysis will generalize to an independent dataset. It’s particularly useful for evaluating how well a decision tree model will perform on unseen data.
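The classification and regression metrics above are straightforward to compute by hand. The sketch below uses made-up labels and predictions, and the function names are illustrative rather than taken from any library.

```python
def confusion_counts(actual, predicted, positive="yes"):
    """Return (tp, tn, fp, fn) for a binary classification problem."""
    pairs = list(zip(actual, predicted))
    tp = sum(a == positive and p == positive for a, p in pairs)
    tn = sum(a != positive and p != positive for a, p in pairs)
    fp = sum(a != positive and p == positive for a, p in pairs)
    fn = sum(a == positive and p != positive for a, p in pairs)
    return tp, tn, fp, fn

def classification_report(actual, predicted, positive="yes"):
    tp, tn, fp, fn = confusion_counts(actual, predicted, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(actual),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

def mse(actual, predicted):
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def mae(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

# Made-up classification results: 2 true positives, 4 true negatives,
# 1 false positive and 1 false negative.
actual = ["yes", "yes", "yes", "no", "no", "no", "no", "no"]
predicted = ["yes", "yes", "no", "yes", "no", "no", "no", "no"]
report = classification_report(actual, predicted)
print(report["accuracy"])  # 0.75

# Made-up regression results.
print(mae([3.0, 5.0, 2.0], [2.5, 5.0, 4.0]))
```

In this example, accuracy is 0.75 while precision, recall, and F1 are each 2/3, which shows how accuracy alone can look better than the model's performance on the positive class.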

When evaluating decision trees, it’s important to consider not just the overall performance metrics, but also the tree’s interpretability and complexity. A slightly less accurate but more interpretable tree might be preferred in some applications where understanding the decision-making process is important.

Advantages and Disadvantages of Decision Trees

Like any machine learning algorithm, decision trees have their strengths and weaknesses. Understanding these can help in deciding when to use decision trees and how to mitigate their limitations.

Advantages

a) Interpretability: Decision trees are easy to understand and interpret, even for non-experts. They can be visualized, and the decision-making process can be easily explained.

b) Handling of Both Numerical and Categorical Data: Unlike some algorithms that require numerical input, decision tree algorithms can in principle split on both numerical and categorical variables. Note that some implementations (scikit-learn, for example) still require categorical features to be encoded as numbers.

c) Non-Parametric: Decision trees don’t assume a particular distribution of the data, making them suitable for a wide range of problems.

d) Feature Importance: They provide a clear indication of which features are most important for classification or prediction.

e) Handling Missing Values: Some decision tree algorithms, such as C4.5 and CART, can handle missing values in the data without requiring imputation.

f) Minimal Data Preparation: They require little data preparation compared to other techniques. There’s no need for normalization, scaling, or centering of data.

Disadvantages

a) Overfitting: Decision trees can create over-complex trees that don’t generalize well from the training data. This is particularly true for deep trees.

b) Instability: Small variations in the data might result in a completely different tree being generated. This instability can be mitigated using ensemble methods like random forests.

c) Biased with Imbalanced Datasets: They can create biased trees if some classes dominate. Balancing the dataset before training, or weighting the classes, helps mitigate this issue.

d) Suboptimal: The greedy approach used in most decision tree algorithms chooses the best split locally at each node, so it is not guaranteed to return the globally optimal tree.

e) Difficulty with XOR, Parity, or Multiplexer Problems: These concepts are hard for decision trees to learn because no single-feature split reduces impurity much on its own, so greedy splitting has little to guide it.

f) Limitations in Regression: A regression tree predicts a constant value per leaf, so its output is a piecewise-constant (step-like) function rather than a smooth one, and it cannot extrapolate beyond the range of target values seen in the training data.
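The XOR difficulty from point e) is easy to demonstrate: on the four XOR examples, splitting on either input alone gives zero information gain, so a greedy criterion has no reason to prefer one split over another. The helper names below are assumptions for this sketch.

```python
from collections import Counter
from math import log2

def entropy(labels):
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())

def information_gain(rows, labels, feature):
    """Gain from splitting on every distinct value of one feature."""
    total = len(labels)
    remainder = 0.0
    for value in {row[feature] for row in rows}:
        subset = [y for row, y in zip(rows, labels) if row[feature] == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(labels) - remainder

# The full XOR truth table: the label is 1 only when exactly one input is 1.
rows = [{"a": 0, "b": 0}, {"a": 0, "b": 1}, {"a": 1, "b": 0}, {"a": 1, "b": 1}]
labels = [row["a"] ^ row["b"] for row in rows]

print(information_gain(rows, labels, "a"))  # 0.0
print(information_gain(rows, labels, "b"))  # 0.0
```

A tree of depth two can still represent XOR exactly; the problem is that the first split looks useless to the splitting criterion, which becomes a real obstacle when many irrelevant features are also present.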

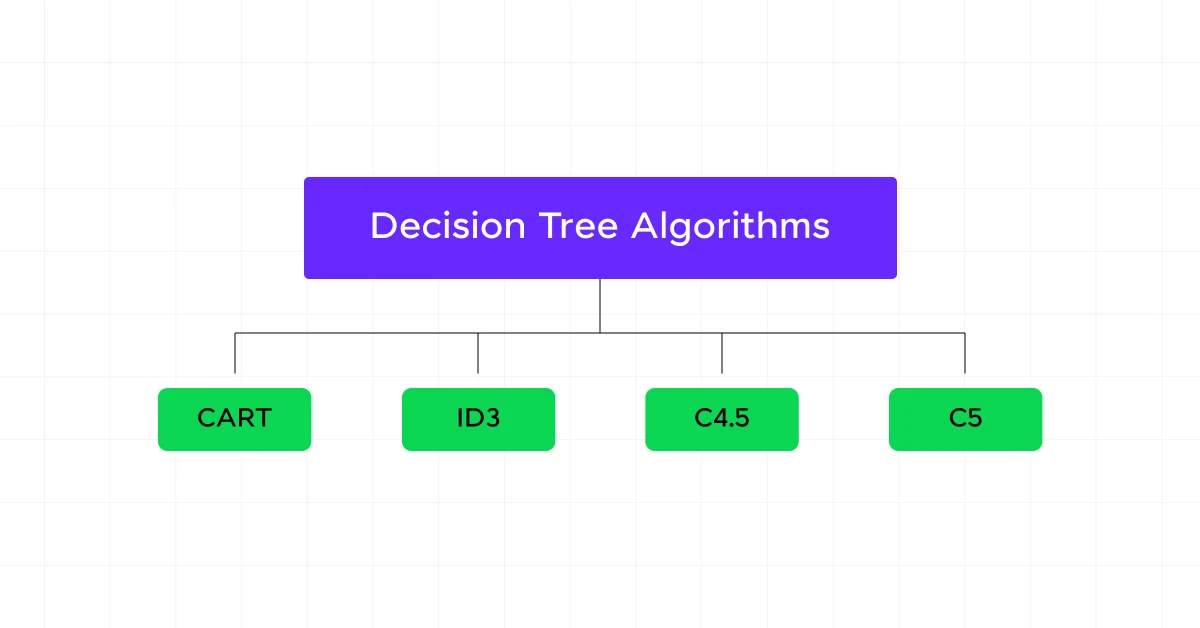

Decision Tree Algorithms

Several algorithms have been developed for constructing decision trees. The most popular ones include:

a) ID3 (Iterative Dichotomiser 3)

- Developed by Ross Quinlan in 1986.

- Uses Information Gain as the splitting criterion.

- Can only handle categorical attributes.

- Doesn’t support pruning.

b) C4.5 (Successor of ID3)

- Also developed by Ross Quinlan.

- Uses Gain Ratio as the splitting criterion to address a bias in ID3.

- Can handle both continuous and discrete attributes.

- Supports pruning.

- Can handle incomplete data and different attribute costs.
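The bias that Gain Ratio addresses is that plain information gain favors attributes with many distinct values, such as an ID column. The sketch below is a simplified illustration of the idea (it omits other heuristics used by C4.5), with invented data and hypothetical function names.

```python
from collections import Counter
from math import log2

def entropy(items):
    total = len(items)
    return -sum((c / total) * log2(c / total) for c in Counter(items).values())

def information_gain(rows, labels, feature):
    total = len(labels)
    remainder = 0.0
    for value in {row[feature] for row in rows}:
        subset = [y for row, y in zip(rows, labels) if row[feature] == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(labels) - remainder

def gain_ratio(rows, labels, feature):
    """Information gain divided by the entropy of the feature's own value distribution."""
    split_info = entropy([row[feature] for row in rows])
    return information_gain(rows, labels, feature) / split_info if split_info else 0.0

# Invented data: customer_id is unique per row, student is a genuine predictor.
rows = [
    {"customer_id": 1, "student": "yes"},
    {"customer_id": 2, "student": "yes"},
    {"customer_id": 3, "student": "no"},
    {"customer_id": 4, "student": "no"},
]
labels = ["buys", "buys", "skips", "skips"]

for feature in ("customer_id", "student"):
    print(feature, information_gain(rows, labels, feature), gain_ratio(rows, labels, feature))
# customer_id 1.0 0.5
# student 1.0 1.0
```

Both attributes achieve the same information gain, but the gain ratio penalizes `customer_id` for fragmenting the data into four branches, so `student` is preferred.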

c) CART (Classification and Regression Trees)

- Developed by Breiman et al. in 1984.

- Uses Gini impurity for classification and variance reduction for regression as splitting criteria.

- Produces only binary trees.

- Supports both classification and regression tasks.

- Includes built-in pruning methods.

d) CHAID (Chi-square Automatic Interaction Detector)

- Uses chi-square tests to determine the best split at each node.

- Can generate non-binary trees (more than two branches per node).

- Primarily used for classification.
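The statistic behind a chi-square split test can be computed from a contingency table of branches against classes. This sketch covers only the Pearson statistic; a full CHAID implementation also derives p-values and merges similar categories, which is not shown. The function name and counts are assumptions, and every row and column total is assumed to be non-zero.

```python
def chi_square(table):
    """Pearson chi-square statistic (rows: branches of a split, columns: classes)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if branch and class were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    return statistic

# Hypothetical split A: class counts differ strongly between the two branches.
print(chi_square([[30, 10], [10, 30]]))  # 20.0
# Hypothetical split B: both branches have the same class mix.
print(chi_square([[20, 20], [20, 20]]))  # 0.0
```

A larger statistic indicates a stronger association between the split and the target, so split A would be preferred over split B.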

e) Conditional Inference Trees

- Uses statistical tests as splitting criteria.

- Aims to address selection bias towards variables with many categories in other algorithms.

- Generally results in smaller trees and doesn’t require pruning.

These algorithms differ in their splitting criteria, the types of problems they can handle (classification, regression, or both), how they prune trees, and how they handle missing values and different types of attributes. The choice of algorithm often depends on the specific characteristics of the dataset and the problem at hand.

Conclusion

Decision trees are a fundamental algorithm in machine learning, offering a balance of performance and interpretability. They are an excellent starting point for many machine learning tasks and provide insight into feature importance. While they have limitations, techniques like pruning and ensemble methods can address many of these issues.

As you continue your journey in machine learning, understanding decision trees will provide a solid foundation for exploring more complex algorithms and techniques.

FAQs

What are the key advantages of using decision trees in machine learning?

Decision trees offer several advantages, including:

1. Interpretability: The tree structure is easy to visualize and understand, making it simple to interpret and explain the decision-making process.

2. Handling of Non-Linear Data: Decision trees can capture non-linear relationships between features without requiring extensive data preprocessing.

3. Versatility: They can be used for both classification and regression tasks, making them a flexible tool in a data scientist’s arsenal.

How do decision trees handle missing values in the dataset?

Some decision tree algorithms have built-in mechanisms to handle missing values, while others need the gaps filled in beforehand. If a feature value is missing for a certain sample, common strategies are:

1. Surrogate Splits: Use surrogate splits, which are alternative splits based on other features that closely mimic the primary split, to make a decision.

2. Imputation: Estimate the missing values based on the most common value or mean of the feature, depending on the type of data.
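The imputation strategy from point 2 can be sketched in a few lines. The `impute` helper and the sample records are hypothetical, and missing values are assumed to be stored as `None`.

```python
from collections import Counter
from statistics import mean

def impute(rows, feature):
    """Fill None values with the mean (numeric feature) or the most common value (otherwise)."""
    present = [row[feature] for row in rows if row[feature] is not None]
    if all(isinstance(value, (int, float)) for value in present):
        fill = mean(present)
    else:
        fill = Counter(present).most_common(1)[0][0]
    # Build new dictionaries so the original rows are left untouched.
    return [{**row, feature: fill if row[feature] is None else row[feature]} for row in rows]

rows = [
    {"age": 20, "city": "Pune"},
    {"age": None, "city": "Chennai"},
    {"age": 40, "city": None},
    {"age": 30, "city": "Chennai"},
]

filled = impute(impute(rows, "age"), "city")
print(filled[1]["age"], filled[2]["city"])  # 30 Chennai
```

Simple imputation like this is easy to apply, but it can blur real patterns in the data, which is one reason surrogate splits are attractive when the algorithm supports them.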

What are some common techniques to prevent overfitting in decision trees?

Overfitting is a common issue in decision trees, where the model becomes too complex and captures noise rather than the underlying pattern. To prevent overfitting, you can use techniques such as:

1. Pruning: Remove parts of the tree that do not provide significant power to classify instances, either through pre-pruning (setting constraints like maximum depth) or post-pruning (removing nodes after the tree is built).

2. Setting Minimum Split Criteria: Require a minimum number of samples in a node for a split to be considered.

3. Cross-Validation: Use cross-validation to evaluate the model’s performance on different subsets of the data, ensuring it generalizes well to unseen data.
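As a rough sketch of how k-fold cross-validation divides the data, the generator below yields train and test indices for each fold. The function name is an assumption, and in practice the data is usually shuffled before it is split.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous, near-equal folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size

for train, test in k_fold_indices(10, 3):
    print(test)
# [0, 1, 2, 3]
# [4, 5, 6]
# [7, 8, 9]
```

A tree is trained on each `train` set and scored on the matching `test` set; averaging the k scores gives a more reliable estimate of generalization than a single train/test split.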
