Top 11 Python Libraries For Machine Learning in 2025

Last updated: Apr 11, 2025

Before mastering machine learning algorithms or writing data science programs, we first need to understand Python's libraries, because we use them to build those programs.

The external, open-source Python libraries below are widely used to create data science and machine learning programs. In this article, we walk you through them.

A program can use any one of these libraries or a combination of several. Each library has its own features for solving machine learning and data science problems.

Table of contents

- Best Python Libraries For Machine Learning

- NumPy- one of the best Python Libraries

- Pandas- one of the best Python libraries

- SciPy

- SymPy

- Matplotlib

- Seaborn

- Bokeh

- Plotly

- Scikit-learn

- Beautiful Soup- another python library

- Scrapy

- Conclusion

Best Python Libraries For Machine Learning

Let's look at these machine learning Python libraries in detail. To learn more about any library, refer to its official documentation.

Before we explore these libraries in the next section, make sure you are comfortable with fundamentals such as Python, SQL, deep learning, data cleaning, and cloud services. You may consider joining GUVI's Machine Learning Career Program, which covers tools like the PySpark API and Natural Language Processing, and helps you gain hands-on experience by building real-time projects.

Alternatively, if you would like to explore Python through a self-paced course, try GUVI's Python Self-Paced course.

1. NumPy- one of the best Python Libraries

NumPy, short for "Numerical Python", was created in 2005 by Travis Oliphant. It is an external Python package for working with arrays, and it performs efficient operations on regular data stored in arrays; in other words, NumPy is the standard tool for manipulating numerical data in Python. NumPy is a large part of why Python can serve as an alternative to MATLAB, Yorick, and IDL.

NumPy provides numerical operations for processing arrays, such as logarithms and the least common multiple (LCM). It also provides Fourier transforms, routines for shape manipulation, logical operations on arrays, linear algebra, and random number generation. Most importantly, NumPy lets us create multidimensional array objects such as vectors and matrices.
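A minimal sketch of the routines listed above follows. The array values are arbitrary examples chosen only so the results are easy to check by hand.

```python
import numpy as np

# Element-wise numerical routines
values = np.array([1.0, 10.0, 100.0])
logs = np.log10(values)               # base-10 logarithm of each element
lcm = np.lcm(np.array([4, 6]), 10)    # least common multiple, element-wise

# Fourier transform of a short signal
signal = np.array([1.0, 0.0, -1.0, 0.0])
spectrum = np.fft.fft(signal)

# Shape manipulation: 6 values reshaped into a 2 x 3 matrix
matrix = np.arange(6).reshape(2, 3)

# Logical operations produce boolean arrays
mask = np.logical_or(matrix > 3, matrix == 0)

# Linear algebra: solve the system A @ x = b
A = np.array([[3.0, 1.0], [1.0, 2.0]])
b = np.array([9.0, 8.0])
x = np.linalg.solve(A, b)

# Random number generation with a seeded generator for reproducibility
rng = np.random.default_rng(seed=42)
samples = rng.normal(loc=0.0, scale=1.0, size=5)

print(logs)   # [0. 1. 2.]
print(lcm)    # [20 30]
print(x)      # [2. 3.]
```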

Why do we need to learn this Python library- NumPy?

Python already has built-in sequence types such as tuples and lists, and we can do a lot of array-like work with them.

So why learn a new array type?

- In data science and machine learning, we mostly work with multidimensional arrays. Lists and tuples can be nested to imitate them, but nested lists have no notion of shape and do not support element-wise arithmetic. NumPy's array is a true multidimensional array, so we use NumPy to solve array-based problems in data science and machine learning.

- Its vectorized operations are much faster than equivalent pure-Python loops, and they make mathematical calculations easy to write.

- The performance-critical parts of NumPy are written in C and C++.

- It makes array operations such as reshaping, sorting, and indexing easy.

- For numerical work, NumPy arrays can be up to 50 times faster than Python lists, and NumPy is optimized for modern CPU architectures.
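The difference between a nested list and a NumPy array is easiest to see side by side. This is a small illustrative sketch; it does not measure speed, since timings vary by machine.

```python
import numpy as np

# A nested list can hold a 2-D table, but "* 2" repeats the list
# instead of doubling each number.
nested = [[1, 2, 3], [4, 5, 6]]
repeated = nested * 2                 # 4 rows now, values unchanged

# The same data as a NumPy array: a real 2-D array with a shape
arr = np.array(nested)
print(arr.shape)                      # (2, 3)
doubled = arr * 2                     # element-wise arithmetic

# Indexing, sorting, reshaping, and aggregation are built in
print(arr[1, 2])                      # row 1, column 2 -> 6
flat_sorted = np.sort(arr, axis=None) # flatten, then sort
reshaped = arr.reshape(3, 2)          # same 6 elements, new shape
col_sums = arr.sum(axis=0)            # sum down each column
print(col_sums)                       # [5 7 9]
```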

Installing and Importing NumPy

- We can install NumPy in Python by writing the command “pip install numpy” in the system command prompt.

- Pandas depends on NumPy, so when we install pandas, NumPy is installed automatically.

- We can import NumPy into our program with the following syntax: import numpy as np

2. Pandas- one of the best Python libraries

In data science and machine learning, the pandas library is very important; it is one of the most widely used libraries. This is because pandas handles the first steps of data analysis: loading, organizing, cleaning messy data sets, exploring, manipulating, modeling, and analyzing data.

Using pandas, we can analyze large and complex data and then draw conclusions based on statistical theory. Pandas cleans disorganized data sets and makes them readable and relevant. The name "Pandas" comes from both "panel data" and "Python data analysis". It was created by Wes McKinney in 2008 and is written in Cython, C, and Python.

Above all, Pandas is a fast, flexible, and easy-to-use tool for data analysis and manipulation. It works mainly on tabular data and offers many convenient functions for analysis. Python with pandas is used in a variety of academic and commercial domains, including finance, economics, statistics, advertising, and web analytics.

Key features of Pandas for data processing and analysis

- Fast and efficient creation of DataFrames with default or custom indexing.

- Loading data from many formats, such as CSV, Excel, JSON, and SQL databases.

- Data alignment and integrated handling of missing data.

- Reshaping and pivoting of data sets.

- Label-based slicing, indexing, and subsetting of large data sets.

- Create, read, update, and delete (CRUD) operations on a DataFrame.

- Grouping data for aggregation and transformation.

- Merging and joining of data.

- Time-series functionality.
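Several of the features listed above can be exercised on one small table. This is a sketch using made-up sales figures; the column names and city values are hypothetical, and the CSV is read from an in-memory string so the example needs no files.

```python
import io

import pandas as pd

# Load data: read_csv also accepts file paths and URLs
csv_text = """date,city,sales
2024-01-01,Chennai,100
2024-01-01,Mumbai,
2024-01-02,Chennai,120
2024-01-02,Mumbai,90
"""
df = pd.read_csv(io.StringIO(csv_text), parse_dates=["date"])

# Missing data: the empty Mumbai value is read as NaN; fill it with 0
df["sales"] = df["sales"].fillna(0)

# Label-based selection of rows and columns
chennai = df.loc[df["city"] == "Chennai", ["date", "sales"]]

# Group by for aggregation
totals = df.groupby("city")["sales"].sum()

# Merging with a second (hypothetical) lookup table
regions = pd.DataFrame({"city": ["Chennai", "Mumbai"], "region": ["South", "West"]})
merged = df.merge(regions, on="city", how="left")

# Pivoting: one column per city, one row per date
wide = df.pivot(index="date", columns="city", values="sales")

# Time series: resample the daily rows into weekly totals
weekly = df.set_index("date")["sales"].resample("W").sum()

print(totals)
print(wide)
```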

Installing and Importing Pandas

- We can install Pandas in Python by writing the command “pip install pandas” in the system command prompt.

- We import pandas into our program with the following syntax: import pandas as pd

3. SciPy

SciPy's original authors include Travis Oliphant, who also created NumPy. It is written mainly in Python, with performance-critical parts in C, C++, Fortran, and Cython.

SciPy, which stands for "Scientific Python", is a scientific library used in mathematics, scientific computing, engineering, and technical computing. It uses NumPy underneath. NumPy itself provides some functions for linear algebra, Fourier transforms, and random number generation, but they are not equivalent to SciPy's more complete versions.

SciPy supports tasks such as optimization (including gradient-based methods), integration, and differentiation. In short, much of general numerical computing in Python is done with SciPy.

SciPy also provides utility functions for optimization, statistics, and signal processing, which are frequently used in data science. It is organized into sub-packages that cover different scientific computing domains, such as scipy.optimize, scipy.integrate, scipy.stats, and scipy.signal.
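Three of these sub-packages are shown below in a short sketch. The function being minimized and the two samples are made-up illustrative values, not data from any real study.

```python
import numpy as np
from scipy import integrate, optimize, stats

# Optimization: minimize f(x) = (x - 3)^2 + 1, whose minimum is at x = 3
result = optimize.minimize_scalar(lambda x: (x - 3) ** 2 + 1)
print(result.x)           # approximately 3.0

# Integration: integrate sin(x) from 0 to pi (exact answer: 2)
area, abs_error = integrate.quad(np.sin, 0, np.pi)
print(area)               # approximately 2.0

# Statistics: two-sample t-test on small made-up samples
group_a = [5.1, 4.9, 5.3, 5.0, 5.2]
group_b = [5.8, 6.0, 5.9, 6.1, 5.7]
t_stat, p_value = stats.ttest_ind(group_a, group_b)
print(p_value < 0.05)     # True: the group means differ significantly
```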

Installing and Importing SciPy

- We can install SciPy in Python by writing the command “pip install scipy” in the system command prompt.

- We can import the SciPy library into our program with the following syntax: import scipy

4. SymPy

SymPy is popular in the scientific Python ecosystem. Its development was started by Ondřej Čertík, with Aaron Meurer later becoming a lead developer, and it was first released in 2007. SymPy is a library for symbolic mathematics that can be used interactively or from within programs, and it is a full-featured computer algebra system (CAS). SymPy is written in Python and depends on mpmath, a Python library for arbitrary-precision floating-point arithmetic.

SymPy has functions for calculus, polynomials, discrete math, statistics, geometry, combinatorics, matrices, physics, and plotting. It can format the results in various forms like MathML, LaTeX, etc.
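A minimal sketch of symbolic calculus, polynomial work, and LaTeX/MathML output follows; the expressions are arbitrary examples. Because SymPy works symbolically, results such as the integral below are exact rather than floating-point approximations.

```python
import sympy as sp

x = sp.symbols("x")

# Calculus: a symbolic derivative and a definite integral up to infinity
expr = x**2 * sp.sin(x)
derivative = sp.diff(expr, x)                    # x**2*cos(x) + 2*x*sin(x)
area = sp.integrate(sp.exp(-x), (x, 0, sp.oo))   # exact result: 1

# Polynomials: factor and solve exactly
poly = x**2 - 5*x + 6
factored = sp.factor(poly)                       # (x - 3)*(x - 2)
roots = sp.solve(poly, x)                        # the roots 2 and 3

# Output formats: the derivative as LaTeX and MathML strings
latex_str = sp.latex(derivative)
mathml_str = sp.mathml(derivative)

print(derivative)
print(latex_str)
```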

Installing and Importing SymPy

- We can install SymPy in Python by writing the command “pip install sympy” in the system command prompt.

- We can import SymPy into our program with the following syntax: import sympy

5. Matplotlib

Matplotlib was developed by John D. Hunter. It is written mostly in Python, with some parts in C and JavaScript.

Matplotlib is a low-level plotting library used to create 2D and 3D graphs and plots. It can be embedded in applications built with GUI toolkits such as wxPython, Tkinter, and PyQt. Used together with NumPy, Matplotlib provides an alternative to MATLAB. Its pyplot module is used for plotting graphs and provides functions to control line styles, figure size, font properties, axis formatting, and more.

We can create different kinds of graphs and plots like histograms, line charts, bar charts, power spectra, error charts, subplots, etc. by using Matplotlib.
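Here is a minimal pyplot sketch with a line chart and a histogram in one figure. It assumes the non-interactive "Agg" backend so it runs without a display, and it saves the PNG into memory; the data are generated only for illustration.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: draw off-screen, no window needed
import numpy as np
from matplotlib import pyplot as plt

x = np.linspace(0, 2 * np.pi, 100)
samples = np.random.default_rng(0).normal(size=500)

# One figure with two subplots side by side
fig, (ax_line, ax_hist) = plt.subplots(1, 2, figsize=(10, 4))

# Line chart: custom line style, color, axis labels, and legend
ax_line.plot(x, np.sin(x), linestyle="--", color="tab:blue", label="sin(x)")
ax_line.set_xlabel("x (radians)")
ax_line.set_ylabel("value")
ax_line.set_title("Line chart")
ax_line.legend()

# Histogram of 500 normally distributed samples in 20 bins
ax_hist.hist(samples, bins=20)
ax_hist.set_title("Histogram")

fig.tight_layout()

# Save as PNG; pass a file name such as "chart.png" to write to disk instead
buffer = io.BytesIO()
fig.savefig(buffer, format="png")
plt.close(fig)
```

In a Jupyter notebook or a desktop session, calling plt.show() instead of saving displays the figure interactively.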

Installing and Importing Matplotlib

- We can install Matplotlib in Python by writing the command “pip install matplotlib” in the system command prompt.

- We can import Matplotlib's pyplot module into our program with the following syntax: from matplotlib import pyplot as plt

6. Seaborn

Seaborn is used primarily for statistical data visualization. It provides a high-level interface for drawing attractive and informative statistical graphics. Seaborn is built on top of Matplotlib and makes plots that would take many lines of Matplotlib code easy to create.

Seaborn works on data frames and arrays. It helps us to explore and understand the data. Seaborn performs necessary semantic mapping and statistical aggregation to produce informative plots.

Using Seaborn, we can create histograms, joint plots, pair plots, categorical plots (called factor plots in older versions), violin plots, and more.

This library is widely used in machine learning, as well as in data science more broadly.

Key Features of Seaborn

- Several built-in themes for styling different kinds of graphics.

- Visualization of both univariate and multivariate data.

- Support for visualizing regression models used in ML.

- Easy plotting of statistical time-series data.

- Tight integration with pandas, NumPy, and other Python libraries.

Installing and Importing Seaborn

- We can install Seaborn in Python by writing the command “pip install seaborn” in the system command prompt.

- We can import the Seaborn library into our program with the following syntax: import seaborn as sns

7. Bokeh

According to the Bokeh documentation, Bokeh is a library for creating interactive visualizations for modern web browsers.

It helps us to build beautiful graphics, ranging from simple plots to complex dashboards with streaming datasets. With Bokeh, we can create JavaScript-powered visualizations without writing any JavaScript.

We can easily integrate Bokeh plots into websites built with frameworks such as Django and Flask. Bokeh has bindings for Python, R, Lua, and Julia; these produce JSON output that BokehJS uses to present data in web browsers. Bokeh results can be shown in a notebook, saved as standalone HTML, or served by a Bokeh server.

Easy interactivity, helpful error messages, export to HTML, easy integration with pandas and Jupyter Notebook, and attractive themes are among the reasons to use Bokeh for plotting. With Bokeh, our visuals can stand out compared to static Matplotlib charts.

Key features of Bokeh

- By using the simple commands of Bokeh, we can easily and quickly build complex statistical plots.

- Bokeh output is easy to embed in websites. Earlier Bokeh releases could also convert figures made with libraries such as Matplotlib and seaborn, but that compatibility layer has since been removed.

- In addition, Bokeh has flexibility for applying interaction, layouts, and different styling options to plots.
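A minimal Bokeh sketch follows: a line-and-marker plot with interactive tools, rendered to a standalone HTML string with BokehJS loaded from a CDN. The data points and titles are arbitrary; the resulting string could be written to a file or returned from a Django or Flask view.

```python
from bokeh.embed import file_html
from bokeh.plotting import figure
from bokeh.resources import CDN

x = [1, 2, 3, 4, 5]
y = [6, 7, 2, 4, 5]

# A figure with interactive pan, zoom, reset, and save tools
p = figure(
    title="Simple line example",
    x_axis_label="x",
    y_axis_label="y",
    tools="pan,wheel_zoom,box_zoom,reset,save",
)
p.line(x, y, legend_label="Trend", line_width=2)
p.scatter(x, y, size=8)

# Standalone HTML page as a string; the JavaScript is generated by Bokeh
html = file_html(p, CDN, "Bokeh demo")
```

In a notebook or script, bokeh.plotting.show(p) opens the same plot directly in a browser.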

Installing and Importing Bokeh

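- We can install Bokeh in Python by writing the command “pip install bokeh” in the system command prompt.

- We can import the Bokeh library into our program with the following syntax: import bokeh (in practice, plotting functions are usually imported with from bokeh.plotting import figure, show)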

8. Plotly

These features make Plotly worth learning:

- Plots created with Plotly are interactive.

- Plotly can export plots for print or publication.

- Plots can be manipulated or embedded on the web.

- Plotly stores charts as JSON, so they can be opened and read from different languages such as R, Python, MATLAB, and Julia.

Plotly is a data visualization library. It draws many types of graphs and charts, such as scatter plots, line charts, box plots, pie charts, histograms, and animated plots. Plotly plots can be customized extensively to make them more meaningful and understandable.

Plotly is often used for machine learning classification plots and charts because it makes the data easier to understand. It creates interactive graphs that can be shared online or saved for offline use as required.

Installing and Importing Plotly

- We can install Plotly in Python by writing the command “pip install plotly” in the system command prompt.

- We can import the Plotly library into our program with the following syntax: import plotly (its high-level interface is commonly imported as import plotly.express as px)

9. Scikit-learn

Scikit-learn began in 2007 as a Google Summer of Code project by David Cournapeau. In 2010, Fabian Pedregosa, Gaël Varoquaux, Alexandre Gramfort, and Vincent Michel of INRIA (the French Institute for Research in Computer Science and Automation) took the project further and made the first public release (v0.1 beta) on 1 February 2010.

Scikit-learn (sklearn) is used mainly in machine learning for modeling data. Its name comes from "SciPy Toolkit", as it began as an extension of SciPy. It provides learning algorithms and statistical modeling methods for classification, regression, clustering, and more.

Scikit-learn is written mainly in Python, with performance-critical parts in Cython, and it is built on NumPy, SciPy, and Matplotlib.

Above all, it provides supervised and unsupervised learning algorithms via a consistent interface in Python.

Scikit-learn is released under the permissive BSD license and is packaged in many Linux distributions, which encourages both academic and commercial use.

Scikit-learn includes regression models such as linear regression, classifiers such as logistic regression and K-Nearest Neighbors, model selection tools, preprocessing such as min-max normalization, and clustering algorithms such as K-Means (with k-means++ initialization).
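The "consistent interface" mentioned above means every estimator exposes the same fit/predict/score methods. This sketch uses the Iris dataset bundled with scikit-learn, chains min-max normalization with two different classifiers, and then clusters the same data with K-Means. The split ratio and hyperparameters are arbitrary illustrative choices.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler

# Iris: a small labeled dataset bundled with scikit-learn (no download)
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# The same fit / score calls work for any classifier placed in the pipeline
scores = {}
for model in (KNeighborsClassifier(n_neighbors=5), LogisticRegression(max_iter=1000)):
    pipe = make_pipeline(MinMaxScaler(), model)  # min-max normalization, then the model
    pipe.fit(X_train, y_train)
    scores[type(model).__name__] = pipe.score(X_test, y_test)  # test-set accuracy

# Unsupervised: K-Means with k-means++ initialization (labels y are not used)
kmeans = KMeans(n_clusters=3, init="k-means++", n_init=10, random_state=0)
cluster_labels = kmeans.fit_predict(X)

print(scores)
```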

Scikit-Learn Models

Scikit-learn provides the following groups of models and tools.

1. Supervised Learning Algorithms

It provides many supervised learning algorithms, such as Linear Regression, Support Vector Machines (SVM), Decision Trees, Naïve Bayes, and discriminant analysis.

2. Unsupervised Learning Algorithms

It provides many unsupervised learning algorithms, from clustering, PCA, and factor analysis to unsupervised neural networks.

3. Clustering

Clustering algorithms such as K-Means group unlabeled data.

4. Manifold Learning

Manifold Learning is usually used to summarize and represent complex multi-dimensional data.

5. Cross-Validation

Cross-validation is used to check the accuracy of supervised models on unseen data.

6. Dimensionality Reduction

Dimensionality reduction reduces the number of attributes (features) in data, which is useful for summarization, visualization, and feature selection. PCA (Principal Component Analysis) is a common example.

7. Ensemble Methods

Ensemble methods combine the predictions of multiple supervised models.

8. Feature Extraction

Feature extraction derives attributes (features) from raw data such as images and text.

9. Parameter Tuning

Parameter tuning helps get the most out of supervised models.

10. Feature Selection

It helps identify the meaningful attributes to use when building supervised models.

11. Datasets

It provides test datasets and can generate datasets with specific properties for investigating model behavior.
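Several of these groups can be combined in one short sketch: a generated dataset, PCA for dimensionality reduction, univariate feature selection, a random-forest ensemble scored with cross-validation, and a grid search for parameter tuning. The dataset sizes and the max_depth grid are arbitrary assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import GridSearchCV, cross_val_score

# Datasets: a synthetic problem with 10 features, 4 of them informative
X, y = make_classification(n_samples=300, n_features=10, n_informative=4,
                           random_state=0)

# Dimensionality reduction: project 10 features onto 2 principal components
X_2d = PCA(n_components=2).fit_transform(X)

# Feature selection: keep the 4 features with the highest ANOVA F-score
X_best = SelectKBest(f_classif, k=4).fit_transform(X, y)

# Ensemble method evaluated with 5-fold cross-validation
forest = RandomForestClassifier(n_estimators=100, random_state=0)
cv_scores = cross_val_score(forest, X, y, cv=5)

# Parameter tuning: grid search over tree depth, also cross-validated
search = GridSearchCV(forest, param_grid={"max_depth": [2, 4, None]}, cv=5)
search.fit(X, y)

print(cv_scores.mean(), search.best_params_)
```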

Installing and Importing Scikit-learn

- We can install scikit-learn in Python by writing the command “pip install scikit-learn” in the system command prompt.

- pip installs scikit-learn's required dependencies, such as NumPy and SciPy, automatically; pandas and Matplotlib are optional but commonly used alongside it.

- The package is installed as scikit-learn but imported as sklearn. We can import it into our program with the following syntax: import sklearn

10. Beautiful Soup- another python library

Beautiful Soup pulls data and text out of HTML and XML documents, which makes it well suited to simple web-scraping tasks. It is built to parse messy markup (often called "tag soup"): it tolerates bad HTML and presents the document as a tree that is easy to navigate and search. The library is named after the Lewis Carroll poem "Beautiful Soup" from "Alice's Adventures in Wonderland".

Installing and Importing Beautiful Soup

- We can install Beautiful Soup in python by writing the command “pip install beautifulsoup4” in the system command prompt.

- Beautiful Soup only parses documents. To download pages, pair it with a library such as requests (“pip install requests”) or the standard library's urllib.request (urllib2 in Python 2).

- Finally, import Beautiful Soup into the program with the following syntax: from bs4 import BeautifulSoup
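A minimal parsing sketch follows. To keep it runnable offline, the HTML is a hard-coded string with deliberately unclosed tags rather than a downloaded page; in real use the string would come from requests or urllib.request.

```python
from bs4 import BeautifulSoup

# Deliberately messy HTML: the <li> and <p> tags are never closed
html = """
<html><body>
  <h1>Libraries</h1>
  <ul>
    <li><a href="https://numpy.org">NumPy</a>
    <li><a href="https://pandas.pydata.org">pandas</a>
  </ul>
  <p class="note">Parsed with Beautiful Soup
</body></html>
"""

soup = BeautifulSoup(html, "html.parser")  # Python's built-in parser

title = soup.h1.get_text()                 # text of the first <h1>
links = [(a.get_text(), a["href"]) for a in soup.find_all("a")]
note = soup.find("p", class_="note").get_text(strip=True)

print(title)   # Libraries
print(links)
print(note)    # Parsed with Beautiful Soup
```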

11. Scrapy

Scrapy is used for large-scale web scraping. With it, we can extract data from websites, process it as required, and store it in a suitable structure and format. Scrapy can fetch millions of records. It uses spiders, which are self-contained crawlers, and it makes large crawling projects easy to build and scale by reusing code.

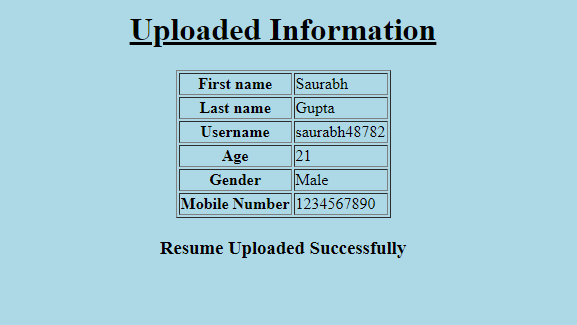

Difference between Two Python Libraries: Scrapy and Beautiful Soup

In data science, we use both Scrapy and Beautiful Soup to extract data from the web. For complex extraction, however, Scrapy is generally more popular than Beautiful Soup, for the reasons summarized below.

| Beautiful Soup | Scrapy |
| --- | --- |
| An HTML and XML parser, used together with libraries such as requests or urllib (urllib2 in Python 2) to open URLs and save the results. | A complete web-scraping framework, so no additional library is needed. It processes the extracted data and saves it to files and databases. |
| Best suited to simple scraping work. Without multiprocessing, it is slower than Scrapy because it works synchronously: each task must finish before the next one starts. | Suited to complex scraping work; it can crawl a group of URLs quickly, with the time taken depending on the group size. It is built on Twisted, an asynchronous networking framework, so it can start the next request before the previous one completes. |
| Easy to understand and quick to learn; small tasks can be done within a minute. | Offers many ways to extract pages and many functions, so it takes longer to understand and learn. |
| In short, use Beautiful Soup where little extra logic is required. | Use Scrapy where more customization is required, such as data pipelines, cookie management, and proxies. |

Installing and Importing Scrapy

- We can install Scrapy in Python by writing the command “pip install scrapy” in the system command prompt.

- Alternatively, in an Anaconda or Miniconda environment, install it with the command “conda install -c conda-forge scrapy”.

- Finally, import the Scrapy library into our program with the following syntax: import scrapy

Kickstart your Machine Learning journey by enrolling in GUVI’s Machine Learning Career Program where you will master technologies like matplotlib, pandas, SQL, NLP, and deep learning, and build interesting real-life UI/UX projects. Alternatively, if you want to explore Python through a Self-paced course, try GUVI’s Python Self-Paced certification course.

Conclusion

The growing demand for computing expertise is likely to drive further specialization of roles across data science, and it will be interesting to see how the field evolves over the next few years.

Now that we have covered these Python libraries, we are ready to dive into the exciting and rewarding world of data science and machine learning.
