Data Engineer Roles and Responsibilities in 2025

Mar 28, 2025 (Last Updated)

Data engineering is among the most in-demand careers today. As organizations rely more on data-driven decision-making, understanding what a data engineer does is the first step toward a successful career in the field.

These professionals architect and maintain data systems that power modern businesses. Their responsibilities span from building streamlined data pipelines to maintaining data quality and security.

This article outlines the core data engineer roles and responsibilities that leading tech companies demand in 2025. You’ll discover everything from pipeline development to data governance and learn the skills required to thrive in this ever-changing field.

Table of contents

- Who is a data engineer and what do they do?

- Top Data Engineer Roles and Responsibilities

  - 1) Key Data Engineering Responsibilities

  - 2) Top 5 Data Engineering Roles in 2025

    - 1) Data Engineer

    - 2) Machine Learning Engineer

    - 3) Data Architect

    - 4) Big Data Engineer

    - 5) DataOps Engineer

- FAQs

  - What are data engineer roles and responsibilities?

  - Is an ETL developer a data engineer?

  - What skills should a data engineer have?

Who is a data engineer and what do they do?

A data engineer builds and maintains the systems that collect, process, and store data. As a data engineer, your main goal will be to develop scalable data pipelines and architectures that data scientists use for analysis.

Data engineers handle the vital first stage of the data science workflow: data collection and storage. You will create systems to gather, house, and clean raw data that data scientists need for their work. You will also prepare data for analysis by fixing incomplete, corrupted, or improperly formatted information.

Core Responsibilities:

Data engineers design and maintain data pipelines that turn raw information from multiple sources into clean, reliable data. You will create and optimize data models to support analytics and machine learning initiatives. Your work ensures that data flows smoothly and securely from source to destination.

Key Technical Functions:

- Pipeline Development: You will build automated systems that collect data from many sources, including structured and unstructured data. These pipelines need constant monitoring and updates as data requirements change.

- Data Architecture: You will select the right technologies and write custom code where needed. This work includes managing databases and data warehouses and making storage more efficient.

- ETL Process Management: You will manage Extract, Transform, Load (ETL) operations to move data through defined stages. Depending on the use case, the order may change to ELT (Extract, Load, Transform), where raw data is loaded first and transformed inside the target system.
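
To make the ETL stages above concrete, here is a minimal sketch using only the Python standard library. The sample CSV, the `orders` table, and the function names are assumptions made for illustration; a production pipeline would read from real sources and would normally run under an orchestrator.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file, API, or queue.
RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,,usd
3,5.00,USD
"""


def extract(text):
    """Extract: parse the raw CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with no amount, then normalize types and casing."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # incomplete record, skipped in this sketch
        cleaned.append(
            (int(row["order_id"]), float(row["amount"]), row["currency"].upper())
        )
    return cleaned


def load(conn, rows):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn, text=RAW_CSV):
    """Run the three stages in ETL order."""
    load(conn, transform(extract(text)))


conn = sqlite3.connect(":memory:")
run_etl(conn)
print(conn.execute("SELECT order_id, amount, currency FROM orders ORDER BY order_id").fetchall())
# prints [(1, 19.99, 'USD'), (3, 5.0, 'USD')]
```

In an ELT variant, the raw rows would be loaded into a staging table first, and the cleanup would run as SQL inside the database.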

Collaboration and Business Impact:

Data engineers work hand in hand with data scientists, analysts, and business stakeholders. You will bridge the gap between software development and data science. Through this collaboration, you will help teams:

- Improve data models for business intelligence tools

- Support evidence-based decision making in organizations

- Keep production data accurate for key stakeholders

Top Data Engineer Roles and Responsibilities

A data engineer’s job needs specific skills that blend technical and business knowledge. You’ll design, build, and maintain data systems that help modern organizations run smoothly.

Data engineers connect raw data to useful insights.

Your main job as a data engineer is to create flexible systems that can handle growing data loads while staying reliable. Good system design helps keep data available and accurate for everyone who needs it.

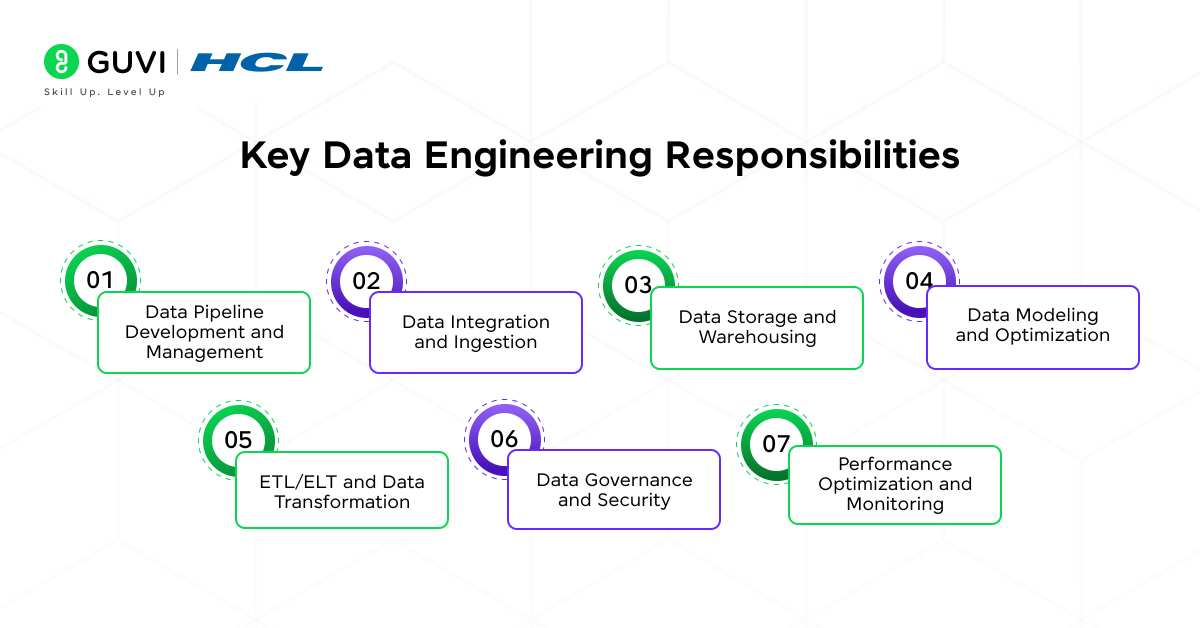

1) Key Data Engineering Responsibilities

Data engineering today covers many responsibilities that form the foundation of data-driven organizations. Your role as a data engineer requires mastery of multiple technical domains and business processes, ranging from foundational architecture to advanced analytics support.

A) Data Pipeline Development and Management

Reliable data pipelines are the lifeblood of successful data engineering. These pipelines work like arteries in your data infrastructure and continuously move information across systems. What you must know:

- Building and Maintaining Scalable Data Pipelines – Data engineers develop ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) pipelines using tools like Apache Airflow, Apache Beam, and AWS Glue. These pipelines automate data movement across multiple systems, whether the data is processed in real time or in batches.

- Handling Batch and Real-Time Data Processing – Batch processing is ideal for scheduled data workflows, whereas real-time processing supports live analytics and monitoring applications. Technologies like Apache Spark, Apache Kafka, and Apache Flink help engineers optimize processing workflows based on business needs.

- Ensuring Data Integrity and Minimal Latency – Engineers implement data validation, deduplication, and schema enforcement to maintain high-quality datasets. Minimizing data latency is crucial for applications requiring real-time analytics, such as fraud detection and stock market prediction.
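
The validation, deduplication, and schema enforcement steps mentioned above can be sketched in plain Python. The `SCHEMA` mapping, the field names, and the sample events are hypothetical; dedicated data quality tools handle far more cases, so treat this as an illustration of the idea rather than a reference implementation.

```python
from datetime import datetime

# Hypothetical schema: field name -> callable that converts the value or raises.
SCHEMA = {
    "event_id": int,
    "user_id": str,
    "occurred_at": datetime.fromisoformat,
}


def enforce_schema(record):
    """Return a typed copy of the record, or raise ValueError if it does not fit."""
    missing = SCHEMA.keys() - record.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    try:
        return {name: cast(record[name]) for name, cast in SCHEMA.items()}
    except (TypeError, ValueError) as exc:
        raise ValueError(f"bad value in {record!r}: {exc}") from exc


def clean(records):
    """Validate each record and keep the first occurrence of every event_id."""
    seen, valid, rejected = set(), [], []
    for record in records:
        try:
            typed = enforce_schema(record)
        except ValueError as exc:
            rejected.append((record, str(exc)))  # keep the reason for later review
            continue
        if typed["event_id"] in seen:
            continue  # duplicate delivery, silently dropped in this sketch
        seen.add(typed["event_id"])
        valid.append(typed)
    return valid, rejected


events = [
    {"event_id": "1", "user_id": "u1", "occurred_at": "2025-03-28T10:00:00"},
    {"event_id": "1", "user_id": "u1", "occurred_at": "2025-03-28T10:00:00"},  # duplicate
    {"event_id": "x", "user_id": "u2", "occurred_at": "2025-03-28T10:05:00"},  # bad id
    {"event_id": "2", "user_id": "u3"},  # missing timestamp
]
valid, rejected = clean(events)
print(len(valid), len(rejected))  # prints 1 2
```

Collecting rejected records together with the reason, instead of discarding them, makes it easier to trace quality problems back to the source system.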

B) Data Integration and Ingestion

Your success with data integration depends on knowing how to handle data sources of all types. Organizations now generate huge amounts of information. You need to become skilled at data integration to keep a unified view of your business operations.

Data engineers integrate data from multiple sources—including APIs, databases, IoT devices, and third-party platforms—into a centralized system. What you must know:

- Extracting Data from Various Sources – Engineers work with structured data (e.g., relational databases), semi-structured data (e.g., JSON, XML), and unstructured data (e.g., logs, images). Extraction tools like Apache NiFi, AWS Data Pipeline, and Google Cloud Dataflow facilitate automated data ingestion.

- Using Streaming & Batch Ingestion Tools – For real-time data streaming, engineers leverage Apache Kafka, AWS Kinesis, and Confluent. Batch ingestion, on the other hand, relies on tools like Sqoop, Talend, and Informatica.

- Transforming and Loading Data Efficiently – Data engineers preprocess and clean data using transformations, schema mapping, and aggregation before storing it in warehouses or data lakes. Cloud-based solutions like Google Cloud Dataflow and Azure Data Factory streamline this process.

C) Data Storage and Warehousing

Smart storage strategies build the foundation of resilient data management systems. You’ll need to create sophisticated solutions that balance performance, cost, and accessibility while keeping data secure and available.

Data engineers handle multiple storage solutions, ensuring optimal performance and accessibility. What you must know:

- Managing Databases, Data Lakes, and Warehouses – Engineers oversee various storage solutions, including:

  - Relational Databases (e.g., PostgreSQL, MySQL, SQL Server) for structured data.

  - NoSQL Databases (e.g., MongoDB, Cassandra, DynamoDB) for handling semi-structured and unstructured data.

  - Data Warehouses (e.g., Snowflake, Redshift, BigQuery, Azure Synapse) for business intelligence and analytics.

  - Data Lakes (e.g., AWS S3, Azure Data Lake) for storing large-scale raw data.

- Optimizing Storage Performance – Engineers implement indexing, partitioning, and compression techniques to enhance query performance and reduce storage costs. For example, columnar storage formats like Parquet and ORC significantly improve read speeds in analytical queries.
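
To illustrate why partitioning helps, the sketch below groups rows by day so that a query for one day only touches that day's partition. It is a toy in-memory model with made-up rows and helper names; real systems apply the same idea to files or table partitions on disk.

```python
from collections import defaultdict


def partition_by_day(rows):
    """Group rows into partitions keyed by the date part of their timestamp."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row["ts"][:10]].append(row)  # "YYYY-MM-DD" prefix of the ISO timestamp
    return dict(partitions)


def total_for_day(partitions, day):
    """Sum amounts for one day and report how many rows had to be scanned."""
    rows = partitions.get(day, [])  # only the matching partition is read
    return sum(row["amount"] for row in rows), len(rows)


# Hypothetical sample data.
rows = [
    {"ts": "2025-03-27T09:00:00", "amount": 10},
    {"ts": "2025-03-27T17:30:00", "amount": 5},
    {"ts": "2025-03-28T08:15:00", "amount": 7},
]
partitions = partition_by_day(rows)
total, scanned = total_for_day(partitions, "2025-03-28")
print(total, scanned, "of", len(rows))  # prints 7 1 of 3
```

The query scans one row instead of three. On large datasets the same pruning is what lets a warehouse skip most of the stored data for a date-filtered query.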

D) Data Modeling and Optimization

A data model is a blueprint of an information system that helps organize and interpret complex datasets. When you design and implement data models properly, they power both analytics and machine learning initiatives.

Effective data modeling ensures efficient query performance and supports analytical applications. What you must know:

- Designing Scalable Data Models – Engineers create normalized and denormalized database schemas based on business requirements. The star schema and snowflake schema are commonly used for data warehousing.

- Optimizing Query Performance – Data engineers write optimized SQL queries and use materialized views, indexing, and caching strategies to reduce execution time.

- Choosing the Right Database Type – Depending on the use case, engineers select:

  - SQL Databases (PostgreSQL, MySQL) for transactional consistency.

  - NoSQL Databases (MongoDB, DynamoDB) for flexible, high-speed storage.
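
As a rough illustration of the star schema mentioned above, the following sketch builds one fact table and two dimension tables in an in-memory SQLite database. The table names, columns, and sample rows are invented for this example; a real warehouse model would be driven by actual business requirements.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, day TEXT, month TEXT);

-- The fact table holds measures plus a foreign key to each dimension.
CREATE TABLE fact_sales (
    sale_id INTEGER PRIMARY KEY,
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    quantity INTEGER,
    revenue REAL
);

INSERT INTO dim_product VALUES (1, 'Keyboard', 'Hardware'), (2, 'IDE license', 'Software');
INSERT INTO dim_date VALUES (20250327, '2025-03-27', '2025-03'), (20250328, '2025-03-28', '2025-03');
INSERT INTO fact_sales VALUES
    (1, 1, 20250327, 2, 100.0),
    (2, 2, 20250327, 1, 50.0),
    (3, 1, 20250328, 1, 50.0);
""")


def revenue_by_category(conn):
    """Join the fact table to a dimension and aggregate a measure."""
    return conn.execute("""
        SELECT p.category, SUM(f.revenue)
        FROM fact_sales AS f
        JOIN dim_product AS p ON p.product_id = f.product_id
        GROUP BY p.category
        ORDER BY p.category
    """).fetchall()


print(revenue_by_category(conn))  # prints [('Hardware', 150.0), ('Software', 50.0)]
```

A snowflake schema would go one step further and split a dimension such as `dim_product` into additional normalized tables, for example a separate category table.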

E) ETL/ELT and Data Transformation

Mastering both ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes lets you turn raw data into valuable insights. These methods form the foundation of modern data pipelines that help organizations process and analyze information.

What you must know:

- Developing Automated ETL Pipelines – Engineers build pipelines using tools like Apache Airflow, dbt (data build tool), and Talend to process data at scale.

- Implementing Business-Specific Transformations – Data cleansing, feature engineering, and aggregation are performed to tailor data for analytics and reporting.

- Optimizing ETL Performance – Engineers implement parallel processing, caching, and workload partitioning to enhance ETL efficiency.
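
Workload partitioning and parallel processing, as described above, can be sketched with Python's standard `concurrent.futures` module. The chunk size, worker count, and the name-cleaning transformation are arbitrary choices for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def transform_chunk(chunk):
    """Hypothetical transformation: trim and title-case names, drop blanks."""
    return [name.strip().title() for name in chunk if name.strip()]


def parallel_transform(items, chunk_size=2, workers=4):
    """Transform chunks concurrently; pool.map returns results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(transform_chunk, chunked(items, chunk_size))
        return [row for chunk in results for row in chunk]


names = ["  ada lovelace", "grace hopper ", "", "alan turing", "   "]
print(parallel_transform(names))  # prints ['Ada Lovelace', 'Grace Hopper', 'Alan Turing']
```

Threads suit I/O-bound steps such as API calls or database reads. For CPU-heavy transformations in CPython, `ProcessPoolExecutor` or a distributed engine such as Spark is usually the better fit.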

Organizations that use these transformation strategies well end up with consistent data, accurate analysis, and better decisions. Data engineers who pay attention to both technical details and business needs build reliable transformation pipelines that help organizations grow and innovate.

F) Data Governance and Security

Organizations need to implement strong security measures and governance frameworks to protect sensitive data assets. Trust and compliance depend on complete data protection strategies as companies handle more personal information.

With increasing data privacy regulations, data governance and security are crucial for compliance. What you must know:

- Implementing Security Best Practices – Engineers enforce encryption at rest and in transit (e.g., AES-256 and TLS), role-based access control (RBAC), and multi-factor authentication (MFA) to protect sensitive data.

- Ensuring Compliance with Regulations – Adhering to GDPR, HIPAA, and CCPA requires setting up access controls, anonymizing personal data, and logging data lineage.

- Monitoring Data Quality & Anomalies – Engineers use tools like Great Expectations, Monte Carlo, and Collibra to automate data quality checks, track schema changes, and detect anomalies.
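
Two of the controls above, masking personal data and role-based access control, can be illustrated with a short standard-library sketch. The key, the roles, and the permission sets are placeholders. Note that keyed hashing is pseudonymization rather than full anonymization, so data treated this way generally still counts as personal data under regulations such as GDPR.

```python
import hashlib
import hmac

# Placeholder key for illustration only; a real key would come from a secrets manager.
SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"

# Hypothetical role-to-permission mapping for a simple RBAC check.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
}


def pseudonymize(value, key=SECRET_KEY):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def is_allowed(role, action):
    """Return True only if the role is known and grants the action."""
    return action in ROLE_PERMISSIONS.get(role, set())


record = {"email": "user@example.com", "plan": "pro"}
safe = {**record, "email": pseudonymize(record["email"])}

print(safe["email"] != record["email"])  # prints True
print(is_allowed("analyst", "write"))    # prints False
print(is_allowed("engineer", "write"))   # prints True
```

Because the digest is deterministic for a given key, the same email always maps to the same token, which keeps joins across tables possible without exposing the original value.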

This complete security and governance approach will protect sensitive data while maintaining regulatory compliance. Your data will stay secure, available, and properly managed throughout its lifecycle.

G) Performance Optimization and Monitoring

System performance optimization lies at the core of smooth data operations. As data volumes grow, database operations need fine-tuning, and system health monitoring becomes crucial to maintaining peak efficiency.

Data engineers are responsible for optimizing system performance to ensure efficiency and reliability. What you must know:

- Query Optimization – Engineers fine-tune SQL queries by indexing, partitioning, and using caching mechanisms to speed up execution times.

- System Monitoring & Performance Tuning – Engineers track system performance using monitoring tools like Datadog, Prometheus, and Grafana to identify bottlenecks and optimize resource utilization.

- Scalability & Load Balancing – Engineers use auto-scaling, parallelism, and containerized deployments (Docker, Kubernetes) to handle increasing data workloads.
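
The query optimization point above can be demonstrated with SQLite's `EXPLAIN QUERY PLAN`, which shows whether a query scans the whole table or uses an index. The `events` table and index name are made up for this sketch, and the exact plan wording varies between SQLite versions, so the code only checks whether the index name appears.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, payload TEXT)")
conn.executemany(
    "INSERT INTO events (user_id, payload) VALUES (?, ?)",
    [(i % 100, "x") for i in range(1000)],  # 1000 rows spread over 100 users
)

QUERY = "SELECT COUNT(*) FROM events WHERE user_id = ?"


def plan(conn):
    """Return the human-readable plan text (last column of each plan row)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + QUERY, (42,)).fetchall()
    return " ".join(row[-1] for row in rows)


before = plan(conn)  # without an index, SQLite has to scan the table
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
after = plan(conn)   # with the index, the plan should reference idx_events_user

print(before)
print(after)
print("idx_events_user" in after)  # prints True
```

The same habit applies to any SQL engine: inspect the plan before and after a change rather than assuming an index or rewrite helped.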

These monitoring and optimization strategies help maintain peak system performance and reliable data operations. Your team can solve issues before they grow and keep the system running smoothly.

If you’re looking to build a successful career in data engineering, GUVI’s Big Data Engineering Course is your gateway to mastering cutting-edge tools like Hadoop, Spark, and Kafka. Designed by industry experts, this hands-on program covers data pipelines, cloud platforms, and real-world big data projects to make you job-ready.

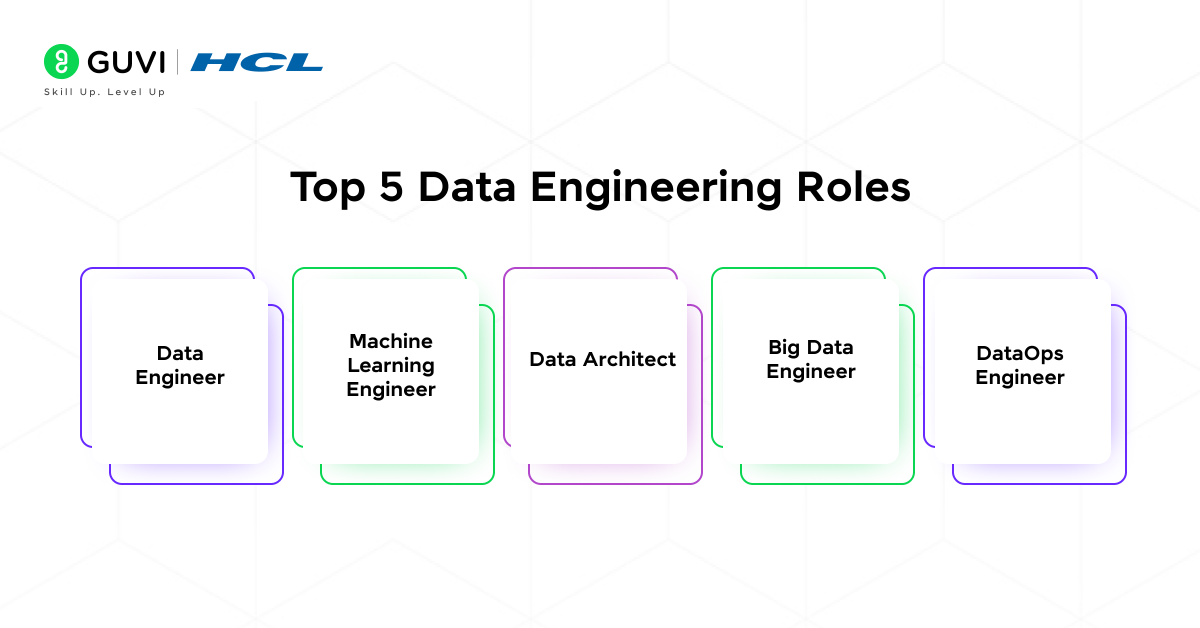

2) Top 5 Data Engineering Roles in 2025

The roles discussed here represent the most in-demand career paths in data engineering for 2025. For each one, we cover what the role involves, the skills it requires, and typical salaries in India.

1) Data Engineer

What do they do?

As a data engineer, your primary responsibility is to build, maintain, and optimize scalable data pipelines that enable businesses to store, process, and analyze large datasets efficiently. You work closely with data scientists and analysts to ensure that raw data is transformed into a usable format, stored in data warehouses, and accessible via APIs or other data processing tools.

Must-Have Skills:

- Strong proficiency in SQL, Python, and Java

- Expertise in cloud platforms like AWS, GCP, or Azure

- Experience with big data frameworks like Hadoop, Spark, and Kafka

- Knowledge of ETL (Extract, Transform, Load) processes

- Data modeling and database management skills

Salary in India: The average salary for a data engineer in India ranges between ₹7-15 LPA, with senior-level professionals earning ₹20-30 LPA.

2) Machine Learning Engineer

What do they do?

As a machine learning engineer, you focus on building and deploying machine learning models at scale. Your role involves working with data engineers to create robust data pipelines that enable the training and inference of ML models. You also optimize models for performance, scalability, and real-time applications.

Must-Have Skills:

- Proficiency in Python, TensorFlow, and PyTorch

- Strong understanding of data preprocessing techniques

- Experience with MLOps tools like Kubeflow and MLflow

- Knowledge of cloud-based AI services like AWS SageMaker or Google Vertex AI

- Model deployment using APIs and containerization (Docker, Kubernetes)

Salary in India: The average salary for a machine learning engineer is ₹10-20 LPA, with top professionals earning ₹30-40 LPA.

3) Data Architect

What do they do?

As a data architect, you design and implement scalable data infrastructure solutions that support an organization’s analytics and machine learning needs. Your focus is on ensuring data quality, security, and governance while structuring data storage solutions for optimal performance.

Must-Have Skills:

- Expertise in data modeling, database design, and cloud storage solutions

- Proficiency in SQL, NoSQL, and distributed database systems

- Experience with data governance frameworks and compliance standards

- Knowledge of big data and data warehousing technologies like Hadoop, Spark, and Snowflake

- Strong problem-solving and analytical skills

Salary in India: The average salary for a data architect is ₹15-30 LPA, with highly experienced professionals earning up to ₹50 LPA.

4) Big Data Engineer

What do they do?

As a big data engineer, you handle massive datasets that require high-performance computing solutions. Your work involves designing data lakes, implementing real-time processing frameworks, and optimizing data pipelines for large-scale analytics.

Must-Have Skills:

- Expertise in Apache Spark, Hadoop, and Kafka

- Proficiency in Python, Scala, and Java

- Strong understanding of distributed computing frameworks

- Experience with real-time data processing and streaming analytics

- Cloud data engineering with AWS, Azure, or GCP

Salary in India: The average salary for a big data engineer ranges between ₹8-18 LPA, with experienced professionals earning ₹25-35 LPA.

5) DataOps Engineer

What do they do?

As a DataOps engineer, you streamline the data engineering workflow by integrating DevOps principles into data management. Your goal is to automate data pipelines, monitor data quality, and ensure smooth data operations across cloud and on-premises environments.

Must-Have Skills:

- Strong experience with CI/CD pipelines and automation tools

- Knowledge of Kubernetes, Docker, and Terraform

- Expertise in cloud data platforms like AWS Glue, Google Cloud Dataflow, and Azure Data Factory

- Proficiency in scripting languages like Python and Bash

- Data security and compliance knowledge

Salary in India: The average salary for a DataOps engineer is ₹10-20 LPA, with senior professionals earning ₹25-40 LPA.

Concluding Thoughts…

Data engineers need to excel in multiple technical domains, from pipeline development to performance optimization. Successful data engineers don’t just focus on technical skills. They balance system architecture with business objectives to keep data available, secure, and valuable for stakeholders.

Tools and technologies change quickly, but core engineering principles stay the same. Knowing how to design flexible solutions, implement strong security measures, and optimize system performance will directly contribute to your organization's success.

Data engineering offers strong career growth opportunities, especially as companies build their data capabilities. These skills prepare you for roles in companies of all sizes, from startups to large enterprises.

FAQs

What are data engineer roles and responsibilities?

A data engineer designs, builds, and maintains data pipelines, ensuring data is collected, stored, and processed efficiently. Responsibilities include data modeling, ETL development, database management, and optimizing data workflows for analytics.

A data engineer also develops scalable data architectures, automates data ingestion, ensures data integrity, and collaborates with data scientists to improve the accessibility and performance of data systems.

Is an ETL developer a data engineer?

Yes, an ETL developer is a specialized data engineer focusing on Extract, Transform, Load (ETL) processes to integrate and manage data from various sources into a structured format.

What skills should a data engineer have?

Key skills include SQL, Python, Java, ETL tools, cloud platforms (AWS, Azure, GCP), big data technologies (Hadoop, Spark), database management, and data pipeline automation.
