9 Must-Have Skills to Become a Data Engineer

Last Updated: Apr 23, 2025

Are you confused about what skills you should learn to become a data engineer? I totally get it – figuring out the right skills to focus on can be tricky when you’re just starting out. But don’t worry, I’ve got you covered!

In this blog, we’ll go through the 9 essential skills you need to become a successful data engineer. Whether you’re new to the field or looking to level up your expertise, this guide will give you a clear path to follow. Let’s dive in and get you on the right track!

Table of contents

- Who is a Data Engineer?

- Roles and Responsibilities

- Top 9 Technical Data Engineer Skills To Become a Data Engineer

- Programming Languages

- Database Management

- Data Modeling Techniques

- ETL

- Data Pipelines

- Big Data Technologies

- Machine Learning

- Cloud Computing

- DevOps

- Non-technical Skills For a Data Engineer [Bonus]

- Problem Solving Skills

- Communication Skills

- Collaboration Skills

- Conclusion

- FAQs

- Q1. How much does a data engineer earn in India?

- Q2. Is a degree in computer science necessary to become a data engineer?

- Q3. What are the top programming languages I should learn as a data engineer?

- Q4. Can I transition to a data engineering role from a different field?

Who is a Data Engineer?

A data engineer is a software professional who designs, builds, and maintains the infrastructure needed to collect, store, manage, process, and analyze data. They work with large amounts of data to help businesses make better decisions. Let’s look at the roles and responsibilities of a data engineer in more detail.

Roles and Responsibilities

The roles and responsibilities of a data engineer include:

- Creating pipelines to ensure the data is collected, cleaned and available for analysis.

- Designing and managing databases and data warehouses for efficient storage and retrieval.

- Optimizing the data flow so that it can be easily accessed by data scientists and analysts.

If you enjoy working with databases, writing code, and building pipelines, data engineering might be a great fit for you.

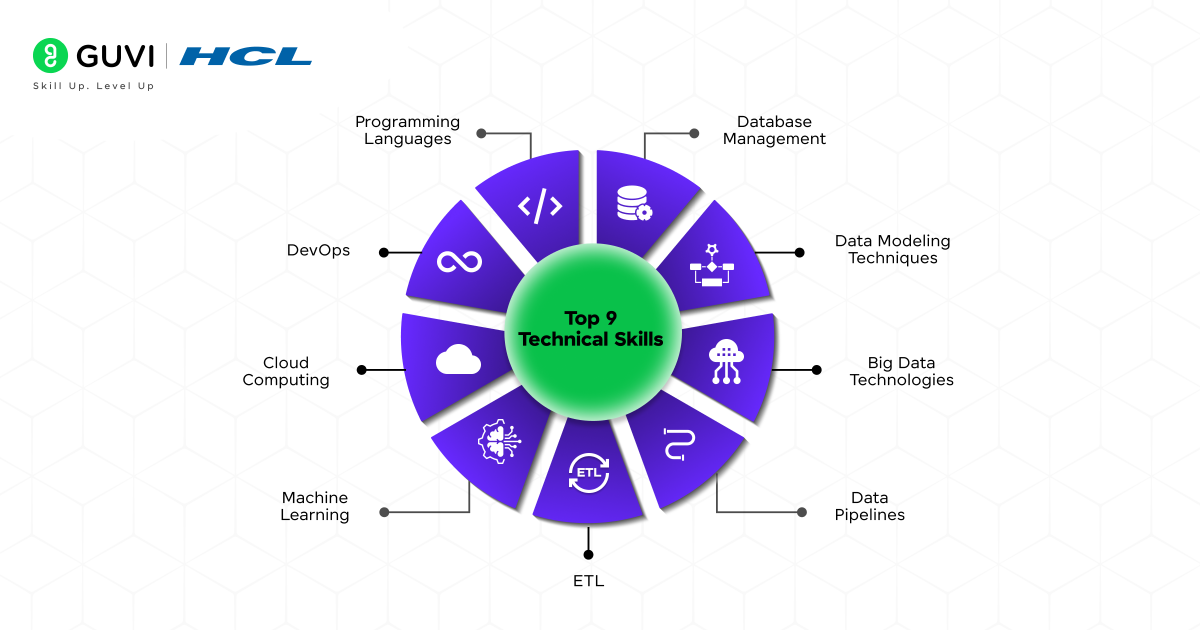

Top 9 Technical Data Engineer Skills To Become a Data Engineer

Technical skills are essential for every data engineer; they are what allow you to carry out the roles and responsibilities described above. In this section, we will look at the top 9 technical skills you should learn to become a successful data engineer.

1. Programming Languages

Programming is the number 1 skill a data engineer should master. There are many programming languages, such as C, C++, and Java, but for everyday data handling tasks, Python is the one you need to master.

Python is one of the most widely used programming languages for working with data and is known for its simple, readable syntax. Even people with no technical background can learn the basics quickly and start programming. Data engineers use Python for data processing, automation, and pipeline development.

Libraries like Pandas and NumPy enable efficient data manipulation, while frameworks like Apache Airflow help in workflow orchestration. Understanding Python basics, including control structures, functions, and object-oriented programming, is essential for building scalable and maintainable data solutions. Additionally, integrating Python with databases, APIs, and cloud services enhances its role in data engineering workflows.
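To make this concrete, here is a minimal sketch of a typical cleaning step in Python. It uses only the standard library so it runs anywhere (Pandas would be the more common choice in practice); the CSV data and the `parse_amount` and `clean_rows` helpers are hypothetical.

```python
from __future__ import annotations

import csv
import io

# Hypothetical raw export: stray whitespace, inconsistent casing, a missing amount.
RAW_CSV = """order_id,customer,amount
1, alice ,120.50
2,BOB,
3,Carol,80
"""

def parse_amount(value: str) -> float | None:
    """Convert a raw amount string to a float, or None if it is blank."""
    value = value.strip()
    return float(value) if value else None

def clean_rows(text: str) -> list[dict]:
    """Read CSV text and return cleaned records, dropping rows without an amount."""
    cleaned = []
    for row in csv.DictReader(io.StringIO(text)):
        amount = parse_amount(row["amount"])
        if amount is None:
            continue  # skip incomplete records
        cleaned.append({
            "order_id": int(row["order_id"]),
            "customer": row["customer"].strip().title(),  # " alice " -> "Alice"
            "amount": amount,
        })
    return cleaned

if __name__ == "__main__":
    rows = clean_rows(RAW_CSV)
    print(rows)
    print("total:", sum(r["amount"] for r in rows))
```

Small, single-purpose functions like these are easy to test and to reuse later as steps in an orchestrated pipeline.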

2. Database Management

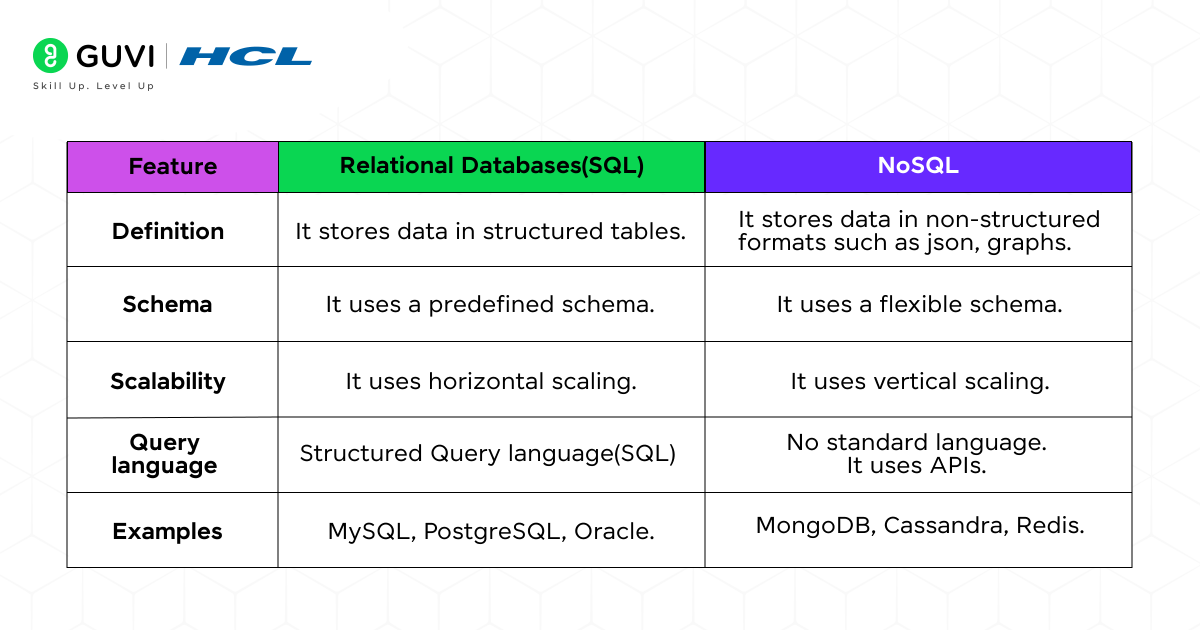

The number 2 skill that a data engineer should learn is database management. A database is an organized collection of data stored electronically. Database management is the practice of storing, retrieving, and managing that data and the transactions that change it, usually through a database management system (DBMS).

The two main types of databases are relational (SQL) databases and NoSQL databases. Here is how they differ:

| Feature | Relational Databases (SQL) | NoSQL |
| --- | --- | --- |
| Definition | Stores data in structured tables of rows and columns. | Stores data in non-tabular formats such as JSON documents, key-value pairs, wide columns, or graphs. |
| Schema | Uses a predefined schema. | Uses a flexible schema. |
| Scalability | Traditionally scales vertically (a bigger server). | Typically scales horizontally (more servers). |
| Query language | Structured Query Language (SQL) | No single standard; each database has its own query language or API. |
| Examples | MySQL, PostgreSQL, Oracle | MongoDB, Cassandra, Redis |

Understanding these differences, and knowing how to work with both kinds of database from Python, is essential to succeeding in data engineering.
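As a small illustration of working with a relational database from Python, here is a sketch using the built-in `sqlite3` module. An in-memory SQLite database stands in for a server such as MySQL or PostgreSQL, and the `users` table is hypothetical.

```python
import sqlite3

# An in-memory SQLite database stands in for MySQL/PostgreSQL in this sketch.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL, city TEXT)"
)

# Parameterized inserts (the ? placeholders) protect against SQL injection.
users = [("Asha", "Chennai"), ("Ravi", "Bangalore"), ("Meena", "Chennai")]
conn.executemany("INSERT INTO users (name, city) VALUES (?, ?)", users)
conn.commit()

# A simple aggregate query: how many users live in each city?
rows = conn.execute(
    "SELECT city, COUNT(*) FROM users GROUP BY city ORDER BY city"
).fetchall()
print(rows)  # [('Bangalore', 1), ('Chennai', 2)]
```

For a NoSQL store such as MongoDB you would use that database's own Python driver instead, but the workflow of connecting, writing, and querying is similar.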

3. Data Modeling Techniques

Data modeling is the process of designing how data is stored and retrieved to ensure efficiency, scalability, consistency, and availability. This involves creating conceptual, logical, and physical models of how the data should be organized, related, and stored in databases.

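Different kinds of databases call for different data modeling techniques. For relational databases such as MySQL, entity-relationship (ER) modeling is used: you identify entities, their attributes, and the relationships between them, then map them to tables linked by keys. For NoSQL databases such as MongoDB, modeling centers on schema design around how the data will be read, for example deciding whether to embed related data in one document or reference it from another collection.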
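Here is a sketch of the same customer/order data modeled both ways. The relational version uses two tables linked by a foreign key (run with SQLite); the document version embeds the orders inside a plain Python dict shaped like a MongoDB-style document. All table, field, and value names are hypothetical.

```python
import json
import sqlite3

# Relational (entity-relationship) model: two entities linked by a foreign key.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL NOT NULL
);
""")
conn.execute("INSERT INTO customers VALUES (1, 'Asha')")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(10, 1, 250.0), (11, 1, 99.5)])

# The relationship is reassembled at query time with a join.
joined = conn.execute("""
    SELECT c.name, o.order_id, o.amount
    FROM customers c JOIN orders o ON o.customer_id = c.customer_id
    ORDER BY o.order_id
""").fetchall()
print(joined)

# Document model: the same data embedded in a single document, which suits
# access patterns that always read a customer together with their orders.
customer_doc = {
    "_id": 1,
    "name": "Asha",
    "orders": [
        {"order_id": 10, "amount": 250.0},
        {"order_id": 11, "amount": 99.5},
    ],
}
print(json.dumps(customer_doc, indent=2))
```

The trade-off: the relational design avoids duplicating data and enforces integrity, while the embedded document answers the "customer with orders" query in a single read.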

Mastering these foundational skills provides a strong base for aspiring data engineers, enabling them to work efficiently with data pipelines, databases, and analytical systems.

4. ETL

Next comes ETL, which stands for Extract, Transform, and Load. It is the foundation of most data pipelines. The Extract phase involves collecting raw data from various sources such as databases, APIs, log files, or streaming services.

The Transform phase processes the data by cleaning, filtering, aggregating, or enriching it to fit the required format. Finally, the Load phase stores the transformed data in a data warehouse, data lake, or analytics system for further analysis.

Modern data pipelines may also use ELT (Extract, Load, Transform), where raw data is first loaded and then transformed within the storage system, allowing greater flexibility and scalability.
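The three ETL phases map naturally onto three functions. Here is a minimal sketch, assuming hypothetical weather readings as the source and an in-memory SQLite table standing in for the data warehouse.

```python
from __future__ import annotations

import sqlite3

def extract() -> list[dict]:
    """Extract: hypothetical raw records, e.g. as returned by an API or read from logs."""
    return [
        {"city": "chennai", "temp_c": "31.5"},
        {"city": "Chennai", "temp_c": "33.0"},
        {"city": "mumbai", "temp_c": "bad-reading"},
        {"city": "Mumbai", "temp_c": "29.0"},
    ]

def transform(records: list[dict]) -> list[tuple[str, float]]:
    """Transform: validate, normalize city names, and average temperatures per city."""
    totals: dict[str, tuple[float, int]] = {}
    for rec in records:
        try:
            temp = float(rec["temp_c"])
        except ValueError:
            continue  # drop rows that fail validation
        city = rec["city"].strip().title()
        total, count = totals.get(city, (0.0, 0))
        totals[city] = (total + temp, count + 1)
    return [(city, total / count) for city, (total, count) in sorted(totals.items())]

def load(rows: list[tuple[str, float]], conn: sqlite3.Connection) -> None:
    """Load: write the transformed rows into a warehouse table."""
    conn.execute("CREATE TABLE IF NOT EXISTS avg_temp (city TEXT PRIMARY KEY, avg_c REAL)")
    conn.executemany("INSERT OR REPLACE INTO avg_temp VALUES (?, ?)", rows)
    conn.commit()

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(transform(extract()), conn)
    print(conn.execute("SELECT * FROM avg_temp ORDER BY city").fetchall())
```

In an ELT setup, you would load the raw records first and express the transformation as SQL that runs inside the warehouse.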

5. Data Pipelines

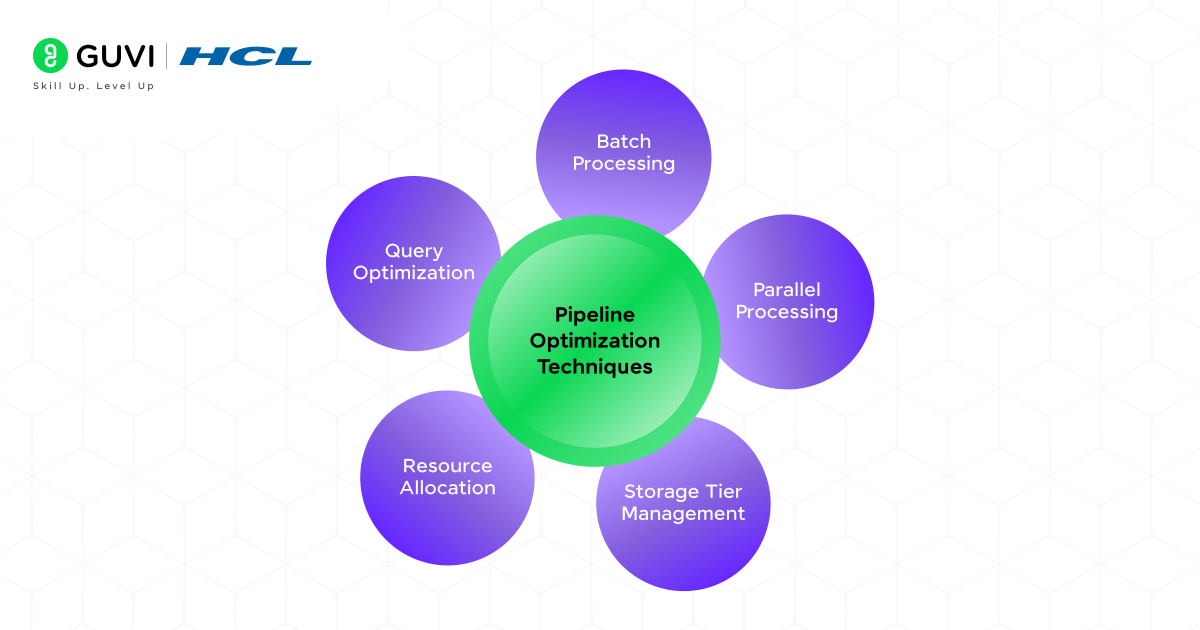

The next important skill is data pipelines. Nearly all data engineering work revolves around creating and managing pipelines. A data pipeline is a system that moves data from various sources, transforms it, and stores it for analysis. It runs the ETL process while ensuring scalability and reliability. Let’s look at some optimization techniques for keeping data operations running smoothly.

Pipeline Optimization Strategies

Pipeline optimization techniques are strategies used to improve the performance, efficiency, and reliability of data pipelines. Some of the strategies are:

- Batch Processing: Records are grouped into batches and each batch is processed as a unit, instead of handling records one at a time. This reduces per-record overhead, for example one database write per batch instead of one per row.

- Parallel Processing: Large data tasks are split into smaller, manageable chunks that are processed at the same time. This can cut processing time significantly and makes the pipeline more efficient.

- Storage Tier Management: Data lifecycle policies automatically move data between storage tiers based on access patterns. Frequently accessed data stays on fast, high-performance storage, while rarely used data moves to cheaper storage.

- Resource Allocation: Compute resources scale automatically with current demand, including scaling down or pausing during quiet periods, so you only pay for what you use.

- Query Optimization: Database queries run faster with proper indexing, table partitioning, and the right choice of join strategy, which speeds up data retrieval.
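Batch and parallel processing often work together: records are grouped into batches, and independent batches are handed to a pool of workers. Here is a minimal sketch with the standard library; `process_batch` is a hypothetical stand-in for real work. It uses `ThreadPoolExecutor` because typical batch steps (bulk database writes, API calls) are I/O-bound; for CPU-heavy transforms, `ProcessPoolExecutor` offers the same `map` interface.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Iterable, Iterator

def make_batches(records: Iterable[int], size: int) -> Iterator[list[int]]:
    """Batch processing: yield lists of up to `size` records at a time."""
    it = iter(records)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch

def process_batch(batch: list[int]) -> int:
    """Hypothetical per-batch work; in a real pipeline this might be one bulk
    database write or one API call per batch instead of one per record."""
    return sum(x * x for x in batch)

def run_pipeline(records: Iterable[int], batch_size: int = 1_000, workers: int = 4) -> int:
    """Parallel processing: hand independent batches to a pool of workers."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(process_batch, make_batches(records, batch_size)))

if __name__ == "__main__":
    print(run_pipeline(range(10_000)))
```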

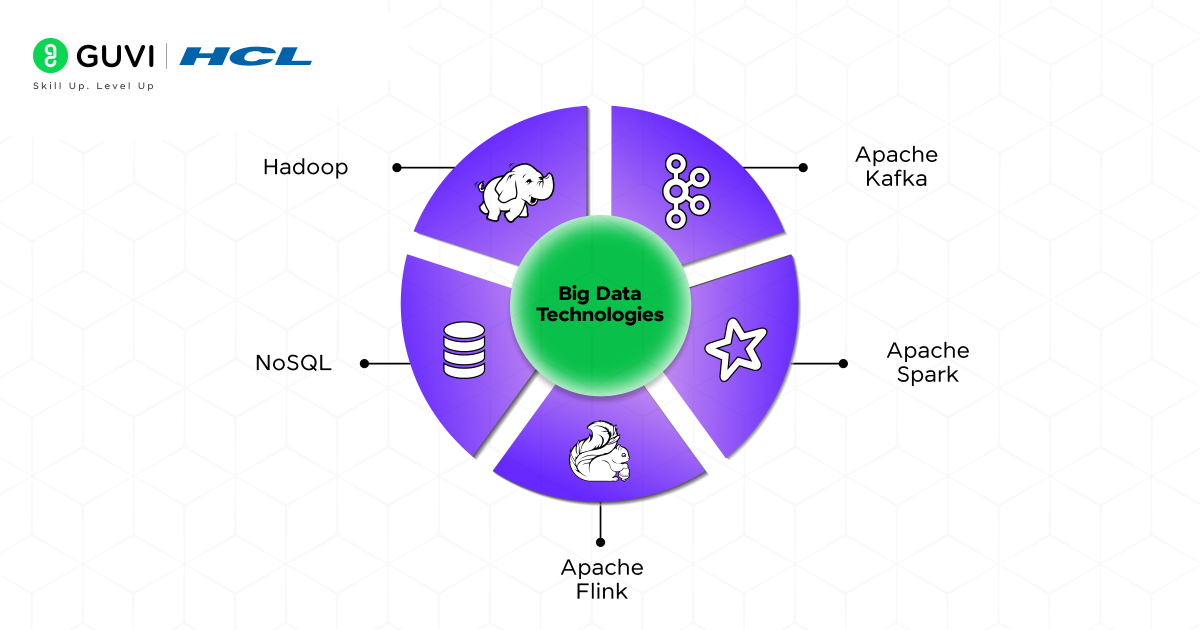

6. Big Data Technologies

The next skill to master is big data technologies. Big data refers to data sets so large and complex that they are difficult to store, process, and analyze with traditional tools. It may include structured, unstructured, and semi-structured data, and the technologies used to handle these huge volumes are known as big data technologies. Companies now generate more data than ever before, so engineers need to master big data processing tools to build scalable data solutions. These include the following:

a. Hadoop

Hadoop is an open source framework for the distributed storage and processing of large datasets across clusters of computers. It is used in many industries such as finance, marketing, and scientific research. Hadoop is important in data engineering because it scales horizontally, i.e., you add more servers to the cluster to handle more data, and it is one of the most widely used tools for big data storage and batch processing.
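Hadoop’s original processing engine, MapReduce, splits a job into map, shuffle, and reduce steps that run across the cluster. The single-process sketch below only simulates that programming model with the classic word-count example to show how data flows; it does not use Hadoop itself.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reducer: combine all values for one key."""
    return key, sum(values)

def word_count(lines):
    # On a real cluster, mappers and reducers run in parallel on many nodes.
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())

if __name__ == "__main__":
    docs = ["big data needs big clusters", "Data engineers build pipelines"]
    print(word_count(docs))
```

Because each mapper only sees its own lines and each reducer only sees one key’s values, the work can be spread across as many machines as the data requires.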

b. Apache Kafka

Apache Kafka is an open source event streaming platform built for high-throughput, low-latency data streaming. It is used to build real-time data pipelines and streaming applications, enabling companies to process and analyze live data as it arrives.
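Kafka’s core abstraction is the topic: an append-only log of events that producers write to and consumers read from by position (offset). The toy in-memory classes below are hypothetical and only illustrate that idea; a real application would use a Kafka client library against a running broker, and real topics are also split into partitions.

```python
from dataclasses import dataclass, field

@dataclass
class ToyTopic:
    """An append-only log, loosely modeled on a single Kafka topic partition."""
    records: list = field(default_factory=list)

    def produce(self, event) -> int:
        self.records.append(event)
        return len(self.records) - 1  # offset of the newly written record

@dataclass
class ToyConsumer:
    """Each consumer tracks its own offset, so it can read at its own pace."""
    topic: ToyTopic
    offset: int = 0

    def poll(self, max_records: int = 10) -> list:
        batch = self.topic.records[self.offset:self.offset + max_records]
        self.offset += len(batch)
        return batch

if __name__ == "__main__":
    clicks = ToyTopic()
    for page in ["/home", "/pricing", "/signup"]:
        clicks.produce({"page": page})
    analytics = ToyConsumer(clicks)
    alerts = ToyConsumer(clicks)
    print(analytics.poll(max_records=2))  # first two events
    print(alerts.poll())                  # all three events, independently
    print(analytics.poll())               # the remaining event
```

Because events stay in the log after being read, several independent consumers (analytics, alerting, archiving) can process the same stream without interfering with one another.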

c. Apache Spark

Apache Spark is a fast, unified analytics engine for large-scale data processing. It is an open source, distributed processing system for big data workloads. Because it processes data in memory, it can be much faster than disk-based MapReduce, and its Structured Streaming module makes it a good choice for near-real-time analysis.

d. Apache Flink

Apache Flink is an open source platform for processing both data streams and batches. It is a great choice for real-time stream processing, large-scale analytics, and event-driven applications such as fraud detection, anomaly detection, and real-time dashboards.
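A common building block in stream processors like Flink is the tumbling window: events are grouped into fixed, non-overlapping time buckets and aggregated per bucket. The plain-Python sketch below shows the idea on a finite list of hypothetical card transactions; it is not Flink’s API, and the threshold of 3 transactions per minute is an arbitrary assumption.

```python
from collections import Counter

WINDOW_SECONDS = 60  # hypothetical one-minute tumbling windows

def tumbling_window_counts(events, window=WINDOW_SECONDS):
    """Count events per (window start, key) using fixed, non-overlapping windows.

    `events` is an iterable of (timestamp_seconds, key) pairs.
    """
    counts = Counter()
    for ts, key in events:
        window_start = ts - (ts % window)  # align the timestamp to its window
        counts[(window_start, key)] += 1
    return dict(counts)

if __name__ == "__main__":
    # Hypothetical card transactions: (epoch seconds, card id)
    stream = [(0, "card-1"), (15, "card-1"), (42, "card-1"), (61, "card-1"), (70, "card-2")]
    for (start, card), n in sorted(tumbling_window_counts(stream).items()):
        flag = "  <- possible fraud" if n >= 3 else ""
        print(f"[{start}, {start + WINDOW_SECONDS}) {card}: {n}{flag}")
```

A real stream engine does this continuously over unbounded input and also deals with events that arrive late or out of order, which Flink handles with watermarks.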

e. NoSQL

NoSQL refers to non-relational databases designed to handle large volumes of data, including unstructured and semi-structured data. They are well suited to big data because most are built to scale horizontally across many servers more easily than traditional relational databases.

7. Machine Learning

With the rapid rise of generative AI models in recent years, there has been a sudden spike in integrating machine learning and AI technologies into various fields. Data engineering is one of them: many modern data engineering workflows now use machine learning methodologies.

As a data engineer, you should have a decent understanding of basic ML concepts such as supervised learning, unsupervised learning, reinforcement learning and feature engineering. You don’t need to be an expert in machine learning, but you should understand the concepts that help in designing efficient data pipelines.

Once you understand the basic concepts, focus on learning the ML technologies used to integrate models with data pipelines, deploy models on cloud platforms, and monitor deployed models.
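Feature engineering is often where data engineering and ML meet: pipelines turn raw columns into model-ready features. Here is a minimal sketch of two common transformations, min-max scaling and one-hot encoding, in plain Python. Libraries such as scikit-learn provide production versions of both (`MinMaxScaler` and `OneHotEncoder`); the sample values are hypothetical.

```python
def min_max_scale(values):
    """Rescale numbers to the [0, 1] range; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]

def one_hot(values):
    """Turn a categorical column into one binary column per category."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows

if __name__ == "__main__":
    order_amounts = [100.0, 250.0, 400.0]
    payment_methods = ["upi", "card", "upi"]
    print(min_max_scale(order_amounts))  # [0.0, 0.5, 1.0]
    print(one_hot(payment_methods))
```

In a production pipeline, the scaling bounds and category list would be computed once on training data and stored, so that new data is transformed exactly the same way.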

8. Cloud Computing

The next most popular skill is cloud computing. Data engineers who know cloud computing are in high demand. Companies are moving their data workloads to the cloud, and they need experts who can build scalable, cost-effective data pipelines. Let’s look at some popular cloud platforms for data engineers.

a. AWS

Amazon Web Services (AWS) is the most widely used cloud platform, offering a comprehensive suite of tools for data engineering. Key AWS services include Amazon S3 (for scalable object storage), AWS Lambda (for serverless computing), Amazon RDS and DynamoDB (for relational and NoSQL databases), and AWS Glue (for ETL operations).

Data engineers should also be familiar with Amazon Redshift, a cloud data warehouse that supports large-scale analytics. Understanding AWS IAM (Identity and Access Management) is crucial for securing data access and managing permissions.
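IAM permissions are written as JSON policy documents. Here is a sketch that builds a least-privilege, read-only policy for a single S3 bucket as a Python dict and prints the JSON you could attach to a role. The bucket name is hypothetical, and you should check any real policy against the AWS IAM documentation before using it.

```python
import json

BUCKET = "example-analytics-raw"  # hypothetical bucket name

def read_only_bucket_policy(bucket: str) -> dict:
    """Build an IAM policy granting read-only access to one S3 bucket."""
    return {
        "Version": "2012-10-17",  # the current IAM policy language version
        "Statement": [
            {
                # Listing applies to the bucket itself...
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # ...while reading applies to the objects inside it.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }

if __name__ == "__main__":
    print(json.dumps(read_only_bucket_policy(BUCKET), indent=2))
```

Granting only the actions and resources a pipeline actually needs limits the damage if its credentials are ever leaked.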

b. Azure

Another popular cloud platform is Microsoft Azure, which is widely used in enterprise environments. It provides Azure Data Lake Storage for big data workloads, Azure Synapse Analytics (formerly Azure SQL Data Warehouse) for large-scale data processing, and Azure Data Factory for orchestrating ETL workflows.

Azure also integrates well with Power BI, making it a strong choice for organizations leveraging Microsoft’s ecosystem. Data engineers should also understand Azure Functions for serverless execution and Azure Kubernetes Service (AKS) for containerized data applications.

9. DevOps

The last of the nine technical skills a data engineer should learn is DevOps (Development and Operations). Understanding DevOps is crucial for automating the pipelines that collect, store, and manage data. As organizations grow their data infrastructure, these practices are what keep their systems efficient and reliable.

CI/CD (Continuous Integration/Continuous Deployment) is a set of practices that automate how software is built, tested, and deployed. With Continuous Integration (CI), data engineers merge code into a shared repository frequently, and automated tests run on every change to catch errors early and prevent breaking changes. Continuous Deployment (CD) then automatically releases changes that pass those tests. Mastering tools such as Jenkins will help you automate these pipelines.
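Here is a sketch of the kind of automated test a CI server runs on every commit, written with Python’s built-in `unittest` module. The `normalize_country` transform is hypothetical, and the Jenkins job that would run it is not shown.

```python
import unittest

def normalize_country(code: str) -> str:
    """Hypothetical transform step: trim and upper-case a two-letter country code."""
    cleaned = code.strip().upper()
    if len(cleaned) != 2 or not cleaned.isalpha():
        raise ValueError(f"invalid country code: {code!r}")
    return cleaned

class NormalizeCountryTest(unittest.TestCase):
    def test_cleans_whitespace_and_case(self):
        self.assertEqual(normalize_country(" in "), "IN")

    def test_rejects_bad_codes(self):
        with self.assertRaises(ValueError):
            normalize_country("India")

# A CI job could run these tests with `python -m unittest` on every commit
# and block the merge if any of them fail.
```

Catching a broken transform in CI is far cheaper than discovering bad data downstream in a dashboard or model.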

If you’re looking to build a strong career in data engineering, GUVI’s Big Data Engineering Course is an excellent choice. This industry-aligned program covers essential skills like Big Data processing, ETL pipelines, Hadoop, Spark, and Cloud technologies, helping you master real-world data workflows.

Non-technical Skills For a Data Engineer [Bonus]

Non-technical skills are as important as technical skills. Also known as soft skills, they act as a bridge between technical and non-technical people, and, like technical skills, they can be studied and learned. Communication, problem solving, and collaboration are essential for effective teamwork and project execution. These skills help data engineers navigate challenges, work with teams, and complete projects on time and to client expectations. In this section, we will look at each of these skills individually.

1. Problem Solving Skills

The top non-technical skill that a data engineer should master is problem solving. The ability to break complex problems down into manageable parts is at the heart of development. This involves a systematic, logical approach, known as analytical thinking, as well as a creative mindset for finding innovative solutions to unique problems. It also includes effective debugging techniques to quickly identify and resolve issues in code.

2. Communication Skills

The next skill is communication skills. Clear communication is essential when working in teams and with non-technical stakeholders. This involves technical writing, where you document data pipelines, processes, and system architectures, making it easier for others to understand and maintain.

Presentation skills are important for explaining complex data concepts, such as database designs or data processing workflows, to non-technical audiences. Active listening, in turn, helps you understand stakeholders’ requirements, so you can build data solutions that meet their needs and incorporate valuable feedback.

3. Collaboration Skills

The last non-technical skill is collaboration. Collaboration skills are vital for a data engineer, as much of the work involves working closely with cross-functional teams. This includes effective communication, where you share complex data concepts and findings with both technical and non-technical stakeholders.

Teamwork is a big part of being a data engineer! You’ll often find yourself working alongside data scientists, analysts, and software engineers to design, build, and maintain data systems. It’s not just about writing code; it’s about collaborating, sharing your expertise, and learning from others.

Conclusion

And that’s what it takes to become a data engineer! By mastering the right skills, from programming and database management to big data technologies and collaboration, you’ll be well on your way to building a successful career in data engineering.

FAQs

Q1. How much does a data engineer earn in India?

On average, a data engineer in India can expect to earn around ₹6,00,000 to ₹15,00,000 per year. If you’re just starting out, expect the lower end; as you gain experience and skills, the number goes up! In big cities like Bangalore, Hyderabad, or Mumbai, you might see salaries closer to the higher range, especially if you work for tech giants or startups.

Q2. Is a degree in computer science necessary to become a data engineer?

A degree in computer science can help, but it’s not a strict requirement. Many data engineers have degrees in fields like information technology, mathematics, or even physics. What really matters is your ability to learn and apply the skills needed for the role – things like programming, databases, and data pipeline management. If you don’t have a CS degree, don’t worry! You can still make it by taking the right courses and building a strong portfolio of projects.

Q3. What are the top programming languages I should learn as a data engineer?

If you’re looking to get into data engineering, you’ll want to get comfortable with a few key languages. First up: Python. It’s widely used for data processing and automation tasks, and its libraries like Pandas and NumPy are super popular. SQL is another must-have, especially for interacting with relational databases. And for more advanced tasks or performance-heavy projects, you’ll want to learn Scala. Together, these languages are great for building robust data pipelines.

Q4. Can I transition to a data engineering role from a different field?

Absolutely! Many data engineers come from diverse backgrounds – software development, data analysis, system administration, even non-technical fields. The key is to leverage the skills you already have and build up your data engineering knowledge.
