Must-Have Machine Learning Skills in 2025

Last updated: Feb 28, 2025

Machine Learning (ML) is evolving rapidly, and in 2025, staying relevant requires mastering both foundational and advanced skills. It’s not enough to build models—you must know how to optimize, deploy, and explain them.

Employers seek professionals who can handle big data, deep learning, and MLOps while ensuring AI is ethical and scalable. This guide covers the must-have Machine Learning skills for 2025, helping you stay ahead in the ever-changing AI landscape.

Table of contents

- What is Machine Learning?

- What Do Machine Learning Engineers Do?

- Key Responsibilities:

- Top Machine Learning Skills in 2025

- Proficiency in Programming

- Strong Foundation in Mathematics and Statistics

- Data Handling and Feature Engineering

- Machine Learning Algorithms

- Deep Learning and Neural Networks

- Natural Language Processing (NLP) and Large Language Models (LLMs)

- Takeaways…

- FAQs

- What skills are most important for machine learning?

- What are the 4 basics of machine learning?

- What is NLP in data science?

What is Machine Learning?

Machine learning (ML) is a branch of artificial intelligence (AI) that enables computers to learn patterns and make decisions from data without being explicitly programmed. Instead of following hard-coded rules, ML models generalize from past observations to make predictions, automate tasks, and extract insights from complex datasets.

You encounter ML daily—whether through personalized recommendations on streaming platforms, fraud detection in banking, or self-driving cars navigating traffic. The power of ML lies in its ability to improve over time, adapting to new data and refining its predictions through experience.

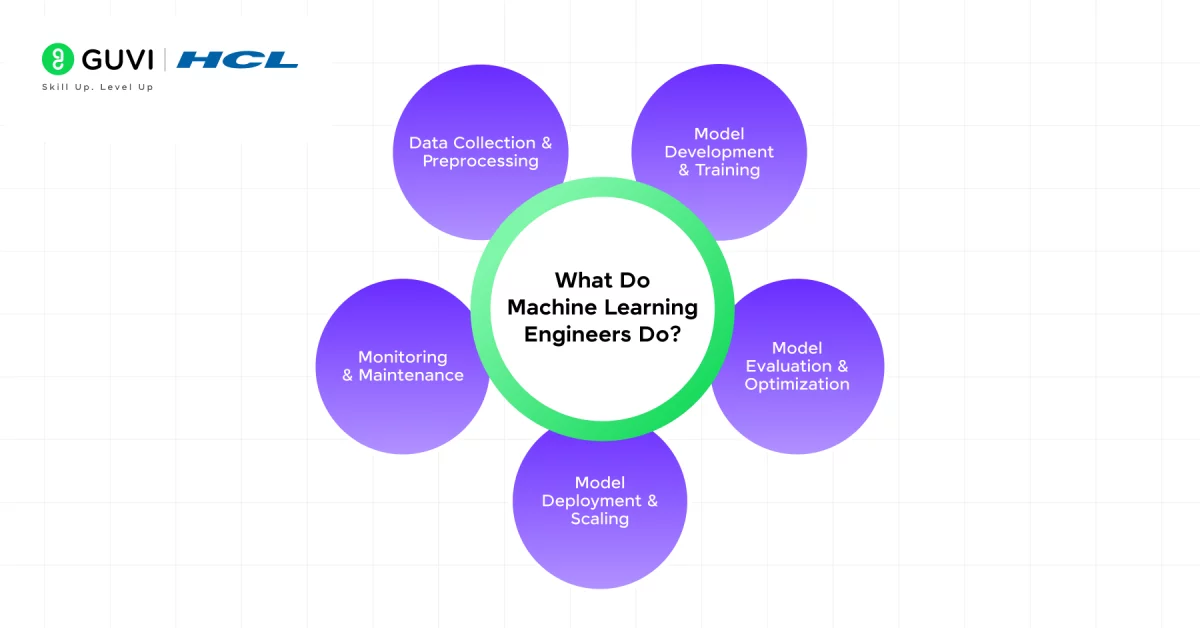

What Do Machine Learning Engineers Do?

As a Machine Learning Engineer, you develop, deploy, and optimize AI models to solve real-world problems. Your role involves working with data, designing algorithms, and ensuring models run efficiently in production.

Key Responsibilities:

- Data Collection & Preprocessing: You gather, clean, and transform raw data into structured formats, ensuring it is suitable for training machine learning models.

- Model Development & Training: You design, experiment, and fine-tune ML models using algorithms ranging from traditional techniques (Decision Trees, SVMs) to deep learning (CNNs, Transformers).

- Model Evaluation & Optimization: Using metrics like accuracy, precision, and recall, you assess model performance, conduct cross-validation, and tweak hyperparameters to improve results.

- Model Deployment & Scaling: You integrate models into production systems using APIs, cloud platforms (AWS, GCP), and containerization (Docker, Kubernetes) to ensure seamless real-world application.

- Monitoring & Maintenance: After deployment, you track model drift, retrain models as needed, and implement MLOps practices to maintain long-term efficiency.

Machine Learning Engineers play a vital role in shaping AI-driven solutions, ensuring they are not only powerful but also reliable, ethical, and scalable. The sections below cover the skills you need to become one.

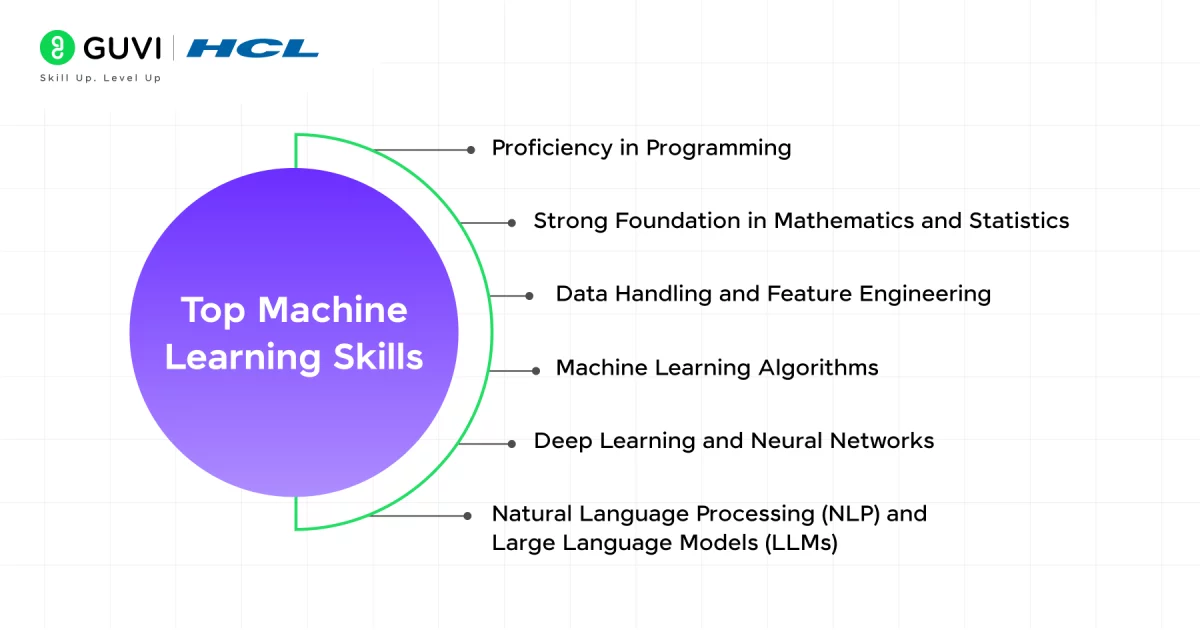

Top Machine Learning Skills in 2025

The field of machine learning (ML) is evolving rapidly, with advancements in artificial intelligence, automation, and big data transforming industries. As an aspiring or experienced ML professional in 2025, you must continuously update your skill set to stay relevant and competitive. Below are the must-have machine learning skills that will set you apart in the AI-driven world.

1. Proficiency in Programming

Machine learning (ML) is not just about understanding theories and algorithms—you must be able to implement them efficiently in code. Strong programming skills allow you to build, optimize, and deploy ML models at scale.

In 2025, companies expect ML professionals to not only develop accurate models but also integrate them into real-world applications. Therefore, mastering key programming languages, understanding software development best practices, and working with deployment tools will set you apart as a machine learning expert.

Key Programming Languages for Machine Learning

To excel in ML, you need proficiency in multiple programming languages, each serving a specific purpose in the ML ecosystem.

1) Python

Python is the most widely used language in ML due to its simplicity, readability, and vast ecosystem of libraries. Libraries like TensorFlow, PyTorch, scikit-learn, NumPy, and Pandas allow you to implement complex ML and deep learning models with ease. You should also be comfortable using Jupyter Notebooks and Python-based ML pipelines for efficient experimentation.

2) R

If you work in academia, research, or data science-heavy fields like bioinformatics and finance, R is a valuable language. It provides powerful statistical analysis and visualization libraries such as ggplot2, dplyr, and caret, making it ideal for exploratory data analysis and building statistical models.

3) SQL and Database Management

Machine learning relies heavily on structured and unstructured data. SQL (Structured Query Language) is essential for querying, retrieving, and manipulating large datasets stored in relational databases like PostgreSQL and MySQL. Additionally, experience with NoSQL databases (e.g., MongoDB, Cassandra) helps when dealing with unstructured data like text and images.
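As a small illustration of the kind of SQL querying described above, the sketch below uses Python's built-in sqlite3 module with an in-memory database. The `transactions` table and its columns are made up for the example; a real project would connect to PostgreSQL or MySQL through its own driver.

```python
import sqlite3

# In-memory database; the table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (user_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?)",
    [(1, 20.0), (1, 35.0), (2, 5.0), (2, 10.0), (3, 100.0)],
)

# Aggregate per user: a typical query for building model features.
rows = conn.execute(
    """
    SELECT user_id, COUNT(*) AS n_tx, AVG(amount) AS avg_amount
    FROM transactions
    GROUP BY user_id
    ORDER BY user_id
    """
).fetchall()
conn.close()

print(rows)  # [(1, 2, 27.5), (2, 2, 7.5), (3, 1, 100.0)]
```

Pushing the aggregation into the database, rather than loading every row into Python first, is usually the cheaper choice on large tables.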

2. Strong Foundation in Mathematics and Statistics

A solid grasp of mathematics and statistics is essential for excelling in machine learning. While many modern libraries abstract away the underlying math, understanding the core principles enables you to design, debug, and optimize models effectively. Without this foundation, you may struggle to interpret results, choose the right algorithms, or fine-tune hyperparameters efficiently.

Mathematics in ML is not just about memorizing formulas—it’s about developing an intuition for how models learn from data. From defining objective functions to optimizing weights in deep learning, mathematical concepts are embedded in every stage of an ML pipeline. Below are the key areas of mathematics and statistics that you must master to become a proficient ML practitioner in 2025.

1) Linear Algebra

Linear algebra is the backbone of machine learning, particularly in deep learning, where data is represented as tensors and matrices.

- Vectors and Matrices: You must understand how data is stored and manipulated using vectors (one-dimensional arrays) and matrices (two-dimensional arrays). Operations like matrix multiplication, transposition, and inversion are fundamental to ML algorithms.

- Eigenvalues and Eigenvectors: These concepts help in dimensionality reduction techniques like Principal Component Analysis (PCA), which is used for feature extraction and compression.

Mastering linear algebra allows you to grasp how ML models represent and transform data internally, making you more effective in implementing and debugging complex algorithms.
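To make the link between eigenvectors and PCA concrete, here is a minimal NumPy sketch that performs PCA by hand on synthetic data. The data, the seed, and the choice of two components are assumptions made for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy data: 200 samples, 3 features; the second feature is strongly tied to the first.
X = rng.normal(size=(200, 3))
X[:, 1] = 2.0 * X[:, 0] + 0.1 * X[:, 1]

# Center the data and form the covariance matrix (a matrix product).
Xc = X - X.mean(axis=0)
cov = (Xc.T @ Xc) / (Xc.shape[0] - 1)

# eigh handles symmetric matrices and returns eigenvalues in ascending order.
eigvals, eigvecs = np.linalg.eigh(cov)
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Project onto the two directions with the largest variance.
Z = Xc @ eigvecs[:, :2]
explained = eigvals / eigvals.sum()
print(Z.shape, explained)
```

Each eigenvector is a direction in feature space, and its eigenvalue is the variance of the data along that direction, which is why sorting by eigenvalue ranks the components.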

2) Probability and Statistics

Machine learning deals with uncertainty, making probability and statistics crucial for developing reliable models.

- Probability Distributions: Many ML algorithms assume data follows certain distributions (e.g., Gaussian/Normal, Poisson, Exponential). Understanding these distributions helps in making probabilistic predictions and handling noisy data.

- Bayes’ Theorem: This is the foundation of Bayesian machine learning, which updates the probability of a hypothesis as more evidence becomes available. It is widely used in spam filtering, medical diagnosis, and recommendation systems.

A strong foundation in probability helps you handle missing data, estimate uncertainty in predictions, and build probabilistic models for real-world applications.
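A short worked example of Bayes' theorem in a spam-filtering setting may help. The probabilities below are invented for illustration: 20% of mail is spam, and a given word appears in 60% of spam messages but only 5% of legitimate ones.

```python
def posterior(prior, likelihood, likelihood_given_not):
    """P(H | E) via Bayes' theorem for a binary hypothesis H."""
    evidence = likelihood * prior + likelihood_given_not * (1.0 - prior)
    return likelihood * prior / evidence

# Hypothetical numbers, chosen only to make the arithmetic easy to follow.
p_spam = 0.2
p_word_given_spam = 0.6
p_word_given_ham = 0.05

p_spam_given_word = posterior(p_spam, p_word_given_spam, p_word_given_ham)
print(round(p_spam_given_word, 3))  # 0.75
```

Seeing the word raises the spam probability from 0.20 to 0.75, because 0.12 / (0.12 + 0.04) = 0.75.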

3. Data Handling and Feature Engineering

Data is the backbone of every machine learning (ML) model. No matter how advanced your algorithm is, the quality of your input data determines the model’s success. You must master data handling techniques and feature engineering to optimize model performance and improve predictions.

1) Data Collection and Preprocessing

Before you can build an ML model, you need to gather and preprocess data from various sources. Raw data is often incomplete, inconsistent, and noisy, making data collection and preprocessing a crucial step in any ML pipeline.

Data Sources:

In real-world ML applications, data comes from multiple sources such as databases, APIs, web scraping, IoT sensors, and logs. You must know how to extract and integrate data from these different sources efficiently.

- Structured Data: Found in databases, spreadsheets, and data warehouses. It is organized into tables with predefined relationships. SQL is commonly used to query and manage structured data.

- Unstructured Data: Includes text, images, videos, and audio files. You need specialized tools like NLP techniques for text processing or OpenCV for image analysis.

- Streaming Data: Generated in real-time by sensors, financial transactions, and social media. Apache Kafka and Spark Streaming help manage continuous data streams.

Data Cleaning:

Real-world data is often messy, containing missing values, duplicate entries, and inconsistencies. Data cleaning ensures that your dataset is reliable and free from errors.

- Handling Missing Values: Use imputation techniques like mean/median substitution, forward/backward filling, or predictive modeling to estimate missing values.

- Removing Duplicates: Duplicate data skews results and creates bias. Deduplication techniques involve unique key constraints or fuzzy matching to identify and remove duplicates.

- Outlier Detection: Outliers can distort model predictions. You can use statistical methods (e.g., Z-score, IQR) or ML techniques (e.g., Isolation Forest, DBSCAN) to detect and handle them.
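The three cleaning steps above can be sketched with pandas as follows. The tiny DataFrame and its column names are hypothetical, and median imputation and the 1.5 × IQR rule are just one reasonable choice among those listed.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data with a missing value, a duplicate row, and an extreme income.
df = pd.DataFrame({
    "age": [25.0, 32.0, np.nan, 41.0, 32.0, 29.0],
    "income": [40_000.0, 52_000.0, 48_000.0, 1_000_000.0, 52_000.0, 45_000.0],
})

# 1) Remove exact duplicate rows.
df = df.drop_duplicates().reset_index(drop=True)

# 2) Impute missing ages with the median of the observed ages.
df["age"] = df["age"].fillna(df["age"].median())

# 3) Flag income outliers with the IQR rule.
q1, q3 = df["income"].quantile([0.25, 0.75])
iqr = q3 - q1
df["income_outlier"] = (df["income"] < q1 - 1.5 * iqr) | (df["income"] > q3 + 1.5 * iqr)

print(df)
```

Flagging outliers in a separate column, instead of dropping them immediately, keeps the decision about how to handle them reversible.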

Data Transformation:

Once data is cleaned, you need to transform it into a format that your ML model can interpret.

- Normalization and Standardization: Scaling puts features on comparable ranges so that variables with large magnitudes do not dominate distance-based or gradient-based models. Min-Max scaling (0-1 range) and Z-score standardization (zero mean, unit variance) are commonly used.

- Data Augmentation: In fields like computer vision and NLP, artificially increasing the dataset by adding variations (e.g., rotating images, generating synonyms) improves model generalization.
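Both scaling methods reduce to one line of arithmetic per column, as the NumPy sketch below shows on a made-up 4 × 2 matrix. In a real pipeline the minimum, maximum, mean, and standard deviation would normally be computed on the training split only and then reused on validation and test data.

```python
import numpy as np

# Two hypothetical features on very different scales.
X = np.array([[1.0, 200.0], [2.0, 300.0], [3.0, 400.0], [4.0, 500.0]])

# Min-max scaling: map each column to the [0, 1] range.
x_min, x_max = X.min(axis=0), X.max(axis=0)
X_minmax = (X - x_min) / (x_max - x_min)

# Z-score standardization: zero mean and unit variance per column.
mu, sigma = X.mean(axis=0), X.std(axis=0)
X_std = (X - mu) / sigma

print(X_minmax)
print(X_std)
```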

2) Feature Engineering

Feature engineering is the process of selecting, transforming, and creating new features to improve model performance. Well-engineered features help models learn faster and generalize better.

Feature Selection:

Selecting the most relevant features reduces complexity and enhances model accuracy. Feature selection techniques help eliminate redundant or irrelevant features.

- Filter Methods: Use statistical measures like correlation, mutual information, or Chi-square tests to rank feature importance.

- Wrapper Methods: Utilize ML models (e.g., Recursive Feature Elimination) to iteratively test different subsets of features.
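As an example of a wrapper method, the sketch below runs scikit-learn's Recursive Feature Elimination on a synthetic dataset. The dataset, the logistic regression estimator, and the choice to keep three features are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression

# Synthetic data: 10 features, of which 3 carry signal.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=3, n_redundant=0, random_state=0
)

# RFE repeatedly fits the model and drops the weakest feature until 3 remain.
selector = RFE(LogisticRegression(max_iter=1000), n_features_to_select=3)
selector.fit(X, y)

selected = [i for i, keep in enumerate(selector.support_) if keep]
print(selected)
```

Because a wrapper method refits the model many times, it is more expensive than a filter method, but it accounts for how features behave together inside the chosen model.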

Feature Extraction:

Feature extraction reduces dimensionality while retaining important information. This is particularly useful when working with high-dimensional data.

- Principal Component Analysis (PCA): A linear transformation technique that reduces the feature space while preserving as much variance as possible. It helps speed up computations and can reduce the risk of overfitting.

3) Big Data Processing and Scalable Pipelines

As datasets grow, traditional data processing tools become inefficient. You need to work with big data frameworks that can handle large-scale, distributed computing.

Big Data Frameworks:

Managing large datasets requires tools that can efficiently store and process vast amounts of information.

- Apache Spark: A distributed computing framework optimized for big data analytics and ML workloads. It supports parallelized data processing, making it suitable for large datasets.

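- Hadoop: An open-source framework for distributed storage and processing. Its distributed file system (HDFS) provides scalable data storage, and MapReduce jobs scheduled by YARN process the data in parallel. It is widely used for big data analytics.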

ETL (Extract, Transform, Load) Pipelines:

Building end-to-end ML pipelines involves automating the process of extracting, transforming, and loading data (ETL).

- Airflow: Used for scheduling and automating workflows to handle data pipelines efficiently.

- Kubeflow and MLflow: Help streamline ML workflows by integrating data preprocessing, feature engineering, and model training into reproducible pipelines.

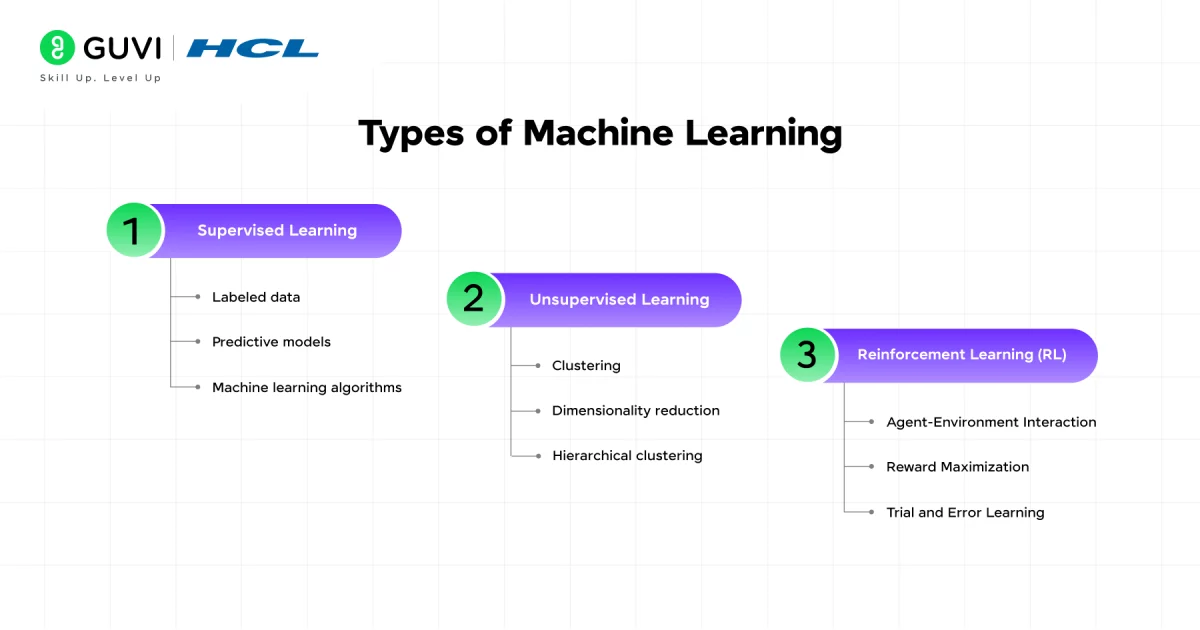

4. Machine Learning Algorithms

To become an expert in machine learning, you must develop a deep understanding of the algorithms that drive predictive modeling, pattern recognition, and intelligent decision-making. Knowing how these algorithms work, their strengths and weaknesses, and when to use them will enable you to build robust AI systems.

1) Supervised Learning: Learning from Labeled Data

Supervised learning is one of the most widely used ML techniques, where models learn from labeled datasets to make predictions or classifications. You must master key algorithms to handle both regression and classification problems effectively.

Regression Algorithms (For Predicting Continuous Values):

Regression models are used when the target variable is continuous, such as predicting house prices, stock values, or temperature changes.

- Linear Regression: This is the simplest regression algorithm, modeling relationships between input features and a continuous target variable using a straight-line equation. While easy to interpret, it struggles with complex, non-linear data.

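- Ridge and Lasso Regression: These regularized versions of linear regression help prevent overfitting by penalizing large coefficients. Ridge uses an L2 penalty that shrinks coefficients toward zero, while Lasso uses an L1 penalty that can set some coefficients exactly to zero, which also acts as feature selection.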

- Support Vector Regression (SVR): SVR extends support vector machines (SVMs) to regression tasks, effectively handling non-linear relationships with kernel functions.
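The effect of regularization is easy to see on synthetic data. In the sketch below, only the first two of five features influence the target; the `alpha` values are arbitrary picks for illustration, not tuned recommendations.

```python
import numpy as np
from sklearn.linear_model import Lasso, LinearRegression, Ridge

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
# Only the first two features matter in this synthetic target.
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.5, size=100)

ols = LinearRegression().fit(X, y)
ridge = Ridge(alpha=10.0).fit(X, y)   # L2 penalty
lasso = Lasso(alpha=0.5).fit(X, y)    # L1 penalty

print("OLS:  ", np.round(ols.coef_, 2))
print("Ridge:", np.round(ridge.coef_, 2))
print("Lasso:", np.round(lasso.coef_, 2))
```

Ridge shrinks all coefficients a little, while Lasso tends to zero out the coefficients of the irrelevant features. In practice `alpha` is chosen by cross-validation.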

Classification Algorithms (For Predicting Categorical Labels):

Classification models assign input data to predefined categories, such as spam detection, fraud detection, or medical diagnosis.

- Decision Trees: These models split data into hierarchical branches based on feature values, making them easy to interpret but prone to overfitting.

- Random Forests: By combining multiple decision trees, this ensemble method improves accuracy and reduces overfitting, making it a powerful choice for classification problems.

- Support Vector Machines (SVM): SVM finds the decision boundary (hyperplane) that maximizes the margin between classes, making it useful for high-dimensional data.

- Gradient Boosting Algorithms (XGBoost, LightGBM, CatBoost): These boosting libraries build an ensemble of weak learners (usually shallow decision trees) sequentially, with each new learner correcting the errors of the previous ones. They are frequent top performers on tabular data, including in many Kaggle competitions.

Supervised learning is crucial for many business applications, and understanding how to fine-tune hyperparameters and handle imbalanced data will make you an expert in model development.

2) Unsupervised Learning

Unsupervised learning deals with unlabeled data, where the algorithm finds hidden structures and patterns without explicit guidance. This technique is widely used in clustering, anomaly detection, and data compression.

Clustering Algorithms (For Grouping Similar Data Points)

Clustering algorithms segment datasets into meaningful groups, which is useful for customer segmentation, genetic classification, and market research.

- K-Means Clustering: One of the most popular clustering methods, K-Means partitions data points into K clusters based on similarity. While fast and efficient, it struggles with non-spherical clusters.

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): Unlike K-Means, DBSCAN doesn’t require specifying the number of clusters. It excels at detecting anomalies and handling irregular cluster shapes.

- Hierarchical Clustering: This method creates a tree-like structure of clusters, making it useful for hierarchical relationships like taxonomies and genealogies.
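The contrast between K-Means and DBSCAN on non-spherical clusters can be sketched with scikit-learn's two-moons toy dataset. The `eps` and `min_samples` values are assumptions chosen to suit this particular dataset; they would need retuning on other data.

```python
from sklearn.cluster import DBSCAN, KMeans
from sklearn.datasets import make_moons
from sklearn.metrics import adjusted_rand_score

# Two interleaved half-circles: a classic non-spherical clustering problem.
X, y_true = make_moons(n_samples=300, noise=0.05, random_state=0)

# K-Means needs the number of clusters up front.
km_labels = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X)

# DBSCAN infers the number of clusters from density; label -1 marks noise points.
db_labels = DBSCAN(eps=0.2, min_samples=5).fit_predict(X)
n_db_clusters = len(set(db_labels) - {-1})

# Adjusted Rand index: 1.0 means perfect agreement with the true grouping.
ari_km = adjusted_rand_score(y_true, km_labels)
ari_db = adjusted_rand_score(y_true, db_labels)
print(n_db_clusters, round(ari_km, 2), round(ari_db, 2))
```

K-Means cuts the plane with a straight boundary and so splits each moon, whereas DBSCAN follows the dense curved regions.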

Dimensionality Reduction (For Feature Extraction and Noise Reduction)

Reducing the number of features while preserving important information improves model efficiency and reduces overfitting.

- Principal Component Analysis (PCA): PCA transforms high-dimensional data into a smaller number of principal components, retaining the most important variance in the dataset.

- t-SNE (t-Distributed Stochastic Neighbor Embedding): t-SNE is particularly useful for visualizing high-dimensional data by mapping similar data points closer together in 2D or 3D space.

- Autoencoders: These deep learning models learn compressed representations of input data, making them useful for anomaly detection and feature extraction.

Unsupervised learning is essential in cases where labeled data is scarce, allowing you to extract insights from raw, unstructured datasets.

3) Reinforcement Learning (RL): Learning Through Rewards and Penalties

Reinforcement learning is a technique where an agent learns a strategy by interacting with an environment and receiving rewards or penalties. It is applied in robotics, game playing, self-driving cars, and financial trading.

Key RL Concepts:

- Markov Decision Processes (MDP): RL problems are typically modeled as MDPs, where an agent takes actions in a state space and receives rewards based on those actions.

- Q-Learning: A model-free RL algorithm that learns the optimal action-selection policy using a Q-table, which estimates the expected discounted future reward of taking each action in each state.

- Deep Q Networks (DQN): By integrating deep learning with Q-learning, DQNs enable agents to handle complex environments with high-dimensional state spaces.

- Policy Gradient Methods: Instead of relying on Q-values, these methods directly optimize policy functions, making them suitable for continuous action spaces.
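A minimal tabular Q-learning sketch follows. The environment is a hypothetical five-state chain invented for this example, with a reward of 1 for reaching the last state. The agent explores with a uniformly random behaviour policy, which works here because Q-learning is off-policy; an epsilon-greedy policy is the more common choice in practice.

```python
import numpy as np

n_states, n_actions = 5, 2      # chain of 5 states; actions: 0 = left, 1 = right
goal = n_states - 1             # reaching the last state ends the episode
alpha, gamma = 0.5, 0.9         # learning rate and discount factor
rng = np.random.default_rng(0)
Q = np.zeros((n_states, n_actions))

def step(state, action):
    """Deterministic toy environment: reward 1 only when the goal is reached."""
    next_state = max(state - 1, 0) if action == 0 else min(state + 1, goal)
    reward = 1.0 if next_state == goal else 0.0
    return next_state, reward, next_state == goal

for episode in range(500):
    state = 0
    for t in range(100):  # cap on episode length
        action = int(rng.integers(n_actions))  # uniformly random exploration
        next_state, reward, done = step(state, action)
        # Q-learning update: bootstrap from the best action in the next state.
        target = reward + (0.0 if done else gamma * Q[next_state].max())
        Q[state, action] += alpha * (target - Q[state, action])
        state = next_state
        if done:
            break

greedy_policy = Q.argmax(axis=1)
print(greedy_policy[:goal])  # greedy action in each non-terminal state
```

A DQN replaces the table `Q` with a neural network that maps a state to one value per action, which is what makes large state spaces tractable.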

As RL continues to advance, mastering its algorithms will allow you to build intelligent systems capable of learning and adapting autonomously.


5. Deep Learning and Neural Networks

Deep learning has transformed machine learning by enabling models to learn complex patterns from massive amounts of data. In 2025, deep learning will continue to dominate fields such as natural language processing (NLP), computer vision, and generative AI. To stay ahead, you need to master neural networks, optimization techniques, and the latest frameworks.

1) Understanding Neural Networks

At the core of deep learning are artificial neural networks (ANNs), which are loosely inspired by the structure of the human brain. A neural network consists of multiple layers of neurons:

- Input Layer: Receives raw data (e.g., pixels from an image or words from a text).

- Hidden Layers: Perform computations using activation functions and weight adjustments to extract meaningful features.

- Output Layer: Produces the final prediction, such as classifying an image or translating a sentence.

The depth and complexity of these layers determine the model’s ability to learn abstract features. You must understand how neural networks process information and how different architectures serve different purposes.
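The three kinds of layers can be written out as a forward pass in a few lines of NumPy. The layer sizes and random weights below are placeholders; a real network would learn the weights through training.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    # Subtract the row-wise max for numerical stability.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

# Hypothetical sizes: 4 input features, 8 hidden units, 3 output classes.
W1, b1 = rng.normal(scale=0.1, size=(4, 8)), np.zeros(8)
W2, b2 = rng.normal(scale=0.1, size=(8, 3)), np.zeros(3)

def forward(X):
    hidden = relu(X @ W1 + b1)          # hidden layer: weights plus activation
    return softmax(hidden @ W2 + b2)    # output layer: class probabilities

X = rng.normal(size=(5, 4))             # a batch of 5 examples (input layer)
probs = forward(X)
print(probs.shape)  # (5, 3)
```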

2) Key Neural Network Architectures

Each neural network architecture is designed for specific types of data and tasks. As an ML professional, you need to be proficient in the following:

1. Feedforward Neural Networks (FNNs)

These are the simplest form of neural networks where information moves in one direction—from input to output. They are used for basic classification and regression tasks but are limited in handling sequential or spatial data.

2. Convolutional Neural Networks (CNNs)

CNNs are designed for image and video processing. They use convolutional layers to detect spatial features like edges, textures, and objects. Popular architectures include VGG, ResNet, and EfficientNet. If you work in computer vision, mastering CNNs is essential.

3. Recurrent Neural Networks (RNNs) and LSTMs/GRUs

RNNs are specialized for sequential data, such as time series forecasting and speech recognition. However, traditional RNNs suffer from the vanishing gradient problem, which Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks mitigate with gating mechanisms. These architectures allow models to retain longer-term dependencies in data.

4. Generative Adversarial Networks (GANs)

GANs consist of two networks, a generator and a discriminator, trained against each other: the generator tries to produce realistic data, such as deepfake images or AI-generated art, while the discriminator tries to tell generated samples from real ones. They are widely used in synthetic data generation and creative AI applications.

3) Optimization Techniques for Deep Learning

Training deep neural networks requires efficient optimization strategies to ensure faster convergence and better generalization.

1. Gradient Descent Variants

- Stochastic Gradient Descent (SGD): Updates weights using a single example or a small mini-batch at a time rather than the full dataset, which makes each update much cheaper to compute.

- Adam and RMSprop: Adaptive optimization algorithms that adjust learning rates dynamically to speed up convergence and improve stability.
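Here is a minimal sketch of mini-batch SGD, fitting a linear model to synthetic data with NumPy. The learning rate, batch size, and number of epochs are arbitrary illustrative values. Adaptive optimizers such as Adam follow the same loop but rescale each step using running statistics of past gradients.

```python
import numpy as np

rng = np.random.default_rng(0)
w_true = np.array([2.0, -1.0, 0.5])          # weights used to generate the data
X = rng.normal(size=(200, 3))
y = X @ w_true + rng.normal(scale=0.1, size=200)

w = np.zeros(3)
lr, batch_size = 0.05, 16

for epoch in range(50):
    order = rng.permutation(len(X))          # reshuffle the data each epoch
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        error = X[idx] @ w - y[idx]
        grad = 2.0 * X[idx].T @ error / len(idx)   # gradient of mean squared error
        w -= lr * grad                              # step against the gradient

print(np.round(w, 2))
```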

2. Regularization Techniques

- Dropout: Randomly deactivates neurons during training to prevent overfitting.

- L1/L2 Regularization: Penalizes large weights, enforcing simpler models that generalize better.
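Dropout is simple enough to write by hand. The sketch below uses the common "inverted dropout" formulation, which rescales the surviving activations during training so that nothing needs to change at inference time. The function name and signature are made up for this example.

```python
import numpy as np

def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p and rescale the rest."""
    if not training or p == 0.0:
        return activations
    keep_mask = rng.random(activations.shape) >= p
    return activations * keep_mask / (1.0 - p)

rng = np.random.default_rng(0)
h = np.ones((4, 6))                                   # pretend hidden activations
h_train = dropout(h, p=0.5, rng=rng)                  # entries are either 0.0 or 2.0
h_eval = dropout(h, p=0.5, rng=rng, training=False)   # unchanged at inference
print(h_train)
```

Dividing by `1 - p` keeps the expected value of each activation the same in training and inference.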

4) Deep Learning Frameworks and Tools

As a machine learning professional, you need hands-on experience with frameworks that enable efficient model development and deployment.

1. TensorFlow and PyTorch

- TensorFlow is widely used for production-ready AI applications, with robust deployment capabilities via TensorFlow Serving and TensorFlow Lite.

- PyTorch is popular for research and experimentation due to its dynamic computation graph and ease of debugging.

2. Keras

Keras is a high-level API that ships with TensorFlow as tf.keras and, since Keras 3, can also run on JAX and PyTorch backends. It simplifies deep learning model development, making it easier to prototype and test models quickly.

6. Natural Language Processing (NLP) and Large Language Models (LLMs)

With the rapid advancements in artificial intelligence, Natural Language Processing (NLP) has become one of the most critical domains in machine learning. As more businesses integrate AI-driven communication, NLP is powering chatbots, voice assistants, sentiment analysis, and automated content generation.

The rise of Large Language Models (LLMs) like GPT-4, BERT, T5, and LLaMA has completely transformed how machines understand and generate human language. Mastering NLP and LLMs will give you a competitive edge in the evolving AI landscape.

1) Understanding Core NLP Techniques

To build effective NLP models, you must first understand the fundamental text processing techniques that allow machines to interpret human language.

1. Tokenization

Tokenization is the process of breaking down text into individual words or subwords (tokens). This is essential for feeding text into ML models. Modern NLP techniques use Byte Pair Encoding (BPE) and WordPiece Tokenization to handle rare words and multilingual text effectively.
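To show the idea behind Byte Pair Encoding, here is a toy sketch of its training loop: start from characters and repeatedly merge the most frequent adjacent pair. The four-word corpus and its frequencies are invented, and production tokenizers add details this sketch omits, such as byte-level handling and word-boundary markers.

```python
from collections import Counter

def most_frequent_pair(words):
    """Count adjacent symbol pairs across the corpus, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return max(pairs, key=pairs.get)   # ties go to the pair seen first

def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged

# Toy corpus: word -> frequency, with each word split into characters.
corpus = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
words = {tuple(w): f for w, f in corpus.items()}

merges = []
for _ in range(3):
    pair = most_frequent_pair(words)
    merges.append(pair)
    words = merge_pair(words, pair)

print(merges)  # [('e', 's'), ('es', 't'), ('l', 'o')]
```

After three merges the frequent suffix "est" has become a single token, which is how subword vocabularies end up covering rare words through common pieces.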

2. Stemming and Lemmatization

These techniques reduce words to their base or root form.

- Stemming applies heuristic suffix-stripping rules (e.g., “running” → “run”) but can produce non-words; the Porter stemmer, for example, maps “studies” to “studi”.

- Lemmatization uses a vocabulary-based approach (e.g., “better” → “good”) to return meaningful root words, making it more accurate than stemming.

2) Must-Know Large Language Models (LLMs) and Transformer Architectures

The biggest revolution in NLP has come from Transformer-based models like GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers). These models have redefined AI capabilities in text generation, translation, and comprehension.

1. BERT (Bidirectional Encoder Representations from Transformers)

BERT processes words in both left and right contexts, making it highly effective for tasks like text classification, question answering, and search ranking. It is widely used in Google Search and enterprise AI solutions.

2. GPT (Generative Pre-trained Transformer) Models

GPT models generate human-like text by repeatedly predicting the next token from the tokens that came before. GPT-4 and its successors power AI-driven content creation, code generation, and conversational agents, and are often fine-tuned for applications like chatbots (ChatGPT), automated documentation, and digital assistants.

Takeaways…

To build a successful career in ML, you need more than just technical knowledge—you must be proficient in programming, data handling, model optimization, and explainability. As AI continues to transform industries, those who adapt, experiment, and refine their skills will stand out.

Keep learning, stay updated with the latest advancements, and future-proof your career in the AI-driven world. I hope this article has aided your ML journey. If you have any doubts, reach out to us through the comments section below and we’ll try our best to help you out.

FAQs

What skills are most important for machine learning?

The key skills for machine learning include programming (Python, R), mathematics (linear algebra, probability, statistics), data preprocessing, feature engineering, deep learning, model evaluation, and cloud computing.

What are the 4 basics of machine learning?

The four basics of machine learning are:

1) Data Collection & Preprocessing – Gathering and cleaning data.

2) Feature Engineering – Selecting and transforming relevant features.

3) Model Training – Applying algorithms to learn patterns.

4) Evaluation & Optimization – Assessing and improving model performance.

What is NLP in data science?

Natural Language Processing (NLP) is a branch of AI in data science that enables machines to understand, interpret, and generate human language. It is used in chatbots, sentiment analysis, and translation.
