Must-Have Data Science Skills in 2025

Last updated: Oct 22, 2025

In the modern data-driven economy, businesses rely heavily on extracting actionable insights from vast amounts of structured and unstructured data. This is where data science comes in. But what is data science exactly? It is an interdisciplinary field that combines statistics, programming, machine learning, and domain expertise to analyze, interpret, and model data to drive strategic decision-making.

A data scientist’s career is highly competitive, requiring a deep technical skillset combined with strong analytical thinking and business acumen. If you aspire to succeed in this field, you need to master several must-have data science skills to efficiently handle, process, and analyze data while communicating insights effectively.

This article dives deep into the most crucial data science skills, categorized into technical competencies and soft skills, ensuring you are well-equipped to thrive in this ever-evolving domain.

Table of contents

- What is Data Science?

- The Key Components of Data Science

- A. Core Technical Skills

- 1) Programming Proficiency

- 2) Statistical Analysis & Mathematics

- 3) Machine Learning & Deep Learning

- 4) Data Wrangling & Feature Engineering

- 5) Big Data Technologies

- 6) Cloud Computing & Model Deployment

- Essential Soft Skills for Data Scientists

- Communication & Data Storytelling

- Collaboration & Teamwork

- Critical Thinking & Problem-Solving

- Concluding Thoughts…

- FAQs

- What skills are needed in data science?

- Is SQL needed for data science?

- Is coding required for data science?

- Which language is best for data science?

What is Data Science?

Before diving into the essential data science skills, it’s crucial to understand what data science is and why it plays a pivotal role in modern industries. At its core, data science is an interdisciplinary field that combines statistics, machine learning, programming, and domain expertise to extract meaningful insights from data. You are not just dealing with raw numbers; you are uncovering patterns, making predictions, and enabling businesses to make data-driven decisions.

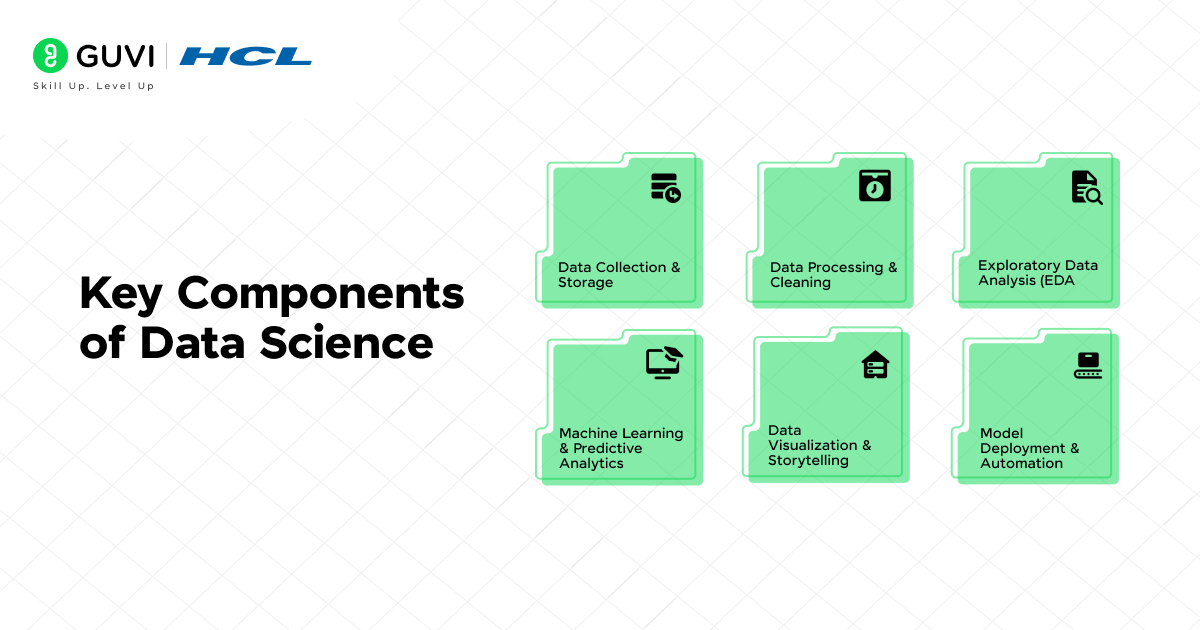

The Key Components of Data Science

To truly grasp what data science is, you need to understand its foundational elements:

- Data Collection & Storage: You work with vast amounts of structured and unstructured data from databases, APIs, IoT devices, and cloud storage. Managing and organizing this data is crucial for downstream analytics.

- Data Processing & Cleaning: Real-world data is messy. You spend a significant amount of time handling missing values, removing inconsistencies, and transforming raw data into a usable format.

- Exploratory Data Analysis (EDA): Before building any models, you need to understand the underlying structure of your dataset. Using statistical techniques and visualization tools, you identify correlations, distributions, and outliers.

- Machine Learning & Predictive Analytics: Data science involves building and fine-tuning machine learning models to recognize patterns and make predictions. Whether it’s classification, regression, clustering, or deep learning, you apply the right algorithms to solve real-world problems.

- Data Visualization & Storytelling: Raw numbers don’t tell a story on their own. You must translate your findings into actionable insights using charts, graphs, and dashboards to communicate effectively with stakeholders.

- Model Deployment & Automation: A great model is useless if it never sees production. Deploying models via cloud platforms, APIs, or automated pipelines ensures that your solutions provide continuous value.

Now, we will discuss the skills you must have as a data scientist to excel in your career.

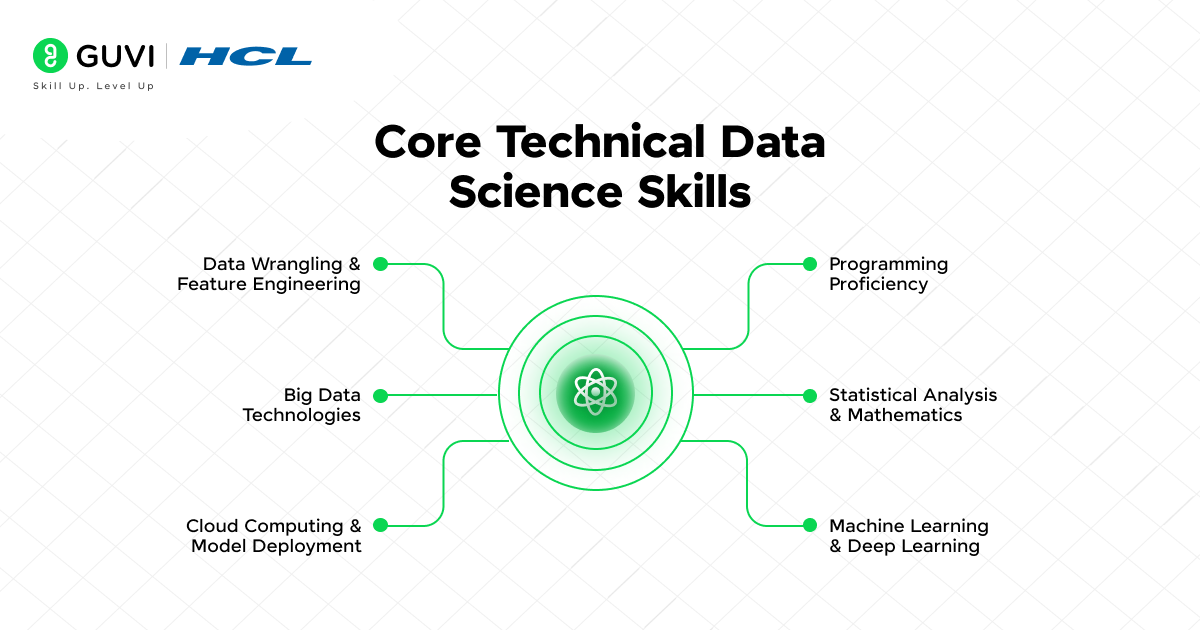

A. Core Technical Skills

A strong technical foundation is a non-negotiable prerequisite for any data scientist. Mastering the following areas will set you apart in the field:

1) Programming Proficiency

As a data scientist, your ability to write efficient, scalable, and well-structured code is one of the most critical skills you need to develop. Since data science involves working with vast datasets, implementing machine learning models, and automating workflows, having a strong command over programming languages ensures that you can process, analyze, and visualize data effectively. Without programming proficiency, you will struggle to clean data, build models, and deploy solutions in real-world scenarios.

Key Programming Languages for Data Science:

1. Python – The Industry Standard for Data Science

Python is the most popular programming language for data science, and for good reason. It provides an extensive ecosystem of libraries and frameworks tailored for data manipulation, machine learning, deep learning, and visualization. If you are serious about data science, mastering Python should be your top priority. You will use Python for:

- Data Manipulation: Libraries like Pandas and NumPy help with data cleaning, transformation, and numerical computations.

- Statistical Analysis: Libraries such as SciPy provide statistical tests, probability distributions, and hypothesis testing.

- Machine Learning & Deep Learning: Frameworks like Scikit-learn, TensorFlow, and PyTorch allow you to implement models ranging from simple regressions to complex neural networks.

- Data Visualization: Using Matplotlib, Seaborn, and Plotly, you can create insightful graphs and dashboards to communicate your findings.

- Automation & Scripting: Python helps automate repetitive tasks, such as web scraping (BeautifulSoup, Scrapy) and data pipeline management (Airflow).

2. R – The Language for Statistical Computing

While Python dominates data science, R is an equally powerful tool, especially for statistical analysis and research-based projects. If your work requires advanced statistical modeling, hypothesis testing, or academic research, R can be highly beneficial. You should use R when:

- Performing Advanced Statistical Analysis: R’s built-in functions and packages like stats, car, and lme4 allow in-depth statistical modeling.

- Creating Stunning Visualizations: ggplot2 is one of the most powerful visualization libraries, enabling publication-quality charts.

- Handling Large Datasets: R’s data.table package is optimized for fast manipulation of massive datasets.

Though not as commonly used for machine learning as Python, R remains widely used in academic research, econometrics, and healthcare analytics.

3. SQL – The Language for Data Querying

SQL (Structured Query Language) is indispensable for working with structured data stored in relational databases. As a data scientist, you often deal with large datasets that are stored in SQL-based databases like MySQL, PostgreSQL, or Microsoft SQL Server. You must know:

- Basic Queries: SELECT, INSERT, UPDATE, DELETE – fundamental commands for interacting with databases.

- Data Filtering & Aggregation: Using WHERE, GROUP BY, HAVING, and ORDER BY to refine datasets for analysis.

- Joins & Subqueries: Merging datasets efficiently using INNER JOIN, LEFT JOIN, and complex nested queries.

- Window Functions: Running calculations over subsets of data using ROW_NUMBER, RANK, LEAD/LAG for time-series analysis.

- Optimization Techniques: Indexing, query optimization, and database normalization to ensure faster processing of large datasets.
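
To make these query patterns concrete, here is a minimal sketch using Python's built-in sqlite3 module and an in-memory database. The customers and orders tables, their columns, and every row are hypothetical, invented only for illustration. The window-function query assumes the bundled SQLite is version 3.25 or newer.

```python
import sqlite3

# Hypothetical schema and rows, invented purely for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        order_date TEXT,
        amount REAL
    );
    INSERT INTO customers VALUES (1, 'north'), (2, 'south'), (3, 'south');
    INSERT INTO orders VALUES
        (1, 1, '2025-01-05', 120.0),
        (2, 1, '2025-02-10', 80.0),
        (3, 2, '2025-01-20', 200.0),
        (4, 3, '2025-03-02', 50.0);
""")

# Join + aggregation: revenue per region, keeping regions above 220.
revenue_by_region = conn.execute("""
    SELECT c.region, SUM(o.amount) AS revenue
    FROM orders AS o
    INNER JOIN customers AS c ON c.id = o.customer_id
    GROUP BY c.region
    HAVING SUM(o.amount) > 220
    ORDER BY revenue DESC
""").fetchall()

# Window function: each order next to the same customer's previous amount.
order_history = conn.execute("""
    SELECT customer_id, order_date, amount,
           LAG(amount) OVER (
               PARTITION BY customer_id ORDER BY order_date
           ) AS previous_amount
    FROM orders
    ORDER BY customer_id, order_date
""").fetchall()

conn.close()
```

With this sample data, only the south region passes the HAVING filter, and the second order of customer 1 carries 120.0 as its previous amount. The same SQL should carry over to MySQL or PostgreSQL with little change, though date types and dialect details differ.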

2) Statistical Analysis & Mathematics

As a data scientist, your ability to make sense of data depends heavily on your expertise in statistical analysis and mathematics. These skills are the foundation for making accurate predictions, uncovering trends, and validating hypotheses. Without a deep understanding of these concepts, your models and insights could be misleading or unreliable.

Statistics helps you interpret and infer patterns from data, while mathematics ensures that you understand the underlying logic behind algorithms. Whether you are working with machine learning models, conducting A/B testing, or performing exploratory data analysis, a solid grasp of statistics and mathematical principles is essential for making sound data-driven decisions.

1. Descriptive Statistics

Before you dive into complex modeling, you must first understand how to summarize and describe datasets. Descriptive statistics help you measure central tendencies, dispersion, and data distributions, giving you a clear picture of the dataset before applying machine learning models.

- Measures of Central Tendency: You use mean (average), median (middle value), and mode (most frequent value) to summarize data and understand its general behavior.

- Measures of Dispersion: Variance, standard deviation, and interquartile range tell you how spread out the data is, helping you detect inconsistencies and outliers.

- Data Distribution: Understanding the shape of a distribution (for example, whether it is approximately normal, and how large its skewness and kurtosis are) allows you to choose the right statistical tests and modeling techniques.
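
As a small illustration of these summaries, the sketch below uses only Python's standard statistics module. The sample values are made up, and the 1.5 × IQR cutoff is a common rule of thumb for flagging outliers rather than a fixed standard.

```python
import statistics

# Hypothetical sample (e.g., order values); 40 is deliberately extreme.
values = [2, 4, 4, 5, 7, 9, 40]

# Measures of central tendency.
mean = statistics.mean(values)
median = statistics.median(values)
mode = statistics.mode(values)

# Measures of dispersion.
std_dev = statistics.stdev(values)  # sample standard deviation
q1, _, q3 = statistics.quantiles(values, n=4)  # quartiles, default method
iqr = q3 - q1

# Rule of thumb: flag points more than 1.5 * IQR outside the quartiles.
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [v for v in values if v < lower or v > upper]
```

Here the single extreme value pulls the mean (about 10.1) well above the median (5). A gap like that hints at a skewed distribution before you draw a single plot.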

2. Inferential Statistics

While descriptive statistics summarize the data you have, inferential statistics allow you to make predictions and generalizations about an entire population based on sample data. This is crucial when you don’t have access to all possible data points.

- Probability Distributions: You need to understand normal, binomial, Poisson, and exponential distributions because they model real-world processes and form the basis of statistical inference.

- Hypothesis Testing: Techniques like t-tests, chi-square tests, and ANOVA, interpreted through p-values, help you determine whether observed patterns in your data are statistically significant or plausibly just random noise.

- Confidence Intervals & Significance Levels: These help you measure the certainty of your estimates and ensure that your conclusions are backed by rigorous statistical validation.

By mastering inferential statistics, you reduce the risk of mistaking random chance for a real effect and ground your conclusions in solid probabilistic reasoning.
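
To show what a hypothesis test does mechanically, here is a minimal sketch of a two-sided permutation test for a difference in means. This is a different technique from the t-test mentioned above, chosen because it needs nothing beyond the standard library. The two groups are hypothetical A/B measurements, and the function name, permutation count, and seed are illustrative choices.

```python
import random


def permutation_test(sample_a, sample_b, n_permutations=5000, seed=0):
    """Estimate a two-sided p-value for a difference in means."""
    rng = random.Random(seed)

    def mean(xs):
        return sum(xs) / len(xs)

    observed = abs(mean(sample_a) - mean(sample_b))
    pooled = list(sample_a) + list(sample_b)
    n_a = len(sample_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are interchangeable.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical measurements from two variants of an experiment.
group_a = [12.1, 11.8, 12.5, 12.0, 11.9, 12.3]
group_b = [12.9, 13.1, 12.8, 13.3, 13.0, 12.7]
p_value = permutation_test(group_a, group_b)
```

Because every value in group_b exceeds every value in group_a, very few shuffles produce a gap as large as the observed one, so the estimated p-value is small. In practice you would more likely call a tested library routine than hand-roll the loop.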

3. Probability Theory

Probability is the backbone of machine learning and predictive analytics. It helps you quantify uncertainty and make informed decisions based on likelihoods.

- Bayes’ Theorem: This fundamental concept is used in machine learning, spam detection, and recommendation systems to update probabilities based on new evidence.

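- Law of Large Numbers & Central Limit Theorem: The Law of Large Numbers says that sample averages converge to the population mean as the sample grows, and the Central Limit Theorem says that the distribution of those sample averages approaches a normal distribution. Together they justify estimating population statistics from samples, which is essential when working with large datasets.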

- Markov Chains & Stochastic Processes: Many advanced models, such as Hidden Markov Models (HMMs) in natural language processing, rely on understanding probability transitions over time.

Whether you are building recommendation systems, fraud detection models, or reinforcement learning algorithms, probability theory plays a vital role in your data science toolkit.
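
Bayes' theorem is easier to absorb with numbers. The sketch below applies it to a spam-filter style question; the prior and both likelihoods are hypothetical values chosen for easy arithmetic, not measurements.

```python
def posterior(prior, likelihood, likelihood_given_not):
    """Return P(H | E) given P(H), P(E | H), and P(E | not H)."""
    evidence = likelihood * prior + likelihood_given_not * (1 - prior)
    return likelihood * prior / evidence


# Hypothetical inputs: 20% of mail is spam; a given word appears in
# 60% of spam messages and in 5% of legitimate messages.
p_spam_given_word = posterior(
    prior=0.2, likelihood=0.6, likelihood_given_not=0.05
)
```

Under these assumed numbers, seeing the word raises the probability of spam from 0.20 to 0.12 / (0.12 + 0.04) = 0.75. That is the "updating on new evidence" step in miniature.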

4. Linear Algebra & Matrix Operations

Machine learning algorithms rely heavily on linear algebra. When you work with large datasets and high-dimensional spaces, understanding matrix operations becomes crucial.

- Vectors & Matrices: Most datasets and machine learning models are represented as matrices, where each row is a data point, and each column is a feature.

- Dot Products & Matrix Multiplication: These operations are fundamental in neural networks, dimensionality reduction (PCA), and recommendation systems.

- Eigenvalues & Eigenvectors: These are used in Principal Component Analysis (PCA) for feature reduction and noise removal, helping improve model efficiency.

A solid foundation in linear algebra allows you to optimize algorithms, reduce computational complexity, and improve model performance.
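
The operations above can be sketched in plain Python without any libraries. The example uses power iteration, one simple way to approximate the dominant eigenvector that PCA would treat as the first principal component. The 2×2 matrix is a made-up covariance matrix, and the method assumes a unique largest eigenvalue and a start vector that is not orthogonal to its eigenvector.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def matvec(matrix, vector):
    """Matrix-vector product: one dot product per row."""
    return [dot(row, vector) for row in matrix]


def matmul(a, b):
    """Matrix-matrix product: rows of a dotted with columns of b."""
    columns = list(zip(*b))
    return [[dot(row, col) for col in columns] for row in a]


def power_iteration(matrix, steps=100):
    """Approximate the dominant eigenvalue and eigenvector."""
    vector = [1.0] + [0.0] * (len(matrix) - 1)
    for _ in range(steps):
        product = matvec(matrix, vector)
        norm = math.sqrt(dot(product, product))
        vector = [x / norm for x in product]
    eigenvalue = dot(vector, matvec(matrix, vector))  # Rayleigh quotient
    return eigenvalue, vector


# Hypothetical covariance matrix of two positively correlated features.
covariance = [[2.0, 1.0], [1.0, 2.0]]
eigenvalue, eigenvector = power_iteration(covariance)
```

For this matrix the dominant eigenvalue is 3, with an eigenvector along the diagonal direction (1, 1). In real projects you would normally rely on an optimized numerical library instead of hand-written loops.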

5. Statistical Modeling & Regression Analysis

Regression analysis is a crucial statistical technique that helps you identify relationships between variables and make predictions.

- Linear Regression: One of the simplest yet most powerful techniques for modeling relationships between dependent and independent variables.

- Logistic Regression: Used for classification problems where the outcome is binary (e.g., spam detection, medical diagnosis).

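- Multiple Regression: Extends linear regression to several independent variables, helping you analyze multiple factors influencing an outcome. (Strictly speaking, “multivariate” regression refers to models with more than one dependent variable.)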

- Regularization Techniques (L1 & L2): Used to prevent overfitting in regression models by adding penalty terms that control complexity.

Regression models form the basis for predictive analytics, allowing you to forecast trends, assess risks, and make data-driven decisions.
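
Here is a minimal sketch of simple linear regression with an optional L2 (ridge) penalty on the slope, written from the closed-form least-squares solution for a single feature. The data points and the fit_line helper are illustrative; the intercept is left unpenalized, which is a common convention but still a design choice.

```python
def fit_line(xs, ys, l2_penalty=0.0):
    """Fit y = slope * x + intercept, optionally shrinking the slope."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    s_xy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    s_xx = sum((x - x_mean) ** 2 for x in xs)
    # The penalty term in the denominator pulls the slope toward zero.
    slope = s_xy / (s_xx + l2_penalty)
    intercept = y_mean - slope * x_mean
    return slope, intercept


# Hypothetical data lying exactly on y = 2x + 1.
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]

plain_slope, plain_intercept = fit_line(xs, ys)
ridge_slope, ridge_intercept = fit_line(xs, ys, l2_penalty=10.0)
```

Without a penalty the fit recovers slope 2 and intercept 1. With a penalty of 10 the slope shrinks to 1. That shrinkage is how regularization trades a little bias for lower variance.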


3) Machine Learning & Deep Learning

As a data scientist, one of the most critical skills you must master is machine learning (ML) and deep learning (DL). These technologies allow you to build predictive models that can recognize patterns, make data-driven decisions, and automate complex tasks.

Understanding Machine Learning

Machine learning is a subset of artificial intelligence (AI) that enables computers to learn from data without being explicitly programmed. Instead of writing rule-based instructions, you train models using data to recognize underlying patterns and make accurate predictions. Machine learning is broadly categorized into three types:

- Supervised Learning: In this approach, you train models using labeled data, meaning each input has a corresponding output. You use supervised learning for classification (e.g., spam detection, sentiment analysis) and regression tasks (e.g., sales forecasting, house price prediction). Common algorithms include:

  - Linear & Logistic Regression – Used for numerical predictions and binary classification.

  - Decision Trees & Random Forests – Provide interpretable results and handle both regression and classification tasks.

  - Support Vector Machines (SVMs) – Work well in high-dimensional spaces for classification problems.

  - Gradient Boosting (XGBoost, LightGBM, CatBoost) – Advanced ensemble methods that provide high accuracy on structured data.

- Unsupervised Learning: Unlike supervised learning, you work with unlabeled data, meaning the model identifies hidden patterns without explicit outputs. You use unsupervised learning for:

  - Clustering (e.g., K-Means, Hierarchical Clustering, DBSCAN) – Groups similar data points together, useful in customer segmentation and anomaly detection.

  - Dimensionality Reduction (e.g., PCA, t-SNE, Autoencoders) – Reduces the number of features while preserving important information, improving model efficiency.

- Reinforcement Learning: In reinforcement learning, your model learns by interacting with an environment and receiving rewards or penalties based on its actions. It is widely used in robotics, game AI (e.g., AlphaGo), and automated trading systems.
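
To make the unsupervised case concrete, below is a minimal sketch of K-Means on a handful of 2-D points in plain Python. The points and the choice of starting centroids are hypothetical. Production code would typically use a library implementation with smarter initialization.

```python
import math


def k_means(points, centroids, max_iterations=100):
    """Alternate assignment and update steps until centroids stop moving."""
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(max_iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(len(centroids)),
                key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for k, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(old)  # keep an empty cluster in place
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical customers described by two numeric features.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
          (8.0, 8.0), (9.0, 9.0), (8.5, 9.5)]
labels, centroids = k_means(points, [points[0], points[3]])
```

No labels are supplied anywhere, yet the algorithm separates the three low-valued points from the three high-valued ones. This is the sense in which unsupervised learning finds structure on its own.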

Understanding Deep Learning

Deep learning is a subset of machine learning built on artificial neural networks with many layers, an architecture loosely inspired by the human brain. Unlike traditional ML models that rely on manually engineered features, deep learning models learn useful features directly from data, making them highly effective for complex tasks such as image recognition, natural language processing (NLP), and speech processing.

The key architectures in deep learning include:

- Artificial Neural Networks (ANNs): The foundation of deep learning, ANNs consist of layers of neurons that process data and learn hierarchical representations.

- Convolutional Neural Networks (CNNs): Designed for image processing, CNNs extract spatial features from images, making them essential for tasks like object detection and facial recognition.

- Recurrent Neural Networks (RNNs) & Long Short-Term Memory (LSTM) Networks: Used for sequential data, such as time series forecasting and speech recognition, where previous information influences predictions.

- Transformers (e.g., BERT, GPT-3, T5): Revolutionized natural language processing (NLP) by enabling context-aware text understanding and generation, used in chatbots, text summarization, and language translation.
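
The idea of "layers of neurons" can be shown with a tiny forward pass. The sketch below wires up a two-layer network by hand so that it computes XOR, a function no single linear layer can represent. The weights are hand-picked for illustration rather than learned; a real network would learn them through training in a framework such as TensorFlow or PyTorch.

```python
def relu(x):
    """Rectified linear unit: pass positives through, clip negatives."""
    return max(0.0, x)


def identity(x):
    return x


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights @ inputs + biases)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not trained) parameters that make the network compute XOR.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]


def forward(inputs):
    """Run the inputs through the hidden layer, then the output layer."""
    hidden = dense(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, identity)[0]


predictions = [forward(x) for x in [(0, 0), (0, 1), (1, 0), (1, 1)]]
```

The hidden layer's non-linear activation is what lets the output separate (0, 1) and (1, 0) from (0, 0) and (1, 1). Stacking many such layers gives deep networks their capacity to learn hierarchical representations.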

4) Data Wrangling & Feature Engineering

Raw data is rarely perfect. It often contains missing values, inconsistencies, outliers, and noise, making it difficult to extract meaningful insights. This is where data wrangling and feature engineering come into play—two essential steps in any data science workflow.

Data Wrangling: Preparing Data for Analysis

As a data scientist, you will spend a significant portion of your time cleaning and transforming data. Data wrangling involves reshaping, merging, and handling inconsistencies to ensure data quality before applying machine learning models.

- Handling Missing Data: Real-world datasets often contain missing values, which can negatively impact model performance. You must decide whether to remove, impute, or infer missing values based on the dataset’s characteristics. Techniques include mean/mode imputation, interpolation, or using predictive models to fill gaps.

- Removing Duplicates & Inconsistencies: Duplicate entries can skew results, and inconsistent formats (e.g., date formats, categorical labels) can lead to errors. You need to standardize data representations for consistency.

- Outlier Detection & Treatment: Outliers can distort machine learning models. Using statistical methods like Z-score, IQR (Interquartile Range), and visualization tools (box plots, scatter plots) helps in identifying and handling extreme values.

- Data Transformation & Encoding: Sometimes, raw data needs transformation—scaling numerical values (Min-Max Scaling, Standardization) or encoding categorical variables (One-Hot Encoding, Label Encoding) to make it machine-readable.
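
A few of these wrangling steps can be sketched in plain Python on a toy dataset. The records and column names are hypothetical, and mean imputation is only one of the options listed above. In day-to-day work you would usually perform the same steps with a data-frame library.

```python
# Hypothetical records with one missing age.
records = [
    {"age": 25, "city": "paris"},
    {"age": None, "city": "tokyo"},
    {"age": 35, "city": "paris"},
    {"age": 45, "city": "lima"},
]

# Handling missing data: replace missing ages with the mean of known ages.
known_ages = [r["age"] for r in records if r["age"] is not None]
mean_age = sum(known_ages) / len(known_ages)
ages = [r["age"] if r["age"] is not None else mean_age for r in records]

# Min-max scaling: map the smallest age to 0 and the largest to 1.
lowest, highest = min(ages), max(ages)
scaled_ages = [(a - lowest) / (highest - lowest) for a in ages]

# One-hot encoding: one 0/1 column per distinct city, in sorted order.
categories = sorted({r["city"] for r in records})
one_hot = [[int(r["city"] == c) for c in categories] for r in records]
```

After these steps every row is purely numeric: a scaled age between 0 and 1 plus three indicator columns for lima, paris, and tokyo.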

Feature Engineering: Creating Predictive Power

Having clean data is not enough. Feature engineering enhances your dataset by creating new variables or modifying existing ones to improve model performance.

- Feature Extraction: Sometimes, useful information is embedded within unstructured data. In text data, techniques like TF-IDF (Term Frequency-Inverse Document Frequency) or word embeddings (Word2Vec, BERT) help extract meaningful features. In time-series data, you may extract seasonality, trends, or moving averages.

- Feature Creation: You can generate new features by combining existing ones. For example, if you have “purchase amount” and “customer income,” creating a “spending ratio” feature may provide better insights.

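- Feature Selection: Not all features contribute positively to model accuracy. Techniques like Recursive Feature Elimination (RFE) and Mutual Information help you retain only the most relevant features. Principal Component Analysis (PCA) is often used alongside them, although strictly it reduces dimensionality by combining features into new components rather than selecting a subset of the original ones.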

- Polynomial & Interaction Features: Sometimes, relationships between features are non-linear. Creating polynomial features (e.g., squaring or cubing numerical values) or interaction terms between variables can improve predictive power.
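
Feature creation often comes down to a few lines of arithmetic. The sketch below derives the "spending ratio" described above, plus one polynomial and one interaction feature. The rows, the visits column, and the add_features helper are hypothetical.

```python
# Hypothetical customer rows; "visits" is an invented extra column.
customers = [
    {"purchase_amount": 200.0, "customer_income": 4000.0, "visits": 2},
    {"purchase_amount": 900.0, "customer_income": 3000.0, "visits": 5},
]


def add_features(row):
    """Return a copy of the row with three derived features added."""
    enriched = dict(row)
    # Feature creation: combine two raw columns into a ratio.
    enriched["spending_ratio"] = (
        row["purchase_amount"] / row["customer_income"]
    )
    # Polynomial feature: lets a linear model capture curvature.
    enriched["visits_squared"] = row["visits"] ** 2
    # Interaction feature: the effect of one variable depends on another.
    enriched["amount_x_visits"] = row["purchase_amount"] * row["visits"]
    return enriched


features = [add_features(row) for row in customers]
```

The second customer spends 30% of income against 5% for the first, a contrast that neither raw column shows on its own. Whether such a feature actually helps is an empirical question to settle with validation data.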

5) Big Data Technologies

In today’s data-driven world, you are not just working with small datasets; you are often dealing with massive volumes of data generated at high velocity from multiple sources. This is where Big Data Technologies come into play. These tools and frameworks enable you to efficiently store, process, and analyze large-scale datasets that traditional databases cannot handle.

Why Do You Need Big Data Technologies?

As a data scientist, you frequently work with structured, semi-structured, and unstructured data from sources like social media, IoT devices, transaction logs, and real-time monitoring systems. Handling such massive datasets requires specialized technologies that provide scalability, distributed computing, and real-time processing capabilities. Without these technologies, analyzing large datasets would be time-consuming and computationally expensive.

Essential Big Data Technologies:

To work effectively with large-scale data, you must familiarize yourself with the following Big Data Technologies:

- Apache Hadoop: Hadoop is one of the foundational big data frameworks that enables distributed storage and processing of vast datasets. It consists of the Hadoop Distributed File System (HDFS) for data storage and MapReduce for parallel data processing. You use Hadoop when dealing with batch-processing tasks over massive data volumes.

- Apache Spark: While Hadoop is powerful, Apache Spark takes big data processing to the next level by offering in-memory computing, which often makes it much faster than Hadoop’s MapReduce, particularly for iterative workloads. Spark supports near-real-time stream processing and ships with MLlib, its built-in machine learning library, for large-scale model training.

- NoSQL Databases (MongoDB, Cassandra, HBase): Traditional relational databases can struggle with high-volume, schema-less, or unstructured data. NoSQL databases like MongoDB (document-based) and Cassandra and HBase (wide-column stores) allow you to store and retrieve large datasets efficiently while offering high availability and fault tolerance.

- Kafka & Stream Processing Technologies: If your data arrives continuously from multiple sources (e.g., website activity logs, IoT devices, financial transactions), you need real-time data streaming solutions. Apache Kafka is a distributed messaging system that allows real-time event processing, while frameworks like Apache Flink and Apache Storm enable low-latency, real-time analytics.

- Cloud-Based Big Data Solutions (AWS, Google Cloud, Azure): Cloud platforms provide scalable and cost-effective solutions for big data storage and processing. Services like Amazon S3, Google BigQuery, and Azure Data Lake Storage allow you to manage large datasets without maintaining on-premises infrastructure. You can also use cloud-based platforms for distributed model training and deployment.
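
The MapReduce model behind Hadoop is easier to picture with a toy version. The sketch below runs the map, shuffle, and reduce phases of a word count inside a single Python process. It does not use Hadoop's or Spark's APIs; the function names and documents are illustrative, and a real framework would distribute each phase across many machines.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Group all emitted values by key, as the framework would."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Collapse each key's list of values into a single count."""
    return {key: sum(values) for key, values in grouped.items()}


# Hypothetical input; in a real cluster each document could live on a
# different node and be mapped in parallel.
documents = ["big data big insights", "data pipelines move data"]
mapped = [pair for doc in documents for pair in map_phase(doc)]
word_counts = reduce_phase(shuffle_phase(mapped))
```

Because the map step treats every document independently and the reduce step treats every key independently, both can be parallelized. That independence is what lets this pattern scale to data that does not fit on one machine.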

6) Cloud Computing & Model Deployment

As a data scientist, your work doesn’t end with building machine learning models. The real impact comes when you deploy these models into production, allowing businesses to leverage real-time predictions and automation. This is where cloud computing and model deployment come into play. By using cloud platforms, you ensure that your models are scalable, accessible, and efficiently managed in production environments.

Key Cloud Platforms for Data Science:

Several cloud platforms are widely used in the industry for machine learning and data science workflows:

- Amazon Web Services (AWS): Offers services like SageMaker for model training and deployment, Lambda for serverless computing, and EC2 for scalable cloud instances.

- Google Cloud Platform (GCP): Provides tools like Vertex AI for end-to-end ML model management and BigQuery for large-scale data analytics.

- Microsoft Azure: Features Azure Machine Learning for model training and deployment, along with robust AI-driven services.

Model Deployment: Making Machine Learning Models Production-Ready

Once you build a machine learning model, you need to deploy it so that users or applications can interact with it. There are several ways to achieve this:

- Deploying via APIs: You can use frameworks like Flask or FastAPI to expose your model as a web service. This allows applications to send data and receive predictions in real time.

- Containerization with Docker: Docker helps package your model, dependencies, and environment into a container, ensuring consistency across different platforms. This is crucial for deploying models across multiple cloud services.

- Using Kubernetes for Scalability: Kubernetes automates the deployment, scaling, and management of containerized applications. If your model needs to handle thousands of requests per second, Kubernetes can run multiple replicas of the model service and balance the load across them.

- Serverless Deployment: Cloud providers offer serverless computing services like AWS Lambda or Google Cloud Functions, allowing you to deploy models without managing servers.
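
To illustrate the API approach without extra dependencies, here is a minimal sketch built on Python's standard http.server module rather than Flask or FastAPI. Those frameworks follow the same request-in, prediction-out pattern with less boilerplate. The predict function is a stand-in for a trained model, and its weights, the JSON payload shape, and the port are all hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Stand-in for a trained model: a hypothetical linear score."""
    weights = [0.4, 0.6]  # hypothetical coefficients
    return sum(w * x for w, x in zip(weights, features))


def handle_prediction_request(raw_body):
    """Map a JSON request body to an (HTTP status, response body) pair."""
    try:
        payload = json.loads(raw_body)
        features = [float(x) for x in payload["features"]]
    except (ValueError, KeyError, TypeError):
        error = {"error": "expected a JSON object with a 'features' list"}
        return 400, json.dumps(error).encode()
    return 200, json.dumps({"prediction": predict(features)}).encode()


class PredictionHandler(BaseHTTPRequestHandler):
    """Answer POST requests with the model's prediction as JSON."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_prediction_request(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Start a local development server (blocks until interrupted)."""
    HTTPServer(("127.0.0.1", port), PredictionHandler).serve_forever()
```

Calling serve() starts the endpoint locally, after which a client can POST a body such as {"features": [1, 2]}. Keeping the request logic in handle_prediction_request, separate from the HTTP plumbing, makes it easy to unit test and to move into a container or a serverless function later.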

Monitoring & Maintaining Deployed Models

Deployment is not a one-time process—you must continuously monitor and improve your model’s performance. This involves:

- Model Drift Detection: Over time, data distributions change, making your model less effective. Monitoring tools like Evidently AI help track model performance and detect drift.

- Logging & Debugging: Services like Amazon CloudWatch or Google Cloud Logging help track errors and optimize performance in production.

- Retraining Pipelines: Automating model retraining using cloud-based workflows ensures that your model stays up to date with new data.
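
One simple way to quantify drift is the Population Stability Index (PSI), which compares how a feature is distributed in training data versus recent production data. The sketch below is a plain-Python illustration and not the API of Evidently AI or any other monitoring tool. The bin count, the epsilon used for empty bins, and the sample data are assumptions.

```python
import math


def population_stability_index(reference, current, bins=10, epsilon=1e-6):
    """Compare two samples of one feature using equal-width bins."""
    low, high = min(reference), max(reference)
    if high == low:
        raise ValueError("reference needs more than one distinct value")
    width = (high - low) / bins

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            index = int((v - low) / width)
            # Values outside the reference range go to the edge bins.
            counts[min(max(index, 0), bins - 1)] += 1
        # epsilon avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), epsilon) for c in counts]

    expected = bin_fractions(reference)
    actual = bin_fractions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical feature values at training time and in production.
training_values = list(range(100))
production_values = [v + 30 for v in training_values]  # shifted upward

psi_no_drift = population_stability_index(training_values, training_values)
psi_drift = population_stability_index(training_values, production_values)
```

Identical samples give a PSI of zero, while the shifted sample scores far higher. A commonly cited rule of thumb treats values above roughly 0.2 as a sign of meaningful drift, but suitable thresholds depend on the feature and should be validated for your own data.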

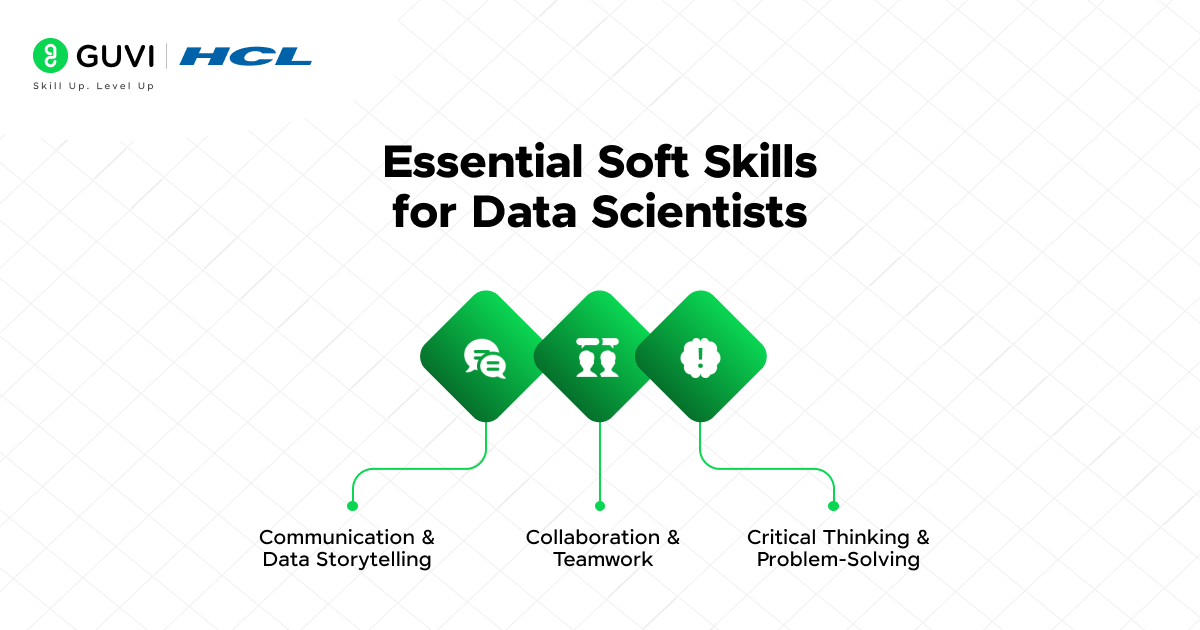

B. Essential Soft Skills for Data Scientists

While technical expertise is fundamental in data science, your ability to effectively communicate your findings and work collaboratively with diverse teams is equally critical. Soft skills are often the differentiator between a good data scientist and a great one.

As you progress in your data science career, honing these essential soft skills will make you more valuable to your team and organization. Here’s a breakdown of the key soft skills you should focus on:

1. Communication & Data Storytelling

As a data scientist, your role is not just to analyze data but to translate complex technical findings into actionable insights for stakeholders who may not have a technical background. Effective communication is crucial in this process.

- Data Storytelling: You need to present your findings in a way that engages your audience. Think of your analysis as a story with a beginning, middle, and end—laying out the problem, the data you analyzed, and the insights or recommendations you’ve derived. Using visualizations and simplified language can help convey your message clearly and ensure that decision-makers understand the significance of your work.

- Clear Reporting: Being able to summarize technical results into simple reports and presentations is a skill you’ll rely on frequently. Focus on highlighting the key takeaways and actionable steps without overwhelming your audience with technical jargon.

2. Collaboration & Teamwork

In most data science roles, you won’t be working in isolation. You’ll need to collaborate with other data scientists, software engineers, product managers, and business stakeholders. Building a collaborative mindset will help you effectively contribute to cross-functional teams.

- Cross-Disciplinary Communication: As you work with individuals from various backgrounds, the ability to bridge the gap between technical and non-technical teams becomes essential. You must be able to explain complex algorithms and insights to stakeholders, while also understanding their business requirements.

- Team Problem Solving: Collaboration often leads to better solutions. By pooling knowledge and expertise with colleagues, you can approach challenges from multiple perspectives, leading to more innovative outcomes.

3. Critical Thinking & Problem-Solving

At the heart of data science lies problem-solving. As you work with data, you’ll frequently encounter challenges that don’t have a straightforward solution. You need to be able to break down complex problems and find the most efficient way to solve them.

- Analytical Thinking: You should approach problems systematically, breaking them down into smaller, more manageable pieces. By identifying the root cause of issues and understanding the underlying patterns in data, you’ll be able to make more informed decisions and create better models.

- Creativity in Solutions: Data science often requires innovative approaches. You’ll need to come up with creative solutions when dealing with challenging or messy data. Whether it’s feature engineering, choosing the right model, or handling imbalanced datasets, thinking outside the box can make a big difference.

Concluding Thoughts…

Excelling in a data science career requires mastering a diverse range of skills, from programming and machine learning to problem-solving and communication. Whether you’re an aspiring data scientist or looking to refine your expertise, developing both technical and soft skills is critical for staying competitive.

By continuously learning and applying these skills, you can effectively analyze data, build powerful models, and drive meaningful business decisions. Data science keeps evolving, and those who stay ahead of the curve will thrive in this exciting field.

I hope this article helps you on your data science journey. If you have any questions, reach out to us through the comments section below.

FAQs

1. What skills are needed in data science?

Key skills in data science include programming (Python, R), statistics, machine learning, data visualization, SQL, data wrangling, big data technologies, and domain expertise.

2. Is SQL needed for data science?

Yes, SQL is essential for data science as it helps in querying, managing, and analyzing structured data from relational databases.

3. Is coding required for data science?

Yes, coding is necessary in data science for data processing, statistical analysis, and machine learning model development, primarily using Python or R.

4. Which language is best for data science?

Python is the most preferred language for data science due to its extensive libraries, ease of use, and strong community support.
