What to Learn to Become a DevOps Engineer in 2025?

Mar 26, 2025 (Last Updated) | 8 Min Read

The DevOps market is growing faster than ever and is expected to reach around $26.9 billion by 2028. That demand shows up in pay: DevOps engineers in India command impressive salaries, with freshers earning between ₹5 Lakhs and ₹9 Lakhs per year and senior professionals taking home up to ₹40 Lakhs.

Now, how do you become one? What do you learn, and how do you learn it? Expect your journey to take roughly 5 to 8 months of focused learning. Along the way, you'll learn Python programming, work with cloud platforms like AWS or Azure, and get comfortable with automation tools.

The learning curve will challenge you, but with 435,520 open positions, this career path is well worth pursuing. Let me walk you through exactly what you need to learn to become a DevOps engineer in 2025. We'll start with simple concepts and build up to the advanced topics that will set you apart.

Table of contents

- What is DevOps?

- What to learn to become a DevOps Engineer: Step-by-Step

- Step 1) Fundamentals to Master Before DevOps

- a) Operating Systems: Linux (Ubuntu, CentOS), Windows Server

- b) Networking Basics: DNS, HTTP/HTTPS, Load Balancing, Firewalls

- c) Scripting and Programming: Python, Bash, or Go for automation

- Step 2) Version Control Systems (VCS)

- a) Git: Learn branching strategies, repositories, and collaboration

- b) Platforms: GitHub, GitLab, Bitbucket

- Step 3) Continuous Integration and Continuous Delivery (CI/CD)

- a) Tools to Learn: Jenkins, GitHub Actions, GitLab CI/CD, CircleCI

- b) Best Practices

- Step 4) Containerization and Orchestration

- a) Docker: Understanding Images, containers, and Docker Compose

- b) Kubernetes: Managing container clusters, auto-scaling, deployments

- Step 5) Infrastructure as Code (IaC) and Configuration Management

- a) IaC Tools

- b) Configuration Management

- Step 6) Cloud Computing and Services

- a) Cloud Providers: AWS, Azure, GCP

- b) Important Cloud Services: Compute, Storage, Networking, Security

- c) Cloud Design Patterns: Scalability, fault tolerance, high availability

- Step 7) Monitoring and Logging

- a) Monitoring Tools: Prometheus, Datadog, New Relic, AWS CloudWatch

- b) Log Management: ELK Stack

- Takeaways…

- FAQs

- Q1. How long does it typically take to become a DevOps engineer?

- Q2. What are the key skills required for a DevOps engineer?

- Q3. Which cloud platforms are most important for DevOps professionals?

- Q4. What are some popular tools used in DevOps for monitoring and logging?

- Q5. What career opportunities are available in DevOps?

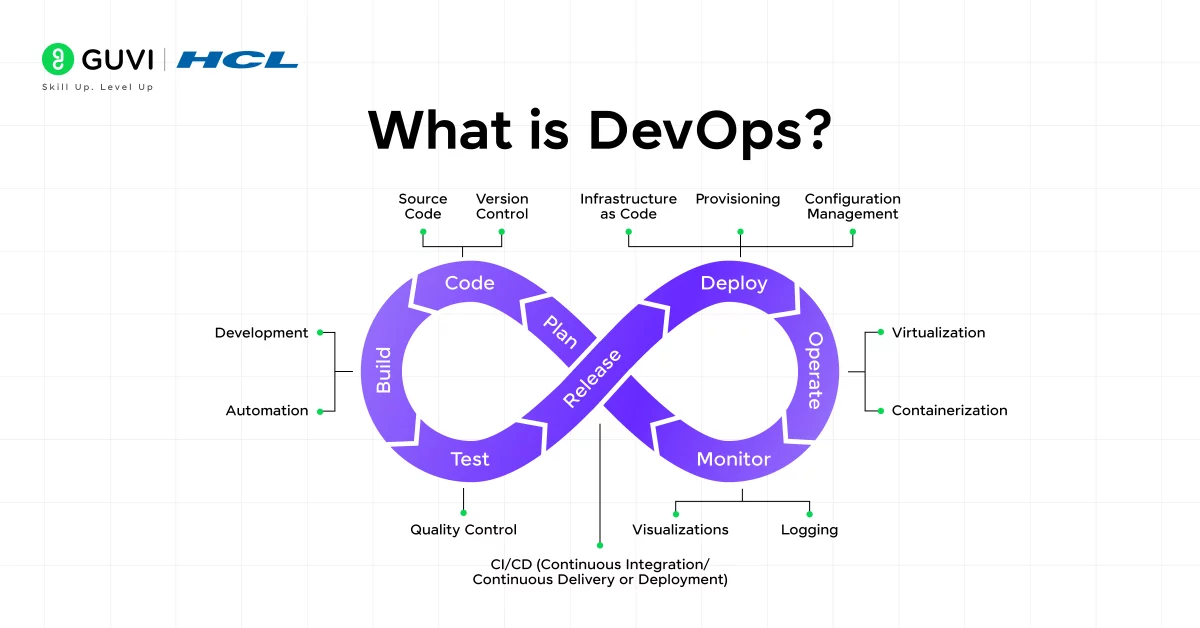

What is DevOps?

DevOps marks a transformation in software development and IT operations that brings once-separate teams together as one unit. It combines cultural philosophies, practices, and tools to help organizations deliver applications and services faster than traditional methods allow.

Development and operations teams now work together through the entire application lifecycle, and in many organizations quality assurance and security teams join them too. The result is a smooth flow from development to deployment.

What to learn to become a DevOps Engineer: Step-by-Step

You need a well-laid-out approach to learn various technologies and practices to become a DevOps engineer. A strong foundation will help you understand the basic elements before you start working with specific tools.

This DevOps engineer path requires mastering seven key areas, which we discuss at length below. Each step builds on the previous one, and together they form a well-rounded skill set that matches what the industry needs.

Note that real-life experience often proves more valuable than theoretical knowledge alone. Apply your learning through actual projects and scenarios as you progress through each step.

Step 1) Fundamentals to Master Before DevOps

Strong fundamentals give you the problem-solving abilities needed for complex DevOps challenges. A good understanding of operating systems, networking, and programming creates the foundation that supports advanced DevOps tools and practices.

a) Operating Systems: Linux (Ubuntu, CentOS), Windows Server

To become a DevOps engineer, you need a deep understanding of operating systems, especially Linux and Windows Server, because most servers, cloud workloads, and automation tools run on these platforms. Knowledge of these will help you understand:

- File System & Permissions – Managing files, directories, and user permissions.

- Process Management – Using commands like ps, top, and kill to monitor and manage system processes.

- Package Management – Installing and updating software using apt, yum, or choco.

- System Services – Managing services with systemctl, service, and Windows Services.

- Shell Scripting – Writing automation scripts in Bash or PowerShell.
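Here is a small example of how file permissions and scripting come together. The following Python sketch walks a directory tree and flags anything that every user on the system can write to, which is a common misconfiguration on Linux servers. The function name is illustrative. It uses only the standard library (`os.walk`, `os.lstat`, and the `stat` helpers), so treat it as a starting point rather than a hardened audit tool.

```python
import os
import stat


def find_world_writable(root: str) -> list[str]:
    """List files and directories under `root` that any user on the system can write to."""
    flagged = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            mode = os.lstat(path).st_mode  # lstat: inspect the link itself, not its target
            if stat.S_ISLNK(mode):
                continue  # symlink permission bits are ignored on Linux, so skip them
            if mode & stat.S_IWOTH:  # the "others can write" bit (last 'w' in -rw-rw-rw-)
                flagged.append(f"{stat.filemode(mode)} {path}")
    return sorted(flagged)
```

For example, running `find_world_writable("/var/www")` on a web server returns lines such as `-rw-rw-rw- /var/www/...`, which you would typically fix with `chmod o-w`.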

b) Networking Basics: DNS, HTTP/HTTPS, Load Balancing, Firewalls

Networking is the foundation of distributed systems. In DevOps, it is crucial for managing cloud infrastructure, deploying applications, and troubleshooting issues. Key areas include:

- DNS (Domain Name System) – How domain names map to IP addresses.

- HTTP/HTTPS – Understanding web communication protocols, request methods, and SSL/TLS security.

- Load Balancing – Distributing traffic across multiple servers for high availability.

- Firewalls & Security Groups – Configuring network access rules to secure applications.
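Firewall and security-group rules boil down to matching a packet's source address, protocol, and port against a list of allowed entries. The Python sketch below models that idea with the standard `ipaddress` module. The `InboundRule` shape is a hypothetical simplification loosely based on cloud security groups, which only contain allow rules and deny everything else by default. It is not any provider's actual API.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    """One allow rule (hypothetical shape, loosely modeled on a security-group entry)."""
    protocol: str     # "tcp" or "udp"
    port: int
    source_cidr: str  # e.g. "10.0.0.0/16", or "0.0.0.0/0" for anywhere


def is_allowed(rules: list[InboundRule], src_ip: str, protocol: str, port: int) -> bool:
    """Default deny: traffic passes only if at least one allow rule matches it."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        network = ipaddress.ip_network(rule.source_cidr)
        if rule.protocol == protocol and rule.port == port and addr in network:
            return True
    return False


rules = [
    InboundRule("tcp", 443, "0.0.0.0/0"),   # HTTPS from anywhere
    InboundRule("tcp", 22, "10.0.0.0/16"),  # SSH only from the internal network
]
print(is_allowed(rules, "203.0.113.7", "tcp", 443))  # True
print(is_allowed(rules, "203.0.113.7", "tcp", 22))   # False
print(is_allowed(rules, "10.0.4.20", "tcp", 22))     # True
```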

c) Scripting and Programming: Python, Bash, or Go for automation

Automation is a core DevOps principle. Scripting and programming languages let you automate repetitive tasks, manage configurations, and deploy applications. Commonly recommended languages include:

- Bash (Linux) & PowerShell (Windows) – Automating system administration tasks.

- Python – Writing automation scripts, working with APIs, and managing cloud infrastructure.

- Go (Golang) – Preferred for high-performance cloud-native applications and DevOps tooling.

Start with one primary language, though expertise in multiple languages will make you more versatile. Python makes an excellent first choice with its gentle learning curve and powerful automation features.

Hands-on projects help reinforce these fundamentals effectively. Small scripts that automate daily tasks, experiments with different OS features, and simple networking tools will strengthen your theoretical knowledge. This practical approach develops the problem-solving skills that DevOps roles demand.
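A good first automation project is a cleanup script for old log files. The Python sketch below deletes files matching a pattern once they are older than a given number of days. The function name, defaults, and the 7-day threshold are assumptions chosen for illustration. It runs in dry-run mode by default, so you can see what it would remove before anything is actually deleted.

```python
import time
from pathlib import Path


def delete_old_files(directory: str, pattern: str = "*.log", max_age_days: float = 7,
                     dry_run: bool = True) -> list[Path]:
    """Find (and, unless dry_run, delete) files matching `pattern` older than `max_age_days`."""
    cutoff = time.time() - max_age_days * 86_400  # 86,400 seconds per day
    stale = [p for p in Path(directory).glob(pattern)
             if p.is_file() and p.stat().st_mtime < cutoff]  # compare last-modified time
    if not dry_run:
        for p in stale:
            p.unlink()
    return sorted(stale)
```

A call like `delete_old_files("/var/log/myapp", max_age_days=14, dry_run=False)`, where the path is hypothetical, could then be scheduled with cron or a systemd timer.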

Step 2) Version Control Systems (VCS)

Version control systems are the foundation of modern software development. They help teams track and manage code changes systematically, recording a snapshot each time a developer commits changes and keeping every version in the project's history.

Version control eliminates the chaos of keeping multiple copies of code on local machines. It also protects against accidental changes or deletions, since any earlier version can be restored. Everyone works from one shared history, which keeps the development process organized, and that detailed history shows who made each change and, through commit messages, why.

a) Git: Learn branching strategies, repositories, and collaboration

Git is the most widely used version control system, with strong features for managing complex projects. As a distributed version control system, it gives every developer a full copy of the repository, so teams can work on the same project efficiently. Key concepts to master include:

- Repositories: Store and manage project files with version history.

- Branching Strategies: Learn Git Flow, GitHub Flow, and trunk-based development for efficient team collaboration.

- Commits and Merging: Track changes with commits and combine updates using merge or rebase.

- Pull Requests & Code Reviews: Ensure quality code through peer reviews before merging changes.

- CI/CD Integration: Automate builds and deployments by linking Git with CI/CD pipelines.
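Branching strategies work best when branch names follow a predictable convention that automation can rely on. The Python sketch below checks branch names against a hypothetical team convention loosely based on Git Flow's branch types (`main`, `develop`, `feature/...`, `release/...`, `hotfix/...`). The exact pattern is an assumption, so adapt it to whatever your team agrees on.

```python
import re

# Hypothetical team convention, loosely based on Git Flow branch types.
BRANCH_PATTERN = re.compile(
    r"main|develop"
    r"|feature/[a-z0-9]+(?:-[a-z0-9]+)*"  # e.g. feature/add-login
    r"|hotfix/[a-z0-9]+(?:-[a-z0-9]+)*"   # e.g. hotfix/fix-null-check
    r"|release/\d+\.\d+\.\d+"             # e.g. release/1.4.0
)


def is_valid_branch(name: str) -> bool:
    """Return True if the whole branch name follows the convention above."""
    return BRANCH_PATTERN.fullmatch(name) is not None
```

In a CI job or Git hook, you could feed it the current branch name from `git rev-parse --abbrev-ref HEAD` and fail the check when it returns `False`.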

b) Platforms: GitHub, GitLab, Bitbucket

These Git-based platforms provide hosting, collaboration tools, and CI/CD integration:

- GitHub: Industry standard for open-source and enterprise development, with Actions for CI/CD.

- GitLab: Provides built-in DevOps tools like pipelines, issue tracking, and security scanning.

- Bitbucket: Popular in enterprise environments, offering seamless integration with Atlassian products like Jira.

Version control workflows define the processes and permissions that keep teams productive and organized. Combined with automated testing, code analysis, and deployment, they produce consistent results and save time. Mastering version control becomes increasingly important as you grow in your DevOps career, because it helps you manage complex systems and collaborate effectively with teammates.

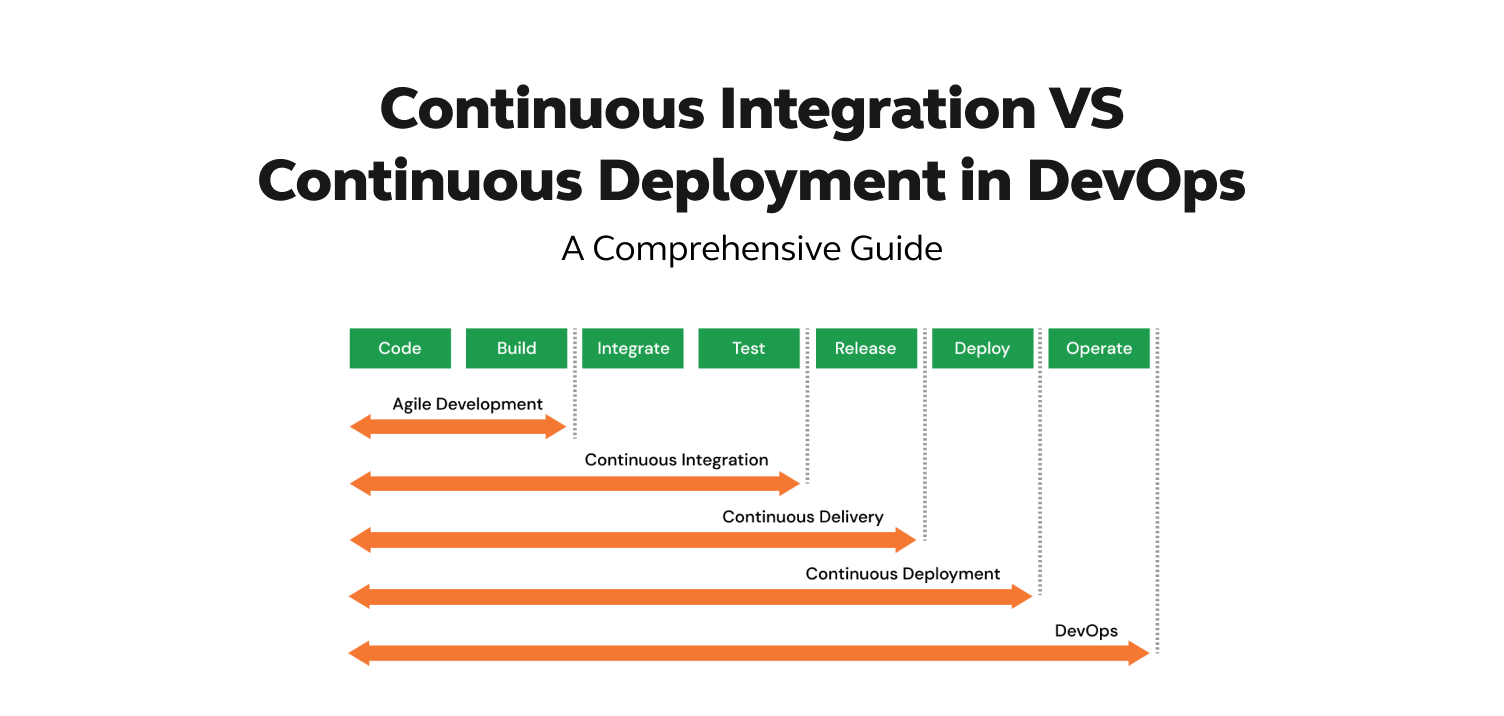

Step 3) Continuous Integration and Continuous Delivery (CI/CD)

Automation is at the core of Continuous Integration and Continuous Delivery (CI/CD). An automated pipeline moves code through building, testing, and deployment, keeping quality consistent throughout the development lifecycle.

CI/CD brings together two connected practices. Continuous Integration automatically merges, builds, and tests code changes, so developers can share their work more often. Continuous Delivery then keeps those changes ready for a quick, safe release to production.
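To see the core idea behind a pipeline, consider a simplified model in which stages run in order and the pipeline stops at the first failure, so a broken build or failing test never reaches deployment. The Python sketch below shows that "fail fast" behavior. The stage names and the lambda stand-ins are hypothetical. Real pipelines are defined in the tool's own format, such as a Jenkinsfile or a GitHub Actions workflow file, and they run real build and test commands.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]  # (stage name, function returning True on success)


def run_pipeline(stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order, stopping at the first failure (fail fast)."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'passed' if ok else 'FAILED'}")
        if not ok:
            return False, log  # later stages (e.g. deploy) never run
    return True, log


# Hypothetical stages; a real pipeline would invoke build and test tools here.
stages = [
    ("build", lambda: True),
    ("unit-tests", lambda: False),  # simulate a failing test suite
    ("deploy", lambda: True),
]
ok, log = run_pipeline(stages)
print(ok)   # False
print(log)  # ['build: passed', 'unit-tests: FAILED']
```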

a) Tools to Learn: Jenkins, GitHub Actions, GitLab CI/CD, CircleCI

Now, let’s discuss the tools you must learn to become an efficient DevOps Engineer and why:

- Jenkins – A widely used open-source automation server that helps in building, testing, and deploying applications.

- GitHub Actions – A CI/CD tool integrated with GitHub, allowing developers to automate workflows directly from their repositories.

- GitLab CI/CD – A robust tool that offers integrated CI/CD pipelines within GitLab, making it easy to automate builds and deployments.

- CircleCI – A cloud-based CI/CD platform that enables rapid and scalable application testing and deployment.

b) Best Practices

The best DevOps engineers don't just learn best practices in theory; they apply them daily throughout their careers. Here are some of the key practices to know:

- Automate Everything – Implement automation for code integration, testing, and deployment to minimize manual intervention.

- Use Version Control (Git) – Ensure all CI/CD configurations and pipelines are stored in version control for easy tracking and rollback.

- Implement Frequent Code Integration – Regularly merge code changes to detect and fix integration issues early.

- Ensure Fast and Reliable Testing – Automate testing in the pipeline to catch bugs before deployment.

- Monitor and Optimize Pipelines – Continuously analyze CI/CD performance to optimize build times and resource utilization.
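Monitoring a pipeline often starts with simple statistics on build durations. The Python sketch below computes a nearest-rank 95th percentile and flags a build that is much slower than the recent median. The 1.2x tolerance and the sample durations are assumptions for illustration, not recommended thresholds.

```python
import math
import statistics


def p95(durations: list[float]) -> float:
    """95th-percentile build time using the nearest-rank method."""
    if not durations:
        raise ValueError("need at least one duration")
    ordered = sorted(durations)
    rank = math.ceil(0.95 * len(ordered))  # 1-based position in the sorted list
    return ordered[rank - 1]


def is_slow_build(history: list[float], latest: float, tolerance: float = 1.2) -> bool:
    """Flag `latest` if it exceeds the historical median by more than `tolerance` times."""
    return latest > tolerance * statistics.median(history)


history = [300, 310, 295, 320, 305]  # hypothetical recent build durations, in seconds
print(statistics.median(history), p95(history))  # 305 320
print(is_slow_build(history, 400))               # True
```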

Let your project's specific needs guide how you apply these practices. Automated testing plays a vital role because it surfaces dependency problems and other issues early in the software development lifecycle.

Your CI/CD pipeline should keep code ready for release at any time. That way, bugs get fixed as soon as they appear, and updates can go live quickly when needed.

Step 4) Containerization and Orchestration

Container technology underpins modern application deployment by providing lightweight, portable environments that run applications consistently across platforms. This solves the classic problem of differences between development and production environments.

Containerization packages applications and their dependencies into isolated units that behave the same regardless of the underlying infrastructure. Container orchestration then automates the deployment, management, scaling, and networking of those containers throughout their lifecycle.

a) Docker: Understanding Images, containers, and Docker Compose

Docker leads the way in container creation and management. The platform combines several components that work naturally together:

- Docker Images: Read-only templates that package application code, dependencies, and configuration, along with the instructions for creating containers.

- Containers: These are lightweight, isolated runtime environments created from Docker images. Containers share the host operating system kernel, making them substantially more efficient than virtual machines.

- Docker Compose: A tool to define and manage multi-container applications using a simple YAML file (docker-compose.yml).

By mastering Docker, DevOps engineers can ensure seamless development, testing, and deployment workflows.
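As a small bridge between scripting and containers, the Python sketch below builds a `docker run` command from a few settings: a detached container (`-d`) with a name (`--name`), published ports (`-p host:container`), and environment variables (`-e KEY=VALUE`). These are standard `docker run` options. The helper function and the example image tag are illustrative choices. Building an argument list instead of a single shell string avoids quoting problems.

```python
import shlex
from typing import Optional


def docker_run_args(image: str, name: str,
                    ports: Optional[dict[int, int]] = None,
                    env: Optional[dict[str, str]] = None) -> list[str]:
    """Build the argument list for `docker run` with a detached, named container."""
    args = ["docker", "run", "-d", "--name", name]  # -d: run in the background
    for host_port, container_port in (ports or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]  # publish a container port on the host
    for key, value in (env or {}).items():
        args += ["-e", f"{key}={value}"]  # set an environment variable in the container
    args.append(image)  # options must come before the image name
    return args


cmd = docker_run_args("nginx:1.27", "web", ports={8080: 80}, env={"TZ": "UTC"})
print(shlex.join(cmd))  # docker run -d --name web -p 8080:80 -e TZ=UTC nginx:1.27
```

On a machine with Docker installed, passing this list to `subprocess.run(cmd, check=True)` would start the container.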

b) Kubernetes: Managing container clusters, auto-scaling, deployments

Google developed Kubernetes based on 15 years of experience running production workloads. This powerful orchestration platform helps you manage containerized applications at scale. Let's look at some of the components you'll need to know and master:

- Managing Clusters: Kubernetes runs containers in Pods scheduled across the nodes of a cluster and automates resource allocation.

- Auto-Scaling: Ensures optimal performance by automatically adjusting the number of running Pod replicas based on load, such as CPU utilization.

- Deployments: A Deployment manages ReplicaSets to enable seamless rolling updates and rollbacks, while StatefulSets handle stateful applications that need stable identities and storage.
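The Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Scaling is skipped when that ratio is within a tolerance of 1.0, which defaults to 0.1. The Python sketch below reproduces just that arithmetic. The min/max defaults are assumptions. The real controller also accounts for Pod readiness, missing metrics, and stabilization windows, all of which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Approximate the HPA scaling rule, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90, 60))  # 6  (90% CPU vs 60% target: scale out)
print(desired_replicas(4, 63, 60))  # 4  (within tolerance: no change)
print(desired_replicas(4, 20, 60))  # 2  (underused: scale in)
```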

Organizations need container orchestration more than ever as they adopt microservices architectures. Kubernetes makes complex deployments simpler with a unified API that manages distributed systems reliably.

Docker and Kubernetes together create a strong foundation for modern application deployment. DevOps engineers can manage application lifecycles, maintain consistent environments, and keep high availability in infrastructure setups of all sizes.

Step 5) Infrastructure as Code (IaC) and Configuration Management

Modern software development needs automated approaches to manage infrastructure and configurations. IaC has emerged as a DevOps practice that companies use to provision and deploy IT infrastructure through machine-readable definition files.

IaC lets you treat infrastructure pieces as code. This approach makes automation, consistency, and repeatable processes possible when deploying and managing resources. DevOps teams can maintain high availability and manage risk for cloud environments at scale with IaC.

a) IaC Tools

Now that we know what IaC is and why it is important for you to learn, let’s discuss several powerful tools you can use to implement infrastructure automation:

- Terraform – A widely used declarative tool for provisioning cloud and on-prem infrastructure.

- AWS CloudFormation – A native AWS tool for managing infrastructure through YAML or JSON templates.

- Pulumi – Supports multiple programming languages (Python, Go, JavaScript) for infrastructure automation.

- Google Cloud Deployment Manager – A tool for managing GCP resources using configuration files.

By learning these tools, DevOps engineers can deploy infrastructure in a repeatable, version-controlled manner.
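Declarative IaC tools work by comparing the infrastructure you declared with what actually exists, then proposing changes. Terraform shows these as a plan before applying them. The Python sketch below is a simplified model of that diff step. It is not how any specific tool is implemented, and the resource names and attributes are hypothetical.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired and actual resources (keyed by name) and list the changes needed."""
    create = sorted(desired.keys() - current.keys())   # declared but missing
    delete = sorted(current.keys() - desired.keys())   # exists but no longer declared
    update = sorted(name for name in desired.keys() & current.keys()
                    if desired[name] != current[name])  # exists but has drifted
    return {"create": create, "update": update, "delete": delete}


# Hypothetical resources and attributes, for illustration only.
desired = {"web-server": {"size": "small"}, "assets-bucket": {"versioning": True}}
current = {"web-server": {"size": "micro"}, "old-queue": {}}
print(plan(desired, current))
# {'create': ['assets-bucket'], 'update': ['web-server'], 'delete': ['old-queue']}
```

Once the changes are applied and `current` matches `desired`, running the plan again produces three empty lists. That is what makes declarative deployments repeatable.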

b) Configuration Management

Configuration management offers an automated way to keep computer systems and software in a known, consistent state. This practice ensures system reliability through systematic control of resources.

- Ansible – Agentless automation tool using YAML-based playbooks for managing configurations.

- Chef – Uses Ruby-based scripts (cookbooks) to automate infrastructure and application configuration.

- Puppet – A declarative tool for automating system configurations at scale.

- SaltStack – A powerful tool for remote execution and infrastructure automation.

The best results come from practices that make cloud infrastructure provisioning secure, repeatable, and reliable. Running the same IaC code should produce the same result every time (a property known as idempotency), which keeps environments consistent throughout the development lifecycle.
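Idempotency is easiest to see in a tiny example. The Python sketch below makes sure a line exists in a config file. The first run changes the file, and every later run detects that the desired state is already in place and does nothing. This is similar in spirit to Ansible's `lineinfile` module, but the function itself is a hypothetical illustration rather than any tool's API.

```python
from pathlib import Path


def ensure_line(path: str, line: str) -> bool:
    """Ensure `line` is present in the file at `path`. Return True only if the file changed."""
    file = Path(path)
    text = file.read_text() if file.exists() else ""
    if line in text.splitlines():
        return False  # already in the desired state: do nothing
    if text and not text.endswith("\n"):
        text += "\n"  # don't glue the new line onto the last existing one
    file.write_text(text + line + "\n")
    return True
```

For example, `ensure_line("/etc/ssh/sshd_config", "PermitRootLogin no")` returns `True` the first time and `False` on every run after that. This "changed / not changed" signal is what configuration-management tools report.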

Step 6) Cloud Computing and Services

Cloud platforms are the foundation of modern software delivery. They provide scalable infrastructure and services that power DevOps practices, and the global cloud computing market is projected to keep growing through 2030.

Cloud computing delivers computing resources over the internet: servers, storage, databases, networking, software, and intelligence. Flexible resources and cost savings help you innovate faster.

a) Cloud Providers: AWS, Azure, GCP

AWS dominates with 32% market share and more than 250 services, Azure claims 23% of the market, and GCP holds 9% market share.

These three dominant cloud platforms—Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP)—offer extensive services for infrastructure automation, CI/CD, and security. Learning these platforms helps DevOps engineers manage cloud-based deployments, automate workflows, and optimize cloud costs.

b) Important Cloud Services: Compute, Storage, Networking, Security

Here is a brief look at the important cloud services you must master:

- Compute: Virtual machines (EC2, Azure VM, GCE), containers (ECS, AKS, GKE), serverless (Lambda, Azure Functions, Cloud Functions).

- Storage: Object storage (S3, Blob Storage, Cloud Storage), block storage (EBS, Persistent Disks), file storage (EFS, FSx).

- Networking: VPC, Load Balancers, DNS, CDN, VPNs, and hybrid cloud connectivity.

- Security: IAM, encryption, firewalls, zero-trust architecture, and compliance frameworks.
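IAM is a good place to start on the security side. AWS documents its policy evaluation logic so that an explicit Deny always wins, an Allow grants access, and anything not explicitly allowed is denied by default. The Python sketch below models only that core rule, using shell-style wildcards via `fnmatch`. Real IAM evaluation also considers conditions, resource-based policies, permission boundaries, and more. The policy and bucket names here are hypothetical.

```python
from fnmatch import fnmatchcase


def is_action_allowed(statements: list[dict], action: str, resource: str) -> bool:
    """Simplified IAM-style check: explicit Deny wins, then any Allow, else implicit deny."""
    def matches(stmt: dict) -> bool:
        return (any(fnmatchcase(action, pattern) for pattern in stmt["Action"])
                and any(fnmatchcase(resource, pattern) for pattern in stmt["Resource"]))

    matching = [s for s in statements if matches(s)]
    if any(s["Effect"] == "Deny" for s in matching):
        return False  # an explicit deny overrides every allow
    return any(s["Effect"] == "Allow" for s in matching)


# Hypothetical policy for an application's asset bucket.
policy = [
    {"Effect": "Allow", "Action": ["s3:GetObject", "s3:PutObject"],
     "Resource": ["arn:aws:s3:::app-assets/*"]},
    {"Effect": "Deny", "Action": ["s3:*"],
     "Resource": ["arn:aws:s3:::app-assets/secrets/*"]},
]
```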

c) Cloud Design Patterns: Scalability, fault tolerance, high availability

Cloud design patterns solve common problems in distributed systems. These patterns help build reliable, secure, and fast applications.

- Scalability: Auto-scaling, load balancing, microservices, and Kubernetes.

- Fault Tolerance: Multi-region deployments, redundancy, disaster recovery strategies.

- High Availability: Active-active failover, database replication, and distributed caching.

Pick patterns based on what your workload needs, not what’s trending. Learn why specific patterns work better in certain cases. Good pattern choices make your applications more resilient, scalable, and easier to maintain across cloud platforms.
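One fault-tolerance pattern worth knowing in code is retrying transient failures with capped exponential backoff and random jitter. The jitter keeps many clients from retrying in lockstep. The Python sketch below is one way to write it. The defaults (5 attempts, 0.5 s base delay, 10 s cap, retrying only on connection and timeout errors) are assumptions to tune for your workload, and the `sleep` parameter exists so the function is easy to test.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 10.0,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying transient failures with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            cap = min(max_delay, base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ... up to max_delay
            sleep(random.uniform(0, cap))  # full jitter spreads out retries from many clients
    raise AssertionError("unreachable")
```

Only errors that are likely to be temporary should be retried. Retrying a bad request or a permissions error just adds load without fixing anything.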

Step 7) Monitoring and Logging

Real-time monitoring has become crucial for maintaining resilient software systems. With proper monitoring and logging practices, DevOps teams gain insight into system behavior, spot irregularities, and keep performance optimal across distributed environments.

Because monitoring and logging give a clear view of system health, teams can spot and fix problems quickly. These practices also help with capacity planning, resource optimization, and keeping applications running smoothly. Through thorough log analysis, DevOps professionals track the effects of deployments and find bottlenecks.

a) Monitoring Tools: Prometheus, Datadog, New Relic, AWS CloudWatch

Monitoring ensures the observability of infrastructure, applications, and services by tracking key performance metrics and generating alerts. Let’s discuss the top tools you must master:

- Prometheus – Open-source monitoring with a time-series database, ideal for cloud-native environments with robust alerting (Alertmanager).

- Datadog – A comprehensive observability platform offering real-time dashboards, log analysis, and AIOps for cloud and hybrid environments.

- New Relic – A full-stack monitoring solution providing application performance management (APM), distributed tracing, and error analytics.

- AWS CloudWatch – Amazon’s monitoring service for AWS resources, enabling log insights, automated alarms, and anomaly detection.
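Prometheus collects metrics by scraping an HTTP endpoint that serves them in a plain-text exposition format. Each metric is introduced by `# HELP` and `# TYPE` lines, followed by one line per labeled series. To show what that looks like, the Python sketch below renders a counter by hand. In a real application, you would normally use the official `prometheus_client` library instead. The metric names and values here are hypothetical.

```python
def escape_label_value(value: str) -> str:
    """Escape backslashes, double quotes, and newlines, as the text format requires."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def format_value(value: float) -> str:
    """Print whole numbers without a trailing .0; keep other floats at full precision."""
    return str(int(value)) if float(value).is_integer() else repr(float(value))


def render_counter(name: str, help_text: str,
                   samples: list[tuple[dict[str, str], float]]) -> str:
    """Render one counter metric family in Prometheus's text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples:
        pairs = ",".join(f'{k}="{escape_label_value(v)}"' for k, v in sorted(labels.items()))
        series = f"{name}{{{pairs}}}" if pairs else name
        lines.append(f"{series} {format_value(value)}")
    return "\n".join(lines) + "\n"


text = render_counter(
    "http_requests_total", "Total HTTP requests handled.",
    [({"method": "GET", "status": "200"}, 1027), ({"method": "POST", "status": "500"}, 3)],
)
print(text, end="")
# # HELP http_requests_total Total HTTP requests handled.
# # TYPE http_requests_total counter
# http_requests_total{method="GET",status="200"} 1027
# http_requests_total{method="POST",status="500"} 3
```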

b) Log Management: ELK Stack

The ELK Stack (Elasticsearch, Logstash, Kibana) is one of the most popular open-source log management platforms, with millions of downloads. Three primary tools make up this powerful combination:

- Elasticsearch – A search and analytics engine for indexing logs.

- Logstash – A data processing pipeline for collecting and transforming log data.

- Kibana – A visualization tool for real-time log analysis and dashboarding.

The ELK Stack excels at gathering and processing data from multiple sources. Because this unified system scales as data volumes grow, it helps engineers keep applications running smoothly.
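Log pipelines like the ELK Stack are much easier to work with when applications emit structured logs. A common approach is one JSON object per line, which Logstash or a log shipper can parse without custom patterns. The Python sketch below adds a JSON formatter to the standard `logging` module. The `@timestamp` field follows a common Elasticsearch convention. The other field names and the service name are illustrative assumptions.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Format each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "@timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),  # applies %-style args to the message
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


handler = logging.StreamHandler()  # writes to stderr by default
handler.setFormatter(JsonFormatter())
log = logging.getLogger("checkout-service")  # hypothetical service name
log.addHandler(handler)
log.setLevel(logging.INFO)
log.info("order placed: %s", "A-1001")
```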

DevOps engineers track system performance, spot potential issues, and maintain resilient applications with these monitoring and logging solutions. System efficiency and reliability across complex infrastructures depend on these tools’ visibility.

Now, if you’re serious about becoming a top DevOps engineer, then GUVI’s DevOps Course is the perfect launchpad for your career. This industry-focused program covers CI/CD, Kubernetes, Docker, AWS, and more, equipping you with hands-on experience through real-world projects. With expert mentorship and placement support, you’ll gain the skills and confidence to land high-paying DevOps roles.

Takeaways…

Woah, that was a lot of research condensed into 7 easy-to-follow steps, so I hope you follow them and become a top DevOps engineer! As we've seen, DevOps engineering is a rewarding career with great growth potential, and professionals in India can earn between ₹5 Lakhs and ₹40 Lakhs per year.

Success in DevOps isn’t about learning one skill. You’ll need to build expertise in seven key areas that range from technical fundamentals to advanced monitoring tools. Each skill builds on what you learned before, creating a complete skill set that lines up with what companies need.

Work through the steps in order, from version control and CI/CD to containerization and cloud services, and build practical experience with hands-on projects along the way. This systematic approach will set you up for success in the ever-changing world of DevOps engineering.

FAQs

Q1. How long does it typically take to become a DevOps engineer?

With dedicated study, it usually takes about 5-8 months to learn the essential skills needed to become a DevOps engineer. This timeline assumes consistent daily practice and hands-on project work.

Q2. What are the key skills required for a DevOps engineer?

Essential skills for a DevOps engineer include proficiency in operating systems (especially Linux), networking basics, programming (Python or similar), version control systems, CI/CD pipelines, containerization, cloud computing, and monitoring tools.

Q3. Which cloud platforms are most important for DevOps professionals?

The three major cloud platforms DevOps engineers should be familiar with are Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Each offers unique features and services that are crucial for modern DevOps practices.

Q4. What are some popular tools used in DevOps for monitoring and logging?

Common monitoring and logging tools in DevOps include Prometheus, Datadog, New Relic, AWS CloudWatch, and the ELK Stack (Elasticsearch, Logstash, and Kibana). These tools help in tracking system performance and identifying issues.

Q5. What career opportunities are available in DevOps?

DevOps offers diverse career paths, including roles such as DevOps Cloud Engineer, Site Reliability Engineer (SRE), Security Engineer (DevSecOps), and Build Engineer. As you gain experience, you can progress to leadership positions like DevOps Team Lead, Manager, or Architect.
